

Towards Linear Algebra over Normalized Data

Lingjiao Chen¹, Arun Kumar², Jeffrey Naughton³, Jignesh M. Patel¹

¹University of Wisconsin-Madison, ²University of California, San Diego, ³Google

{lchen, jignesh}@cs.wisc.edu, [email protected], [email protected]

ABSTRACT

Providing machine learning (ML) over relational data is a mainstream requirement for data analytics systems. While almost all the ML tools require the input data to be presented as a single table, many datasets are multi-table, which forces data scientists to join those tables first, leading to data redundancy and runtime waste. Recent works on “factorized” ML mitigate this issue for a few specific ML algorithms by pushing ML through joins. But their approaches require a manual rewrite of ML implementations. Such piecemeal methods create a massive development overhead when extending such ideas to other ML algorithms. In this paper, we show that it is possible to mitigate this overhead by leveraging a popular formal algebra to represent the computations of many ML algorithms: linear algebra. We introduce a new logical data type to represent normalized data and devise a framework of algebraic rewrite rules to convert a large set of linear algebra operations over denormalized data into operations over normalized data. We show how this enables us to automatically “factorize” several popular ML algorithms, thus unifying and generalizing several prior works. We prototype our framework in the popular ML environment R and an industrial R-over-RDBMS tool. Experiments with both synthetic and real normalized data show that our framework also yields significant speed-ups, up to 36x on real data.

1. INTRODUCTION

The data management industry and academia are working intensively on tools to integrate machine learning (ML) algorithms and frameworks such as R with data systems [1, 9, 10, 18, 20, 23, 33]. While almost all the ML tools require the input data to be presented as a single table, many datasets are multi-table, typically connected by primary key-foreign key (PK-FK) or more general “M:N” dependencies [28], which forces data scientists to join those tables first. However, such joins often introduce redundancy in the data [28], leading to extra storage requirements and runtime inefficiencies due to the redundant computations in the ML algorithms.

A few recent works [25, 29, 30] aim to avoid such redundancy by decomposing the computations of some specific ML algorithms and pushing them through joins. However, a key limitation of such approaches is that they require manually rewriting each ML algorithm’s implementation to obtain a factorized version. This creates a daunting development overhead in extending the benefits of factorized ML to other ML algorithms. Moreover, the prior approaches are too closely tied to a specific data platform, e.g., an in-memory engine [30] or an RDBMS [25]. This state of the art is illustrated in Figure 1(a) and it raises an important question: Is it possible to generalize the idea of factorized ML and “automate” its application to a much wider variety of ML algorithms and platforms in a unified manner?

In this paper, we present the first systematic approach that takes a step towards generalizing and automating factorized ML. Our idea is to use a common formal representation language for ML algorithms: linear algebra (LA). Many popular ML algorithms such as linear regression, logistic regression, and K-Means clustering can be expressed succinctly using LA operators such as matrix multiplication and inversion [9]. Moreover, data scientists often write new ML algorithms in popular LA-based frameworks such as R [2]. The data management community has embraced LA and R as a key environment for ML workloads [1, 3, 4, 9]. For example, Oracle R Enterprise (ORE) lets users write LA scripts over an R “DataFrame” that is stored as an in-RDBMS table [1], while IBM’s SystemML provides an R-like language to scale to data on HDFS [9]. While such systems provide scalability and sophisticated optimizations (e.g., SystemML’s hybrid parallelism [8] and SPOOF [16]), they do not optimize LA scripts over normalized data.

Our high-level approach is illustrated in Figure 1(c). Given an ML algorithm in LA (logistic regression in the figure) and the normalized schema, our middleware framework named Morpheus automatically creates the factorized version of the ML algorithm, i.e., one that operates on the base tables. As illustrated in Figure 1(b), this approach lets us factorize many ML algorithms with one framework, thus mitigating the development overhead. Furthermore, by decoupling how the ML algorithm is factorized from which platform it is run on, Morpheus lets us leverage existing scalable LA systems.

Realizing a framework like Morpheus is technically challenging due to three crucial desiderata. First is generality, i.e., it should be able to handle a wide variety of ML algorithms expressible in LA, as well as both PK-FK and M:N joins. Second is closure, i.e., it should ideally rewrite an LA script only into a different LA script so that the internals of the LA system used need not be modified, which could make practical adoption easier. Third is efficiency, i.e., it should offer mechanisms to ensure that the factorized version is only used when it is faster than the single-table version.

As a step towards providing high generality, in this pa-per, we focus on a set of LA operations over the data ma-trix that are common for several popular ML algorithms.These LA operations are listed in Table 1. We introducea new “logical” data type, the normalized matrix, to repre-sent multi-table data inputs in LA. In a sense, this brings the

arX

iv:1

612.

0744

8v4

[cs

.DB

] 1

Jan

201

7

Original Logistic Regression

Input: Regular matrix T, Y, and w

for i in 1 : max_iter do

w = w + a * t(T) %*% (Y/(1 + exp(T %*% w)))

end

Factorized Logistic Regression

Input: Normalized matrix (S; FK; R), Y, and w

for i in 1 : max_iter do

tmp = t(Y/(1 + exp(S %*% w[1:dS, ] +

FK%*% (R %*% w[dS+1:dS+dR, ]))))

w = w + t(cbind(tmp %*% S, (tmp %*%

FK) %*% R))

end

Optimization Rule:

LMM, RMM,

Crossprod,

RowSums,

Transpose, etc.

Heuristic Decision

Rule

Schema/Metadata Information

GLM/GD OLS/CP

Stack 1

Other

modelsPaper [25] Paper [30]

In-memory

Factorized ML: Prior Work Our Proposed Approach

GLM/GD OLS/CP K-Means GNMF

MORPHEUS: Factorized Linear Algebra

Systems that support LA workloads

In-DBMS

Stack 2 …

…

a b c MORPHEUS

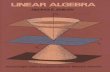

Figure 1: (a) In contrast to prior approaches to factorized ML, which were ML algorithm- and platform-specific, (b) Morpheusprovides a unified and generic framework to automatically factorize many ML algorithms over any platform that supports LAworkloads. (c) Illustration of how Morpheus automatically rewrites the standard single-table version of logistic regressioninto the factorized version using the normalized schema information. The LA operators whose rewrite rules are invoked arehighlighted. The heuristic decision rule uses input data statistics to predict if the factorized version will be faster.

classical database notion of logical data independence [28] toLA systems. To ensure tractability, we focus only on a fewjoin schemas that are ubiquitous in practice: “star schema”PK-FK joins and “chain schema” M:N joins. More complexjoin schemas are left to future work.

To provide closure, we devise an extensive framework of algebraic rewrite rules that transform an LA operation over a denormalized data matrix into a set of LA operations over the normalized matrix. In a sense, this is a first-principles extension of the classical idea of pushing relational operations through joins [12, 32] to LA. Some LA operations such as scalar-matrix multiplication and aggregations are trivial to rewrite and are reminiscent of relational optimization. But more complex LA operations such as matrix-matrix multiplication, matrix cross-product, and matrix inversion enable us to devise novel rewrites that exploit their LA-specific semantics with no known counterparts in relational optimization. We also handle matrix transpose. For the sake of exposition, we describe the rewrite rules for a PK-FK join and then generalize to star schema multi-table PK-FK joins and M:N joins. We apply our framework to four popular and representative ML algorithms to show how they are automatically factorized: logistic regression for classification, linear regression, K-Means clustering, and Gaussian Non-negative Matrix Factorization (GNMF) for feature extraction. Our automatic rewrites largely subsume the ideas in [25, 30] for logistic and linear regression and produce the first known factorized versions of K-Means and GNMF.

Finally, we discuss the efficiency trade-offs involved in the rewrites of complex LA operations and present ways to optimize their performance. We also present simple but effective heuristic decision rules to predict when a factorized LA operation might cause slow-downs; this happens in some extreme cases depending on the dimensions of the base tables [25].

We prototype Morpheus on standalone R, which is popular for ML-based analytics [4], and the R-over-RDBMS tool Oracle R Enterprise (ORE) [1] (but note that our framework is generic and applicable to other LA systems as well). We present an extensive empirical evaluation using both real and synthetic datasets. Our experiments validate the effectiveness of our rewrite rules and show that Morpheus yields speed-ups of up to 36.4x for popular ML algorithms over real data. Compared to a prior ML algorithm-specific factorized ML tool [25], Morpheus achieves comparable or higher speed-ups, while offering higher generality. Finally, we also evaluate the scalability of Morpheus on ORE.

In summary, this paper makes the following contributions:

• To the best of our knowledge, this is the first paper on generalizing and automating the idea of factorized ML, focusing on ML algorithms expressed in LA.

• We present a mechanism to represent normalized data in LA and an extensive framework of rewrite rules for LA operations. Our framework provides high generality and closure with respect to LA, enabling us to automatically factorize several popular ML algorithms.

• We extend our framework to star schema multi-table PK-FK joins as well as M:N joins.

• We provide prototypes of our framework in both R and ORE and perform an extensive empirical analysis of their performance using both real and synthetic data.

Outline. Section 2 presents the problem setup and background. Section 3 introduces the normalized matrix, explains the architecture of Morpheus, and dives deep into the rewrite rules. Section 4 applies our framework to four ML algorithms. Section 5 presents the experiments and Section 6 presents the related work. We conclude in Section 7.

2. PRELIMINARIES AND BACKGROUND

Problem Setup and Notation. For simplicity of exposition, we start with a single PK-FK join. Multi-table joins and M:N joins will be discussed later in Section 3. Consider two tables: $\mathbf{S}(Y, X_S, FK)$ and $\mathbf{R}(RID, X_R)$, where $X_S$ and $X_R$ are the feature vectors, and $Y$ is called the target (for supervised classification and regression). $FK$ is the foreign key and $RID$ is the primary key of $\mathbf{R}$. Following [25], we call $\mathbf{S}$ the entity table and $\mathbf{R}$ the attribute table. The output of the join-project query is denoted by $\mathbf{T}(Y, X) \leftarrow \pi(\mathbf{S} \bowtie_{FK=RID} \mathbf{R})$, wherein $X \equiv [X_S, X_R]$ is the concatenation of the feature vectors. We adopt a standard convention on data representation: let $\mathbf{R}.X_R$ (resp. $\mathbf{S}.X_S$, $\mathbf{S}.Y$, $\mathbf{T}.X$) correspond to the feature matrix $R$ (resp. $S$, $Y$, $T$). Table 2 summarizes our notation.

Example (based on [25]). Consider an insurance analyst classifying customers to predict who might churn, i.e., cancel their policy. She builds a logistic regression classifier using a table with customer details: Customers (CustomerID, Churn, Age, Income, EmployerID). EmployerID is the ID of the customer’s employer, a foreign key referring to a table about organizations that potentially employ the customers: Employers (EmployerID, Revenue, Country). Thus, S is Customers, R is Employers, FK is S.EmployerID, RID is R.EmployerID, $X_S$ is {Age, Income}, $X_R$ is {Country, Revenue}, and Y is Churn. She joins the tables to bring in $X_R$ because she has a hunch that customers employed by rich corporations in rich countries are unlikely to churn.

Linear Algebra (LA) Systems and R. LA is an elegant formal language in which one can express many ML algorithms [9, 15]. Data are formally represented as matrices, with scalars and vectors being special cases. LA operators map matrices to matrices. Basic operators include unary operators such as element-wise exponentiation, as well as binary operators such as matrix-matrix multiplication. Derived operators include Gram matrix and aggregation operators. There are several systems that implement LA, e.g., R, Matlab, and SAS. The open-source system R has gained immense popularity and has free ML libraries for various domains [2]. R is primarily an in-memory tool but recent work in the database community has focused on building systems to scale LA scripts written in R (or R-like languages) to data resident in an RDBMS, Hive/Hadoop, and Spark. Examples of such “R-based analytics systems” include RIOT-DB [33], Oracle R Enterprise (ORE), IBM’s SystemML [9], and SparkR [4]. In this paper, we implement our framework on standard R and also ORE. Note that our ideas are generic enough to be applicable to the other LA systems such as Matlab or the other R-based analytics systems as well. We use R-style notation in this paper for matrices and LA operations.

| Op Type | Name | Expression | Output Type | Parameter X or x | Factorizable |
|---|---|---|---|---|---|
| Element-wise Scalar Op | Arithmetic Op (⊘ = +, −, ∗, /, ^, etc.) | T ⊘ x or x ⊘ T | Normalized Matrix | A scalar | Yes |
| Element-wise Scalar Op | Transpose | t(T) | Normalized Matrix | N/A | Yes |
| Element-wise Scalar Op | Scalar Function f (e.g., log, exp, sin) | f(T) | Normalized Matrix | Parameters for f | Yes |
| Aggregation | Row Summation | rowSums(T) | Column Vector | N/A | Yes |
| Aggregation | Column Summation | colSums(T) | Row Vector | N/A | Yes |
| Aggregation | Summation | sum(T) | Scalar | N/A | Yes |
| Multiplication | Left Multiplication | T %*% X | Regular Matrix | $(d_S + d_R) \times d_x$ matrix | Yes |
| Multiplication | Right Multiplication | X %*% T | Regular Matrix | $n_x \times n_S$ matrix | Yes |
| Multiplication | Cross-product | crossprod(T) | Regular Matrix | N/A | Yes |
| Inversion | Solve | solve(T) | Regular Matrix | N/A | Yes |
| Inversion | Pseudoinverse | ginv(T) | Regular Matrix | N/A | Yes |
| Element-wise Matrix Op | Arithmetic Op (⊘ = +, −, ∗, /) | X ⊘ T or T ⊘ X | Regular Matrix | $n_S \times (d_S + d_R)$ matrix | No |

Table 1: Operators and functions of linear algebra (using R notation) handled in this paper over a normalized matrix T.

| Symbol | Meaning |
|---|---|
| $\mathbf{R}$ / $R$ | Attribute table / feature matrix |
| $\mathbf{S}$ / $S$ | Entity table / feature matrix |
| $\mathbf{T}$ / $T$ | Join output table / feature matrix |
| $FK$ | Indicator matrix for PK-FK join |
| $I_S$ / $I_R$ | Indicator matrices for M:N join |
| $Y$ | Target matrix (regression and classification) |
| $n_R$ / $n_S$ | Number of rows in R / S (and T) |
| $d_R$ / $d_S$ | Number of columns in R / S |
| $d$ | Total number of features, $d_S + d_R$ |
| $n_U$ | M:N join attribute domain size |

Table 2: Notation used in this paper.

Factorized ML. Factorized ML techniques were introduced in a recent line of work for a few specific ML algorithms [25, 29, 30]. We briefly explain the idea of a key representative factorized ML technique known as “factorized learning” [25]. Given a model vector $w$, a GLM with gradient descent computes the inner products $w'x$, in each iteration, for each feature vector $x$ from T. Since T has redundancy, this multiplication has redundancy, which is what factorized learning avoids. The crux of its idea is to decompose the inner products over $x$ into inner products over the feature vectors $x_S$ and $x_R$ from the two base tables. Thus, the partial inner products from R can be saved and then reused for each tuple in S that refers to the same tuple in R. This is correct because $w'x = w_S' x_S + w_R' x_R$, wherein $w_S$ (resp. $w_R$) is the projection of $w$ to the features from S (resp. R). Factorized learning often has significantly faster runtimes.
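The decomposition of the inner products can be checked numerically. The following R sketch is only an illustration under assumed toy data: the matrix values, the model vector, and the names `fk`, `T_mat`, and `partial_R` are hypothetical, and plain vector indexing stands in for the associative array used in [25].

```r
# Toy base tables (hypothetical values) for a single PK-FK join.
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)   # entity features: nS = 5, dS = 2
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)        # attribute features: nR = 2, dR = 2
fk <- c(1, 2, 2, 1, 2)                     # S.FK as row numbers of R
w  <- c(0.5, -1, 2, 0.25)                  # model vector of length dS + dR
dS <- ncol(S)

# Materialized: join first, then one full inner product per joined row.
T_mat  <- cbind(S, R[fk, , drop = FALSE])
ip_mat <- as.vector(T_mat %*% w)

# Factorized: w'x = wS'xS + wR'xR. The partial inner products over R are
# computed once per row of R and reused for every referring row of S.
wS <- w[1:dS]
wR <- w[(dS + 1):length(w)]
partial_R <- as.vector(R %*% wR)           # nR values
ip_fac <- as.vector(S %*% wS) + partial_R[fk]

print(all.equal(ip_mat, ip_fac))
```

In this toy case the factorized path computes 2 partial inner products over R instead of 5, which is where the savings come from when many tuples of S refer to the same tuple of R.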

Problem Statement and Scope. We ask: Is it possible to transparently “factorize” a large set of LA operations over T that are common in ML into operations over S and R without losing efficiency? Our goal is to devise an integrated framework of such algebraic rewrite rules for the key application of automatically “factorizing” ML algorithms written in LA, which means that developers need not manually rewrite ML implementations from scratch. The challenge in devising such a framework is in preserving generality (i.e., applicability to many ML algorithms and both PK-FK and M:N joins), closure (i.e., rewrites only produce a different LA script), and efficiency (i.e., faster or similar runtimes). Most LA systems support a wide variety of operations on matrices. For the sake of tractability, we restrict our focus to a large subset of LA operations that still support a wide variety of ML algorithms; Table 1 lists and explains these operations.

3. FACTORIZED LINEAR ALGEBRA

We introduce the normalized matrix, give an overview of how Morpheus is implemented, and dive deep into our framework of rewrite rules for a single PK-FK join. We then extend our framework to multi-table joins and M:N joins.

3.1 The Normalized Matrix

We introduce a new multi-matrix logical data type called the normalized matrix to represent normalized data. It is called a logical data type because it only layers a logical abstraction on top of existing data types. For simplicity of exposition, this subsection focuses on a PK-FK join; Sections 3.5 and 3.6 present the extensions of the normalized matrix to star schema multi-table PK-FK joins and M:N joins, respectively. Note that each $\mathbf{R}.RID$ in the attribute table $\mathbf{R}$ can be mapped to its sequential row number in the matrix $R$. Thus, $\mathbf{S}.FK$ can be viewed as an attribute containing entries that are the row numbers of $R$. An indicator matrix $FK$ of size $n_S \times n_R$ can thus be constructed as follows:

$$FK[i, j] = \begin{cases} 1, & \text{if the } i\text{th row of } \mathbf{S}.FK = j \\ 0, & \text{otherwise} \end{cases}$$

The normalized matrix corresponding to T is defined as the matrix triple (S, FK, R). One can verify that T = cbind(S, FK %*% R).¹ It is worth noting that FK is a highly sparse matrix. In fact, the PK-FK relationship implies that the number of non-zero elements in each row of FK is 1. Thus, nnz(FK), the number of non-zero elements in FK, is exactly $n_S$. Without loss of generality, assume $\forall j,\ nnz(FK[, j]) > 0$, i.e., each tuple in R is referred to by at least one tuple in S. Otherwise, we can remove from R all the tuples that are never referred to in S. Note that any of R, S, and T can be dense or sparse.
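The properties of the indicator matrix stated above can be checked on a small case. This R sketch assumes toy values and, to stay self-contained, builds FK as a dense base-R matrix rather than a sparse one; the names `fk`, `T_from_triple`, and `T_from_join` are illustrative.

```r
# Toy base tables (hypothetical values); fk holds S.FK as row numbers of R.
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
fk <- c(1, 2, 2, 1, 2)
nS <- nrow(S)
nR <- nrow(R)

# FK[i, j] = 1 if the i-th row of S.FK equals j, and 0 otherwise.
FK <- matrix(0, nrow = nS, ncol = nR)
FK[cbind(seq_len(nS), fk)] <- 1

# The triple (S, FK, R) represents T = cbind(S, FK %*% R).
T_from_triple <- cbind(S, FK %*% R)
T_from_join   <- cbind(S, R[fk, , drop = FALSE])  # direct join by row lookup

print(all.equal(T_from_triple, T_from_join))
print(rowSums(FK))    # exactly one non-zero entry per row
print(sum(FK != 0))   # nnz(FK), which equals nS
```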

3.2 Overview of Morpheus

Morpheus is an implementation of the normalized matrix and our framework of rewrite rules as a class in standard R and ORE. Our class has three matrices: S, FK, and R. All LA operators in Table 1 are overloaded to support our class. The details of how the operators are executed over normalized matrices are the subject of Section 3.3. Interestingly, some operators output a normalized matrix, which enables Morpheus to propagate the avoidance of data redundancy in a given LA script with multiple operators. Another interesting point is how to handle transposes of a normalized matrix. A straightforward way is to create a new class for transposed normalized matrices and overload the operators again. Instead, we adopt a different approach that makes our implementation more succinct and exploits more rewrite opportunities. We add a special binary “flag” to indicate if a normalized matrix is transposed. If the flag is false, Section 3.3 rules are used; otherwise, Section 3.4 rules are used. Compared to the straightforward approach, our approach avoids computing repeated transposes and allows developers to focus on only one new class.²
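The transpose flag can be pictured with a small S3 class. This is a hypothetical sketch, not the Morpheus implementation: the constructor `normalized_matrix`, the field `trans`, and the helper `materialize` are names assumed here for illustration only.

```r
# Hypothetical S3 sketch of a normalized matrix with a transpose flag.
normalized_matrix <- function(S, FK, R, trans = FALSE) {
  structure(list(S = S, FK = FK, R = R, trans = trans),
            class = "normalized_matrix")
}

# Transposing only flips the flag; the base matrices are left untouched.
t.normalized_matrix <- function(x) {
  x$trans <- !x$trans
  x
}

# Checking helper only: builds the join output that the triple represents.
materialize <- function(x) {
  T_mat <- cbind(x$S, x$FK %*% x$R)
  if (x$trans) t(T_mat) else T_mat
}

S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
FK <- matrix(0, nrow = 5, ncol = 2)
FK[cbind(1:5, c(1, 2, 2, 1, 2))] <- 1

nm  <- normalized_matrix(S, FK, R)
nmt <- t(nm)                 # dispatches to t.normalized_matrix
print(nmt$trans)
print(dim(materialize(nmt)))
```

Because `t()` applied twice restores the original flag, repeated transposes cost nothing in this sketch, which mirrors the motivation given above.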

Finally, we explain how to construct a normalized matrix from the base tables S and R given as, say, CSV files. We illustrate this process with a code snippet. For the sake of brevity, we assume that RID and FK are already sequential row numbers. Note that “list” is used to allow different data types (e.g., dense or sparse) and multi-table data.

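    library(Matrix)  # provides sparseMatrix
    S = read.csv("S.csv")  # foreign key column is named FK
    R = read.csv("R.csv")  # primary key column is named RID
    # One non-zero per row of S, placed in the column given by its foreign key
    FK = sparseMatrix(i = 1:nrow(S), j = S[, "FK"], x = 1)
    T = NormalizedMatrix(EntTable = list(S),
                         AttTables = list(R),
                         FKIndicators = list(FK))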

¹ cbind is R notation to stitch matrices column-wise.

² Our architecture fits easily into any interpreted environment for LA; we leave to future work an integration with a compiler environment such as SystemML [9].

Overall, Morpheus is packaged as easy-to-use libraries for both standard R and Oracle R Enterprise. We plan to open source Morpheus on our project webpage.

3.3 Factorized Linear Algebra Operators

We now dive deep into our framework of algebraic rewrite rules for the groups of operators listed in Table 1.

3.3.1 Element-wise Scalar Operators

These are trivial to rewrite but they are ubiquitous in ML. They include multiplication and addition of a matrix with a scalar, element-wise exponentiation, and element-wise scalar functions, e.g., log and exp used for logistic regression. The output is a normalized matrix with the same structure as the input. The rewrite rules are given below; “⊘” represents an arithmetic operator, x is a scalar, and f is a scalar function.

    T ⊘ x → (S ⊘ x, FK, R ⊘ x) ;  x ⊘ T → (x ⊘ S, FK, x ⊘ R)
    f(T) → (f(S), FK, f(R))

In the above, T ⊘ x → (S ⊘ x, FK, R ⊘ x) means that an operation T ⊘ x can be replaced implicitly with operations on the normalized matrix (S, FK, R) to yield a new normalized matrix (S ⊘ x, FK, R ⊘ x). These rewrites avoid redundant computations. For instance, computing 2 ∗ T requires $n_S(d_S + d_R)$ multiplications but computing (2 ∗ S, FK, 2 ∗ R) requires only $n_S d_S + n_R d_R$. The ratio of these two quantities is the ratio of the size of T to the total size of S and R. The speed-ups depend on this ratio and thus could be significant when this ratio is large.
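As a rough check of these rules, the R sketch below applies a scalar multiplication and the scalar function exp to toy base matrices and compares against the materialized result. The data values and the helper `materialize` are assumptions made for illustration.

```r
# Toy normalized matrix (hypothetical values).
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
fk <- c(1, 2, 2, 1, 2)
nS <- nrow(S); nR <- nrow(R)
dS <- ncol(S); dR <- ncol(R)
FK <- matrix(0, nrow = nS, ncol = nR)
FK[cbind(seq_len(nS), fk)] <- 1

# Checking helper only: materialize the triple (S, FK, R).
materialize <- function(S, FK, R) cbind(S, FK %*% R)
T_mat <- materialize(S, FK, R)

# T * x -> (S * x, FK, R * x): FK is passed through unchanged.
scaled_ok <- all.equal(2 * T_mat, materialize(2 * S, FK, 2 * R))

# f(T) -> (f(S), FK, f(R)), here with f = exp.
exp_ok <- all.equal(exp(T_mat), materialize(exp(S), FK, exp(R)))

# Scalar multiplications needed in each case.
mults_materialized <- nS * (dS + dR)     # 5 * (2 + 2) = 20
mults_factorized   <- nS * dS + nR * dR  # 5 * 2 + 2 * 2 = 14
print(c(mults_materialized, mults_factorized))
```

The rules hold because each row of FK has a single 1, so multiplying by FK only selects rows of R and commutes with any element-wise operation.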

3.3.2 Aggregation Operators

These include rowSums(T), which sums the matrix row-wise, colSums(T), which sums the matrix column-wise, and sum(T), which adds up all of the elements. These operators also arise frequently in ML, especially when computing loss or gradient values, which are aggregates over examples (or features). The rewrite rules are as follows.

    rowSums(T) → rowSums(S) + FK %*% rowSums(R)
    colSums(T) → cbind(colSums(S), colSums(FK) %*% R)
    sum(T) → sum(S) + colSums(FK) %*% rowSums(R)

The rule for rowSums pushes down the operator to before the join and then multiplies the pre-aggregated R with FK, before adding both parts. The rule for colSums, however, first pre-aggregates FK before multiplying it with R and then attaches (cbind) it to the pre-aggregated S. Finally, the rewrite rule for sum is more complicated and involves a sum push-down along with a rowSums and a colSums. These rewrite rules are essentially the LA counterparts of SQL aggregate push-down optimizations in RDBMSs [12, 32]. By extending such operator rewrite ideas to LA operations, our work makes them more widely applicable, especially for LA-based ML workloads that may not use an RDBMS.
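The three aggregation rules can be exercised on toy data as follows. This is a sketch under assumed values; note that in base R `colSums` returns a plain vector, so the sketch wraps it in `t()` to obtain a row before `cbind`, a detail that is specific to this illustration.

```r
# Toy normalized matrix (hypothetical values).
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
fk <- c(1, 2, 2, 1, 2)
FK <- matrix(0, nrow = nrow(S), ncol = nrow(R))
FK[cbind(seq_len(nrow(S)), fk)] <- 1
T_mat <- cbind(S, FK %*% R)   # materialized only for comparison

# rowSums(T) -> rowSums(S) + FK %*% rowSums(R)
row_mat <- rowSums(T_mat)
row_fac <- as.vector(rowSums(S) + FK %*% rowSums(R))

# colSums(T) -> cbind(colSums(S), colSums(FK) %*% R)
col_mat <- colSums(T_mat)
col_fac <- as.vector(cbind(t(colSums(S)), colSums(FK) %*% R))

# sum(T) -> sum(S) + colSums(FK) %*% rowSums(R)
sum_mat <- sum(T_mat)
sum_fac <- as.numeric(sum(S) + colSums(FK) %*% rowSums(R))

print(all.equal(row_mat, row_fac))
print(all.equal(col_mat, col_fac))
print(all.equal(sum_mat, sum_fac))
```

Here `colSums(FK)` counts how many rows of S refer to each row of R, which is what lets the column sums and the total sum be computed without expanding R.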

3.3.3 Left Matrix Multiplication (LMM)

LMM is an important and time-consuming operator arising in many ML algorithms, typically for multiplying the data matrix with a model/weight vector. In fact, it arises in all of GLMs, K-Means, and GNMF. Interestingly, a special case of LMM is the key operation factorized in [25]. Our rewrite rule expresses that idea in LA and generalizes it to a weight matrix, not just a weight vector. The rewrite rule is as follows; X is a regular $d \times d_X$ ($d_X \geq 1$) matrix.

    T %*% X → S %*% X[1:dS, ] + FK %*% (R %*% X[(dS + 1):d, ])

Essentially, we first split up X, then pre-multiply with S and R separately, and finally add them. A subtle but crucial issue is the order of the multiplication in the second component. There are two orders: (1) (FK %*% R) %*% X[(dS + 1):d, ], and (2) FK %*% (R %*% X[(dS + 1):d, ]). The first is equivalent to materializing (a part of) the output of the join, which causes computational redundancy! The second avoids the computational redundancy and thus, we use the second order. Most LA systems, including R, allow us to fix the multiplication order using parentheses. A key difference with [25] is that their approach stores the partial results over R in an in-memory associative array. We avoid using associative arrays, which are not a native part of most LA systems, and instead, use regular matrix multiplications. While this could lead to a small performance penalty, it enables us to satisfy the closure property explained before. Figure 2 illustrates how factorized LMM works.

[Figure 2, contents recovered from the figure; matrix rows are separated by semicolons]

    (A) T = [1.0 2.0 1.1 2.2; 4.0 3.0 3.3 4.4; 5.0 6.0 3.3 4.4; 8.0 7.0 1.1 2.2; 9.0 1.0 3.3 4.4]
        X = [1.0; 2.0; 1.0; 2.0]
        T %*% X = [10.5; 22.1; 29.1; 27.5; 23.1]
    (B) S = [1.0 2.0; 4.0 3.0; 5.0 6.0; 8.0 7.0; 9.0 1.0]
        X[1:2, ] = [1.0; 2.0]
        S %*% X[1:2, ] = Z1 = [5.0; 10.0; 17.0; 22.0; 11.0]
    (C) R = [1.1 2.2; 3.3 4.4]
        X[3:4, ] = [1.0; 2.0]
        R %*% X[3:4, ] = Z2 = [5.5; 12.1]
    (D) FK = [1 0; 0 1; 0 1; 1 0; 0 1]
        FK %*% Z2 = Z3 = [5.5; 12.1; 12.1; 5.5; 12.1]

Figure 2: Illustration of factorized LMM. (A) Materialized LMM T %*% X. (B) The first step in factorized LMM is S %*% X[1:dS, ] = Z1 (say). Note that dS = 2. (C) Next, R %*% X[(dS + 1):d, ] = Z2 (say). Note that d = 4. (D) Then, FK %*% Z2 = Z3 (say). Finally, the factorized LMM is Z1 + Z3, the same as the result in (A).
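The steps of the factorized LMM can be reproduced in R with the matrices from Figure 2. The sketch below is illustrative only; it uses a dense FK for self-containment, and the names `lmm_fac` and `lmm_mat` are assumptions.

```r
# Matrices from Figure 2.
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
FK <- matrix(c(1, 0, 0, 1, 0,
               0, 1, 1, 0, 1), ncol = 2)
X  <- matrix(c(1, 2, 1, 2), ncol = 1)
dS <- ncol(S)
d  <- dS + ncol(R)

# Factorized LMM: split X, multiply with S and R separately, then add.
Z1 <- S %*% X[1:dS, , drop = FALSE]
Z2 <- R %*% X[(dS + 1):d, , drop = FALSE]  # R first, which avoids FK %*% R
Z3 <- FK %*% Z2
lmm_fac <- Z1 + Z3

# Materialized LMM, computed only to compare results.
lmm_mat <- cbind(S, FK %*% R) %*% X

print(as.vector(lmm_fac))
print(all.equal(lmm_fac, lmm_mat))
```

Note the parentheses in `(dS + 1):d`: in R the `:` operator binds more tightly than `+`, so writing `dS + 1:d` would select different rows.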

3.3.4 Right Matrix Multiplication (RMM)

RMM also appears in many ML algorithms, including GLMs, especially when the normalized matrix is transposed (more in Section 3.4). Let X be a regular $m \times n_S$ ($m \geq 1$) matrix. The rewrite rule is as follows.

X %*% T → cbind(X %*% S, (X %*% FK) %*% R)

This rewrite does not need to split up X but pushes down the RMM to the base tables and then attaches the resultant matrices. Once again, the second component has two possible orders, with the one that is not used being logically equivalent to materializing the join output.
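A small R sketch of the RMM rule, under assumed toy values, is given below. The matrix X and the names `rmm_fac` and `rmm_mat` are hypothetical; the point is only that the factorized form matches the materialized product.

```r
# Toy normalized matrix (hypothetical values).
S  <- matrix(c(1, 4, 5, 8, 9,
               2, 3, 6, 7, 1), ncol = 2)
R  <- matrix(c(1.1, 3.3,
               2.2, 4.4), ncol = 2)
fk <- c(1, 2, 2, 1, 2)
FK <- matrix(0, nrow = nrow(S), ncol = nrow(R))
FK[cbind(seq_len(nrow(S)), fk)] <- 1

# X is m x nS, with m = 2 and nS = 5 here.
X <- matrix(1:10, nrow = 2)

# Factorized RMM: X %*% T -> cbind(X %*% S, (X %*% FK) %*% R).
# X %*% FK is only m x nR, so the join output is never built.
XFK     <- X %*% FK
rmm_fac <- cbind(X %*% S, XFK %*% R)

# Materialized RMM, computed only to compare results.
rmm_mat <- X %*% cbind(S, FK %*% R)

print(dim(XFK))
print(all.equal(rmm_fac, rmm_mat))
```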

A related matrix multiplication operation involves multiplying two normalized matrices; we call this operation Double Matrix Multiplication (DMM). In contrast to LMM and RMM, to the best of our knowledge, DMM does not arise in any popular ML algorithm. Nevertheless, we show in the appendix that it is indeed possible to rewrite even a DMM into operations over the base tables’ matrices although the rewrite is more complicated.

3.3.5 Cross-product

The cross-product of a matrix T, denoted crossprod(T) in R, is equivalent to t(T) %*% T.³ Most LA systems provide cross-product as a unary function.⁴ It arises in ML algorithms where feature-feature interactions are needed, e.g., inversion-based linear regression, covariance, and PCA [15]. Interestingly, alternative rewrites are possible for crossprod. We start with a straightforward “naive method” in Algorithm 1. Since t(T) %*% T is symmetric, we only need half of the elements in the output, along with the diagonal. Thus, this rewrite first computes the lower-left (and upper-right) by multiplying t(R) by the product of t(FK) and S, the order of which again avoids materialization. Second, it computes the cross-product of S for the upper-left. Third, it computes the cross-product of FK and thus, the cross-product of FK %*% R without materializing the join. Finally, the results are stitched appropriately using rbind and cbind. The approach in [30] to factorize a part of the so-called “co-factor” matrix for linear regression is similar.

³ By convention, data examples are rows in R, which means crossprod is actually the Gram matrix in LA textbooks [21].

Algorithm 1: Cross-product (Naive method)

P = t(R) %*% (t(FK) %*% S)
return cbind(rbind(t(S) %*% S, P), rbind(t(P), t(R) %*% ((t(FK) %*% FK) %*% R)))

While already a bit optimized, Algorithm 1 still has two inefficiency issues. First, it does not fully exploit the symmetry of some components. Second, the transposed multiplication of a sparse matrix (t(FK) %*% FK) is a non-trivial cost in many cases. We present a novel rewrite, the “efficient method,” that resolves both issues. The first one is resolved by using crossprod(S) directly instead of t(S) %*% S. The second one is more subtle; we make three observations: (1) t(FK) %*% FK, denoted FKp, is not only symmetric but also diagonal. (2) FKp[i, i] is simply the number of ones in the i-th column of FK. Thus, FKp ≡ diag(colSums(FK)), where diag is a standard LA operation that creates a diagonal matrix given a vector. (3) Denoting the element-wise square root of FKp by FKp^(0.5), we have:

t(R) %*% (t(FK) %*% FK) %*% R ≡ crossprod(FKp^(0.5) %*% R)

Integrating these observations, the efficient method is presented in Algorithm 2.
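The naive and efficient cross-product rewrites can be compared against the materialized result as follows. This is a sketch under assumed toy dimensions; FK is dense here, and names such as `cp_naive` and `cp_eff` are illustrative only.

```r
set.seed(3)
nS <- 8; nR <- 3; dS <- 2; dR <- 3
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), c(1, 2, 3, 1, 2, 3, 1, 1))] <- 1   # assumed foreign keys
T_mat <- cbind(S, FK %*% R)

# Observations (1) and (2): t(FK) %*% FK is diagonal and holds the
# number of S rows that reference each row of R.
FKp    <- t(FK) %*% FK
fkp_ok <- isTRUE(all.equal(FKp, diag(colSums(FK))))

P <- t(R) %*% (t(FK) %*% S)                # lower-left block

# Naive method (Algorithm 1).
cp_naive <- cbind(rbind(t(S) %*% S, P),
                  rbind(t(P), t(R) %*% ((t(FK) %*% FK) %*% R)))

# Efficient method (Algorithm 2): scale rows of R by the square root of
# the reference counts, then take a single crossprod.
cp_eff <- cbind(rbind(crossprod(S), P),
                rbind(t(P), crossprod(diag(colSums(FK))^0.5 %*% R)))

cp_mat <- crossprod(T_mat)                 # materialized reference
```

One caveat of this sketch: `diag(v)` builds an identity matrix when `v` has length one, so a table R with a single row would need `diag(v, nrow = 1)` instead.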

3.3.6 Matrix Inversion Operators

⁴ There is also a binary version: crossprod(T1, T2) = t(T1) %*% T2. If only T2 is normalized, it is RMM; if only T1 is normalized, it is transposed RMM, which is discussed in Section 3.4. If both are normalized, it is a transposed double multiplication, which is discussed in the appendix.

Algorithm 2: Cross-product (Efficient method)

P = t(R) %*% (t(FK) %*% S)
return cbind(rbind(crossprod(S), P), rbind(t(P), crossprod(diag(colSums(FK))^(0.5) %*% R)))

In ML, matrix inversion (called solve in R) typically arises only over a full-rank crossprod of the denormalized data matrix T (which becomes a regular matrix), e.g., for linear regression [19] as shown in Section 4. T is seldom directly inverted in ML. Nevertheless, we do have the following rewrite rule if T is square and invertible:

solve(T )→ solve(crossprod(T )) %*% (t(T ))

Interestingly, we show in the appendix that it is highly unlikely in practice that T is invertible because it imposes a strict constraint on the relative dimensions of the base tables [13]. Thus, we also consider the Moore-Penrose pseudo-inverse operation (called ginv in R). For pseudo-inverse, we have the following rewrite rules:

ginv(T )→ ginv(crossprod(T )) %*% t(T ), if d < n

ginv(T )→ t(T ) %*% ginv(crossprod(t(T ))), o/w
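The first pseudo-inverse rule (d < n) can be sanity-checked without leaving base R. The sketch below assumes T has full column rank, so that ginv(crossprod(T)) coincides with solve(crossprod(T)); that substitution, the toy sizes, and the names `cp`, `G`, and `pinv_f` are assumptions of this example rather than part of the rewrite rules. The result is verified against the four Moore-Penrose conditions.

```r
set.seed(4)
nS <- 8; nR <- 3; dS <- 2; dR <- 2
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), c(1, 2, 3, 1, 2, 3, 1, 2))] <- 1   # assumed foreign keys
T_mat <- cbind(S, FK %*% R)                # n = 8 rows, d = 4 columns

# crossprod(T) via the efficient rewrite (Algorithm 2).
P  <- t(R) %*% (t(FK) %*% S)
cp <- cbind(rbind(crossprod(S), P),
            rbind(t(P), crossprod(diag(colSums(FK))^0.5 %*% R)))

# Assumption: full column rank, so the pseudo-inverse of cp is its inverse.
G <- solve(cp)

# G %*% t(T) = t(T %*% t(G)); the inner product is a factorized LMM.
Gt     <- t(G)
pinv_f <- t(S %*% Gt[1:dS, , drop = FALSE] +
            FK %*% (R %*% Gt[(dS + 1):(dS + dR), , drop = FALSE]))

# Moore-Penrose conditions, checked against the materialized T.
c1 <- isTRUE(all.equal(T_mat %*% pinv_f %*% T_mat, T_mat))
c2 <- isTRUE(all.equal(pinv_f %*% T_mat %*% pinv_f, pinv_f))
c3 <- isTRUE(all.equal(t(T_mat %*% pinv_f), T_mat %*% pinv_f))
c4 <- isTRUE(all.equal(t(pinv_f %*% T_mat), pinv_f %*% T_mat))
```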

3.3.7 Non-Factorizable Operators

Element-wise matrix arithmetic operators such as addition do not necessarily have redundancy in their computations when applied to the output of joins. We call such operators “non-factorizable.” To see why such operators may not have redundancy, consider the addition Z = T + X, where T is the normalized matrix and X is a regular matrix of the same size, i.e., nS × (dS + dR). In general, it is possible that X has no redundancy, i.e., no repetitions in its entries, even though T has the redundancy introduced by the join. Thus, computing each element of Z involves adding an element of T (repeated or not) with a potentially unique element of X, which means there is no redundancy in the computations for Z. Of course, there could be “instance-specific” redundancy in X, e.g., some elements happen to be repeated by chance. Exploiting such instance-specific redundancy is beyond the scope of this work. Fortunately, element-wise matrix arithmetic operations are rare in ML. To the best of our knowledge, there is no popular ML algorithm where these operations are the performance bottleneck.⁵ Thus, we ignore these operations henceforth.

3.4 Operator Rewrites with Transpose

When a normalized matrix is transposed, i.e., we compute t(T), the redundancy in T is preserved but its structure changes. Since transpose is a unary matrix operator, any LA expression with transpose is no longer a rewrite of a single operation but rather an expression-level rewrite, which could make the integration more complicated (e.g., we might need to build a parser for R). As mentioned in Section 3.2, this issue is circumvented by adding a transpose flag to the normalized matrix data structure. This flag is set when T is transposed and unset if it is transposed again. We now present a new set of rewrite rules that replace an operation on t(T) with an operation on T, which means the rewrite rules from Section 3.3 can be reused.

⁵ Such operations do arise in non-ML applications of LA, e.g., scientific simulations and financial engineering, but it is not clear if these applications have normalized data.

Element-wise Scalar Operators. The output is a transposed normalized matrix.

t(T) ⊘ x → t(T ⊘ x) ; x ⊘ t(T) → t(x ⊘ T)

f(t(T)) → t(f(T))

Aggregation Operators. The output is a column vector, row vector, or a scalar.

colSums(t(T ))→t(rowSums(T ))

rowSums(t(T ))→ t(colSums(T )) ; sum(t(T ))→ sum(T )

LMM and RMM. The output is a regular matrix.

t(T ) %*% X → t(t(X) %*% T )

X %*% t(T )→ t(T %*% t(X))

Cross-product. If the input is a transposed normalized matrix, this is the Gram matrix, which is used in some ML algorithms such as kernel-based SVMs. The output is a regular matrix.

crossprod(t(T ))→ S %*% t(S)+

FK %*% (R %*% t(R)) %*% t(FK)
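The transpose rules above reduce to operations already defined on T, which a short base-R sketch can confirm. The toy sizes and the names `tl_f`, `gram_f`, and `rs_f` are assumptions for illustration; the factorized rowSums used in the last check is the single-table case of the aggregation rule.

```r
set.seed(5)
nS <- 6; nR <- 3; dS <- 2; dR <- 3
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), c(1, 2, 3, 1, 2, 3))] <- 1   # assumed foreign keys
T_mat <- cbind(S, FK %*% R)
X <- matrix(rnorm(nS * 2), nS, 2)

# Transposed LMM: t(T) %*% X -> t(t(X) %*% T), where t(X) %*% T is an RMM.
tl_f  <- t(cbind(t(X) %*% S, (t(X) %*% FK) %*% R))
tl_ok <- isTRUE(all.equal(tl_f, t(T_mat) %*% X))

# Gram matrix: crossprod(t(T)) equals T %*% t(T).
gram_f  <- S %*% t(S) + FK %*% (R %*% t(R)) %*% t(FK)
gram_ok <- isTRUE(all.equal(gram_f, tcrossprod(T_mat)))

# Aggregation: colSums(t(T)) equals the factorized rowSums(T).
rs_f   <- rowSums(S) + FK %*% rowSums(R)
agg_ok <- isTRUE(all.equal(as.numeric(rs_f), colSums(t(T_mat))))
```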

Matrix Inversion Operators. It suffices to use the rewrites devised for crossprod, LMM, RMM, and transposes directly:

solve(t(T)) → ginv(crossprod(t(T))) %*% T

ginv(t(T)) → ginv(crossprod(t(T))) %*% T, if n < d

ginv(t(T)) → T %*% ginv(crossprod(T)), o/w

3.5 Extension to Multi-table Joins

We now extend our framework to multi-table PK-FK joins, specifically, star schema joins, which are ubiquitous in practice. For example, in recommendation systems such as Netflix and Amazon, the table with ratings has two foreign keys referring to tables about users and products. Thus, there is one entity table and two attribute tables. Formally, the schema is as follows: one entity table/matrix S, q attribute tables R1, ..., Rq, and q associated PK-FK matrices FK1, ..., FKq. The materialized join output T is cbind(S, FK1 %*% R1, ..., FKq %*% Rq). The extended normalized matrix is the tuple (S, FK1, ..., FKq, R1, ..., Rq). We now present the extended rewrite rules.

Element-wise Scalar Operators. The extension is straightforward and as follows:

T ⊘ x → (S ⊘ x, FK1, ..., FKq, R1 ⊘ x, ..., Rq ⊘ x)

x ⊘ T → (x ⊘ S, FK1, ..., FKq, x ⊘ R1, ..., x ⊘ Rq)

f(T) → (f(S), FK1, ..., FKq, f(R1), ..., f(Rq))

Aggregation Operators. These require pre-aggregation of each Ri using FKi and then combining the partial results, shown as follows.

colSums(T) → cbind(colSums(S), colSums(FK1) %*% R1, ..., colSums(FKq) %*% Rq)

rowSums(T) → rowSums(S) + \sum_{i=1}^{q} FKi %*% rowSums(Ri)

sum(T) → sum(S) + \sum_{i=1}^{q} colSums(FKi) %*% rowSums(Ri)

LMM. We need some notation. Let the dimensions of Ri be nRi × dRi. Thus d = dS + \sum_{i=1}^{q} dRi. Define d′_i = dS + \sum_{j=1}^{i} dRj, for i = 1 to q, and d′_0 = dS. Given X of size d × m (m ≥ 1), the rewrite is as follows.

T %*% X → S %*% X[1 : dS, ] + \sum_{i=1}^{q} FKi %*% (Ri %*% X[d′_{i−1} + 1 : d′_i, ])
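The offsets d′_0, ..., d′_q make the multi-table LMM a simple loop. The following base-R sketch assumes a star schema with q = 2 attribute tables held in lists; the list names `Rs`, `FKs`, `fks` and the vector `dprime` are illustrative, not part of the framework.

```r
set.seed(6)
nS <- 6; dS <- 2
S   <- matrix(rnorm(nS * dS), nS, dS)
Rs  <- list(matrix(rnorm(3 * 2), 3, 2), matrix(rnorm(2 * 3), 2, 3))
fks <- list(c(1, 2, 3, 1, 2, 3), c(1, 1, 2, 2, 1, 2))    # assumed foreign keys
FKs <- lapply(seq_along(Rs), function(i) {
  FK <- matrix(0, nS, nrow(Rs[[i]]))
  FK[cbind(seq_len(nS), fks[[i]])] <- 1
  FK
})
# Materialized T = cbind(S, FK1 %*% R1, FK2 %*% R2).
T_mat <- do.call(cbind, c(list(S), Map(function(FK, R) FK %*% R, FKs, Rs)))

# Column offsets: dprime[1] is d'_0 = dS, dprime[i + 1] is d'_i.
dprime <- cumsum(c(dS, sapply(Rs, ncol)))
d <- dprime[length(dprime)]
X <- matrix(rnorm(d * 2), d, 2)

lmm_f <- S %*% X[1:dS, , drop = FALSE]
for (i in seq_along(Rs)) {
  rows  <- (dprime[i] + 1):dprime[i + 1]   # d'_{i-1} + 1 : d'_i
  lmm_f <- lmm_f + FKs[[i]] %*% (Rs[[i]] %*% X[rows, , drop = FALSE])
}
lmm_ok <- isTRUE(all.equal(lmm_f, T_mat %*% X))
```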

RMM. Note that the dimensions of FKi are nS × nRi. Given X of size m × nS (m ≥ 1), the rewrite is as follows.

X %*% T → cbind(X %*% S, (X %*% FK1) %*% R1, ..., (X %*% FKq) %*% Rq)

Cross-product. Since this rewrite is more tedious, for the sake of readability, we specify the parts of crossprod(T) (in short, C(T)) separately. Using the notation from LMM, the rewrite is as follows (1 ≤ i ≤ q).

C[1 : d′_0, 1 : d′_0] = crossprod(S),

C[1 : d′_0, d′_0 + 1 : d] = cbind((t(S) %*% FK1) %*% R1, (t(S) %*% FK2) %*% R2, ..., (t(S) %*% FKq) %*% Rq),

C[d′_{i−1} + 1 : d′_i, d′_{i−1} + 1 : d′_i] = crossprod(diag(colSums(FKi)^(0.5)) %*% Ri),

C[d′_{i−1} + 1 : d′_i, d′_i + 1 : d] = cbind(t(Ri) %*% (t(FKi) %*% FK_{i+1}) %*% R_{i+1}, t(Ri) %*% (t(FKi) %*% FK_{i+2}) %*% R_{i+2}, ..., t(Ri) %*% (t(FKi) %*% FKq) %*% Rq),

C[d′_i + 1 : d, d′_{i−1} + 1 : d′_i] = t(C[d′_{i−1} + 1 : d′_i, d′_i + 1 : d])

On the other hand, the rewrite rule for the cross-product of t(T) is as follows:

crossprod(t(T)) → crossprod(t(S)) + \sum_{i=1}^{q} FKi %*% crossprod(t(Ri)) %*% t(FKi)
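The block-wise cross-product and the Gram-matrix rule for a star schema can be assembled as in the sketch below. It is base R with q = 2 and assumed toy sizes; the names `Rs`, `FKs`, `dprime`, `C_f`, and `gram_f` are illustrative. The block for the S columns against each Ri is mirrored explicitly by symmetry, and the indexing assumes every block has at least two rows and columns.

```r
set.seed(7)
nS <- 6; dS <- 2
S   <- matrix(rnorm(nS * dS), nS, dS)
Rs  <- list(matrix(rnorm(3 * 2), 3, 2), matrix(rnorm(2 * 3), 2, 3))
fks <- list(c(1, 2, 3, 1, 2, 3), c(1, 1, 2, 2, 1, 2))    # assumed foreign keys
FKs <- lapply(seq_along(Rs), function(i) {
  FK <- matrix(0, nS, nrow(Rs[[i]]))
  FK[cbind(seq_len(nS), fks[[i]])] <- 1
  FK
})
T_mat  <- do.call(cbind, c(list(S), Map(function(FK, R) FK %*% R, FKs, Rs)))
q      <- length(Rs)
dprime <- cumsum(c(dS, sapply(Rs, ncol)))  # d'_0, ..., d'_q
d      <- dprime[q + 1]

C_f <- matrix(0, d, d)
C_f[1:dS, 1:dS] <- crossprod(S)
for (i in seq_len(q)) {
  ri <- (dprime[i] + 1):dprime[i + 1]
  C_f[1:dS, ri] <- (t(S) %*% FKs[[i]]) %*% Rs[[i]]
  C_f[ri, 1:dS] <- t(C_f[1:dS, ri])                      # by symmetry
  C_f[ri, ri]   <- crossprod(diag(colSums(FKs[[i]])^0.5) %*% Rs[[i]])
  for (j in seq_len(q)) if (j > i) {
    rj <- (dprime[j] + 1):dprime[j + 1]
    C_f[ri, rj] <- t(Rs[[i]]) %*% (t(FKs[[i]]) %*% FKs[[j]]) %*% Rs[[j]]
    C_f[rj, ri] <- t(C_f[ri, rj])                        # by symmetry
  }
}
cp_ok <- isTRUE(all.equal(C_f, crossprod(T_mat)))

# Gram matrix of the transposed normalized matrix.
gram_f <- crossprod(t(S))
for (i in seq_len(q)) {
  gram_f <- gram_f + FKs[[i]] %*% crossprod(t(Rs[[i]])) %*% t(FKs[[i]])
}
gram_ok <- isTRUE(all.equal(gram_f, tcrossprod(T_mat)))
```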

3.6 Extension to M:N Joins

We now extend our framework to a general non-PK-FK equi-join (“M:N” join) between S and R. We discuss the case of multi-table M:N joins in the appendix. Let the join attribute in S (resp. R) be denoted JS (resp. JR). Attach attributes NS and NR to the respective tables to encode row numbers, i.e., NS (resp. NR) takes values from 1 to nS (resp. nR). We need to capture which tuples (rows) of S and R get mapped to which rows of T = S ⋈_{JS=JR} R. To do so, first compute T′ = π_{NS,JS}(S) ⋈_{JS=JR} π_{NR,JR}(R) with non-deduplicating projections (potentially, a relational cross-product of the projected join columns). Then, create two indicator matrices IS and IR of dimensions |T′| × nS and |T′| × nR respectively:

IS[i, j] = 1 if the i-th row of T′ has NS = j, and 0 otherwise
IR[i, j] = 1 if the i-th row of T′ has NR = j, and 0 otherwise

Algorithm 3: Cross-product for M:N join (Naive)

P = t(R) %*% ((t(IR) %*% IS) %*% S)
TS = t(S) %*% ((t(IS) %*% IS) %*% S)
TR = t(R) %*% ((t(IR) %*% IR) %*% R)
return cbind(rbind(TS, P), rbind(t(P), TR))

Algorithm 4: Cross-product for M:N join (Efficient)

P = t(R) %*% ((t(IR) %*% IS) %*% S)
TS = crossprod(diag(colSums(IS))^(0.5) %*% S)
TR = crossprod(diag(colSums(IR))^(0.5) %*% R)
return cbind(rbind(TS, P), rbind(t(P), TR))

IS and IR are also very sparse: nnz(IS) = nnz(IR) = |T′|. Without loss of generality, assume each column of IS and IR has at least one non-zero, i.e., each tuple of S and R contributes to at least one tuple of T; otherwise, we can remove those tuples a priori. The extended normalized matrix is now (S, IS, IR, R) and T = cbind(IS %*% S, IR %*% R). The extensions to the rewrite rules are as follows:

Element-wise Scalar Operators.

T ⊘ x → (S ⊘ x, IS, IR, R ⊘ x)

x ⊘ T → (x ⊘ S, IS, IR, x ⊘ R) ; f(T) → (f(S), IS, IR, f(R))

Aggregation Operators.

rowSums(T) → IS %*% rowSums(S) + IR %*% rowSums(R)

colSums(T) → cbind(colSums(IS) %*% S, colSums(IR) %*% R)

sum(T) → colSums(IS) %*% rowSums(S) + colSums(IR) %*% rowSums(R)

LMM and RMM.

T %*% X → IS %*% (S %*% X[1 : dS , ]) +

IR %*% (R %*% X[dS + 1 : dS + dR, ])

X %*% T → cbind((X %*% IS) %*% S,

(X %*% IR) %*% R)
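For an M:N join, the indicator matrices can be built from the joined row numbers and then plugged into the LMM and RMM rules. This base-R sketch uses `merge` on two small data frames to play the role of T′; the join values, sizes, and names (`Tp`, `lmm_f`, `rmm_f`, `Y`) are assumptions for illustration, and the indicators are dense here although they would be sparse in practice.

```r
set.seed(8)
S  <- matrix(rnorm(4 * 2), 4, 2); JS <- c("a", "a", "b", "b")
R  <- matrix(rnorm(3 * 2), 3, 2); JR <- c("a", "b", "b")
dS <- ncol(S); dR <- ncol(R)

# T': join of the (row number, join attribute) projections, duplicates kept.
Tp <- merge(data.frame(NS = seq_along(JS), J = JS),
            data.frame(NR = seq_along(JR), J = JR), by = "J")

# Indicator matrices: one 1 per row, in the column of the source tuple.
IS <- matrix(0, nrow(Tp), nrow(S)); IS[cbind(seq_len(nrow(Tp)), Tp$NS)] <- 1
IR <- matrix(0, nrow(Tp), nrow(R)); IR[cbind(seq_len(nrow(Tp)), Tp$NR)] <- 1
T_mat <- cbind(IS %*% S, IR %*% R)         # materialized join output

# LMM over the normalized matrix (S, IS, IR, R).
X <- matrix(rnorm((dS + dR) * 2), dS + dR, 2)
lmm_f <- IS %*% (S %*% X[1:dS, , drop = FALSE]) +
  IR %*% (R %*% X[(dS + 1):(dS + dR), , drop = FALSE])
lmm_ok <- isTRUE(all.equal(lmm_f, T_mat %*% X))

# RMM over the normalized matrix.
Y <- matrix(rnorm(2 * nrow(Tp)), 2, nrow(Tp))
rmm_f  <- cbind((Y %*% IS) %*% S, (Y %*% IR) %*% R)
rmm_ok <- isTRUE(all.equal(rmm_f, Y %*% T_mat))
```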

Cross-product. The cross-product rewrites for an M:N join using the naive method and the efficient method are presented in Algorithm 3 and Algorithm 4, respectively. On the other hand, the rewrite rule for the cross-product of t(T) is as follows:

crossprod(t(T ))→IS %*% crossprod(t(S)) %*% t(IS)+

IR %*% crossprod(t(R)) %*% t(IR)
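Algorithm 4 and the Gram-matrix rule for an M:N join can be verified in the same way. In this base-R sketch the joined row numbers `NS` and `NR` are written out by hand as an assumption, and the names `cp_f` and `gram_f` are illustrative.

```r
set.seed(9)
S <- matrix(rnorm(4 * 2), 4, 2)
R <- matrix(rnorm(3 * 2), 3, 2)
NS <- c(1, 2, 3, 3, 4, 4)                  # assumed S row of each joined tuple
NR <- c(1, 1, 2, 3, 2, 3)                  # assumed R row of each joined tuple
IS <- matrix(0, length(NS), nrow(S)); IS[cbind(seq_along(NS), NS)] <- 1
IR <- matrix(0, length(NR), nrow(R)); IR[cbind(seq_along(NR), NR)] <- 1
T_mat <- cbind(IS %*% S, IR %*% R)

# Algorithm 4: each row of IS and IR holds a single 1, so t(IS) %*% IS and
# t(IR) %*% IR are diagonal matrices of repetition counts.
P  <- t(R) %*% ((t(IR) %*% IS) %*% S)
TS <- crossprod(diag(colSums(IS))^0.5 %*% S)
TR <- crossprod(diag(colSums(IR))^0.5 %*% R)
cp_f  <- cbind(rbind(TS, P), rbind(t(P), TR))
cp_ok <- isTRUE(all.equal(cp_f, crossprod(T_mat)))

# Gram matrix of the transposed normalized matrix.
gram_f  <- IS %*% crossprod(t(S)) %*% t(IS) + IR %*% crossprod(t(R)) %*% t(IR)
gram_ok <- isTRUE(all.equal(gram_f, tcrossprod(T_mat)))
```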

Observe that if the join is PK-FK, we have IS = I (identity matrix of size nS × nS) and the above rules implicitly become equivalent to their counterparts from Section 3.3.

3.7 Will Rewrites Always Be Faster?

The rewrites avoid computational redundancy caused by joins. But if the joins are (too) selective and/or introduce no redundancy, the rewrites could worsen performance because T could become smaller than S and R put together. This dichotomy is an instance of the classical problem of cardinality estimation; it is orthogonal to our work and we leave it to future work to integrate sophisticated cardinality estimation ideas into LA systems. In this work, we drop tuples of S and R that do not contribute to T, as explained in Sections 3.1 and 3.6. Since many ML algorithms are iterative, this pre-processing time is relatively minor. But interestingly, in some extreme cases, even after such pre-processing and even if the joins introduce some redundancy, rewrites could worsen performance because the overheads caused by the extra LA operations could dominate the computational redundancy saved. Empirically (Section 5.1), we found such slow-downs to be almost always < 2x, but it is still helpful to predict and avoid these. One approach is to devise exact “cost models” for the runtimes of LA operations, but such an approach is too cumbersome and ties us closely to the internals of the LA system used. Instead, we propose a simpler, more generic approach that uses a heuristic decision rule that thresholds on key statistics of the join input matrices (relative numbers of rows and columns). We explain more about why our approach is feasible and what the decision rule looks like in Section 5.1.

4. APPLICATION TO ML ALGORITHMS

We now show how Morpheus automatically “factorizes” a few popular ML algorithms. We pick a diverse and representative set of ML algorithms: logistic regression for classification, least squares for regression, K-Means for clustering, and Gaussian non-negative matrix factorization (GNMF) for feature extraction. For each algorithm, we present the standard single-table version of its LA script, followed by the “factorized” version for a PK-FK join. Note that these can be easily extended to multi-table joins and M:N joins using rewrite rules from Sections 3.5 and 3.6, respectively. These rewrites are shown for illustration only; Morpheus uses the rewrite rules on-the-fly without code regeneration.

Logistic Regression for Classification. The standard algorithm using gradient descent (GD) is in Algorithm 5; the automatically factorized version is in Algorithm 6. The following rewrite rules are used: LMM from Section 3.3 (for T %*% w) and transposed multiplication from Section 3.4 (for t(T) %*% t(tmp)).

Algorithm 5: Logistic Regression (Standard)

Input: Regular matrix T, Y, w, α
for i in 1 : max_iter do
    w = w + α ∗ (t(T) %*% (Y/(1 + exp(T %*% w))))
end

Algorithm 6: Logistic Regression (Factorized)

Input: Normalized matrix (S, FK, R), Y, w, α.
for i in 1 : max_iter do
    tmp = t(Y/(1 + exp(S %*% w[1 : dS, ] + FK %*% (R %*% w[dS + 1 : dS + dR, ]))))
    w = w + α ∗ t(cbind(tmp %*% S, (tmp %*% FK) %*% R))
end
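Algorithms 5 and 6 can be run side by side to confirm that they produce the same weights. The sketch below follows the update exactly as written in the two algorithms; the toy sizes, the labels in {-1, +1}, the step size `alpha`, and the iteration count are assumptions for illustration.

```r
set.seed(10)
nS <- 20; nR <- 4; dS <- 2; dR <- 3
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), rep(1:nR, length.out = nS))] <- 1  # assumed foreign keys
T_mat <- cbind(S, FK %*% R)
Y <- matrix(sample(c(-1, 1), nS, replace = TRUE), nS, 1)

alpha <- 0.01; max_iter <- 5
w_m <- w_f <- matrix(0, dS + dR, 1)

for (i in 1:max_iter) {
  # Algorithm 5: standard update on the materialized matrix.
  w_m <- w_m + alpha * (t(T_mat) %*% (Y / (1 + exp(T_mat %*% w_m))))

  # Algorithm 6: LMM for T %*% w, then transposed multiplication as an RMM.
  tmp <- t(Y / (1 + exp(S %*% w_f[1:dS, , drop = FALSE] +
                        FK %*% (R %*% w_f[(dS + 1):(dS + dR), , drop = FALSE]))))
  w_f <- w_f + alpha * t(cbind(tmp %*% S, (tmp %*% FK) %*% R))
}
lr_ok <- isTRUE(all.equal(w_m, w_f))
```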

Least Squares Linear Regression. The standard algorithm using matrix inversion is in Algorithm 7; the automatically factorized version is in Algorithm 8. The following rewrite rules are used: cross-product from Section 3.3 and transposed multiplication from Section 3.4 (for t(T) %*% Y). If d is too large, or if the cross-product is singular, GD is used instead; this is similar to Algorithm 5 and Algorithm 6 and for brevity, we skip it here and present it in the appendix. A hybrid algorithm that constructs the so-called “co-factor” matrix (using the cross-product) and then uses GD was presented and factorized in [30]. Their algorithm can also be automatically factorized by Morpheus; we discuss this in more detail in the appendix.

Algorithm 7: Linear Regression (Standard)

Input: Regular matrix T, Y, w, α
w = solve(crossprod(T)) %*% (t(T) %*% Y)

Algorithm 8: Linear Regression (Factorized)

Input: Normalized matrix (S, FK, R), Y, w, α.
tmp = solve(crossprod((S, FK, R))) // Use Algo. 2
w = tmp %*% t(cbind(t(Y) %*% S, (t(Y) %*% FK) %*% R))
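Algorithm 8 can likewise be compared with Algorithm 7 on toy data. This is a base-R sketch under the assumption that crossprod(T) is non-singular; the sizes and the names `cp`, `w_f`, and `w_m` are illustrative.

```r
set.seed(11)
nS <- 20; nR <- 4; dS <- 2; dR <- 3
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), rep(1:nR, 5))] <- 1  # assumed foreign keys
T_mat <- cbind(S, FK %*% R)
Y <- matrix(rnorm(nS), nS, 1)

# Algorithm 8: crossprod via Algorithm 2, then transposed multiplication.
P  <- t(R) %*% (t(FK) %*% S)
cp <- cbind(rbind(crossprod(S), P),
            rbind(t(P), crossprod(diag(colSums(FK))^0.5 %*% R)))
w_f <- solve(cp) %*% t(cbind(t(Y) %*% S, (t(Y) %*% FK) %*% R))

# Algorithm 7 on the materialized matrix.
w_m <- solve(crossprod(T_mat)) %*% (t(T_mat) %*% Y)
linreg_ok <- isTRUE(all.equal(w_f, w_m))
```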

K-Means Clustering. The factorized version is in Algorithm 9 while the standard version is shown in the appendix. The following rewrite rules are used: element-wise exponentiation and aggregation from Section 3.3 (for rowSums(T^2)), LMM from Section 3.3 (for T %*% C), and transposed LMM from Section 3.4 (for t(T) %*% CA). Note that K-Means requires matrix-matrix multiplications, not just matrix-vector multiplications. This demonstrates a key benefit of the generality of our approach.

Algorithm 9: K-Means Clustering (Factorized)

Input: Normalized matrix (S, FK, R), # centroids K
// Initialize centroids matrix C of size d × K
Un1 = matrix(1, nrow = nS, ncol = 1)
Ud1 = matrix(1, nrow = dS + dR, ncol = 1)
U1K = matrix(1, nrow = 1, ncol = K)
DT = (rowSums(S^2) + FK %*% rowSums(R^2)) %*% U1K
twoS = 2 ∗ S; twoR = 2 ∗ R
for i in 1 : max_iter do
    tmp = twoS %*% C[1 : dS, ] + FK %*% (twoR %*% C[dS + 1 : dS + dR, ])
    D = DT − tmp + Un1 %*% colSums(C^2)
    CA = (D == (apply(D, 1, min) %*% U1K))
    tmp = t(cbind(t(CA) %*% S, (t(CA) %*% FK) %*% R))
    C = tmp/(Ud1 %*% colSums(CA))
end
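One iteration of Algorithm 9 can be checked against direct computations on the materialized matrix. In this base-R sketch the initial centroids are simply the first K rows of T, which is an assumption of the example, as are the toy sizes and the names `D_m`, `C_new`, and `C_m`.

```r
set.seed(12)
nS <- 12; nR <- 3; dS <- 2; dR <- 2; K <- 2
d  <- dS + dR
S  <- matrix(rnorm(nS * dS), nS, dS)
R  <- matrix(rnorm(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), rep(1:nR, length.out = nS))] <- 1  # assumed foreign keys
T_mat <- cbind(S, FK %*% R)
C <- t(T_mat[1:K, , drop = FALSE])         # d x K centroids (assumed init)

U1K <- matrix(1, 1, K)
Ud1 <- matrix(1, d, 1)
# Squared row norms of T, pre-aggregated over R.
DT  <- (rowSums(S^2) + FK %*% rowSums(R^2)) %*% U1K
# Factorized LMM for 2 * T %*% C, slicing C by rows.
tmp <- (2 * S) %*% C[1:dS, , drop = FALSE] +
  FK %*% ((2 * R) %*% C[(dS + 1):d, , drop = FALSE])
D   <- DT - tmp + matrix(1, nS, 1) %*% matrix(colSums(C^2), nrow = 1)

# Reference: squared distances computed from the materialized T.
D_m <- sapply(seq_len(K), function(k)
  rowSums((T_mat - matrix(C[, k], nS, d, byrow = TRUE))^2))

# Assignment matrix and centroid update via the transposed-LMM rewrite.
CA     <- (D == matrix(apply(D, 1, min), ncol = 1) %*% U1K) * 1
counts <- Ud1 %*% matrix(colSums(CA), nrow = 1)
C_new  <- t(cbind(t(CA) %*% S, (t(CA) %*% FK) %*% R)) / counts
C_m    <- (t(T_mat) %*% CA) / counts
```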

GNMF for Feature Extraction. The factorized version is in Algorithm 10 (the standard version is shown in the appendix). The following rewrite rules are used: RMM and LMM from Section 3.3 (for t(W) %*% T and T %*% H respectively). Similar to K-Means, GNMF also requires full matrix-matrix multiplications.
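One round of the multiplicative GNMF updates can be compared between the materialized and factorized forms. The sketch below is base R and assumes W is nS × r and H is d × r, which is what the updates H ∗ tmp/(H %*% crossprod(W)) and W ∗ tmp/(W %*% crossprod(H)) require dimensionally; the sizes and random initialization are also assumptions.

```r
set.seed(13)
nS <- 10; nR <- 3; dS <- 2; dR <- 3; r <- 2
d  <- dS + dR
S  <- matrix(runif(nS * dS), nS, dS)       # non-negative data
R  <- matrix(runif(nR * dR), nR, dR)
FK <- matrix(0, nS, nR)
FK[cbind(seq_len(nS), rep(1:nR, length.out = nS))] <- 1  # assumed foreign keys
T_mat <- cbind(S, FK %*% R)
W <- matrix(runif(nS * r), nS, r)
H <- matrix(runif(d * r), d, r)

# Materialized updates.
H_m <- H * (t(T_mat) %*% W) / (H %*% crossprod(W))
W_m <- W * (T_mat %*% H_m) / (W %*% crossprod(H_m))

# Factorized updates: RMM for t(W) %*% T, LMM for T %*% H.
tmp <- t(cbind(t(W) %*% S, (t(W) %*% FK) %*% R))
H_f <- H * tmp / (H %*% crossprod(W))
tmp <- S %*% H_f[1:dS, , drop = FALSE] +
  FK %*% (R %*% H_f[(dS + 1):d, , drop = FALSE])
W_f <- W * tmp / (W %*% crossprod(H_f))
```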

5. EXPERIMENTS

We compare the runtime performance of our rewrite rules for key LA operators and the four automatically factorized ML algorithms. Our goal is to evaluate the speed-ups provided by Morpheus and understand how they vary for different data dimensions. Both synthetic and real-world datasets are used.

Algorithm 10: Gaussian NMF (Factorized)

Input: Normalized matrix (S, FK, R), rank r
// Initialize W and H
for i in 1 : max_iter do
    tmp = t(cbind(t(W) %*% S, (t(W) %*% FK) %*% R))
    H = H ∗ tmp/(H %*% crossprod(W))
    tmp = S %*% H[1 : dS, ] + FK %*% (R %*% H[dS + 1 : dS + dR, ])
    W = W ∗ tmp/(W %*% crossprod(H))
end

Datasets. We generate synthetic datasets for PK-FK and M:N joins with a wide range of data dimensions as listed in Table 3 and Table 4, respectively. For the PK-FK joins, the quantities varied are the tuple ratio (nS/nR) and feature ratio (dR/dS), which, as explained in [25], help quantify the amount of redundancy introduced by a PK-FK join. The other parameters are fixed as per Table 3. For M:N joins, the number of tuples, number of features, and join attribute domain size are varied and the other parameters fixed as per Table 4. Seven real-world normalized datasets are adapted from [26] for the ML algorithms. These datasets are represented as sparse feature matrices to handle nominal features. Recall that Morpheus supports both dense and sparse matrices. The dimensions and sparsity are listed in Table 5. All real datasets have numeric target features in S, which we binarize for logistic regression and treat as regular features for K-Means and GNMF. The schemas and features are listed in the appendix.

PK-FK Join    | nS              | dS | nR   | dR
Tuple Ratio   | Varied          | 20 | 10^6 | 40 or 80
Feature Ratio | 2×10^7 or 10^7  | 20 | 10^6 | Varied

Table 3: Data dimension parameters for PK-FK joins.

M:N Join    | nS = nR         | dS = dR    | nU
# Tuples    | Varied          | 200 or 100 | 1000
# Features  | 2×10^5 or 10^5  | Varied     | 1000
Domain Size | 2×10^5 or 10^5  | 200        | Varied

Table 4: Data dimension parameters for M:N joins. nU is the domain size (number of unique values) of JS/JR.

Experimental Setup. All experiments were run on a machine with 20 Intel Xeon E5-2660 2.6 GHz cores, 160 GB RAM, and 3 TB disk with Ubuntu 14.04 LTS as the OS. Our code is implemented in R v3.2.3. Since all real datasets fit in memory as R matrices, we use Morpheus on standard R for all experiments, except for the scalability study with Morpheus on ORE.

5.1 Operator-level Results

We start by studying the effects of the rewrites on individual LA operator performance using synthetic data. This will be useful to understand the performance results for the ML algorithms later. The data preparation time is excluded for both the materialized version (in short, M), viz., joining the tables, and for the factorized version (in short, F), viz., constructing FK (IS and IR) matrices. Data preparation takes < 5% of the total time in almost all cases.

Dataset | (nS, dS, nnz)        | q | (nRi, dRi, nnz) for each attribute table
Expedia | 942142, 28, 5652852  | 2 | 11939, 12013, 107451; 37021, 40242, 555315
Movies  | 1000209, 1, 0        | 2 | 6040, 9509, 30200; 3706, 3839, 81532
Yelp    | 215879, 1, 0         | 2 | 11535, 11706, 380655; 43873, 43900, 307111
Walmart | 421570, 2, 421570    | 2 | 2340, 2387, 23400; 45, 53, 135
LastFM  | 343747, 1, 0         | 2 | 4099, 5019, 39992; 50000, 50233, 250000
Books   | 253120, 1, 0         | 2 | 27876, 28022, 83628; 49972, 53641, 249860
Flights | 66548, 21, 55301     | 3 | 540, 718, 3240; 3167, 6464, 22169; 3170, 6467, 22190

Table 5: Dataset statistics for the real-world datasets.

PK-FK Join. Figure 3 shows the speed-ups of F over M for four key operators. Other operators exhibit similar trends, so we present them (and the runtimes) in the appendix. Note that F is significantly faster than M for a wide range of data dimensions for all operators. The speed-ups increase with both the tuple ratio and feature ratio, but grow faster with the latter because the amount of redundancy in T, and thus, in the ML computations, increases faster with the feature ratio. Figure 3(b) shows that the speed-ups are slightly lower for LMM compared to scalar multiplication since the rewrite for LMM has slightly higher overhead. Interestingly, Figure 3(c,d) shows that the speed-ups for cross-product and pseudo-inverse grow much faster with the feature ratio. The reason is that their runtimes are at least quadratic in d, while the others are O(d).

Heuristic Decision Rule. Figure 3 also shows that F is indeed sometimes slower than M, as suggested earlier in Section 3.7. In these cases, the tuple ratios and/or feature ratios are very low. Since these regions exhibit an “L” shape, it motivates us to consider a heuristic decision rule that is a disjunctive predicate with two thresholds: if the tuple ratio is < τ or if the feature ratio is < ρ, we do not use F. We tune τ and ρ conservatively using the speed-up results from all of our experiments on synthetic data; we set τ = 5 and ρ = 1. This is conservative because it is unlikely to wrongly predict that a slow-down will not occur when it does, but it might wrongly predict that a slow-down will occur even though it does not; but even in the latter cases, the speed-ups of F over M were minor (< 50%). We leave more sophisticated approaches to future work.
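The decision rule amounts to a two-threshold predicate. The helper below is a hypothetical sketch for a single PK-FK join: the function name `use_factorized` and its signature are not from the paper, and only the thresholds τ = 5 and ρ = 1 are taken from the text.

```r
# Hypothetical helper (name and signature are illustrative assumptions).
# Returns TRUE when the factorized version (F) should be used.
use_factorized <- function(nS, nR, dS, dR, tau = 5, rho = 1) {
  tuple_ratio   <- nS / nR                 # rows of S per row of R
  feature_ratio <- dR / dS                 # columns of R per column of S
  # Disjunctive predicate: fall back to M if either ratio is too low.
  !(tuple_ratio < tau || feature_ratio < rho)
}

high_both  <- use_factorized(2e7, 1e6, 20, 40)   # TR = 20, FR = 2
low_tuple  <- use_factorized(2e6, 1e6, 20, 40)   # TR = 2,  FR = 2
low_feat   <- use_factorized(2e7, 1e6, 20, 10)   # TR = 20, FR = 0.5
```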

M:N Join. We now evaluate the rewritten operators for an M:N join. We set (nS, dS) = (nR, dR) and vary nU. Define the “join attribute uniqueness degree” as nU/nS. Note that as nU becomes smaller, more tuples are repeated after the join. nU = 1 leads to the full cartesian product. Figure 4 presents the runtimes for two key operators that arise in ML: LMM and cross-product. Other operators and other parameters are discussed in the appendix. We see that F is again significantly faster than M for a wide range of nU values for both operators. In fact, when nU/nS = 0.01, the speed-ups are nearly two orders of magnitude, which is comparable to the average number of times each tuple is repeated after the join. This confirms that our framework can efficiently handle M:N joins as well.

[Figure 3 plots: four panels (a) to (d), each with Tuple Ratio (0 to 20) on the x-axis and Feature Ratio (0 to 4) on the y-axis; regions are marked by speed-up range: speedup<1, 1<speedup<2, 2<speedup<3, speedup>3. Panel (d) has no speedup<1 region.]

Figure 3: Speed-ups of factorized LA operators over the respective materialized versions on synthetic data for a PK-FK join. (a) Scalar multiplication, (b) LMM, (c) Cross-product, and (d) Pseudo-inverse. Data dimensions are in Table 3. For the cross-product, Algorithm 2 is used (a comparison with Algorithm 1 is presented in the appendix).

[Figure 4 plots: two panels (a) and (b), each with Join Attribute Uniqueness Degree on the x-axis and Runtime (s) on the y-axis, both on log scales; series: F and M for nS = 2e+05 and nS = 1e+05.]

Figure 4: Runtimes for an M:N join of materialized (M) and factorized (F) versions of LA operators. (a) LMM and (b) Cross-product. We fix nS (= nR) as shown on the plots, dS = dR = 200, and vary nU/nS from 0.01 to 0.5.

5.2 ML Algorithm-level Results

We compare the materialized versions (M) of the ML algorithms with the Morpheus-factorized versions (F) from Section 4. Due to space constraints, we focus primarily on a PK-FK join; M:N join is discussed in the appendix (the takeaways are similar). We study the effects of the data dimensions using synthetic data and then present the results on the real data. We then compare Morpheus against prior ML algorithm-specific factorized ML tools. Finally, we study the scalability of Morpheus on ORE.

5.2.1 Results on Synthetic Datasets

Logistic Regression. Figure 5(a) shows the runtime for different tuple ratios (TR) and feature ratios (FR), while the appendix presents a plot varying the number of iterations. It is clear that F is significantly faster than M in most cases across a wide range of data dimensions. The runtime is dominated by the LMM T %*% w and the transposed LMM t(T) %*% tmp. Thus, the speed-up trends are similar to those for these operators in Figure 3.

Linear Regression. The results are in Figure 5(b). Again, F is significantly faster than M for a wide range of data dimensions. The runtime is dominated by crossprod(T) (see Algorithms 7 and 8). Thus, the speed-up trends are similar to those for crossprod in Figure 3. Gradient descent-based linear regression is similar to logistic regression; thus, we skip it for brevity and discuss it in the appendix.

K-Means and GNMF. The results for K-Means and GNMF are in Figure 5(c) and Figure 5(d), respectively. The runtime trends for the number of iterations for both are similar to logistic regression. Figure 5(c2) shows that K-Means runtime increases linearly with the number of centroids (k). The speed-up of F over M decreases slowly because unlike logistic regression, K-Means has extra computations beyond factorized operators whose contribution to the runtime increases as k increases. The trends for GNMF are similar.

Dataset | Lin. Reg. M | Lin. Reg. Sp | Log. Reg. M | Log. Reg. Sp | K-Means M | K-Means Sp | GNMF M | GNMF Sp
E       | 73.1        | 22.2         | 71.2        | 14.0         | 102.7     | 4.5        | 80.9   | 5.9
M       | 20.3        | 36.3         | 65.4        | 30.3         | 93.3      | 6.0        | 75.4   | 8.0
Y       | 20.4        | 36.4         | 20.2        | 30.1         | 25.8      | 6.1        | 21.3   | 12
W       | 12.0        | 10.9         | 13.2        | 9.8          | 19.5      | 2.0        | 14.0   | 2.8
L       | 7.5         | 11.0         | 7.7         | 8.7          | 13.8      | 2.3        | 9.4    | 3.4
B       | 3.2         | 5.2          | 3.1         | 3.9          | 7.8       | 1.3        | 4.1    | 1.4
F       | 1.4         | 4.4          | 1.7         | 3.4          | 2.9       | 1.8        | 1.9    | 2.0

Table 6: Runtimes (in seconds) on real data for the materialized approach (M) and speed-ups of Morpheus (Sp). E, M, Y, W, L, B, and F correspond to the datasets Expedia, Movies, Yelp, Walmart, LastFM, Books, and Flights respectively. Number of iterations is 20 for all ML algorithms; number of centroids is 10 for K-Means, and number of topics is 5 for GNMF.

5.2.2 Results on Real Datasets

Since all the real datasets have multi-table star schema joins, this experiment also evaluates our multi-table join extension. Table 6 presents the results. We see that Morpheus is significantly faster than the materialized approach (M) in almost all cases for all datasets, although the exact speed-ups differ widely across datasets and ML algorithms. The lowest speed-ups are mostly for GNMF and on Books, e.g., 1.4x for GNMF on Books, and 1.3x for K-Means on Books, primarily because the dataset has low feature ratios (as shown in Table 5) and GNMF and K-Means have extra computations after the factorized portions. On the other extreme, Movies and Yelp see the highest speed-ups, e.g., 36.3x and 36.4x resp. for linear regression, 30.3x and 30.1x for logistic regression. This is primarily because the runtimes of these ML algorithms are dominated by matrix multiplication operators, which are factorized by Morpheus, and these datasets have high feature and/or tuple ratios. Overall, these results validate that Morpheus not only generalizes factorized ML, but also yields over an order of magnitude of speed-ups on some real datasets for a few popular ML tasks.

5.2.3 Comparison with ML Algorithm-specific Tools

We would like to know if the generality of Morpheus is at the cost of possible performance gains compared to prior ML algorithm-specific tools. Since the tool from [30] is not open sourced, we contacted the authors; after discussions, we realized that their tool does not support the equivalent of M, which makes an apples-to-apples comparison impossible. Thus, we only compare with the Orion tool from [25]. Note that Orion only supports dense features and PK-FK joins, unlike Morpheus. We vary the feature ratio and report the speed-ups in Table 7. Morpheus achieves comparable or higher speed-ups (in fact, the runtimes were also lower than Orion). This is primarily due to hashing overheads in Orion. Overall, we see that Morpheus provides high generality without sacrificing on possible performance gains.

[Figure 5 plots: eight panels, each with Runtime (s) on the y-axis. (a1), (b1): Tuple Ratio on the x-axis, series F and M for FR = 4 and FR = 2. (a2), (b2): Feature Ratio on the x-axis, series F and M for TR = 20 and TR = 10. (c1), (d1): Number of Iterations on the x-axis. (c2): Number of Centroids on the x-axis. (d2): Number of Topics on the x-axis. Panels (c) and (d) show series F and M for FR = 4 and FR = 2.]

Figure 5: ML algorithms on synthetic data for a PK-FK join. Row 1: (a) Logistic Regression and (b) Linear Regression (using matrix inversion). Row 2: (c) K-Means and (d) GNMF. For (a), fix number of iterations to 20 (we vary this in the appendix). All data dimensions are listed in Table 3. For (c1) and (d1), we vary the number of iterations while fixing the number of centroids (resp. topics) for K-Means (resp. GNMF) to 10 (resp. 5). For (c2) and (d2), we set (nS, nR, dS) = (2 · 10^7, 10^6, 20), while dR is 40 (FR=2) and 80 (FR=4), and number of iterations is 20 for both algorithms.

Feature Ratio | 1   | 2   | 3   | 4
Orion [25]    | 1.6 | 2.0 | 2.5 | 2.8
Morpheus      | 2.0 | 3.7 | 4.8 | 5.7

Table 7: Speed-ups of factorized logistic regression over materialized for a PK-FK join. Fix (nS, nR, dS, Iters) = (2 × 10^6, 10^5, 20, 10); vary feature ratio (dR/dS).

Feature Ratio | 0.5   | 1      | 2      | 4
Materialized  | 98.27 | 130.09 | 169.36 | 277.52
Morpheus      | 56.30 | 62.51  | 68.54  | 73.33
Speed-up      | 1.8x  | 2.1x   | 2.5x   | 3.8x

Table 8: Per-iteration runtime (in minutes) of logistic regression on ORE for a PK-FK join. Fix (nS, nR, dS) = (10^8, 5 · 10^6, 60) and vary dR as per feature ratio (FR).

5.2.4 Scalability with OREORE executes LA operators over an ore.frame (physi-

cally, an RDBMS table) by pushing down computations tothe RDBMS [1]. However, since ORE does not expose theunderlying RDBMS multi-table abstractions (or the opti-mizer) to LA, by default, it needs the materialized singletable. In contrast, Morpheus on ORE realizes the benefitsof factorized ML on top of ORE (e.g., the ore.rowapply op-erator) without requiring changes to ORE. We compare theruntimes of Morpheus with the materialized version for lo-gistic regression on larger-than-memory synthetic data. Theresults are presented in Table 8 (PK-FK join) and Table 9(M:N join). We see that Morpheus yields speed-ups at scalefor both PK-FK and M:N joins, thus validating our claim

Domain Size 5× 105 105 5× 104 104

Materialized 1.98 13.04 119.54 346.93Morpheus 0.96 1.00 1.02 1.16Speed-up 2.1x 12.9x 117.3x 298.2x

Table 9: Per-iteration runtime (in minutes) of logistic re-gression on ORE for a M:N join. Fix (nS , nR, dS , dR) =(106, 106, 200, 200) and vary nU (join attribute domain size).

that Morpheus can leverage the scalability of existing LA systems. Since SystemML [9], Matlab, SciDB [14], and most other LA systems do not expose normalized data abstractions either, we expect our framework to benefit them too.

6. RELATED WORK

Factorized ML. As Figure 1(a) illustrates, prior works on factorized ML are ML algorithm-specific [24,25,29,30]. Our work unifies and generalizes such ideas to a wide variety of ML algorithms by leveraging linear algebra (LA). Furthermore, many prior approaches are platform-specific, with some being restricted to in-memory data [29]. By using LA, our work decouples the ML algorithm from system environment issues, which enables us to leverage existing industrial-strength LA systems for scalability and other orthogonal benefits. Nevertheless, these prior works inspired us to pursue a unifying and generic mechanism for optimizing ML over normalized data.

LA Systems. Several recent projects focus on supporting LA workloads over data systems [1,4,5,9,33]. There are also from-scratch systems that support LA, such as SciDB [14] and TensorFlow [5], both of which support tensor algebra, not just matrices. None of these systems optimizes LA over normalized data. While they offer physical data independence for LA, our work brings logical data independence to LA. Since Morpheus offers closure, it could be integrated into any of these LA systems; our prototype on ORE is an encouraging first step in this direction. Closely related to our goals are two recent optimizations introduced by SystemML: compressed LA (CLA) [17] and SPOOF [16]. CLA re-implements LA operators from scratch over compressed matrix formats to reduce the memory footprint.

Morpheus can be viewed as a “logical” form of compression that exploits schema information. Unlike CLA, the closure property of Morpheus means it does not require re-implementing LA operators from scratch. Furthermore, CLA does not target runtime speed-ups [17] and is thus complementary to Morpheus. SPOOF enables “sum-product” optimizations for LA expressions to avoid creating large intermediate matrices. While this is conceptually similar to avoiding the materialization of joins, our work differs in both technical and architectural aspects. SPOOF does not exploit schema information, which means it cannot subsume factorized ML without an abstraction like our normalized matrix. But if SPOOF is extended with new optimizations that include cbind, it can subsume rewrites for simple operations like matrix aggregation, although not our rewrites for complex operations such as cross-product, inverse, and multi-table multiplication. Architecturally, SPOOF requires a compiler for LA [16], while Morpheus also works in interpreted LA environments such as R and ORE. Overall, Morpheus is largely complementary to both CLA and SPOOF, and it is interesting future work to integrate these ideas.

Query Optimization. Factorized computations generalize prior work on optimizing SQL aggregates over joins [12,32]. In particular, FDB (“factorized database”) is an in-memory tool that factorizes and optimizes relational algebra (RA) operations over joins [6, 7]. In contrast, our focus is on LA operations with the aim of automatically factorizing many ML algorithms. This raises a grander question of whether LA can be “subsumed” by RA and RDBMSs, which is a long-standing debate that is perhaps yet to be settled [14]. Different systems take different paths: [9,14] build from-scratch systems without an RDBMS, while [1, 33] aim to layer LA on top of an RDBMS even if they do not fully exploit the RDBMS optimizer for LA. Our work is orthogonal to this debate; Morpheus is applicable to both kinds of systems, easily integrates with existing LA systems, provides closure with respect to LA, and does not force ML users to use RA or SQL. Furthermore, our work shows the benefits of database-style optimization ideas for LA operations regardless of the system environment, while also introducing new LA-specific optimizations with no known counterparts in RA. Nevertheless, it is interesting future work to more deeply integrate RA and LA, say, by creating a new intermediate language as suggested in [27]. TensorDB proposed a similar integration to improve the tensor decomposition operation by creating a tensor-relational query framework [22], although it does not target or optimize LA-based ML workloads. There is also a need for benchmarks of LA systems in the spirit of [11]. While these questions are beyond the scope of this paper, such efforts could expose interesting new interactions between LA operations and complex optimizations such as multi-query optimization [31].

7. CONCLUSION AND FUTURE WORK

Factorized ML techniques help improve ML performance over normalized data. But they have hitherto been ad hoc and ML algorithm-specific, which causes a daunting development overhead when applying such ideas to other ML algorithms. Our work takes a major step towards mitigating this overhead by leveraging linear algebra (LA) to represent ML algorithms and factorizing LA. Our framework, Morpheus, generically and automatically factorizes several popular ML algorithms, provides significant performance gains, and can leverage existing LA systems for scalability. As ML-based analytics grows in importance, our work lays a foundation for more research on integrating LA systems with RA operations, as well as a grand unification of LA and RA operations and systems. As for future work, we are working on distributed versions of Morpheus on SystemML and TensorFlow. Another avenue is to include more complex LA operations such as Cholesky decomposition and SVD.

8. REFERENCES

[1] Oracle R Enterprise.

[2] R. r-project.org.

[3] SAP HANA and R.

[4] SparkR. spark.apache.org/R.

[5] M. Abadi et al. TensorFlow: A System for Large-Scale Machine Learning. In OSDI, 2016.

[6] N. Bakibayev et al. Aggregation and Ordering in Factorised Databases. In VLDB, 2013.

[7] N. Bakibayev, D. Olteanu, and J. Zavodny. FDB: A Query Engine for Factorised Relational Databases. In VLDB, 2012.

[8] M. Boehm et al. Hybrid Parallelization Strategies for Large-Scale Machine Learning in SystemML. In VLDB, 2014.

[9] M. Boehm et al. SystemML: Declarative Machine Learning on Spark. In VLDB, 2016.

[10] Z. Cai et al. Simulation of Database-valued Markov Chains Using SimSQL. In SIGMOD, 2013.

[11] Z. Cai et al. A Comparison of Platforms for Implementing and Running Very Large Scale Machine Learning Algorithms. In SIGMOD, 2014.

[12] S. Chaudhuri and K. Shim. Including Group-By in Query Optimization. In VLDB, 1994.

[13] L. Chen et al. Towards Linear Algebra over Normalized Data. https://arxiv.org/abs/1612.07448.

[14] P. Cudre-Mauroux et al. A demonstration of SciDB: A science-oriented DBMS. PVLDB, 2(2):1534–1537, 2009.

[15] L. Elden. Matrix Methods in Data Mining and Pattern Recognition. SIAM, 2007.

[16] T. Elgamal et al. SPOOF: Sum-Product Optimization and Operator Fusion for Large-Scale Machine Learning. In CIDR, 2017.

[17] A. Elgohary et al. Compressed Linear Algebra for Large-scale Machine Learning. In VLDB, 2016.

[18] X. Feng, A. Kumar, B. Recht, and C. Re. Towards a Unified Architecture for in-RDBMS Analytics. In SIGMOD, 2012.

[19] T. Hastie et al. The Elements of Statistical Learning: Data mining, Inference, and Prediction. Springer-Verlag, 2001.

[20] J. Hellerstein et al. The MADlib Analytics Library or MAD Skills, the SQL. In VLDB, 2012.

[21] R. A. Horn and C. R. Johnson. Matrix Analysis. Cambridge University Press, New York, NY, USA, 2nd edition, 2012.

[22] M. Kim and K. S. Candan. TensorDB: In-Database Tensor Manipulation with Tensor-Relational Query Plans. In CIKM, 2014.

[23] T. Kraska et al. MLbase: A Distributed Machine-learning System. In CIDR, 2013.

[24] A. Kumar et al. Demonstration of Santoku: Optimizing Machine Learning over Normalized Data. In VLDB, 2015.

[25] A. Kumar et al. Learning Generalized Linear Models Over Normalized Data. In SIGMOD, 2015.

[26] A. Kumar et al. To Join or Not to Join? Thinking Twice about Joins before Feature Selection. In SIGMOD, 2016.

[27] A. Kunft et al. Bridging the Gap: Towards Optimization Across Linear and Relational Algebra. In SIGMOD BeyondMR Workshop, 2016.

[28] R. Ramakrishnan and J. Gehrke. Database Management Systems. McGraw-Hill, Inc., 2003.

[29] S. Rendle. Scaling Factorization Machines to Relational Data. In VLDB, 2013.

[30] M. Schleich et al. Learning Linear Regression Models over Factorized Joins. In SIGMOD, 2016.

[31] T. K. Sellis. Multiple-Query Optimization. ACM TODS, 13(1):23–52, Mar. 1988.

[32] W. P. Yan and P.-A. Larson. Eager Aggregation and Lazy Aggregation. In VLDB, 1995.

[33] Y. Zhang et al. I/O-Efficient Statistical Computing with RIOT. In ICDE, 2010.

APPENDIX

A. DISCUSSION ABOUT MATRIX SOLVE

In practice, we have observed that the number of features is typically much less than that of tuples. Thus, the materialized matrix T is often not square and therefore not invertible. When T is indeed square, we have the following theorem:

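Theorem A.1. Consider $T_{n_S \times (d_S + d_R)} = [S_{n_S \times d_S},\ FK_{n_S \times n_R} \cdot R_{n_R \times d_R}]$. If $T$ is invertible, then

$$TR \le \frac{1}{FR} + 1, \qquad (1)$$

where $TR = \frac{n_S}{n_R}$ and $FR = \frac{d_R}{d_S}$.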

Proof. Invertibility of $T$ implies that $FK \cdot R$ has full column rank, which implies that $R$ has full column rank and thus $d_R \le n_R$. Noting that $T$ is square, we have

$$n_S = d_S + d_R = d_R \left(\frac{1}{FR} + 1\right) \le n_R \left(\frac{1}{FR} + 1\right). \qquad (2)$$

Moving $n_R$ to the left side, we obtain the result $TR \le \frac{1}{FR} + 1$ and thus complete the proof.

The theorem above indicates that invertibility of T implies low redundancy.
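As a quick illustration with made-up numbers (an illustrative choice, not taken from the experiments), suppose $R$ has four times as many features as $S$. Then

$$FR = 4 \;\Rightarrow\; TR \le \frac{1}{4} + 1 = 1.25,$$

so a square, invertible $T$ would need $n_S \le 1.25\, n_R$; that is, each tuple of $R$ would be repeated at most 1.25 times on average in the join output.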

B. DOUBLE MATRIX MULTIPLICATION (DMM)

This is our name for the operation of multiplying two normalized matrices. While this scenario is rare in ML over a two-table join, it arises over multi-table joins (more in Section 3.5). Thus, we need to be able to rewrite this operation. Let the left normalized matrix be denoted A = (SA, FKA, RA) and the right, B = (SB, FKB, RB). The dimensions of a data matrix X ∈ {SA, RA, SB, RB} are nX × dX, and dA = dSA + dRA. Let SB1 and SB2 denote SB[1 : dSA, ] and SB[(dSA + 1) :, ] respectively. Similarly, let FKB1 and FKB2 denote FKB[1 : dSA, ] and FKB[(dSA + 1) :, ] respectively. Note that dA = nB. The rewrite is as follows:

A %*% B → cbind(SA %*% SB1 +
                FKA %*% (RA %*% SB2),
                (SA %*% FKB1) %*% RB +
                FKA %*% ((RA %*% FKB2) %*% RB))
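The DMM rewrite above can be sanity-checked on a toy instance. The following is a minimal sketch in pure Python (matrices as lists of rows), reading the second cbind argument as the sum of the two FKB terms. All helper names (`matmul`, `indicator`, `materialize`, `dmm_factorized`) and the sample data are hypothetical and are not part of Morpheus.

```python
# Illustrative sketch only; helper names and data are assumptions.

def matmul(X, Y):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def add(X, Y):
    """Element-wise sum of two matrices of equal shape."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def cbind(X, Y):
    """Column-wise concatenation, like R's cbind."""
    return [rx + ry for rx, ry in zip(X, Y)]

def indicator(keys, n_r):
    """Indicator matrix FK: row k has a single 1 in column keys[k]."""
    return [[1 if j == k else 0 for j in range(n_r)] for k in keys]

def materialize(S, FK, R):
    """Denormalized matrix T = [S, FK %*% R]."""
    return cbind(S, matmul(FK, R))

def dmm_factorized(SA, FKA, RA, SB, FKB, RB):
    """A %*% B computed from the base tables, following the rewrite."""
    d_sa = len(SA[0])
    SB1, SB2 = SB[:d_sa], SB[d_sa:]        # row split of SB
    FKB1, FKB2 = FKB[:d_sa], FKB[d_sa:]    # row split of FKB
    left = add(matmul(SA, SB1), matmul(FKA, matmul(RA, SB2)))
    right = add(matmul(matmul(SA, FKB1), RB),
                matmul(FKA, matmul(matmul(RA, FKB2), RB)))
    return cbind(left, right)

# A is 4 x 3 (dSA = 2, dRA = 1); B is 3 x 4 (dSB = 2, dRB = 2), so dA = nB.
SA = [[1, 2], [3, 4], [5, 6], [7, 8]]
FKA = indicator([0, 1, 1, 0], 2)
RA = [[1], [2]]
SB = [[1, 0], [2, 1], [0, 3]]
FKB = indicator([0, 1, 0], 2)
RB = [[1, 2], [3, 4]]

expected = matmul(materialize(SA, FKA, RA), materialize(SB, FKB, RB))
assert dmm_factorized(SA, FKA, RA, SB, FKB, RB) == expected
```

Integer entries are used so that the comparison with the materialized product is exact.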

Transposed DMM. First, we consider both normalized matrix inputs (A and B) being transposed. The output is a regular matrix.

t(A) %*% t(B)→ t(B %*% A)

Now, we consider a normalized matrix multiplied with a transposed normalized matrix (and vice versa). These are generalizations of the Gram matrix and Gramian matrices. We are given two normalized matrices A and B, similar to the case of double matrix multiplication. We need to rewrite A %*% t(B) and t(A) %*% B, both of which yield regular matrices as outputs. For A %*% t(B), there are three cases: (1) dSA = dSB, (2) dSA < dSB, and (3) dSA > dSB. The rewrite for case (1) is as follows:

A %*% (t(B))→ SA %*% t(SB)+

FKA %*% (RA %*% t(RB)) %*% t(FKB)

As for case (2), let SB1 = SB[, 1 : dSA], SB2 = SB[, (dSA + 1) : dSB], RA1 = RA[, 1 : (dSB − dSA)], and RA2 = RA[, (dSB − dSA + 1) : dRA]. Note that we have dSA + dRA = dSB + dRB. The rewrite for case (2) is as follows:

A %*% (t(B))→ SA %*% t(SB1)+

FKA %*% (RA1 %*% t(SB2))+

FKA %*% (RA2 %*% t(RB)) %*% t(FKB)
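Case (2) can be checked in the same spirit. The sketch below, again in pure Python with hypothetical helper names and made-up data, splits SB and RA by columns as described and compares the three-term sum against the materialized product. It assumes dSA < dSB strictly and does not handle cases (1) or (3).

```python
# Illustrative sketch only; helper names and data are assumptions.

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def add(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def cbind(X, Y):
    return [rx + ry for rx, ry in zip(X, Y)]

def cols(X, lo, hi):
    """Columns lo..hi-1 of X (0-based, half-open)."""
    return [row[lo:hi] for row in X]

def indicator(keys, n_r):
    return [[1 if j == k else 0 for j in range(n_r)] for k in keys]

def a_tb_case2(SA, FKA, RA, SB, FKB, RB):
    """A %*% t(B) for dSA < dSB, computed from the base tables."""
    d_sa, d_sb = len(SA[0]), len(SB[0])
    assert d_sa < d_sb, "this sketch covers case (2) only"
    SB1, SB2 = cols(SB, 0, d_sa), cols(SB, d_sa, d_sb)
    RA1 = cols(RA, 0, d_sb - d_sa)
    RA2 = cols(RA, d_sb - d_sa, len(RA[0]))
    t1 = matmul(SA, transpose(SB1))
    t2 = matmul(FKA, matmul(RA1, transpose(SB2)))
    t3 = matmul(matmul(FKA, matmul(RA2, transpose(RB))), transpose(FKB))
    return add(add(t1, t2), t3)

# dSA = 1, dRA = 3, dSB = 2, dRB = 2, so dSA + dRA = dSB + dRB = 4.
SA = [[1], [2], [3]]
FKA = indicator([0, 1, 0], 2)
RA = [[1, 2, 3], [4, 5, 6]]
SB = [[1, 2], [3, 4]]
FKB = indicator([1, 0], 2)
RB = [[1, 0], [2, 1]]

TA = cbind(SA, matmul(FKA, RA))   # materialized A, 3 x 4
TB = cbind(SB, matmul(FKB, RB))   # materialized B, 2 x 4
assert a_tb_case2(SA, FKA, RA, SB, FKB, RB) == matmul(TA, transpose(TB))
```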

Finally, case (3) can be recast as case (2) with a transpose:

A %*% (t(B))→ t(B %*% t(A))

As for t(A) %*% B, there is only one case but the rewrite is more complex.

t(A) %*% B → cbind(rbind(t(SA) %*% SB,

t(RA) %*% (t(FKA) %*% SB)),

rbind((t(SA) %*% FKB) %*% RB,

t(RA) %*% t(FKA) %*% FKB %*% RB))
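The four-tile structure of this rewrite can be sketched as follows in pure Python. Helper names and data are hypothetical. For the fourth tile, the sketch computes P = t(FKA) %*% FKB first; this is one possible multiplication order chosen for illustration, not a recommendation from the paper.

```python
# Illustrative sketch only; helper names and data are assumptions.

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def cbind(X, Y):
    return [rx + ry for rx, ry in zip(X, Y)]

def rbind(X, Y):
    """Row-wise concatenation, like R's rbind."""
    return X + Y

def indicator(keys, n_r):
    return [[1 if j == k else 0 for j in range(n_r)] for k in keys]

def ta_b_factorized(SA, FKA, RA, SB, FKB, RB):
    """t(A) %*% B computed tile by tile from the base tables."""
    tSA, tRA, tFKA = transpose(SA), transpose(RA), transpose(FKA)
    P = matmul(tFKA, FKB)                       # nRA x nRB co-occurrence counts
    left = rbind(matmul(tSA, SB),
                 matmul(tRA, matmul(tFKA, SB)))
    right = rbind(matmul(matmul(tSA, FKB), RB),
                  matmul(tRA, matmul(P, RB)))   # fourth tile, P-first order
    return cbind(left, right)

# A and B share the same number of rows (nA = nB = 3).
SA = [[1, 2], [3, 4], [5, 6]]
FKA = indicator([0, 1, 1], 2)
RA = [[2], [3]]
SB = [[1], [0], [2]]
FKB = indicator([1, 0, 1], 2)
RB = [[1, 2], [3, 4]]

TA = cbind(SA, matmul(FKA, RA))   # materialized A, 3 x 3
TB = cbind(SB, matmul(FKB, RB))   # materialized B, 3 x 3
assert ta_b_factorized(SA, FKA, RA, SB, FKB, RB) == matmul(transpose(TA), TB)
```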

An interesting thing to note in the above rewrite is that it is not straightforward to determine what the order of matrix multiplication should be for the fourth tile, viz., t(RA) %*% t(FKA) %*% FKB %*% RB. If we compute the product FKB %*% RB first, we are effectively materializing B. Thus, we might need to compute the product t(FKA) %*% FKB = P (say) first, but this temporary output matrix could become a dense nRA × nRB matrix, which could be quite large depending on these dimensions. This requires a deeper understanding of the sparsity of P. We provide the following results that bound nnz(P) from both below and above.
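To make the sparsity question concrete before the formal bounds, here is a small pure-Python sketch. It represents FKA and FKB by their key columns (an assumption of this sketch; `fka[k]` is the RA row that row k points to) and builds P as a dictionary of co-occurrence counts, since entry (i, j) of P counts the rows k with `fka[k] == i` and `fkb[k] == j`. The function name and data are hypothetical. The sketch assumes every tuple of RA and RB is referenced at least once.

```python
from collections import Counter

def sparse_p(fka, fkb):
    """P = t(FKA) %*% FKB as {(i, j): count}, built without densifying."""
    return Counter(zip(fka, fkb))

# Six joined rows; RA has 3 tuples and RB has 2, all of them referenced.
fka = [0, 1, 2, 0, 1, 2]
fkb = [0, 0, 1, 1, 0, 1]
n_ra, n_rb = 3, 2

P = sparse_p(fka, fkb)
nnz = len(P)   # number of distinct (i, j) pairs, i.e., nonzero entries of P

# Lower bound as in Theorem B.1; the upper bound here is just the trivial
# counting bound of this sketch (at most one new pair per row, and at most
# nRA * nRB cells), not a result quoted from the paper.
assert max(n_ra, n_rb) <= nnz <= min(len(fka), n_ra * n_rb)
```

In this toy instance P has 4 nonzero entries out of 6 cells; with larger nRA and nRB the gap between nnz(P) and the dense size nRA × nRB is what determines whether computing P first is affordable.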

Theorem B.1. nnz(P) ≥ max{nRA, nRB}.

Proof. Let $A_{i,\cdot}$, $A_{\cdot,j}$, and $A_{i,j}$ denote the ith row, the jth column, and the entry in the ith row and jth column of matrix A, respectively.