Signal Processing 1 Representation and Approximation in Vector Spaces Univ.-Prof.,Dr.-Ing. Markus Rupp WS 18/19 Th 14:00-15:30EI3A, Fr 8:45-10:00EI4 LVA 389.166 Last change: 6.12.2018


Learning Goals

Representation and Approximation in Vector Spaces (4 units, Chapter 3)
- Approximation problem in the Hilbert space (Ch 3.1)
- Orthogonality principle (Ch 3.2)
- Minimization with gradient method (Ch 3.3)
- Least Squares Filtering (Ch 3.4-3.9, 3.15-3.16): linear regression, parametric estimation, iterative LS problem
- Signal transformation and generalized Fourier series
- Examples for orthogonal functions, Wavelets (Ch 3.17-3.18)

Motivation

The success of modern communication techniques is based on the capability to transmit information under constrained bandwidth and in distorted environments without losing much quality.

This success rests on principles of source coding that reduce the redundancy of signals in order to obtain low data rates.

This in turn requires an approximation of the signals.

Vector Spaces

At this point several interesting questions arise:

- Under which conditions is the linear combination of vectors unique?
- Which is the smallest set of vectors required to describe every vector in S by a linear combination?
- If a vector x can be described by a linear combination of $p_i$, $i=1..m$, how do we get the linear weights $c_i$, $i=1..m$?
- Of which form do the vectors $p_i$, $i=1..m$, need to be in order to reach every point x in S?
- If x cannot be described exactly by a linear combination of $p_i$, $i=1..m$, how can it be approximated in the best way (smallest error)?

Approximation in the Hilbert Space

Problem: Let $(S,\|\cdot\|)$ be a linear, normed vector space, $T=\{p_1,p_2,\dots,p_m\}$ a subset of linearly independent vectors from S, and $V=\mathrm{span}(T)$. Given a vector x from S, find the coefficients $c_1,\dots,c_m$ so that x is approximated in the best sense by the linear combination

$$\hat{x} = c_1p_1 + c_2p_2 + \dots + c_mp_m,$$

that is, so that the error

$$e = x - \hat{x}$$

becomes minimal.

Approximation in the Hilbert Space

In order to minimize e, we need to measure it with a norm. With an $l_1$- or $l_\infty$-norm, the problem would become mathematically very difficult to treat.

However, utilizing the induced $l_2$-norm, we typically obtain quadratic equations, solvable by simple derivatives.

Later, we will also introduce iterative LS methods that can be used to solve problems in other norms.

Approximation in the Hilbert Space

Note that if x is in V, the error can become zero. However, if x is not in V, the error cannot vanish; we can only find the smallest possible value of $\|e\|_2$.

Application: Let x be a signal to transmit. The vectors in T allow us to find an approximate solution with a very small error $\|e\|_2$. The receiver knows T, so we only need to transmit the m coefficients $c_1,\dots,c_m$. If the number m of coefficients is much smaller than the number of samples in x, we obtain a considerable data reduction.

Approximation in the Hilbert Space

In order to visualize the problem, let us first consider a single real-valued vector, $T=\{p_1\}$. We thus have $x = c_1p_1 + e$. To minimize:

$$\min_{c_1}\|e\|_2^2 = \min_{c_1}\|x-c_1p_1\|_2^2 = \min_{c_1}(x-c_1p_1)^T(x-c_1p_1)$$

$$\frac{\partial}{\partial c_1}(x-c_1p_1)^T(x-c_1p_1) = \frac{\partial}{\partial c_1}\left(x^Tx - c_1x^Tp_1 - c_1p_1^Tx + c_1^2p_1^Tp_1\right) = -x^Tp_1 - p_1^Tx + 2c_1p_1^Tp_1 = 0$$

$$c_{1,LS} = \frac{x^Tp_1}{p_1^Tp_1} = \frac{\langle x,p_1\rangle}{\|p_1\|_2^2}$$
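The single-vector case can be checked numerically. The following is a minimal sketch, assuming NumPy and two made-up vectors `x` and `p1` (hypothetical values, not taken from the lecture); it evaluates $c_{1,LS} = \langle x,p_1\rangle/\|p_1\|_2^2$ and the resulting error.

```python
import numpy as np

# Hypothetical example data (not from the lecture).
x = np.array([3.0, 1.0])
p1 = np.array([2.0, 0.0])

c1_ls = (x @ p1) / (p1 @ p1)   # c_{1,LS} = <x, p1> / ||p1||^2
e_ls = x - c1_ls * p1          # minimal error vector

print(c1_ls)        # 1.5
print(e_ls @ p1)    # 0.0: the minimal error is orthogonal to p1
```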

9Univ.-Prof. Dr.-Ing.

Markus Rupp

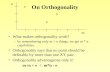

Approximation in the Hilbert Space Geometric interpretation:

It appears that the minimal error eLS and p1 are orthogonal onto each other.

p1c1p1

x

eLSe e‘

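Approximation in the Hilbert Space

Note that the problem is not restricted to vectors. We can compute it more generally for objects in a linear vector space (vectors, functions, series) with an induced $l_2$-norm. Let $e = x - c_1p_1$:

$$\min_{c_1}\|e\|_2^2 = \min_{c_1}\|x-c_1p_1\|_2^2$$

$$\frac{\partial}{\partial c_1}\|x-c_1p_1\|_2^2 = \frac{\partial}{\partial c_1}\langle x-c_1p_1,\,x-c_1p_1\rangle = -\langle p_1,\,x-c_1p_1\rangle - \langle x-c_1p_1,\,p_1\rangle = 0$$

$$c_{1,LS} = \frac{\langle x,p_1\rangle}{\langle p_1,p_1\rangle} = \frac{\langle x,p_1\rangle}{\|p_1\|_2^2}$$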

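Approximation in the Hilbert Space

Precise analysis shows that the coefficient is indeed chosen so that the error e is orthogonal to $p_1$:

$$\langle e_{LS},p_1\rangle = \langle x-c_{1,LS}p_1,\,p_1\rangle = \langle x,p_1\rangle - c_{1,LS}\langle p_1,p_1\rangle = \langle x,p_1\rangle - \frac{\langle x,p_1\rangle}{\langle p_1,p_1\rangle}\langle p_1,p_1\rangle = 0$$

Note also that:

$$\|e_{LS}\|_2^2 = \langle e_{LS},e_{LS}\rangle = \langle e_{LS},\,x-c_{1,LS}p_1\rangle = \langle e_{LS},x\rangle - c_{1,LS}\langle e_{LS},p_1\rangle = \langle e_{LS},x\rangle$$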

Approximation in the Hilbert Space

Which multiple of $p_1$ leads to the smallest difference (error)? Which point on the line spanned by $p_1$ is closest to x?

[Figure: the vector x and the direction $p_1$.]

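Approximation in the Hilbert Space

We need to proceed systematically to show how the approximation works with more than one vector. We would like to approximate x by a linear combination

$$\hat{x} = c_1p_1 + c_2p_2 + \dots + c_mp_m$$

so that the norm of the error becomes minimal. Thus, with $\partial e/\partial c_k = -p_k$:

$$\frac{\partial}{\partial c_k}\|e\|_2^2 = \frac{\partial}{\partial c_k}e^Te = \frac{\partial e^T}{\partial c_k}e + e^T\frac{\partial e}{\partial c_k} = 0;\quad k=1,2,\dots,m$$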

Approximation in the Hilbert Space

$$\frac{\partial}{\partial c_k}\|e\|_2^2 = \frac{\partial e^T}{\partial c_k}e + e^T\frac{\partial e}{\partial c_k} = 0;\quad k=1,2,\dots,m$$

$$0 = -p_k^Te - e^Tp_k$$

$$0 = -p_k^T\Big(x-\sum_i c_ip_i\Big) - \Big(x-\sum_i c_ip_i\Big)^Tp_k$$

$$0 = -p_k^Tx - x^Tp_k + p_k^T\sum_i c_ip_i + \sum_i c_ip_i^Tp_k$$

$$p_k^Tx = p_k^T\sum_i c_ip_i = \sum_i c_i\,p_k^Tp_i = \left[p_k^Tp_1,\;p_k^Tp_2,\;\dots,\;p_k^Tp_m\right]c$$

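Approximation in the Hilbert Space

We thus obtain m equations that we can combine in matrix form:

$$\begin{bmatrix}\langle p_1,p_1\rangle & \langle p_2,p_1\rangle & \dots & \langle p_m,p_1\rangle\\ \langle p_1,p_2\rangle & \langle p_2,p_2\rangle & \dots & \langle p_m,p_2\rangle\\ \vdots & & & \vdots\\ \langle p_1,p_m\rangle & \langle p_2,p_m\rangle & \dots & \langle p_m,p_m\rangle\end{bmatrix}\begin{bmatrix}c_{LS,1}\\ c_{LS,2}\\ \vdots\\ c_{LS,m}\end{bmatrix} = \begin{bmatrix}\langle x,p_1\rangle\\ \langle x,p_2\rangle\\ \vdots\\ \langle x,p_m\rangle\end{bmatrix}$$

$$R\,c_{LS} = p$$

The solution of such a matrix equation is called the (linear) Least-Squares solution.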
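The normal equations $Rc_{LS}=p$ can be set up and solved directly. The following is a minimal sketch, assuming NumPy, real-valued vectors, and made-up data; the names `P` (basis vectors as columns), `R`, `p`, and `c_ls` are illustrative choices and not from the lecture.

```python
import numpy as np

# Hypothetical basis vectors (columns of P) and observation x.
P = np.array([[1.0, 1.0],
              [0.0, 1.0],
              [0.0, 0.0]])
x = np.array([2.0, 3.0, 4.0])

R = P.T @ P                    # Gramian: R[i, j] = <p_j, p_i> (real case)
p = P.T @ x                    # right-hand side: p[i] = <x, p_i>
c_ls = np.linalg.solve(R, p)   # solve R c = p without forming the inverse

e_ls = x - P @ c_ls
print(c_ls)         # coefficients of the best approximation
print(P.T @ e_ls)   # approximately zero: the error is orthogonal to every p_i
```

Solving the linear system with `np.linalg.solve` is generally preferable to an explicit matrix inverse; both give the same $c_{LS}$ when R is well conditioned.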

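Resume

Induced norm or not?

$$\|x\|_2 = \sqrt{x^Hx},\qquad \|A\|_2 = \sup_{x\neq 0}\frac{\|Ax\|_2}{\|x\|_2},\qquad \|A\|_1 = \sup_{x\neq 0}\frac{\|Ax\|_1}{\|x\|_1}$$

All three are induced: the vector norm by the inner product, the matrix norms by the corresponding vector norms:

$$\|x\|_2 = \sqrt{\langle x,x\rangle},\qquad \|A\|_{2,\mathrm{ind}} = \sup_{x\neq 0}\frac{\|Ax\|_2}{\|x\|_2},\qquad \|A\|_{1,\mathrm{ind}} = \sup_{x\neq 0}\frac{\|Ax\|_1}{\|x\|_1}$$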

Resume

Find with the help of the small gain theorem whether the following linear, time-variant system given in state space form ($A_k$, $B_k$, $C_k$, $D_k$ real-valued) is stable.

$$z_{k+1} = A_kz_k + B_kx_k$$

$$y_k = C_kz_k + D_kx_k$$

Choice of norm?

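Resume

Choose the induced 2-norm (spectral norm), since

$$\|A_k\|_{2,\mathrm{ind}} = \sup_{x\neq 0}\sqrt{\frac{x^TA_k^TA_kx}{x^Tx}} = \sqrt{\lambda_{\max}(A_k^TA_k)}$$

With the triangle inequality and the submultiplicative property we obtain:

$$\|z_{k+1}\|_2 \le \|A_k\|_{2,\mathrm{ind}}\|z_k\|_2 + \|B_k\|_{2,\mathrm{ind}}\|x_k\|_2$$

$$\|y_k\|_2 \le \|C_k\|_{2,\mathrm{ind}}\|z_k\|_2 + \|D_k\|_{2,\mathrm{ind}}\|x_k\|_2$$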

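Resume

The small gain theorem provides us with: stability of the state equation is given for $\|A_k\|_{2,\mathrm{ind}} < 1$.

$$z_{k+1} = A_kz_k + B_kx_k$$

$$y_k = C_kz_k + D_kx_k$$

[Figure: feedback loop of the state equation with the delay $q^{-1}$ in the forward path and $-A_k$ in the feedback path, driven by $B_kx_k$ (with $u_N=0$), followed by the output branch $C_k$.]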

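Resume

Alternative approach by triangle inequality and summation:

$$\|z_{k+1}\|_2 \le \|A_k\|_{2,\mathrm{ind}}\|z_k\|_2 + \|B_k\|_{2,\mathrm{ind}}\|x_k\|_2$$

$$\|z_{k+2}\|_2 \le \|A_{k+1}\|_{2,\mathrm{ind}}\|z_{k+1}\|_2 + \|B_{k+1}\|_{2,\mathrm{ind}}\|x_{k+1}\|_2$$

$$\sum_{k=0}^{N}\|z_{k+1}\|_2 \le \sum_{k=0}^{N}\|A_k\|_{2,\mathrm{ind}}\|z_k\|_2 + \sum_{k=0}^{N}\|B_k\|_{2,\mathrm{ind}}\|x_k\|_2$$

$$\sum_{k=0}^{N}\|z_{k+1}\|_2 \le \|A_0\|_{2,\mathrm{ind}}\|z_0\|_2 + \max_k\|A_k\|_{2,\mathrm{ind}}\sum_{k=0}^{N}\|z_{k+1}\|_2 + \sum_{k=0}^{N}\|B_k\|_{2,\mathrm{ind}}\|x_k\|_2$$

$$\sum_{k=0}^{N}\|z_{k+1}\|_2 \le \frac{\|A_0\|_{2,\mathrm{ind}}\|z_0\|_2 + \sum_{k=0}^{N}\|B_k\|_{2,\mathrm{ind}}\|x_k\|_2}{1-\max_k\|A_k\|_{2,\mathrm{ind}}}$$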

Resume

Thus we obtain $l_2$-stability of the time-variant system if:

$$1)\quad \max_k\|A_k\|_{2,\mathrm{ind}} = \max_k\sqrt{\lambda_{\max}(A_k^TA_k)} < 1$$

$$2)\quad \|B_k\|,\ \|C_k\|,\ \|D_k\| \le \mathrm{const.}$$

We did not use any specifics of $l_2$. Thus, any $l_p$-norm would have worked equally well.

Resume

Consider the following problem: a signal x is measured by n sensors so that:

$$r = \begin{bmatrix}r_1\\ r_2\\ \vdots\\ r_n\end{bmatrix} = \begin{bmatrix}h_1\\ h_2\\ \vdots\\ h_n\end{bmatrix}x + \begin{bmatrix}v_1\\ v_2\\ \vdots\\ v_n\end{bmatrix} = hx + v$$

Design a linear filter g for r so that the SNR of its output $g^Tr$ becomes maximal.

Assume that $E[vv^T]=\sigma_v^2I$.

Resume

With $r = hx + v$:

$$\max_g \mathrm{SNR}(g^Tr) = \max_g\frac{E\left[|g^Thx|^2\right]}{E\left[|g^Tv|^2\right]} = \max_g\frac{|g^Th|^2E[|x|^2]}{g^TE[vv^T]g} = \max_g\frac{|g^Th|^2E[|x|^2]}{\sigma_v^2\,g^Tg} = \max_g\frac{|g^Th|^2}{g^Tg\;h^Th}\cdot\frac{h^Th\,E[|x|^2]}{\sigma_v^2}$$

Due to Cauchy-Schwarz, the maximum is obtained for $g=\alpha h$:

$$\max \mathrm{SNR} = \frac{h^Th\,E[|x|^2]}{\sigma_v^2}$$

The quality increases linearly with the number of sensors. The solution is called Maximum Ratio Combiner (MRC).

Resume

A linear vector space on which an inner product is defined is called a pre-Hilbert space or inner product space.

To obtain a Hilbert space, we must have the additional properties:
1) a norm (induced by the inner product)
2) completeness

Resume

How does the method of Least Squares work?
1) have a vector x (observation) from S
2) have a set of linearly independent vectors $p_i$ that span V (subset of S)
3) compute the coefficients $c_i$ of the linear combination so that, with $e = x - c_1p_1 - c_2p_2 - \dots - c_mp_m$:

$$\min_{c_i}\|e\|_2^2 = \min_{c_i}\Big\|x-\sum_i c_ip_i\Big\|_2^2 = \min_{c_i}\|x-\hat{x}\|_2^2$$

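Resume

4) Obtain a linear matrix equation with Gramian R:

$$\begin{bmatrix}\langle p_1,p_1\rangle & \langle p_2,p_1\rangle & \dots & \langle p_m,p_1\rangle\\ \langle p_1,p_2\rangle & \langle p_2,p_2\rangle & \dots & \langle p_m,p_2\rangle\\ \vdots & & & \vdots\\ \langle p_1,p_m\rangle & \langle p_2,p_m\rangle & \dots & \langle p_m,p_m\rangle\end{bmatrix}\begin{bmatrix}c_1\\ c_2\\ \vdots\\ c_m\end{bmatrix} = \begin{bmatrix}\langle x,p_1\rangle\\ \langle x,p_2\rangle\\ \vdots\\ \langle x,p_m\rangle\end{bmatrix},\qquad Rc = p$$

5) Solve for c by matrix inversion, or, if the basis is orthonormal, simply as c = p.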

Approximation in the Hilbert Space

Whether such a matrix equation has a unique solution depends solely on the matrix R.

Definition 3.1: An m x m matrix R built by inner products of $p_i$, $i=1,2,\dots,m$, from T is called the Gramian (Ger.: Gramsche) of the set T:

$$R = \begin{bmatrix}\langle p_1,p_1\rangle & \langle p_2,p_1\rangle & \dots & \langle p_m,p_1\rangle\\ \langle p_1,p_2\rangle & \langle p_2,p_2\rangle & \dots & \langle p_m,p_2\rangle\\ \vdots & & & \vdots\\ \langle p_1,p_m\rangle & \langle p_2,p_m\rangle & \dots & \langle p_m,p_m\rangle\end{bmatrix}$$

We find:

$$R_{ij} = \langle p_j,p_i\rangle,\qquad R = R^H$$

Jørgen Pedersen Gram (* 27.6.1850; † 29.4.1916), Danish mathematician.

Approximation in the Hilbert Space

For the Gramian R defined above we find:

$$R_{ij} = \langle p_j,p_i\rangle,\qquad R = R^H$$

Sufficient: R needs to be positive-definite in order to obtain a unique solution.

Definition 3.2: A matrix R is called positive-definite if for arbitrary vectors q unequal to zero:

$$q^HRq > 0$$

Approximation in the Hilbert Space

Theorem 3.1: The Gramian matrix R is positive semi-definite. It is positive-definite if and only if the elements $p_1,p_2,\dots,p_m$ are linearly independent.

Proof: Let $q^T=[q_1,q_2,\dots,q_m]$ be an arbitrary vector:

$$q^HRq = \sum_{i=1}^m\sum_{j=1}^m q_i^*R_{ij}q_j = \sum_{i=1}^m\sum_{j=1}^m q_i^*\langle p_j,p_i\rangle q_j = \sum_{i=1}^m\sum_{j=1}^m\langle q_jp_j,\,q_ip_i\rangle = \Big\langle\sum_{j=1}^m q_jp_j,\ \sum_{i=1}^m q_ip_i\Big\rangle = \Big\|\sum_{i=1}^m q_ip_i\Big\|_2^2 \ge 0$$

Approximation in the Hilbert Space

If R is not positive-definite, then a vector q must exist (unequal to the zero vector) so that:

$$q^HRq = 0$$

Thus also:

$$\Big\|\sum_{i=1}^m q_ip_i\Big\|_2^2 = 0 \quad\Rightarrow\quad \sum_{i=1}^m q_ip_i = 0$$

This means that the elements $p_1,p_2,\dots,p_m$ are linearly dependent.

Approximation in the Hilbert Space

Note that solving via the matrix inverse of the Gramian has a high computational complexity. This can be reduced considerably if the basis elements $p_1,p_2,\dots,p_m$ are chosen orthogonal (orthonormal).

In this case the Gramian becomes a diagonal (identity) matrix.

We thus concentrate on the search for orthonormal bases.

Orthogonality Principle

Theorem 3.2: Let $(S,\|\cdot\|_2)$ be a linear, normed vector space, $T=\{p_1,p_2,\dots,p_m\}$ a subset of linearly independent vectors from S, and $V=\mathrm{span}(T)$. Given a vector x from S, the coefficients $c_1,\dots,c_m$ of the linear combination

$$\hat{x} = c_1p_1 + c_2p_2 + \dots + c_mp_m$$

minimize the error e in the induced $l_2$-norm if and only if the error vector $e_{LS}$ is orthogonal to all vectors in T.

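Orthogonality Principle

Proof: to show (by substitution)

$$\langle e,p_j\rangle = 0 \quad\text{for all } j$$

$$\Big\langle x-\sum_{i=1}^m c_ip_i,\ p_j\Big\rangle = \langle x,p_j\rangle - \sum_{i=1}^m c_i\langle p_i,p_j\rangle = 0 \quad\rightarrow\quad p - Rc = 0$$

This also follows from $\min\|e\|_2$.

Note: since $e_{LS}$ is orthogonal to every vector $p_j$, $e_{LS}$ must also be orthogonal to the estimate:

$$\langle e_{LS},\hat{x}\rangle = \Big\langle e_{LS},\ \sum_{i=1}^m c_ip_i\Big\rangle = 0$$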

Orthogonality Principle

Example 3.1: A nonlinear system f(x) is excited harmonically. Which amplitudes do the harmonics have?

One possibility to solve this problem is to approximate the nonlinear system by polynomials. For each polynomial term the harmonics can be pre-computed, and the summation of all terms results in the desired solution. For high-order polynomials this can become very tedious.

An alternative possibility is to assume the output as given:

$$f(\sin(x)) = a_0 + a_1\sin(x) + a_2\sin(2x) + \dots + b_1\cos(x) + b_2\cos(2x) + \dots$$

$$e(x) = f(\sin(x)) - \big(\hat{a}_0 + \hat{a}_1\sin(x) + \dots + \hat{a}_m\sin(mx) + \hat{b}_1\cos(x) + \hat{b}_2\cos(2x) + \dots + \hat{b}_m\cos(mx)\big)$$

Since the functions form an orthogonal basis, the results are readily computed by LS.

Gradient Method

If the vectors $p_1,p_2,\dots,p_m$ are not given as an orthonormal (orthogonal) set, the matrix inversion can be difficult (high complexity, numerically challenging).

A possible solution is an iterative gradient method, also called the steepest descent method (Ger.: Verfahren des stärksten Abfalls).

Rc=p is solved iteratively for c:

$$c_{k+1} = c_k + \mu\,(p - Rc_k)$$

Instead of a matrix inversion, a matrix multiplication is used several times.

Gradient Method

The selection of the step-size µ is important here:
- If µ is too small, many iterations have to be performed.
- If µ is too large, the method does not converge.

More about such iterative and adaptive methods in the lecture on “Adaptive Filters 389.167”.

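Gradient Method

Example 3.2: The matrix equation Rc=p with the positive-definite matrix R is to be solved:

$$\begin{bmatrix}2 & 1\\ 1 & 3\end{bmatrix}c = \begin{bmatrix}2\\ 1\end{bmatrix} \quad\Rightarrow\quad c = \begin{bmatrix}1\\ 0\end{bmatrix}$$

Start with $c_0 = [0,0]^T$ and $\mu = 0.3$:

$$c_1 = c_0 + \mu(p - Rc_0) = \begin{bmatrix}0\\ 0\end{bmatrix} + 0.3\left(\begin{bmatrix}2\\ 1\end{bmatrix} - \begin{bmatrix}2 & 1\\ 1 & 3\end{bmatrix}\begin{bmatrix}0\\ 0\end{bmatrix}\right) = \begin{bmatrix}0.6\\ 0.3\end{bmatrix}$$

$$c_2 = c_1 + \mu(p - Rc_1) = \begin{bmatrix}0.6\\ 0.3\end{bmatrix} + 0.3\left(\begin{bmatrix}2\\ 1\end{bmatrix} - \begin{bmatrix}2 & 1\\ 1 & 3\end{bmatrix}\begin{bmatrix}0.6\\ 0.3\end{bmatrix}\right) = \begin{bmatrix}0.75\\ 0.15\end{bmatrix}$$

$$c_{k+1} = c_k + \mu(p - Rc_k)$$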
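The iteration of Example 3.2 can be reproduced in a few lines. The following is a minimal sketch, assuming NumPy and the values $R=[[2,1],[1,3]]$, $p=[2,1]^T$, $\mu=0.3$ as read from the example; the iteration count of 200 and the name `history` are arbitrary illustrative choices.

```python
import numpy as np

R = np.array([[2.0, 1.0],
              [1.0, 3.0]])
p = np.array([2.0, 1.0])
mu = 0.3

c = np.zeros(2)                 # start value c_0
history = [c.copy()]
for k in range(200):
    c = c + mu * (p - R @ c)    # c_{k+1} = c_k + mu (p - R c_k)
    history.append(c.copy())

print(history[1])   # first iterate
print(history[2])   # second iterate
print(c)            # close to the exact solution of R c = p
```

For a symmetric positive-definite R this recursion converges when $0 < \mu < 2/\lambda_{\max}(R)$; here $\lambda_{\max}(R)\approx 3.62$, so $\mu=0.3$ lies inside that range.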

Gradient Method

[Figure: coefficients c(1) and c(2) (vertical axis, 0 to 1) over the number of iterations k (0 to 20).]

Least Squares Filtering

Let us reformulate the problem in matrix form, utilizing a matrix $A=[p_1,p_2,\dots,p_m]$:

$$\hat{x} = [p_1,p_2,\dots,p_m]\,c = Ac$$

$$\langle x-Ac,\ p_j\rangle = 0 \quad\text{(orthogonal to each } p_j\text{)}$$

$$A^H(x-Ac) = 0 \quad\text{(compactly written for all } p_j\text{)}$$

$$A^Hx - A^HAc = 0 \quad\text{with } A^Hx = p,\ A^HA = R$$

$$A^HAc = A^Hx$$

$$c = (A^HA)^{-1}A^Hx = Bx$$

Least Squares Filtering

Definition 3.3: The matrix $B=(A^HA)^{-1}A^H$ is called the pseudoinverse of A (more precisely, the left pseudoinverse). The concept goes back to E. H. Moore (1920) and Roger Penrose (1955).

Note that c is obtained by a linear transformation B of the observation x. The estimate is also obtained by a linear transformation of the observation x:

$$\hat{x} = Ac = A(A^HA)^{-1}A^Hx$$

The matrix $P_A=A(A^HA)^{-1}A^H$ is called projection matrix and deserves closer consideration.

Least Squares Filtering

Let S be a linear vector space that can be constructed from two disjoint (Ger.: disjunkte) subspaces W and V: S=W+V. Thus, each vector x=w+v from S can be uniquely composed of a vector w from W and a vector v from V.

If this construction is unique, then it should be possible to find w and v from x, knowing W and V.

The operator $P_V$ that maps x onto $v=P_Vx$ is called projection operator, or short: projection.

Least Squares Filtering

Definition 3.4: A linear mapping P of a linear vector space onto itself is called a projection if $P=P^2$. Such an operator is called idempotent.

Obviously, $P_A=A(A^HA)^{-1}A^H$ is a projection matrix.

Lemma 3.1: If P is a projection matrix, then I-P is also a projection matrix.

Proof: $(I-P)^2 = I-2P+P^2 = I-P$
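Definition 3.4 and Lemma 3.1 are easy to verify numerically. The following is a minimal sketch, assuming NumPy and a made-up real matrix `A` with full column rank (hypothetical values, not from the lecture).

```python
import numpy as np

# Hypothetical full-column-rank matrix A.
A = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [1.0, 2.0]])

P_A = A @ np.linalg.inv(A.T @ A) @ A.T   # projection onto the column space of A
Q = np.eye(3) - P_A                      # complementary projection I - P

print(np.allclose(P_A @ P_A, P_A))   # True: P is idempotent
print(np.allclose(Q @ Q, Q))         # True: I - P is idempotent as well
print(np.allclose(P_A @ Q, 0))       # True: the two projections annihilate each other
```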

Least Squares Filtering

Thus, we have for the LS error $e_{LS}$:

$$e_{LS} = x-\hat{x} = x-Ac = x - \underbrace{A(A^HA)^{-1}A^H}_{P_V}x = \underbrace{\left(I-A(A^HA)^{-1}A^H\right)}_{P_W}x$$

Therefore, the error is also built by a linear transformation (projection) of the observation x.

Since the LS error $e_{LS}$ and the estimate of x are orthogonal to each other, we have:

$$\|e_{LS}\|_2^2 = e_{LS}^He_{LS} = x^He_{LS} = x^H\left[I-A(A^HA)^{-1}A^H\right]x \le \|x\|_2^2$$

LS as projection

Let $x = v+w$ with $v\in V$, $w\in W$:

$$\hat{x} = A(A^HA)^{-1}A^Hx = A(A^HA)^{-1}A^H(v+w) = v$$

$$e_{LS} = \left(I-A(A^HA)^{-1}A^H\right)x = \left(I-A(A^HA)^{-1}A^H\right)(v+w) = w$$

[Figure: the vector x in S = V + W, decomposed into its components in V and W.]

Least Squares Filtering

Applications:
- Data/Curve Fitting
- Parameter Estimation
- Channel Estimation
- Iterative receiver
- Underdetermined Equations: minimum norm solution, compressed sensing
- Weighted LS: filter design, iterative LS

Least Squares Filtering

Data/Curve fitting: Given are observation data in the form of pairs $(x_i,y_i)$. We assume a specific curve describing the relation between the x and y data. Given the data pairs, we would like to fit them optimally to such a curve.

Example 3.3: Polynomial fit. Given is a function f(x) that is to be fit optimally by a polynomial p(x) of order m (coefficients $c_0,\dots,c_{m-1}$) in the interval [a,b]. For this the following quadratic cost function is selected:

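Least Squares Filtering

Thus

$$\min_{c_0,c_1,\dots,c_{m-1}}\int_a^b\left(f(x)-c_0-c_1x-\dots-c_{m-1}x^{m-1}\right)^2dx$$

Due to the orthogonality principle we know

$$\begin{bmatrix}\langle 1,1\rangle & \langle 1,x\rangle & \dots & \langle 1,x^{m-1}\rangle\\ \langle x,1\rangle & \langle x,x\rangle & \dots & \langle x,x^{m-1}\rangle\\ \vdots & & & \vdots\\ \langle x^{m-1},1\rangle & \langle x^{m-1},x\rangle & \dots & \langle x^{m-1},x^{m-1}\rangle\end{bmatrix}\begin{bmatrix}c_0\\ c_1\\ \vdots\\ c_{m-1}\end{bmatrix} = \begin{bmatrix}\langle f,1\rangle\\ \langle f,x\rangle\\ \vdots\\ \langle f,x^{m-1}\rangle\end{bmatrix}$$

$$\langle x^i,x^j\rangle = \int_a^b x^ix^j\,dx = \frac{b^{i+j+1}-a^{i+j+1}}{i+j+1}$$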

Least Squares Filtering

Normalizing the interval to [0,1], we obtain for the Gramian the so-called Hilbert matrix:

$$\begin{bmatrix}1 & \frac{1}{2} & \dots & \frac{1}{m}\\ \frac{1}{2} & \frac{1}{3} & \dots & \frac{1}{m+1}\\ \vdots & & & \vdots\\ \frac{1}{m} & \frac{1}{m+1} & \dots & \frac{1}{2m-1}\end{bmatrix}$$

For this matrix it is known that it becomes very poorly conditioned for growing order m. It is thus difficult to invert the matrix.
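The poor conditioning of the Hilbert matrix can be observed directly. The following is a minimal sketch, assuming NumPy; the helper `hilbert` is a hypothetical function written for this illustration (zero-based indices, so the entry is $1/(i+j+1)$).

```python
import numpy as np

def hilbert(m):
    """Return the m x m Hilbert matrix with entries 1 / (i + j + 1), i, j = 0..m-1."""
    i, j = np.indices((m, m))
    return 1.0 / (i + j + 1)

for m in (2, 4, 6, 8, 10):
    # The 2-norm condition number grows rapidly with m.
    print(m, np.linalg.cond(hilbert(m)))
```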

Least Squares Filtering

For this reason, (simple) polynomials are typically not used for approximation problems. For small values of m this effect is not so dramatic.

Example 3.4: The function $e^x$ is to be approximated by polynomials. The Taylor series results in $e^x = 1+x+x^2/2+\dots$ LS, on the other hand, delivers $1.013+0.8511x+0.8392x^2$.

If we want the largest error to become minimal, it is not sufficient to minimize the $L_2$-norm; in this case the $L_\infty$-norm is required:

$$\min_{c_0,c_1,\dots,c_{m-1}}\ \lim_{p\to\infty}\left(\int_a^b\left|f(x)-c_0-c_1x-\dots-c_{m-1}x^{m-1}\right|^pdx\right)^{1/p}$$

Brook Taylor: English mathematician (18.8.1685 – 29.12.1731). Colin Maclaurin: Scottish mathematician (2.1698 – 14.6.1746).
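The LS coefficients of Example 3.4 can be reproduced from the normal equations. The following is a minimal sketch, assuming NumPy and the interval [0,1] with the basis $1, x, x^2$ (the interval is an assumption consistent with the Hilbert matrix above); the right-hand side integrals $\int_0^1 e^xdx = e-1$, $\int_0^1 xe^xdx = 1$ and $\int_0^1 x^2e^xdx = e-2$ were evaluated by hand.

```python
import numpy as np

m = 3                                   # three coefficients c_0, c_1, c_2
i, j = np.indices((m, m))
R = 1.0 / (i + j + 1)                   # Gramian of 1, x, x^2 on [0, 1] (Hilbert matrix)

# Right-hand side <e^x, x^j> on [0, 1], integrated by parts in closed form.
p = np.array([np.e - 1.0, 1.0, np.e - 2.0])

c = np.linalg.solve(R, p)
print(c)   # approximately [1.013, 0.8511, 0.8392]
```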


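Least Squares Filtering

Example 3.5: Linear Regression. Probably the most popular application of LS. The intention is to fit a line so that the sum of the squared distances between the observations $y_i$ and the corresponding points on the line becomes minimal.

Start: $y_i = ax_i + b + e_i$

$$\underbrace{\begin{bmatrix}y_1\\ y_2\\ \vdots\\ y_n\end{bmatrix}}_{y} = \begin{bmatrix}ax_1+b+e_1\\ ax_2+b+e_2\\ \vdots\\ ax_n+b+e_n\end{bmatrix} = \underbrace{\begin{bmatrix}x_1 & 1\\ x_2 & 1\\ \vdots & \vdots\\ x_n & 1\end{bmatrix}}_{A}\underbrace{\begin{bmatrix}a\\ b\end{bmatrix}}_{c} + \underbrace{\begin{bmatrix}e_1\\ e_2\\ \vdots\\ e_n\end{bmatrix}}_{e}$$

We thus obtain the LS solution as:

$$c = (A^HA)^{-1}A^Hy$$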
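Linear regression maps directly onto a least-squares solver. The following is a minimal sketch, assuming NumPy and made-up observations that lie exactly on a line (hypothetical data); `np.linalg.lstsq` minimizes $\|y-Ac\|_2$ and returns the same c as $(A^HA)^{-1}A^Hy$ when A has full column rank.

```python
import numpy as np

# Hypothetical observations that lie exactly on y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2.0 * x + 1.0

A = np.column_stack([x, np.ones_like(x)])      # rows [x_i, 1]
c, *_ = np.linalg.lstsq(A, y, rcond=None)      # solves min ||y - A c||_2
a, b = c
print(a, b)   # slope and offset
```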

Least Squares Filtering

Example 3.6: In order to describe observations in terms of simple and compact key parameters, so-called parametric process models are often applied.

A frequently used process is the Auto-Regressive (AR) process, which is built by linear filtering of past values:

$$x_k = a_1x_{k-1} + \dots + a_Px_{k-P} + v_k$$

The (driving) process $v_k$ is a white random process with unit variance.

Least Squares Filtering

AR processes are applied to model strong spectral peaks:

$$x_k = \frac{1}{1-a_1q^{-1}-\dots-a_Pq^{-P}}\,v_k$$

$$s_{xx}(e^{j\Omega}) = \sum_{l=-\infty}^{\infty}E[x_kx_{k+l}]\,e^{-j\Omega l} = \frac{1}{\left|1-a_1e^{-j\Omega}-\dots-a_Pe^{-jP\Omega}\right|^2}$$

[Figure: power spectrum (vertical axis, 0 to 1) over frequency in Hz (0 to 4000).]

Least Squares Filtering

A typical (short-time) spectrum of human speech looks like:

$$s_{xx}(e^{j\Omega}) = \frac{1}{\left|1-a_1e^{-j\Omega}-a_2e^{-j2\Omega}-a_3e^{-j3\Omega}\right|^2}$$

Formants at 120, 530 and 3400 Hz.

Least Squares Filtering

LS methods can be used to estimate such parameters $a_1,\dots,a_P$ of an AR process:

$$x_k = a_1x_{k-1} + a_2x_{k-2} + \dots + a_Px_{k-P} + v_k$$

$$\mathbf{x}_k = \begin{bmatrix}x_k\\ x_{k-1}\\ \vdots\\ x_{k-M}\end{bmatrix} = a_1\begin{bmatrix}x_{k-1}\\ x_{k-2}\\ \vdots\\ x_{k-M-1}\end{bmatrix} + a_2\begin{bmatrix}x_{k-2}\\ x_{k-3}\\ \vdots\\ x_{k-M-2}\end{bmatrix} + \dots + a_P\begin{bmatrix}x_{k-P}\\ x_{k-P-1}\\ \vdots\\ x_{k-M-P}\end{bmatrix} + \begin{bmatrix}v_k\\ v_{k-1}\\ \vdots\\ v_{k-M}\end{bmatrix}$$

For more details of the stochastic background, please refer to the lecture “Signal Processing 2”.

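Least Squares Filtering

Write M+1 observations in a vector:

$$\mathbf{x}_k = a_1\mathbf{x}_{k-1} + a_2\mathbf{x}_{k-2} + \dots + a_P\mathbf{x}_{k-P} + \mathbf{v}_k = [\mathbf{x}_{k-1},\mathbf{x}_{k-2},\dots,\mathbf{x}_{k-P}]\,a + \mathbf{v}_k = X_{P,k}\,a + \mathbf{v}_k$$

An estimate for a can be found from the observation $\mathbf{x}_k$ by minimizing the estimation error:

$$\min_a\left\|\mathbf{x}_k - X_{P,k}\,a\right\|_2^2$$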

Least Squares Filtering

We obtain:

$$X_{P,k}^H\left(\mathbf{x}_k - X_{P,k}\,a\right) = 0$$

$$a = \Big(\underbrace{X_{P,k}^HX_{P,k}}_{\approx R_{xx}}\Big)^{-1}\underbrace{X_{P,k}^H\mathbf{x}_k}_{\approx r_{xx}}$$

This brings the problem back to a Least-Squares problem. The matrix $X_{P,k}^HX_{P,k}$ can be interpreted as an estimate of the ACF matrix and the vector $X_{P,k}^H\mathbf{x}_k$ as an estimate of the autocorrelation vector in the Yule-Walker equations.
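The AR parameter estimate can be tried on synthetic data. The following is a minimal sketch, assuming NumPy, a made-up stable AR(2) process with coefficients `a_true`, and a fixed random seed (all hypothetical choices); the columns of `X` hold the delayed samples, so `X` plays the role of $X_{P,k}$.

```python
import numpy as np

rng = np.random.default_rng(0)
a_true = np.array([0.5, -0.3])          # hypothetical AR(2) coefficients (stable)
P, n = len(a_true), 20000

# Generate x_k = a_1 x_{k-1} + a_2 x_{k-2} + v_k with white, unit-variance v_k.
v = rng.standard_normal(n)
x = np.zeros(n)
for k in range(P, n):
    x[k] = a_true @ x[k - P:k][::-1] + v[k]

# Column i of X holds the samples delayed by i + 1, so X @ a predicts x[P:].
X = np.column_stack([x[P - 1 - i:n - 1 - i] for i in range(P)])
a_hat = np.linalg.solve(X.T @ X, X.T @ x[P:])
print(a_hat)   # close to a_true for long observation windows
```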

Least-Squares Filtering

Example 3.7: Channel estimation. A training sequence $a_k$ with L symbols is sent at the beginning of a TDMA slot to estimate the channel $h_k$ of length 3 (L>3).

$$r_k = h_0a_k + h_1a_{k-1} + h_2a_{k-2} + v_k$$

thus:

$$r_2 = h_0a_2 + h_1a_1 + h_2a_0 + v_2$$

$$r_3 = h_0a_3 + h_1a_2 + h_2a_1 + v_3$$

$$\vdots$$

$$r_{L-1} = h_0a_{L-1} + h_1a_{L-2} + h_2a_{L-3} + v_{L-1}$$

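Least-Squares Filtering

$$r = \begin{bmatrix}r_{L-1}\\ r_{L-2}\\ r_{L-3}\\ \vdots\\ r_2\end{bmatrix} = \underbrace{\begin{bmatrix}h_0 & h_1 & h_2 & & & \\ & h_0 & h_1 & h_2 & & \\ & & \ddots & \ddots & \ddots & \\ & & & h_0 & h_1 & h_2\end{bmatrix}}_{H}\begin{bmatrix}a_{L-1}\\ a_{L-2}\\ \vdots\\ a_0\end{bmatrix} + \begin{bmatrix}v_{L-1}\\ v_{L-2}\\ v_{L-3}\\ \vdots\\ v_2\end{bmatrix} = Ha + v$$

$$r = \underbrace{\begin{bmatrix}a_{L-1} & a_{L-2} & a_{L-3}\\ a_{L-2} & a_{L-3} & a_{L-4}\\ a_{L-3} & a_{L-4} & a_{L-5}\\ \vdots & \vdots & \vdots\\ a_2 & a_1 & a_0\end{bmatrix}}_{A\ \text{(Hankel matrix)}}\begin{bmatrix}h_0\\ h_1\\ h_2\end{bmatrix} + \begin{bmatrix}v_{L-1}\\ v_{L-2}\\ v_{L-3}\\ \vdots\\ v_2\end{bmatrix} = Ah + v$$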

Least-Squares Filtering

With this reformulation the channel can be estimated by the Least-Squares method:

$$r = Ah + v$$

$$\hat{h} = \left[A^HA\right]^{-1}A^Hr$$

Note that the training sequence is already known at the receiver and thus the pseudoinverse $[A^HA]^{-1}A^H$ can be pre-computed.
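The channel estimate can be sketched with a short training sequence. The following is a minimal sketch, assuming NumPy, a made-up ±1 training sequence `a` of length L=7, a made-up channel `h`, and no noise (all hypothetical values chosen so that the result can be checked exactly).

```python
import numpy as np

a = np.array([1.0, 1.0, 1.0, -1.0, -1.0, 1.0, -1.0])   # hypothetical training symbols a_0..a_{L-1}
h = np.array([1.0, 0.5, -0.2])                          # hypothetical channel h_0, h_1, h_2
L = len(a)

# Hankel matrix: the row for r_k (k = L-1 down to 2) is [a_k, a_{k-1}, a_{k-2}].
A = np.array([[a[k], a[k - 1], a[k - 2]] for k in range(L - 1, 1, -1)])
r = A @ h                               # noise-free received samples for this check

B = np.linalg.inv(A.T @ A) @ A.T        # pseudoinverse, can be pre-computed from a
h_hat = B @ r
print(h_hat)   # equals h when there is no noise and A has full column rank
```

With additive noise v, the same `B` would be applied to the noisy r, and `h_hat` would deviate from `h` by `B @ v`.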

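Least-Squares Filtering

Example 3.8: Iterative Receiver. Consider once again the equivalent descriptions:

$$r = \begin{bmatrix}r_{L-1}\\ r_{L-2}\\ r_{L-3}\\ \vdots\\ r_2\end{bmatrix} = \begin{bmatrix}h_0 & h_1 & h_2 & & & \\ & h_0 & h_1 & h_2 & & \\ & & \ddots & \ddots & \ddots & \\ & & & h_0 & h_1 & h_2\end{bmatrix}\begin{bmatrix}a_{L-1}\\ a_{L-2}\\ \vdots\\ a_0\end{bmatrix} + v = Ha + v$$

$$r = \begin{bmatrix}a_{L-1} & a_{L-2} & a_{L-3}\\ a_{L-2} & a_{L-3} & a_{L-4}\\ a_{L-3} & a_{L-4} & a_{L-5}\\ \vdots & \vdots & \vdots\\ a_2 & a_1 & a_0\end{bmatrix}\begin{bmatrix}h_0\\ h_1\\ h_2\end{bmatrix} + \begin{bmatrix}v_{L-1}\\ v_{L-2}\\ v_{L-3}\\ \vdots\\ v_2\end{bmatrix} = Ah + v$$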

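Least-Squares Filtering

Example 3.8: This can be continued after the training, for the symbols L..2L-1:

$$\begin{bmatrix}r_{2L-1}\\ r_{2L-2}\\ r_{2L-3}\\ \vdots\\ r_{L+1}\end{bmatrix} = \begin{bmatrix}h_0 & h_1 & h_2 & & & \\ & h_0 & h_1 & h_2 & & \\ & & \ddots & \ddots & \ddots & \\ & & & h_0 & h_1 & h_2\end{bmatrix}\begin{bmatrix}a_{2L-1}\\ a_{2L-2}\\ \vdots\\ a_{L-1}\end{bmatrix} + \begin{bmatrix}v_{2L-1}\\ v_{2L-2}\\ v_{2L-3}\\ \vdots\\ v_{L+1}\end{bmatrix} = Ha + v$$

$$\begin{bmatrix}r_{2L-1}\\ r_{2L-2}\\ r_{2L-3}\\ \vdots\\ r_{L+1}\end{bmatrix} = \begin{bmatrix}a_{2L-1} & a_{2L-2} & a_{2L-3}\\ a_{2L-2} & a_{2L-3} & a_{2L-4}\\ a_{2L-3} & a_{2L-4} & a_{2L-5}\\ \vdots & \vdots & \vdots\\ a_{L+1} & a_L & a_{L-1}\end{bmatrix}\begin{bmatrix}h_0\\ h_1\\ h_2\end{bmatrix} + \begin{bmatrix}v_{2L-1}\\ v_{2L-2}\\ v_{2L-3}\\ \vdots\\ v_{L+1}\end{bmatrix} = Ah + v$$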

Least-Squares Filtering

This means that the transmitted symbols as well as the channel coefficients can be estimated in a ping-pong manner.

This is the principle of an iterative receiver.

[Figure: iterative receiver loop. The pseudoinverse $A^\#$ delivers the channel estimate h, the pseudoinverse $H^\#$ delivers the soft symbols $\tilde{a}$, and a slicer turns them into hard symbols a.]

Resume

How does the method of Least Squares work?
1) have a vector x (observation) from S
2) have a set of linearly independent vectors $p_i$ that span V (subset of S)
3) compute the coefficients $c_i$ of the linear combination so that, with $e = x - c_1p_1 - c_2p_2 - \dots - c_mp_m$:

$$\min_{c_i}\|e\|_2^2 = \min_{c_i}\Big\|x-\sum_i c_ip_i\Big\|_2^2 = \min_{c_i}\|x-\hat{x}\|_2^2$$

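Resume

4) Obtain a linear matrix equation with Gramian R:

$$\begin{bmatrix}\langle p_1,p_1\rangle & \langle p_2,p_1\rangle & \dots & \langle p_m,p_1\rangle\\ \langle p_1,p_2\rangle & \langle p_2,p_2\rangle & \dots & \langle p_m,p_2\rangle\\ \vdots & & & \vdots\\ \langle p_1,p_m\rangle & \langle p_2,p_m\rangle & \dots & \langle p_m,p_m\rangle\end{bmatrix}\begin{bmatrix}c_1\\ c_2\\ \vdots\\ c_m\end{bmatrix} = \begin{bmatrix}\langle x,p_1\rangle\\ \langle x,p_2\rangle\\ \vdots\\ \langle x,p_m\rangle\end{bmatrix},\qquad Rc = p$$

5) Solve for c by matrix inversion, or, if the basis is orthonormal, simply as c = p.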

Resume

6) The Gramian is in general positive semi-definite. The Gramian is positive definite if and only if the vectors $p_i$ are linearly independent.

7) The error $e_{LS}$ obtained in this way is always orthogonal to the estimate $\hat{x}$ and to the vectors $p_i$:

$$\langle e_{LS},\hat{x}\rangle = 0$$

$$\langle e_{LS},x\rangle = \langle e_{LS},e_{LS}\rangle \ge 0$$

Resume

Consider the following example of vectors $p_i$:

$T = \{p_1,p_2,p_3\}$, three vectors of length 4 with entries $\pm 1$ (the sign pattern is not recoverable from this transcript).

Compute the Gramian matrix.

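Resume

Result:

$$Rc = p:\qquad \begin{bmatrix}4 & 0 & 0\\ 0 & 4 & 0\\ 0 & 0 & 4\end{bmatrix}\begin{bmatrix}c_1\\ c_2\\ c_3\end{bmatrix} = \begin{bmatrix}\langle x,p_1\rangle\\ \langle x,p_2\rangle\\ \langle x,p_3\rangle\end{bmatrix}$$

Is the Gramian positive definite?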

Resume

LS as projection: Given the vector x to approximate and the basis vectors $A=[p_1,p_2,\dots,p_m]$.

The coefficients:

$$c = (A^HA)^{-1}A^Hx$$

The approximation:

$$\hat{x} = \underbrace{A(A^HA)^{-1}A^H}_{P_V}x$$

The error:

$$e_{LS} = \underbrace{\left(I-A(A^HA)^{-1}A^H\right)}_{P_W}x$$

Resume

Note:

$$c = (A^HA)^{-1}A^Hx$$

$$\hat{x} = A(A^HA)^{-1}A^Hx = Ac = [p_1,p_2,\dots,p_m]\,c$$

The estimate is in the column space of A (=span(A)), i.e., it is a linear combination of the columns of A.

The error $e_{LS}$ does not lie in the column space of A; it is perpendicular to the column space of A.

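Resume

This technique is ideally suited to separate signal and noise. Let the signal be $x=[a,a]^T$ with $a\in\{\dots,-2,-1,0,1,2,\dots\}$. We receive:

$$y = x + n = \begin{bmatrix}a\\ a\end{bmatrix} + \begin{bmatrix}n_1\\ n_2\end{bmatrix} = a\begin{bmatrix}1\\ 1\end{bmatrix} + \begin{bmatrix}n_1\\ n_2\end{bmatrix},\qquad A = \begin{bmatrix}1\\ 1\end{bmatrix}$$

$$\hat{x} = A(A^HA)^{-1}A^Hy = x + A(A^HA)^{-1}A^Hn = x + \frac{1}{2}\begin{bmatrix}1 & 1\\ 1 & 1\end{bmatrix}\begin{bmatrix}n_1\\ n_2\end{bmatrix} = x + \frac{n_1+n_2}{2}\begin{bmatrix}1\\ 1\end{bmatrix}\quad (\in V)$$

$$e_{LS} = \left(I-A(A^HA)^{-1}A^H\right)y = \frac{n_1-n_2}{2}\begin{bmatrix}1\\ -1\end{bmatrix}\quad (\in W)$$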
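The signal/noise separation can be checked with concrete numbers. The following is a minimal sketch, assuming NumPy, the transmitted value `a = 2` and a made-up noise sample `n` (hypothetical values); `P_V` and `P_W` are the two projection matrices for $A=[1,1]^T$.

```python
import numpy as np

A = np.array([[1.0], [1.0]])                 # signal subspace: x = a [1, 1]^T
P_V = A @ np.linalg.inv(A.T @ A) @ A.T       # equals 0.5 * [[1, 1], [1, 1]]
P_W = np.eye(2) - P_V

a = 2.0                                      # transmitted value
n = np.array([0.3, -0.1])                    # hypothetical noise sample
y = a * A[:, 0] + n

x_hat = P_V @ y     # x + (n1 + n2) / 2 * [1, 1]^T
e_ls = P_W @ y      # (n1 - n2) / 2 * [1, -1]^T
print(x_hat, e_ls)
```

Only the noise component along $[1,1]^T$ remains in the estimate; the component along $[1,-1]^T$ ends up entirely in the error.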

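Resume

Why do we have $\|e_{LS}\|_2^2 \le \|x\|_2^2$? We had:

$$\|e_{LS}\|_2^2 = e_{LS}^He_{LS} = x^He_{LS} = x^H\left[I-A(A^HA)^{-1}A^H\right]x = \dots$$

Separate x into two components:

$$x = Ac + x_\perp$$

Then we have:

$$A(A^HA)^{-1}A^Hx = A(A^HA)^{-1}A^H(Ac + x_\perp) = Ac$$

$$\left[I-A(A^HA)^{-1}A^H\right]x = x_\perp$$

thus:

$$\|e_{LS}\|_2^2 = x^H\left[I-A(A^HA)^{-1}A^H\right]x = (Ac+x_\perp)^Hx_\perp = x_\perp^Hx_\perp = \|x_\perp\|_2^2 \le \|Ac\|_2^2 + \|x_\perp\|_2^2 = \|x\|_2^2$$

[Figure: x decomposed into Ac and the perpendicular component $x_\perp$.]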

Least Squares Filtering

Consider an underdetermined system of equations, i.e., there are more parameters to estimate than observations.

Example 3.9:

$$\begin{bmatrix}1 & 2 & -3\\ -5 & 4 & 1\end{bmatrix}\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix} = \begin{bmatrix}-4\\ 6\end{bmatrix}$$

Find one solution:

$$x = \begin{bmatrix}1\\ 2\\ 3\end{bmatrix} + t\begin{bmatrix}1\\ 1\\ 1\end{bmatrix};\quad t\in\mathbb{R}$$

Since the system of equations is underdetermined, there are infinitely many solutions.

Least Squares Filtering

Of all these solutions, the one with the least norm is of most interest:

$$\min_x\|x\|_2 \quad\text{with constraint: } Ax = b$$

We assume again that the estimate of x is constructed as a linear combination, now of the columns of $A^H$ instead of the columns of A:

$$\hat{x} = A^Hc \;\Rightarrow\; A\hat{x} = AA^Hc = b \;\Rightarrow\; c = (AA^H)^{-1}b$$

$$\hat{x} = A^H(AA^H)^{-1}b$$

Least Squares Filtering

Note that $A^H(AA^H)^{-1}$ is also a pseudoinverse of A, since $A\cdot A^H(AA^H)^{-1}=I$. It is called the right pseudoinverse.

Surprisingly, this solution always delivers the minimum norm solution. The reason for this is that all other solutions have additional components that are not linear combinations of the columns of $A^H$ (they are not in the column space of $A^H$).

Example 3.10: Consider the previous example again. The minimum norm solution is given by $x=[-1,0,1]^T$ and not, as one might assume, by $[1,2,3]^T$.

Least Squares Filtering

Consider again:

$$\begin{bmatrix}1 & 2 & -3\\ -5 & 4 & 1\end{bmatrix}\begin{bmatrix}x_1\\ x_2\\ x_3\end{bmatrix} = \begin{bmatrix}-4\\ 6\end{bmatrix},\qquad x = \begin{bmatrix}-1\\ 0\\ 1\end{bmatrix} + t\begin{bmatrix}1\\ 1\\ 1\end{bmatrix};\quad t\in\mathbb{R}$$

Seek a solution that is in the row space of A (column space of $A^H$):

$$\begin{bmatrix}-7\\ 0\\ 7\end{bmatrix} = -2\begin{bmatrix}1\\ 2\\ -3\end{bmatrix} + \begin{bmatrix}-5\\ 4\\ 1\end{bmatrix}$$

Thus $[-1,0,1]^T$ is in the row space of A, whereas $[1,2,3]^T$ and $[1,1,1]^T$ are not.

Is $A^H(AA^H)^{-1}b = [-1,0,1]^T$ truly the minimum norm solution?

$$\|x\|_2^2 = \left\|\begin{bmatrix}-1\\ 0\\ 1\end{bmatrix} + t\begin{bmatrix}1\\ 1\\ 1\end{bmatrix}\right\|_2^2 = (-1+t)^2 + t^2 + (1+t)^2$$

$$\frac{\partial}{\partial t}\|x\|_2^2 = 2(-1+t) + 2t + 2(1+t) = 6t = 0 \;\Rightarrow\; \text{solution for } t=0$$
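The minimum norm solution of Examples 3.9 and 3.10 can be verified numerically. The following is a minimal sketch, assuming NumPy; `x_other` is the alternative exact solution $[1,2,3]^T$ from the example, and `np.linalg.pinv` is used only as a cross-check of the right pseudoinverse.

```python
import numpy as np

A = np.array([[ 1.0, 2.0, -3.0],
              [-5.0, 4.0,  1.0]])
b = np.array([-4.0, 6.0])

x_min = A.T @ np.linalg.solve(A @ A.T, b)    # x = A^H (A A^H)^{-1} b
x_other = np.array([1.0, 2.0, 3.0])          # another exact solution

print(x_min)                                             # [-1, 0, 1] up to rounding
print(np.linalg.norm(x_min), np.linalg.norm(x_other))    # sqrt(2) versus sqrt(14)
print(np.allclose(x_min, np.linalg.pinv(A) @ b))         # True
```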

Sparse Least Squares Filtering

The general condition

$$\min_x\|x\|_2 \quad\text{with constraint: } Ax = b$$

can also be modified when particular (sparse) conditions are of interest:

$$\min_x\|x\|_0 \quad\text{with constraint: } Ax = b$$

$$\min_x\|x\|_1 \quad\text{with constraint: } Ax = b \quad\text{(Basis Pursuit, practical approximation)}$$

Sparse Least Squares Filtering

Such a problem is known to be NP-hard and thus of high complexity to solve. Alternative forms are:

$$\min_x\ \|Ax-b\|_2^2 + \lambda\|x\|_0$$

$$\min_x\ \|Ax-b\|_2^2 + \lambda\|x\|_1$$

For some value of λ the first one is identical to the previous sparse problem. The remaining problem is to find this λ.

The second formulation is a convex approximation for which efficient numerical solutions exist. It is typically the preferred formulation for compressive sensing problems.

Weighted Least Squares Filtering

Recall linear regression. Relations need not be linear: depending on the order m, we speak of quadratic, cubic, … regression.

If we have observations available with different precision (e.g., from different sensors), we can weight them according to their confidence. This can be achieved by a weighting matrix W:

$$c = (A^HWA)^{-1}A^HWy$$

In general W needs to be positive-definite. Indefinite matrices are treated in the special lecture on “Adaptive Filters LVA 389.167”.

Iterative LS Problem

Up to now we only considered quadratic forms ($L_2$- and $l_2$-norms). The question remains open how other norms can be treated:

$$\min_c\|x-Ac\|_p \;\Rightarrow\; \min_c\|x-Ac\|_p^p = \min_c\sum_{i=1}^m\left|x_i-(Ac)_i\right|^p = \min_c\sum_{i=1}^m\underbrace{\left|x_i-(Ac)_i\right|^{p-2}}_{w_i}\left|x_i-(Ac)_i\right|^2$$

The problem is thus formulated as a classical quadratic problem with a diagonal weighting matrix W.

Iterative Algorithm to solve weighted LS Problem

Iterative algorithm:

$$c^{(1)} = (A^HA)^{-1}A^Hx$$

for k = 1, 2, ...

$$e^{(k)} = x - Ac^{(k)}$$

$$W^{(k)} = \mathrm{diag}\left(|e_1^{(k)}|^{p-2},\,|e_2^{(k)}|^{p-2},\,\dots,\,|e_m^{(k)}|^{p-2}\right)$$

$$c^{(k+1)} = \lambda\left(A^HW^{(k)}A\right)^{-1}A^HW^{(k)}x + (1-\lambda)\,c^{(k)},\qquad \lambda\in[0,1]$$
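The iterative algorithm can be sketched as follows. This is a minimal sketch, assuming NumPy and real-valued data; the function name `irls`, the fixed iteration count, the default `lam=0.5`, and the small floor `eps` on the residual magnitudes (to avoid raising zero to a negative power when p < 2) are illustrative assumptions, not part of the lecture.

```python
import numpy as np

def irls(A, x, p, lam=0.5, iterations=50, eps=1e-12):
    """Approximate argmin_c ||x - A c||_p by iteratively reweighted LS (sketch)."""
    c = np.linalg.solve(A.T @ A, A.T @ x)            # c^(1): ordinary LS start
    for _ in range(iterations):
        e = x - A @ c                                # e^(k) = x - A c^(k)
        w = np.maximum(np.abs(e), eps) ** (p - 2)    # diagonal of W^(k)
        AW = A.T * w                                 # A^H W^(k) for real A
        c_wls = np.linalg.solve(AW @ A, AW @ x)      # weighted LS solution
        c = lam * c_wls + (1.0 - lam) * c            # damped update with lam in [0, 1]
    return c

# Hypothetical data: fit a line to five points, one of them an outlier.
t = np.arange(5.0)
A = np.column_stack([t, np.ones_like(t)])
x = np.array([0.0, 1.0, 2.0, 3.0, 10.0])

print(irls(A, x, p=2))    # identical to the ordinary LS solution
print(irls(A, x, p=4))    # larger p puts more weight on the largest residual
```

For p = 2 all weights equal one, so every iteration returns the ordinary LS solution; for other p the damping factor λ trades convergence speed against robustness of the iteration.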

Iterative LS Problem

Example 3.12: Filter design. A linear-phase FIR filter of length 2N+1 is to be designed such that a predefined magnitude response $|H_d(e^{j\Omega})|$ is approximated in the best manner. Note that for linear-phase FIR filters we have $h_k=h_{2N-k}$, $k=0,1,\dots,2N$. We form $b_0=h_N$ and $b_k=2h_{N+k}$ ($=2h_{N-k}$ by symmetry), $k=1,2,\dots,N$:

$$H(e^{j\Omega}) = e^{-jN\Omega}\sum_{n=0}^{N}b_n\cos(n\Omega) = e^{-jN\Omega}\,b^Tc(\Omega) = e^{-jN\Omega}H_r(e^{j\Omega})$$

If we used a quadratic measure,

$$\min_{H_r}\int_0^\pi\left(|H_d(e^{j\Omega})| - H_r(e^{j\Omega})\right)^2d\Omega$$

Iterative LS Problem

the magnitude response would be approximated only moderately well. With a larger norm index, $p\to\infty$, a much better result is obtained (equiripple design):

$$\min_{H_r}\ \lim_{p\to\infty}\int_0^\pi\left|\,|H_d(e^{j\Omega})| - H_r(e^{j\Omega})\right|^pd\Omega$$

Iterative LS Problem

[Figure: linear-phase filter, N=40, Remez vs. FIR.]

Signal Transformation

In the following we will treat the (still open) question of which basis functions are best suited for approximations.

We have seen so far that simple polynomials lead to poorly conditioned problems.

We have also seen that orthogonal sets are of particular interest since the inverse of the Gramian becomes very simple.

We will thus search for suitable basis functions with orthogonal (better: orthonormal) properties.

95Univ.-Prof. Dr.-Ing.

Markus Rupp

Signal Transformation

We approximate a function x(t) in the LS sense (L2 norm) with orthonormal functions p_i(t). For an orthonormal set the Gramian R is the identity, so R c_LS = p → c_LS = p:

$$\hat{x}(t)=\sum_{i=1}^{m}c_i\,p_i(t)$$
$$\min_{c_i}\left\|x(t)-\sum_{i=1}^{m}c_i\,p_i(t)\right\|_2^2=\|x(t)\|_2^2-\sum_{i=1}^{m}\left|\langle x(t),p_i(t)\rangle\right|^2=\|x(t)\|_2^2-\sum_{i=1}^{m}|c_{LS,i}|^2\geq 0$$

This is Bessel's Inequality. Note under which conditions the inequality is satisfied with equality: Parseval's Theorem.

Signal Transformation

Consider the limit of this series:

$$\hat{x}_m(t)=\sum_{i=1}^{m}c_i\,p_i(t)$$
$$\hat{x}(t)=\hat{x}_\infty(t)=\sum_{i=1}^{\infty}c_i\,p_i(t)$$

Since the estimates form a Cauchy sequence and the Hilbert space is complete, we can conclude that the limit is also in the Hilbert space.

However, not every (smooth) function can be approximated by an orthonormal set point by point (not in C[a,b]!).

Signal Transformation

Let us now restrict ourselves to approximations in the L2 norm. Even then, not every function can be approximated (well) with a set of orthonormal basis functions.

Example 3.13: The set sin(nt); n=1,2,...,∞ forms an orthogonal set on [0,2π] (orthonormal after scaling by 1/√π). The function cos(t) cannot be approximated, since all coefficients vanish:

$$c_n=\int_0^{2\pi}\cos(t)\sin(nt)\,dt=0$$
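Example 3.13 can be checked numerically. The following sketch approximates the integrals by a Riemann sum on a uniform grid over one period; the grid size and the number of tested coefficients are arbitrary choices for illustration.

```python
import numpy as np

M = 4096                                   # number of grid points (assumption)
t = np.arange(M) * 2 * np.pi / M           # uniform grid on [0, 2*pi)
dt = 2 * np.pi / M
f = np.cos(t)

# coefficients of cos(t) with respect to sin(n t)/sqrt(pi), n = 1..10
c = np.array([np.sum(f * np.sin(n * t)) * dt / np.sqrt(np.pi)
              for n in range(1, 11)])
energy_f = np.sum(f ** 2) * dt             # ||cos(t)||^2 on [0, 2*pi]

print(np.max(np.abs(c)), energy_f)
```

All coefficients vanish up to rounding, while the energy of cos(t) is π. Bessel's inequality thus holds with a strict gap: the sine set alone captures none of the energy.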

Signal Transformation

We thus require a specific property of orthonormal sets in order to guarantee that every function can be approximated.

Theorem 3.3: A set of orthonormal functions p_i(t) is complete in an inner product space S with induced norm (can approximate an arbitrary function) if any of the following equivalent statements holds:

$$x(t)=\sum_{i=1}^{\infty}\langle x(t),p_i(t)\rangle\,p_i(t)$$

For every ε>0 there is an N<∞ such that

$$\left\|x(t)-\sum_{i=1}^{n}\langle x(t),p_i(t)\rangle\,p_i(t)\right\|<\varepsilon\quad\text{for all } n\geq N$$

Parseval's Theorem:

$$\|x(t)\|^2=\sum_{i=1}^{\infty}\left|\langle x(t),p_i(t)\rangle\right|^2\quad\text{for all } x(t)\in S$$

There is no nonzero function f(t) ∈ S for which the set {f(t), p_1(t), p_2(t), ...} forms an orthonormal set.

Signal Transformation

It is also said: the orthogonal set of basis functions is complete (Ger.: vollständig). Note that this is not equivalent to a complete Hilbert space (Cauchy)!

It is worth pointing out the difference to finite-dimensional sets. For finite-dimensional sets it is sufficient to show that the functions p_i are linearly independent.

If an infinite-dimensional set satisfies the properties of Theorem 3.3, then x is equivalently represented by the infinite set of coefficients c_i.

The coefficients c_i of a complete set are also called a generalized Fourier series.

Signal Transformation

Lemma 3.2: If two functions x(t) and y(t) from S have a generalized Fourier series representation using some orthonormal basis set p_i(t) in a Hilbert space S, then:

$$\langle x,y\rangle=\sum_{i=1}^{\infty}c_i\,b_i^*$$

Proof:

$$x(t)=\sum_{i=1}^{\infty}c_i\,p_i(t);\qquad y(t)=\sum_{k=1}^{\infty}b_k\,p_k(t)$$
$$\langle x(t),y(t)\rangle=\left\langle\sum_{i=1}^{\infty}c_i\,p_i(t),\sum_{k=1}^{\infty}b_k\,p_k(t)\right\rangle=\sum_{l=1}^{\infty}c_l\,b_l^*$$

Signal Transformation

Compare Parseval's Theorem in its most general form:

$$\langle x,y\rangle=\sum_{i}c_i\,b_i^*$$

cmp:

$$x_k=\frac{1}{2\pi}\int_{-\pi}^{\pi}X(e^{j\Omega})\,e^{jk\Omega}\,d\Omega$$
$$\sum_{k=-\infty}^{\infty}x_k\,y_k^*=\frac{1}{2\pi j}\oint_C X(z)\,Y^*\!\left(\frac{1}{z^*}\right)\frac{1}{z}\,dz=\frac{1}{2\pi}\int_{-\pi}^{\pi}X(e^{j\Omega})\,Y^*(e^{j\Omega})\,d\Omega$$

Orthogonal Functions

Most prominent examples of orthonormal sets are:

Example 3.14: Fourier series in [0,2π]

$$f(t)=\frac{1}{\sqrt{2\pi}}\sum_{n=-\infty}^{\infty}c_n\,e^{jnt};\qquad c_n=\frac{1}{\sqrt{2\pi}}\int_0^{2\pi}f(t)\,e^{-jnt}\,dt$$

For periodic functions f(t)=f(t+mT) we select:

$$f(t)=\frac{1}{\sqrt{2\pi}}\sum_{n=-\infty}^{\infty}c_n\,e^{jn\frac{2\pi}{T}t};\qquad c_n=\frac{\sqrt{2\pi}}{T}\int_0^{T}f(t)\,e^{-jn\frac{2\pi}{T}t}\,dt$$

Orthogonal Functions

Most prominent examples of orthonormal sets are:

Example 3.15: Discrete Fourier Transform (DFT). A series x_k is only known at N points: k=0,1,...,N-1.

$$x_k=\frac{1}{\sqrt{N}}\sum_{l=0}^{N-1}c_l\,e^{j2\pi kl/N};\qquad c_l=\frac{1}{\sqrt{N}}\sum_{k=0}^{N-1}x_k\,e^{-j2\pi kl/N}$$

Note that in both transformations orthogonal rather than orthonormal sets are often applied.

Orthogonal Functions

Note that in this case the orthogonal functions are constructed from the complex exponentials e^{jnt} and e^{j2πnk/N}, respectively. The weighting function is thus w(t)=1.

We have:

$$\frac{1}{2\pi}\int_0^{2\pi}e^{jnt}\,e^{-jmt}\,dt=\begin{cases}1;&n=m\\0;&n\neq m\end{cases}$$
$$\frac{1}{N}\sum_{k=0}^{N-1}e^{j2\pi nk/N}\,e^{-j2\pi mk/N}=\begin{cases}1;&(n-m)\bmod N=0\\0;&\text{else}\end{cases}=\sum_{r=-\infty}^{\infty}\delta_{n-m+rN}$$
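The discrete orthogonality relation can be verified directly. The sketch below builds the orthonormal (1/√N-scaled) DFT matrix for a small, arbitrarily chosen N, checks that it is unitary, and confirms Parseval's relation for a random test vector; the variable names are illustrative only.

```python
import numpy as np

N = 8                                            # transform length (assumption)
k = np.arange(N)
# orthonormal DFT matrix: F[l, k] = exp(-j 2 pi k l / N) / sqrt(N)
F = np.exp(-2j * np.pi * np.outer(k, k) / N) / np.sqrt(N)

gram = F @ F.conj().T                            # should be the identity

rng = np.random.default_rng(0)
x = rng.standard_normal(N) + 1j * rng.standard_normal(N)
c = F @ x                                        # generalized Fourier coefficients

energy_x = np.vdot(x, x).real                    # sum |x_k|^2
energy_c = np.vdot(c, c).real                    # sum |c_l|^2
print(np.allclose(gram, np.eye(N)), energy_x, energy_c)
```

NumPy's `np.fft.fft` uses the unnormalized (orthogonal, not orthonormal) convention, so `np.fft.fft(x) / np.sqrt(N)` gives the same coefficients.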

Orthogonal Functions

Orthogonal Polynomials

We already noticed that "simple" polynomials lead to poorly conditioned problems because they are not orthogonal. However, it is possible to build orthogonal polynomial families.

Lemma 3.3: Orthogonal polynomials satisfy the following recursive equation:

$$t\,p_n(t)=a_n\,p_{n+1}(t)+b_n\,p_n(t)+c_n\,p_{n-1}(t)$$
$$\underbrace{t\,p_n(t)-a_n\,p_{n+1}(t)}_{g_n(t)}=b_n\,p_n(t)+c_n\,p_{n-1}(t)$$

Orthogonal Functions

Proof: Let g_n(t) = t p_n(t) - a_n p_{n+1}(t) be of degree n (by choice of a_n). Then we must have:

$$g_n(t)=b_n\,p_n(t)+c_n\,p_{n-1}(t)=\sum_{i=0}^{n}d_i\,p_i(t);\qquad d_i=\langle g_n(t),p_i(t)\rangle$$

Since the polynomials are mutually orthogonal, we have

$$\langle p_n(t),p_i(t)\rangle=0;\quad i=0,1,2,\dots,n-1$$
$$\langle t\,p_n(t),p_i(t)\rangle=\langle p_n(t),t\,p_i(t)\rangle=0;\quad i=0,1,2,\dots,n-2$$

Orthogonal Functions

Since g_n(t) = t p_n(t) - a_n p_{n+1}(t) is true, we also must have:

$$d_i=\langle g_n(t),p_i(t)\rangle=\langle t\,p_n(t),p_i(t)\rangle-a_n\langle p_{n+1}(t),p_i(t)\rangle$$
$$\langle t\,p_n(t),p_i(t)\rangle=a_n\langle p_{n+1}(t),p_i(t)\rangle=0;\quad i=0,1,2,\dots,n-2$$

Thus only two coefficients (for i=n-1 and i=n) remain:

$$d_n=b_n=\langle g_n(t),p_n(t)\rangle;\qquad d_{n-1}=c_n=\langle g_n(t),p_{n-1}(t)\rangle$$

Orthogonal Functions

Orthogonal functions have the property that their inner product becomes zero in the interval of interest [a,b]:

$$\langle p,q\rangle_w=0$$

Often a positive weighting function w(t)>0 is applied:

$$\langle p,q\rangle_w=\int_a^b w(t)\,p(t)\,q(t)\,dt$$

Orthogonal Functions

Example 3.17: Hermite Polynomials:

$$y_n(t)=\frac{d^n}{dt^n}\exp(-t^2/2)=p_n(t)\exp(-t^2/2)$$
$$t\,p_n(t)=-p_{n+1}(t)-n\,p_{n-1}(t)$$
$$p_0(t)=1,\quad p_1(t)=-t,\quad p_2(t)=t^2-1,\quad p_3(t)=-t^3+3t$$
$$\int_{-\infty}^{\infty}\underbrace{\frac{\exp(-t^2/2)}{\sqrt{2\pi}\,n!}}_{w(t)}\,p_n(t)\,p_m(t)\,dt=\delta_{n-m}$$

Orthogonal Functions

Example 3.18: There are also time-discrete Binomial Hermite sequences:

$$x_k^{(r+1)}=-x_{k-1}^{(r+1)}+x_k^{(r)}-x_{k-1}^{(r)};\qquad x_{-1}^{(r)}=0;\quad 0\leq r\leq N$$

The Z-transform results in:

$$X^{(r+1)}(z)=-z^{-1}X^{(r+1)}(z)+X^{(r)}(z)-z^{-1}X^{(r)}(z)$$
$$X^{(r+1)}(z)=\frac{z-1}{z+1}X^{(r)}(z)=\left[\frac{z-1}{z+1}\right]^{r+1}X^{(0)}(z)$$

With the initial sequence

$$x_k^{(0)}=\binom{N}{k}$$
$$X^{(0)}(z)=\sum_{k=0}^{N}\binom{N}{k}z^{-k}=\left(1+z^{-1}\right)^{N}$$
$$X^{(r)}(z)=\left(1-z^{-1}\right)^{r}\left(1+z^{-1}\right)^{N-r}$$

Orthogonal Functions

Discrete Hermite polynomials:

$$x_k^{(r)}=\binom{N}{k}P_k^{(r)}$$

Note, for large N we have:

$$\binom{N}{k}\approx\frac{2^N}{\sqrt{\pi N/2}}\exp\left(-\frac{(k-N/2)^2}{N/2}\right)$$

Note also that:

$$P_k^{(r)}=P_r^{(k)}$$

Orthogonality is w.r.t. the weight w_k:

$$\sum_{k=0}^{N}\underbrace{\binom{N}{k}}_{w_k}P_k^{(r)}P_k^{(s)}=\frac{2^N}{\binom{N}{r}}\,\delta_{r-s}$$
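The symmetry and the weighted orthogonality of the discrete Hermite polynomials can be checked for a small N. The sketch below generates the sequences x_k^(r) as the coefficients of (1-z^-1)^r (1+z^-1)^(N-r) by repeated convolution; N = 6 and all variable names are assumptions for illustration.

```python
import numpy as np
from math import comb

N = 6                                            # order (assumption)

def x_seq(r):
    """Coefficients of (1 - z^-1)^r (1 + z^-1)^(N-r), k = 0..N."""
    seq = np.array([1.0])
    for _ in range(r):
        seq = np.convolve(seq, [1.0, -1.0])
    for _ in range(N - r):
        seq = np.convolve(seq, [1.0, 1.0])
    return seq

binom = np.array([comb(N, k) for k in range(N + 1)], dtype=float)
X = np.array([x_seq(r) for r in range(N + 1)])   # X[r, k] = x_k^(r)
P = X / binom                                    # P[r, k] = P_k^(r)

gram = (P * binom) @ P.T                         # sum_k C(N,k) P_k^(r) P_k^(s)
print(np.allclose(P, P.T), np.round(np.diag(gram), 3))
```

The Gram matrix comes out diagonal with entries 2^N / C(N,r), in agreement with the orthogonality relation above.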

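Orthogonal Functions

Binomial Filter Bank

[Figure: block diagram. The input δ_k passes through (1+z^{-1})^N to give x_k^{(0)}; each following stage (z-1)/(z+1) yields x_k^{(1)}, x_k^{(2)}, ...]

$$X^{(r)}(z)=\left(1-z^{-1}\right)^{r}\left(1+z^{-1}\right)^{N-r}=\left(\frac{1-z^{-1}}{1+z^{-1}}\right)^{r}\left(1+z^{-1}\right)^{N}$$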

Orthogonal Functions

Binomial Filter Bank

[Figure: magnitude responses |X_1(e^{jΩ})|, |X_2(e^{jΩ})|, |X_3(e^{jΩ})| over Ω from 0 to 3.5; vertical axis |X_i(e^{jΩ})| from 0 to 16]

Orthogonal Functions

Binomial Filter Bank, normalized (w.r.t. max|X_i(e^{jΩ})|)

[Figure: magnitude responses |X_1(e^{jΩ})|, |X_2(e^{jΩ})|, |X_3(e^{jΩ})|, |X_4(e^{jΩ})| over Ω; vertical axis |X_i(e^{jΩ})|]

Orthogonal Functions

Example 3.19: Legendre Polynomials (w(t)=1)

$$t\,p_n(t)=\frac{n+1}{2n+1}\,p_{n+1}(t)+\frac{n}{2n+1}\,p_{n-1}(t)$$
$$p_0(t)=1;\quad p_1(t)=t;\quad p_2(t)=\frac{3}{2}t^2-\frac{1}{2};\quad p_3(t)=\frac{5}{2}t^3-\frac{3}{2}t$$
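The three-term recursion can be turned into a small generator for the Legendre polynomials. The sketch below solves the recursion for p_{n+1}, evaluates the polynomials on Gauss-Legendre nodes, and checks orthogonality on [-1,1]; the helper name `legendre_table` and the node count are illustrative assumptions. Note that in this normalization the polynomials are orthogonal with squared norm 2/(2n+1), not orthonormal.

```python
import numpy as np

def legendre_table(t, n_max):
    """Evaluate p_0..p_n_max at t via (n+1) p_{n+1} = (2n+1) t p_n - n p_{n-1}."""
    P = [np.ones_like(t), t.copy()]
    for n in range(1, n_max):
        P.append(((2 * n + 1) * t * P[n] - n * P[n - 1]) / (n + 1))
    return np.array(P[: n_max + 1])

# Gauss-Legendre nodes/weights: exact for polynomial integrands up to degree 31
t, w = np.polynomial.legendre.leggauss(16)
P = legendre_table(t, 5)

gram = (P * w) @ P.T                  # gram[n, m] = integral of p_n p_m over [-1, 1]
expected = np.diag(2.0 / (2 * np.arange(6) + 1))
print(np.allclose(gram, expected))
```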

Orthogonal Functions

Example 3.20: Tschebyscheff Polynomials

$$\int_{-1}^{1}\underbrace{\frac{1}{\sqrt{1-t^2}}}_{w(t)}\,p_n(t)\,p_m(t)\,dt=\begin{cases}0;&n\neq m\\\pi;&n=m=0\\\pi/2;&n=m\neq 0\end{cases}$$
$$t\,p_n(t)=0.5\,p_{n+1}(t)+0.5\,p_{n-1}(t)$$
$$p_0(t)=1;\quad p_1(t)=t;\quad p_2(t)=2t^2-1;\quad p_3(t)=4t^3-3t$$
$$p_n(t)=\cos\left(n\arccos(t)\right)$$

[Figure: plots of the Legendre and Tschebyscheff polynomials]

Example 3.21

Consider the following homogeneous differential equation:

$$\frac{d\varphi(t)}{dt}+\varphi(t)=0$$

with condition φ(t=0)=1. The solution is well known:

$$\varphi(t)=e^{-t}=1-t+\frac{1}{2}t^2-\frac{1}{6}t^3+\dots$$

Let us solve it with a polynomial.

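Example 3.21

Simple basis functions:

$$p_n(t)=t^n;\quad n=0,1,2,\dots$$
$$\varphi(t)=a_0+a_1t+a_2t^2+\dots=\sum_n a_n\,p_n(t)$$

What does dφ/dt cause on such a basis?

$$\frac{d\varphi(t)}{dt}=a_1+2a_2t+3a_3t^2+\dots$$
$$\begin{bmatrix}0&1&0&0\\0&0&2&0\\0&0&0&3\end{bmatrix}\begin{bmatrix}a_0\\a_1\\a_2\\a_3\end{bmatrix}=\begin{bmatrix}a_1\\2a_2\\3a_3\end{bmatrix}$$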

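Example 3.21

Solving the differential equation

$$\frac{d\varphi(t)}{dt}+\varphi(t)=0$$

is equivalent to solving

$$\begin{bmatrix}1&1&0&0\\0&1&2&0\\0&0&1&3\end{bmatrix}\begin{bmatrix}a_0\\a_1\\a_2\\a_3\end{bmatrix}=\begin{bmatrix}0\\0\\0\end{bmatrix};\quad\rightarrow\quad\begin{bmatrix}a_0\\a_1\\a_2\\a_3\end{bmatrix}=\begin{bmatrix}1\\-1\\1/2\\-1/6\end{bmatrix}$$
$$\rightarrow\quad 1-t+\frac{1}{2}t^2-\frac{1}{6}t^3\dots=e^{-t}$$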

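Example 3.21

Now consider the inhomogeneous problem:

$$\frac{d\varphi(t)}{dt}+\varphi(t)=\frac{t}{2}+1;\quad\varphi(t=0)=1.5,\ \varphi'(t=0)=-0.5$$

with solution:

$$\begin{bmatrix}1&1&0&0\\0&1&2&0\\0&0&1&3\end{bmatrix}\begin{bmatrix}a_0\\a_1\\a_2\\a_3\end{bmatrix}=\begin{bmatrix}1\\0.5\\0\end{bmatrix};\quad\rightarrow\quad\begin{bmatrix}a_0\\a_1\\a_2\\a_3\end{bmatrix}_{LS}=\begin{bmatrix}0.5\\0.5\\0\\0\end{bmatrix}$$
$$\rightarrow\quad\varphi(t)=\underbrace{\frac{1}{2}+\frac{1}{2}t}_{LS}+\alpha\,e^{-t};\quad\alpha=1$$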
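Both parts of Example 3.21 can be reproduced numerically. In this sketch the homogeneous solution is taken from the null space of the 3×4 matrix (scaled so that a_0 = φ(0) = 1), and the inhomogeneous part from the minimum-norm LS solution; using the SVD and `np.linalg.pinv` for this is an implementation choice, not something prescribed by the slides.

```python
import numpy as np

# (d/dt + 1) acting on the coefficients of 1, t, t^2, t^3
D_plus_I = np.array([[1.0, 1.0, 0.0, 0.0],
                     [0.0, 1.0, 2.0, 0.0],
                     [0.0, 0.0, 1.0, 3.0]])

# homogeneous problem: the null-space vector is the last right singular vector
_, _, Vt = np.linalg.svd(D_plus_I)
a_h = Vt[-1] / Vt[-1][0]              # scale so that a_0 = phi(0) = 1

# inhomogeneous problem: right-hand side 1 + t/2, minimum-norm LS solution
rhs = np.array([1.0, 0.5, 0.0])
a_p = np.linalg.pinv(D_plus_I) @ rhs

print(a_h)   # Taylor coefficients of exp(-t) up to t^3
print(a_p)   # coefficients of the particular solution 1/2 + t/2
```

The general solution is the particular solution plus α times the homogeneous one; the initial condition φ(0)=1.5 then fixes α=1.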

Resume

The Banach space is a linear vector space with the following two properties:

1) normed 2) complete

In order to make it a Hilbert space, it additionally requires an inner product.

Resume

Remember the inner product: an inner vector product maps two vectors onto one scalar with the following properties:

$$0)\ \langle x,x\rangle>0\ \text{for } x\neq 0\ \text{and } 0\ \text{else}$$
$$1)\ \langle x,y\rangle=\langle y,x\rangle^*$$
$$2)\ \langle\alpha x,y\rangle=\alpha\langle x,y\rangle$$
$$3)\ \langle x+y,z\rangle=\langle x,z\rangle+\langle y,z\rangle$$

Resume

Right or wrong?

An inner product induces a norm. (Right)
The inner product is a norm on x when x=y. (Wrong)
The inner product is a squared norm on x when x=y. (Right)

Resume

Right or wrong?

trace(A^H B) defines an inner product on the matrices A and B. (Right)
trace(A^H B) induces a norm on A when A=B. (Right)
trace(A^H B) is a norm on A when A=B. (Wrong)
trace(A^H B) is a squared norm on A when A=B. (Right)

Resume

Note that next to the left pseudoinverse (A^H A)^{-1} A^H, also A^H (A A^H)^{-1} is a pseudoinverse of A (a right pseudoinverse), since A · A^H (A A^H)^{-1} = I, just as (A^H A)^{-1} A^H · A = I.

Interestingly, this right pseudoinverse always delivers the minimum norm solution.

The reason for this is that all other solutions have components that are not linear combinations of the columns of A^H (thus are not in the column space of A^H).
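The minimum-norm property can be illustrated with a small underdetermined system. The matrix sizes and random data below are hypothetical; the sketch compares the right-pseudoinverse solution with another exact solution that contains an additional null-space component.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((3, 5))            # wide matrix, full row rank (assumption)
b = rng.standard_normal(3)

# right pseudoinverse solution: g = A^H (A A^H)^{-1} b
g_mn = A.T @ np.linalg.solve(A @ A.T, b)

# any other solution differs by a component from the null space of A
null_part = (np.eye(5) - np.linalg.pinv(A) @ A) @ rng.standard_normal(5)
g_other = g_mn + null_part

print(np.linalg.norm(g_mn), np.linalg.norm(g_other))
```

Both vectors solve A g = b exactly, but the right-pseudoinverse solution lies in the column space of A^H and is orthogonal to the null-space part, hence it has the smaller norm.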

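Resume

Remember Bezout:

$$\sum_{l=1}^{N_R}H_l(q^{-1})\,G_l(q^{-1})=q^{-D}$$

[Figure: block diagram. The Tx signal passes through the channels H1 and H2 to give Rx signal 1 and Rx signal 2; these are filtered by G1 and G2 and added to form the equalized signal.]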

Resume

$$G_1(q^{-1})H_1(q^{-1})+G_2(q^{-1})H_2(q^{-1})=\left(h_0^{(1)}+h_1^{(1)}q^{-1}\right)\left(g_0^{(1)}+g_1^{(1)}q^{-1}\right)+\left(h_0^{(2)}+h_1^{(2)}q^{-1}\right)\left(g_0^{(2)}+g_1^{(2)}q^{-1}\right)$$
$$=\left(h_0^{(1)}g_0^{(1)}+h_0^{(2)}g_0^{(2)}\right)+\left(h_1^{(1)}g_0^{(1)}+h_0^{(1)}g_1^{(1)}+h_1^{(2)}g_0^{(2)}+h_0^{(2)}g_1^{(2)}\right)q^{-1}+\left(h_1^{(1)}g_1^{(1)}+h_1^{(2)}g_1^{(2)}\right)q^{-2}=q^{-D}$$
$$\begin{bmatrix}h_0^{(1)}&0&h_0^{(2)}&0\\h_1^{(1)}&h_0^{(1)}&h_1^{(2)}&h_0^{(2)}\\0&h_1^{(1)}&0&h_1^{(2)}\end{bmatrix}\begin{bmatrix}g_0^{(1)}\\g_1^{(1)}\\g_0^{(2)}\\g_1^{(2)}\end{bmatrix}=\begin{bmatrix}0\\1\\0\end{bmatrix}$$

3 equations for 4 variables! OK: LS minimum norm solution?

Resume

Example 3.11: But how does noise act on the reception?

[Figure: block diagram as before, now with additive noise v^{(1)} and v^{(2)}; the received signals r^{(1)} and r^{(2)} are filtered by G1 and G2 and added to form the equalized signal.]

Resume

Consider only noise, signal s_k = 0:

$$y_k=\sum_{l=1}^{N_R}G_l(q^{-1})\,v_k^{(l)}=g_{1,0}v_k^{(1)}+g_{1,1}v_{k-1}^{(1)}+\dots+g_{1,M-1}v_{k-M+1}^{(1)}+g_{2,0}v_k^{(2)}+g_{2,1}v_{k-1}^{(2)}+\dots+g_{2,M-1}v_{k-M+1}^{(2)}+\dots+g_{N_R,0}v_k^{(N_R)}+g_{N_R,1}v_{k-1}^{(N_R)}+\dots+g_{N_R,M-1}v_{k-M+1}^{(N_R)}$$
$$\sigma_y^2=E\left[\left|\sum_{l=1}^{N_R}\sum_{m=0}^{M-1}g_{l,m}\,v_{k-m}^{(l)}\right|^2\right]=\sum_{l=1}^{N_R}\sum_{m=0}^{M-1}\sigma_v^2|g_{l,m}|^2=\sigma_v^2\sum_{l=1}^{N_R}\sum_{m=0}^{M-1}|g_{l,m}|^2$$

Resume

In order to minimize the influence of noise, we have to find the solution G_{1,2} that generates the smallest noise variance:

$$\min_{g}\sum_{l=1}^{N_R}\sum_{m=0}^{M-1}\sigma_v^2|g_{l,m}|^2=\sigma_v^2\min_{g}\sum_{l=1}^{N_R}\sum_{m=0}^{M-1}|g_{l,m}|^2=\sigma_v^2\min_{g}\|g\|_2^2\;\Rightarrow\;g_{LS}$$

We thus have to find the solution g that has the smallest l2 norm!

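Resume

Example 3.11

$$\begin{bmatrix}h_0^{(1)}&0&h_0^{(2)}&0\\h_1^{(1)}&h_0^{(1)}&h_1^{(2)}&h_0^{(2)}\\0&h_1^{(1)}&0&h_1^{(2)}\end{bmatrix}\begin{bmatrix}g_0^{(1)}\\g_1^{(1)}\\g_0^{(2)}\\g_1^{(2)}\end{bmatrix}=\begin{bmatrix}0\\1\\0\end{bmatrix}\quad\rightarrow\quad Ag=b$$

Thus we select the solution of the underdetermined LS problem:

$$g_{LS}=A^H\left(AA^H\right)^{-1}b$$

with (written for real-valued coefficients)

$$AA^H=\begin{bmatrix}(h_0^{(1)})^2+(h_0^{(2)})^2&h_0^{(1)}h_1^{(1)}+h_0^{(2)}h_1^{(2)}&0\\h_0^{(1)}h_1^{(1)}+h_0^{(2)}h_1^{(2)}&(h_0^{(1)})^2+(h_1^{(1)})^2+(h_0^{(2)})^2+(h_1^{(2)})^2&h_0^{(1)}h_1^{(1)}+h_0^{(2)}h_1^{(2)}\\0&h_0^{(1)}h_1^{(1)}+h_0^{(2)}h_1^{(2)}&(h_1^{(1)})^2+(h_1^{(2)})^2\end{bmatrix}$$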
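Example 3.11 can be tried out with concrete numbers. The two first-order channels below are purely hypothetical (chosen without a common zero so that A has full row rank); the sketch computes the minimum-norm equalizer for D=1 and checks the Bezout identity by convolution.

```python
import numpy as np

h1 = np.array([1.0, 0.5])                  # hypothetical channel H1: h0, h1
h2 = np.array([1.0, -0.4])                 # hypothetical channel H2: h0, h1

A = np.array([[h1[0], 0.0,   h2[0], 0.0],
              [h1[1], h1[0], h2[1], h2[0]],
              [0.0,   h1[1], 0.0,   h2[1]]])
b = np.array([0.0, 1.0, 0.0])              # coefficients of q^{-D} with D = 1

g = A.T @ np.linalg.solve(A @ A.T, b)      # g_LS = A^H (A A^H)^{-1} b
g1, g2 = g[:2], g[2:]

# Bezout check: H1 G1 + H2 G2 should equal the delayed unit impulse
overall = np.convolve(h1, g1) + np.convolve(h2, g2)
print(overall, np.sum(g ** 2))
```

The second printed value is the squared l2 norm of g, which is the factor by which white receiver noise of variance σ_v² is amplified at the equalizer output.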

Resume

Remember Projection: A matrix P is a projection if P=P^2. Thus:

$$0\leq\langle Pf,Pf\rangle=\langle Pf,f\rangle=\langle f,Pf\rangle=\varepsilon\langle f,f\rangle\leq\langle f,f\rangle;\qquad 0\leq\varepsilon\leq 1$$

Consider the relaxed projection mapping (John von Neumann, 1933):

$$f_{k+1}=f_k+\mu\left(Pf_k-f_k\right);\qquad 0\leq\mu\leq 2$$

John von Neumann (* 28.12.1903 in Budapest as Margittai Neumann János Lajos; † 8.2.1957)

Resume

RPM: If we have converged to f*, then Pf* = f*.

$$f_{k+1}=f_k+\mu\left(Pf_k-f_k\right);\qquad 0\leq\mu\leq 2$$
$$f_{k+1}-f^*=f_k-f^*+\mu\left(Pf_k-Pf^*+f^*-f_k\right)$$
$$f_{k+1}=f_k+\mu\left(Pf_k-f_k\right)=(1-\mu)f_k+\mu Pf_k$$
$$\|f_{k+1}\|_2^2=(1-\mu)^2\|f_k\|_2^2+\left(2\mu(1-\mu)+\mu^2\right)\|Pf_k\|_2^2=\underbrace{\left[(1-\mu)^2+\left(2\mu(1-\mu)+\mu^2\right)\varepsilon\right]}_{\leq 1\ \text{for}\ 0\leq\varepsilon\leq 1,\ 0\leq\mu\leq 2}\|f_k\|_2^2$$
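The relaxed projection mapping is easy to simulate. In this sketch P is the orthogonal projector onto the column space of a random matrix B; the dimensions, the step size µ=1.5, and the iteration count are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
B = rng.standard_normal((6, 2))
P = B @ np.linalg.solve(B.T @ B, B.T)      # orthogonal projector, P = P^2 = P^H
f0 = rng.standard_normal(6)

def rpm(f, mu, iters=200):
    """Relaxed projection mapping f_{k+1} = f_k + mu (P f_k - f_k)."""
    for _ in range(iters):
        f = f + mu * (P @ f - f)
    return f

f_star = rpm(f0, mu=1.5)
print(np.linalg.norm(P @ f_star - f_star), np.linalg.norm(f_star - P @ f0))
```

The component of f_0 inside the subspace is left untouched, while the orthogonal component is scaled by (1-µ) in every step, so for 0<µ<2 the iteration converges to the fixed point f* = P f_0.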

Resume

$$(1-\mu)^2+\left(2\mu(1-\mu)+\mu^2\right)\varepsilon=\underbrace{(1-\varepsilon)(1-\mu)^2+\varepsilon}_{\leq 1\ \text{for}\ 0\leq\varepsilon\leq 1,\ 0\leq\mu\leq 2}$$

[Figure: this factor plotted over µ from 0 to 2 for ε=0.9 and ε=0.1; vertical axis from 0.1 to 1]

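Resume

Let a,b be from R. Consider two mappings A_1 and A_2 from R^2 to R^3:

$$A_1:\ X=\mathbb{R}^2\rightarrow Y=\mathbb{R}^3:\quad x=\begin{bmatrix}a\\b\end{bmatrix}\rightarrow y=A_1(x)=\begin{bmatrix}a\\b-a\\a+b\end{bmatrix}$$
$$A_2:\ X=\mathbb{R}^2\rightarrow Y=\mathbb{R}^3:\quad x=\begin{bmatrix}a\\b\end{bmatrix}\rightarrow y=A_2(x)=\begin{bmatrix}a\\b\\ab\end{bmatrix}$$

Which mapping leads to a y that spans a subspace in R^3?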

Resume

Definition 2.12: Let S be a vector space. If V is a subset of S such that V itself is a vector space, then V is called a subspace (Ger.: Unterraum) of S.

Answer: The first mapping A_1 leads to a subspace, the second, A_2, does not:

$$A_2(x_1)=\begin{bmatrix}a_1\\b_1\\a_1b_1\end{bmatrix}=\begin{bmatrix}1\\1\\1\end{bmatrix};\qquad A_2(x_2)=\begin{bmatrix}a_2\\b_2\\a_2b_2\end{bmatrix}=\begin{bmatrix}2\\2\\4\end{bmatrix}=2A_2(x_1)+\begin{bmatrix}0\\0\\2\end{bmatrix}$$

The range of A_2 is not a subspace: 2A_2(x_1) = [2,2,2]^T is not an element of it.

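Resume

$$\text{Subspace of }\mathbb{R}^3:\quad V:\ \begin{bmatrix}a\\b-a\\a+b\end{bmatrix}=\mathrm{span}\left\{\begin{bmatrix}1\\-1\\1\end{bmatrix},\begin{bmatrix}1\\0\\2\end{bmatrix}\right\}$$
$$\text{Orthogonal complement:}\quad V^\perp=\mathrm{span}\left\{\begin{bmatrix}2\\1\\-1\end{bmatrix}\right\}$$
$$x=\begin{bmatrix}3\\1\\1\end{bmatrix}=\begin{bmatrix}1\\0\\2\end{bmatrix}+\begin{bmatrix}2\\1\\-1\end{bmatrix}:\qquad x\notin V,\quad x\notin V^\perp,\quad x\notin V\cup V^\perp,\quad x\in\mathrm{span}\{V\cup V^\perp\}$$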

Resume

Consider a wireless transmission with two transmitting sensors and one receiver. The two corresponding transmit paths are described by complex attenuations h_1, h_2 from C.

Transmit training sequence at sensor 1: [s,0,s,s]; at sensor 2: [0,s,s,-s].

Which subspace is spanned by the undistorted receive signal?

$$\text{receive:}\quad r=h_1\begin{bmatrix}s\\0\\s\\s\end{bmatrix}+h_2\begin{bmatrix}0\\s\\s\\-s\end{bmatrix}=h_1s\begin{bmatrix}1\\0\\1\\1\end{bmatrix}+h_2s\begin{bmatrix}0\\1\\1\\-1\end{bmatrix}\ \rightarrow\ \mathrm{span}\left\{\begin{bmatrix}1\\0\\1\\1\end{bmatrix},\begin{bmatrix}0\\1\\1\\-1\end{bmatrix}\right\}$$

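Resume

Design an LS filter that projects onto the undistorted subspace:

$$\text{receive:}\quad\tilde{r}=h_1\begin{bmatrix}s\\0\\s\\s\end{bmatrix}+h_2\begin{bmatrix}0\\s\\s\\-s\end{bmatrix}+\begin{bmatrix}v_1\\v_2\\v_3\\v_4\end{bmatrix}=\underbrace{\begin{bmatrix}1&0\\0&1\\1&1\\1&-1\end{bmatrix}}_{A}\begin{bmatrix}h_1s\\h_2s\end{bmatrix}+\begin{bmatrix}v_1\\v_2\\v_3\\v_4\end{bmatrix}$$
$$\rightarrow\quad\widehat{\begin{bmatrix}h_1s\\h_2s\end{bmatrix}}_{LS}=\left(A^HA\right)^{-1}A^H\tilde{r}=\underbrace{\frac{1}{3}\begin{bmatrix}1&0&1&1\\0&1&1&-1\end{bmatrix}}_{\text{matched filter}}\tilde{r}$$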
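A quick numerical check of this LS filter: because the two training sequences are orthogonal with equal energy, A^H A = 3I and the LS estimate reduces to the matched filter A^H/3. The channel values, the symbol s, and the noise level below are hypothetical.

```python
import numpy as np

A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [1.0, -1.0]])                  # training structure from the slide
s = 1.0                                      # training symbol (assumption)
h = np.array([0.8 - 0.3j, -0.2 + 0.5j])      # hypothetical complex attenuations

rng = np.random.default_rng(3)
v = 0.01 * (rng.standard_normal(4) + 1j * rng.standard_normal(4))
r = A @ (h * s) + v                          # noisy receive vector

est = np.linalg.solve(A.T @ A, A.T @ r)      # (A^H A)^{-1} A^H r, A is real
mf = A.T @ r / 3.0                           # matched filter form
print(est, mf)
```

Both expressions give the same estimate of [h_1 s, h_2 s], which deviates from the true values only by the filtered noise.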

Remember: Journal article

Resume

Compact = closed and bounded (Ger.: abgeschlossen und beschränkt!)

Resume

[Figure: annotated journal article. The annotations read: Formulate the problem; Present alternative methods; New idea based on approximation; Propose an improved method; Orthogonal Basis]

Remember: complete set

We thus require a specific property of orthonormal sets in order to guarantee that every function can be approximated.

Theorem 3.3: A set of orthonormal functions p_i(t) is complete in an inner product space S with induced norm (can approximate an arbitrary function) if any of the following equivalent statements holds:

$$x(t)=\sum_{i=1}^{\infty}\langle x(t),p_i(t)\rangle\,p_i(t)$$

For every ε>0 there is an N<∞ such that

$$\left\|x(t)-\sum_{i=1}^{n}\langle x(t),p_i(t)\rangle\,p_i(t)\right\|<\varepsilon\quad\text{for all } n\geq N$$

Parseval's Theorem:

$$\|x(t)\|^2=\sum_{i=1}^{\infty}\left|\langle x(t),p_i(t)\rangle\right|^2\quad\text{for all } x(t)\in S$$

There is no nonzero function f(t) ∈ S for which the set {f(t), p_1(t), p_2(t), ...} forms an orthonormal set.

[Figure: basis functions φ_c(x) and φ_s(x) over x from -1 to 1; curves sin(k=1), cos(k=1), sin(k=2), cos(k=2)]

Resume

The basis functions selected in this way are well suited for a Hilbert Transform.

Resume

Numerical Example:

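Orthogonal Functions

Example 3.22: Consider

$$p_n(t)=\mathrm{sinc}(2Bt-n)\qquad\text{(shift: } n\text{; stretch: } 2B\text{)}$$
$$\mathrm{sinc}(x)=\frac{\sin(\pi x)}{\pi x}$$
$$2B\int_{-\infty}^{\infty}\mathrm{sinc}(2Bt-n)\,\mathrm{sinc}(2Bt-m)\,dt=\begin{cases}1;&n=m\\0;&n\neq m\end{cases}$$
$$2Bnm\int_{-\infty}^{\infty}\mathrm{sinc}(2nBt)\,\mathrm{sinc}(2mBt)\,dt=\min(n,m)$$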

Sampling revisited

For band-limited functions f(t), with F(ω)=0 for |ω|>2πB, we find the coefficients:

$$c_n=\frac{\langle f(t),p_n(t)\rangle}{\langle p_n(t),p_n(t)\rangle}=f\left(\frac{n}{2B}\right)=f(nT)$$
$$f(t)=\sum_{n=-\infty}^{\infty}f(nT)\,\mathrm{sinc}(2Bt-n)$$

Interpolation can thus be interpreted as approximation in the Hilbert space.

Sampling revisited

Now let us approach this from a different point of view. We would like to approximate a given function f(t):

$$f(t)=\sum_{n=-\infty}^{\infty}c_n\,\mathrm{sinc}(2Bt-n)$$

by an orthonormal basis p_n(t):

$$c_n=\frac{\langle f(t),p_n(t)\rangle}{\langle p_n(t),p_n(t)\rangle}=\langle f(t),p_n(t)\rangle$$

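Sampling revisited

What is

$$\langle f(t),p_n(t)\rangle=\int_{-\infty}^{\infty}f(\tau)\,\mathrm{sinc}(2B\tau-n)\,d\tau\ ?$$

We recall a slightly different convolution integral:

$$\int f(\tau)\,\mathrm{sinc}\left(2B(t-\tau)\right)d\tau=\int f(\tau)\,\mathrm{sinc}\left(2B(\tau-t)\right)d\tau=f_L(t)$$

[Figure: f(t) passes through a low-pass filter (LP) to give f_L(t)]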

Sampling revisited

We thus only have to set 2Bt=n and find

$$\langle f(t),\mathrm{sinc}(2Bt-n)\rangle=\int f(\tau)\,\mathrm{sinc}(2B\tau-n)\,d\tau=\int f(\tau)\,\mathrm{sinc}\left(\frac{\tau}{T}-n\right)d\tau=f_L(nT)=f_L\left(\frac{n}{2B}\right)$$

Sampling revisited

In other words: sampling and interpolation can equivalently be interpreted as an approximation problem, resembling a continuous function f(t) by basis functions sinc(2Bt-n) that are shifted in time by equidistant shifts T=1/(2B).

The approximation works with zero error only if the function f(t) is band-limited to |ω|<2πB.
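The interpolation view can be tested on a band-limited example. The test function below, a shifted squared sinc with bandwidth 0.8 (below B=1), as well as the truncation of the infinite sum to |n| ≤ 400, are assumptions made only for this sketch. `np.sinc` is the normalized sinc, sin(πx)/(πx), as in Example 3.22.

```python
import numpy as np

B = 1.0                                    # band limit in Hz (assumption)
T = 1.0 / (2.0 * B)                        # sampling interval

def f(t):
    """Hypothetical band-limited test signal (bandwidth 0.8 < B)."""
    return np.sinc(0.8 * (t - 0.3)) ** 2

n = np.arange(-400, 401)                   # truncated index range
c = f(n * T)                               # coefficients c_n = f(nT)

t = np.linspace(-3.0, 3.0, 121)            # evaluation points between samples
f_hat = np.array([np.sum(c * np.sinc(2.0 * B * tt - n)) for tt in t])

err = np.max(np.abs(f_hat - f(t)))
print(err)
```

The remaining error stems only from truncating the sum; for a signal that is not band-limited to B the approximation error would not vanish.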

Sampling revisited

Now remember the following:

$$\int f(t)\,\mathrm{sinc}(2Bt-n)\,dt=f(nT)=c_n$$
$$c_n=\langle f(t),\mathrm{sinc}(2Bt-n)\rangle$$
$$f(t)=\sum_n c_n\,\mathrm{sinc}(2Bt-n)$$

can be generalized to:

$$c_n=\langle f(t),p(2Bt-n)\rangle$$
$$f(t)=\sum_n c_n\,p(2Bt-n)$$

Thus, by selecting p_n(t)=p(2Bt-n) we can select the space that fits our original signal best!

Specific Basis Functions

Find the right basis for your problem: Wavelet or DCT

Curvelet Basis

Ridgelet Basis

X-ray image from a famous painting: how do we get rid of the wooden structure?

Image/Signal Decomposition

Find an appropriate basis in which either the desired or the undesired parts of the signal can be described in sparse form.

By this, the desired and undesired parts can be differentiated and finally decomposed.

Wavelets

Consider again the function:

$$p_{j,k}(t)=2^{-j/2}\,\phi\left(2^{-j}t-k\right)\qquad\text{(stretch: } j\text{; shift: } k\text{)}$$

We thus have a two-dimensional transformation with modifications in position/location and scale (Ger.: Streckung/Granularität).

Wavelets

Note that if φ(t) is normalized (||φ(t)||=1), then we also have ||p_{j,k}(t)||=1. We select the function φ(t) in such a way that its shifts by all n form an orthonormal basis for a space:

$$V_0=\mathrm{span}\{\phi(t-n),\ n\in\mathbb{Z}\}$$
$$\langle p_{0,k},p_{0,l}\rangle=\delta_{k-l}$$

The shifted functions thus form an orthonormal basis for V_0.

Wavelets

Example 3.23: Consider the unit pulse

$$\phi(t)=U(t)-U(t-1);\qquad V_0=\mathrm{span}\{\phi(t-n),\ n\in\mathbb{Z}\}$$

With this basis function all functions f_0(t) that are constant on an integer mesh (Ger.: im Raster ganzzahliger Zahlen) can be described exactly. Continuous functions can be approximated with the precision of integer distance.

We write:

$$f_0(t)=\sum_n\langle f(t),p_{0,n}(t)\rangle\,p_{0,n}(t)=f(t)-e_0(t)$$

Wavelets

The coefficients obtained in this way,

$$c_n^{(0)}=\langle f(t),p_{0,n}(t)\rangle,$$

can also be interpreted as piecewise integrated areas over the function f(t).

Wavelets

Stretching can also be used to define new bases for other spaces:

$$V_{-1}=\mathrm{span}\{2^{1/2}\,\phi(2t-n),\ n\in\mathbb{Z}\}$$
$$V_j=\mathrm{span}\{2^{-j/2}\,\phi(2^{-j}t-n),\ n\in\mathbb{Z}\}$$

If these spaces are nested (Ger.: Verschachtelung):

$$\underbrace{\dots\subset V_2\subset V_1}_{\text{coarse scale}}\subset V_0\subset\underbrace{V_{-1}\subset\dots}_{\text{fine scale}}$$

then we call φ(t) a scaling function (Ger.: Skalierungsfunktion) for a wavelet.

Wavelets

Next to nesting, there are other important properties of V_m.

Shrinking and closure:

$$\bigcap_{m\in\mathbb{Z}}V_m=\{0\};\qquad\overline{\bigcup_{m\in\mathbb{Z}}V_m}=L_2(\mathbb{R})$$

Multi-resolution property:

$$f(x)\in V_m\Leftrightarrow f(2x)\in V_{m-1}\quad\text{for } f(x)\in L_2(\mathbb{R})$$

Wavelets

Example 3.24: Consider the unit pulse

$$\phi(2t)=U(2t)-U(2t-1)$$
$$V_{-1}=\mathrm{span}\{\sqrt{2}\,\phi(2t-n),\ n\in\mathbb{Z}\}$$
$$p_{-1,n}(t)=\sqrt{2}\,\phi(2t-n)$$

[Figure: the pulses φ(t) on [0,1], φ(2t) on [0,1/2] and φ(2t-1) on [1/2,1]]

Wavelets

Example 3.24: Consider the unit pulse

$$\phi(2t)=U(2t)-U(2t-1);\qquad V_{-1}=\mathrm{span}\{\sqrt{2}\,\phi(2t-n),\ n\in\mathbb{Z}\};\qquad p_{-1,n}(t)=\sqrt{2}\,\phi(2t-n)$$

With this function we can resemble all functions f(t) that are constant on a half-integer (n/2) mesh. All continuous functions can be approximated on a half-integer mesh.

$$f_{-1}(t)=\sum_n\langle f(t),p_{-1,n}(t)\rangle\,p_{-1,n}(t)=f(t)-e_{-1}(t)$$

Wavelets

The function f_{-1}(t) is thus an even finer approximation than f_0(t) in V_0. Since V_0 is a subset of V_{-1} we have:

$$f_{-1}(t)=\sum_n c_n^{(-1)}\,p_{-1,n}(t)=\sum_n c_n^{(0)}\,p_{0,n}(t)+e_{0,-1}(t)=\sum_n c_n^{(0)}\,p_{0,n}(t)+\sum_n d_n^{(0)}\,\psi_{0,n}(t)$$

with a suitable basis ψ_{0,n}(t) from W_0 with W_0 ⊕ V_0 = V_{-1}.

Wavelets

In other words, the set W_j complements the set V_j in such a way that:

W_j ⊕ V_j = V_{j-1}

With V_{j-1} the next finer approximation can be built.

Hereby, W_j is the orthogonal complement of V_j:

$$W_j=V_j^{\perp}\ \text{in}\ V_{j-1}$$

[Figure: V_{j-1} is composed of V_j and W_j]

Wavelets

These functions ψ_{j,n}(t) are called wavelets.

Thus, we can decompose any function at an arbitrary scaling step into two components, ψ_{j,n} and p_{j,n}.

Very roughly, one can be considered a high pass, the other a low pass.

By finer scaling the function can be approximated better and better.

The required number of coefficients depends strongly on the wavelet or the corresponding scaling function.

The word was coined from the French ondelette (small wave) by Jean Morlet and Alex Grossmann, ~1980.

Wavelets

Wavelets also have the scaling property:

$$\psi_{j,n}(t)=2^{-j/2}\,\psi\left(2^{-j}t-n\right)$$

as well as orthonormality properties:

$$\langle\psi_{j,k}(t),\psi_{l,n}(t)\rangle=\delta_{j-l}\,\delta_{k-n}$$

Wavelets

Example 3.25: Haar Wavelets (1909)

Alfréd Haar (Hungarian: 11.10.1885– 16.3.1933)

$$p_{m+1,n}(t)=\frac{1}{\sqrt{2}}\left[p_{m,2n}(t)+p_{m,2n+1}(t)\right]$$
$$\psi_{m+1,n}(t)=\frac{1}{\sqrt{2}}\left[p_{m,2n}(t)-p_{m,2n+1}(t)\right]$$
$$p_{0,0}(t)=\phi(t);\qquad p_{-1,0}(t)=\sqrt{2}\,\phi(2t);\qquad\psi_{0,0}(t)=\phi(2t)-\phi(2t-1)$$
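The Haar relations carry over directly to the expansion coefficients: taking inner products with f(t) on both sides gives c_{m+1,n} = (c_{m,2n} + c_{m,2n+1})/√2 and d_{m+1,n} = (c_{m,2n} - c_{m,2n+1})/√2. The sketch below implements one such analysis step and its inverse on a hypothetical coefficient vector; the function names are illustrative.

```python
import numpy as np

def haar_step(c_fine):
    """One Haar analysis step: fine coefficients -> (coarse, detail)."""
    even, odd = c_fine[0::2], c_fine[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)

def haar_inverse(c, d):
    """Inverse step: (coarse, detail) -> fine coefficients."""
    fine = np.empty(2 * len(c))
    fine[0::2] = (c + d) / np.sqrt(2)
    fine[1::2] = (c - d) / np.sqrt(2)
    return fine

x = np.array([4.0, 2.0, 5.0, 5.0, 1.0, 3.0, 0.0, 2.0])   # hypothetical c^(-1)
c0, d0 = haar_step(x)                                     # c^(0) and d^(0)
x_rec = haar_inverse(c0, d0)

print(c0, d0)
print(np.sum(x ** 2), np.sum(c0 ** 2) + np.sum(d0 ** 2))
```

Because the Haar basis is orthonormal, the step is energy preserving and perfectly invertible; applying `haar_step` again to `c0` gives the next coarser scale.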

[Figure: Example 3.25, showing f_{-1}(t), f_0(t), e_{0,-1}(t), p_{0,0}(t) and ψ_{0,0}(t)]

Multirate Systems

Such a procedure for wavelets can also be interpreted as a dyadic (Ger.: dyadisch, oktavisch) tree structure:

[Figure: three cascaded stages, each with a low-pass (L) and a high-pass (H) branch]

Since the low and high passes divide the bandwidth every time, subsequent processing can work at a lower data rate.

Multirate Systems

Example: consider the following picture:

Calculate the mean of the entire picture

Correct picture by its mean

Calculate means of the remaining half-picture errors

Correct by means of half pictures

Compute means of quarter picture errors…

Multirate Systems

Thus:

[Figure: three cascaded stages, each with a low-pass (L) and a high-pass (H) branch, with downsampling by 2 between stages]

By such a procedure complexity can be saved in every stage without losing signal quality.

Subband Coding

This approach connects wavelets with classical subband coding, in which an original large bandwidth is split into smaller and smaller subunits.

This view (at the end of the 1980s), however, did not reveal the true potential of wavelets, as they only offered equivalent performance.

New Wavelets

This situation changed when Ingrid Daubechies introduced new families of wavelets, some of them not having the orthogonality property but a so-called biorthogonal property.

Ingrid Daubechies (17.8.1954) is a Belgian physicist and mathematician.

Remember: Vector Spaces

Definition: If there are two bases,

$$T=\{p_1,p_2,\dots,p_m\};\qquad U=\{q_1,q_2,\dots,q_m\}$$

that span the same space with the additional property:

$$\langle p_i,q_j\rangle=k_{i,j}\,\delta_{i-j}$$

then these bases are said to be dual or biorthogonal (biorthonormal for k_{i,j}=1).

[Figure: Example: Le Gall wavelets]

[Figure: Daubechies wavelets]
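For a finite-dimensional illustration of dual bases, one can take any non-orthogonal basis and compute its biorthonormal partner from the matrix inverse. The 3×3 basis matrix and the test vector below are hypothetical; the columns of `T_basis` play the role of the p_i and the columns of `U_dual` the role of the q_j.

```python
import numpy as np

T_basis = np.array([[1.0, 1.0, 0.0],
                    [0.0, 1.0, 1.0],
                    [0.0, 0.0, 1.0]])        # columns p_i, not orthogonal
U_dual = np.linalg.inv(T_basis).conj().T     # columns q_j of the dual basis

cross = U_dual.conj().T @ T_basis            # entry (j, i) = <p_i, q_j>

x = np.array([2.0, -1.0, 3.0])
c = U_dual.conj().T @ x                      # analysis: c_i = <x, q_i>
x_rec = T_basis @ c                          # synthesis: x = sum_i c_i p_i
print(cross, x_rec)
```

Analysis with one basis and synthesis with the other reconstructs x exactly, but since `T_basis` is not unitary the coefficient energy generally differs from the signal energy, which is the loss of the energy-preserving property mentioned for biorthogonal wavelets.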

New Wavelets

Daubechies and LeGall wavelets share this biorthogonal property, which makes them linear phase.

Unfortunately, they lose the orthogonality and thus the energy-preserving property (not unitary).

They are the two sets defined in JPEG 2000 image coding.
