Technical University at Košice Faculty of Electrical Engineering and Informatics Department of Electronics and Multimedia Communications Ing. Michal Aftanas Through Wall Imaging Using M-sequence UWB Radar System Thesis to the dissertation examination Supervisor: doc. Ing. Miloš Drutarovský, CSc. Program of PhD study: Infoelectronic Field of PhD study: 5.2.13 Electronic Form of PhD study: Daily Košice December 2007


Technical University at Košice
Faculty of Electrical Engineering and Informatics

Department of Electronics and Multimedia Communications

Ing. Michal Aftanas

Through Wall Imaging Using M-sequence UWB Radar System

Thesis to the dissertation examination

Supervisor: doc. Ing. Miloš Drutarovský, CSc.
Program of PhD study: Infoelectronic
Field of PhD study: 5.2.13 Electronic

Form of PhD study: Daily

Košice, December 2007

Contents

Head Page
Contents
List of Abbreviations
List of Symbols
Introduction

1 UWB Radar Systems
  1.1 History of Radar
  1.2 Radars Fundamentals and Applications
  1.3 UWB and M-sequence Advantages
  1.4 M-sequence UWB Radar System

2 Data Preprocessing
  2.1 Through Wall Radar Basic Model
  2.2 Through Wall Penetrating
  2.3 Through Wall Radar Data Representation
  2.4 Data Calibration and Preprocessing

3 Basic Radar Imaging Methods
  3.1 Backprojection vs. Backpropagation
  3.2 SAR Imaging
  3.3 Kirchhoff Migration
  3.4 f-k Migration
  3.5 Multiple Signal Classification
  3.6 The Inverse Problem

4 Improvements in Radar Imaging
  4.1 Cross-correlated Back Projection
  4.2 Fast Back Projection
  4.3 Migration by Deconvolution
  4.4 SEABED and IBBST
  4.5 f-k Migration Improvements
    4.5.1 Nonuniform FFT
    4.5.2 f-k Migration in Cylindrical Coordinations
    4.5.3 f-k Migration with Motion Compensation
    4.5.4 f-k Migration with Surface and Loss Compensation
    4.5.5 Prestack Residual f-k Migration
    4.5.6 f-k Migration with Anti-Leakage Fourier Transform
    4.5.7 Lagrange's Interpolation in f-k Migration
  4.6 Migrations with Antenna Beam Compensation

5 Experiments with Through Wall Imaging
  5.1 Review of Experiments
  5.2 First Experiments with TWI

Conclusion
Thesis for dissertation work
References

List of Abbreviations

1D – One dimension
2D – Two dimensions
3D – Three dimensions
ADC – Analog to Digital Converter
ART – Algebraic Reconstruction Technique
AWGN – Additive White Gaussian Noise
BHDI – Beamspace High Definition Imaging
BST – Boundary Scattering Transform
ECS – Extended Chirp Scaling
EOK – Extended Omega K
FFT – Fast Fourier Transform
GPR – Ground Penetrating Radar
HDVI – High Definition Vector Imaging
IBST – Inverse Boundary Scattering Transform
IFFT – Inverse Fast Fourier Transform
IR – Impulse Response
LAN – Local Area Network
M-sequence, MLBS – Maximum Length Binary Sequence
MUSIC – MUltiple SIgnal Classification
NUFFT – NonUniform Fast Fourier Transformation
RADAR – RAdio Detection And Ranging system
RAW – Unprocessed data
RX – Receiver
SAR – Synthetic Aperture Radar
SEABED – Shape Estimation Algorithm based on Boundary Scattering Transform and Extraction of Directly scattered waves
SMA – SubMiniature version A connector
TWI – Through the Wall Imaging
SNR – Signal to Noise Ratio
TCP/IP – Transmission Control Protocol/Internet Protocol
TOA – Time Of Arrival
TWR – Through Wall Radar
TX – Transmitter
T&H – Track And Hold
UWB – Ultra Wide Band

List of Symbols

∗ – Convolution
α – Attenuation constant
c – Speed of light in vacuum
$\vec{C}_A$ – Crosstalk
$d_{TXRX}$ – Distance between TX and RX
$d_{inwall}$ – Thickness of the wall
δ – Dirac delta function
$\varepsilon_r$ – Relative permittivity
$\vec{E}_{rad}$ – Radiated electric field
$\vec{E}_{meas}$ – Measured electric field
f – Frequency
$G(x_0; x)$ – Green's function, $x_0$: source point, $x$: observation point
$\vec{h}_A$ – Measurement system transfer function
$\vec{h}_C$ – Clutter transfer function
$\vec{h}_T$ – Target transfer function
k – Wavenumber
K – Multiple response matrix
λ – Eigenvalue
Λ – Impulse response
$L_{air-wall}$ – Loss due to air-wall interface
$L_{wall}$ – Loss inside the wall
μ – Permeability
$\vec{\nabla}$ – Divergence
$\nabla^2$ – Laplacian
ω – Angular frequency
φ – Scalar wavefield in frequency domain
ψ – Scalar wavefield
r – Distance from the antenna
σ – Conductivity
T – Time reversal matrix
$t_{TXRX}$ – Wave travel time between TX and RX
$t_{inwall}$ – Wave travel time inside the wall
tan δ – Loss tangent of the wall
v – Velocity of wave propagation
$v_n$ – Eigenvector
$v_{wall}$ – Velocity of wave propagation inside the wall
$V_S(t)$ – Voltage time evolution applied to the TX antenna
$V_R(t)$ – Received voltage from RX antenna
w – Point spread function
$\vec{x}_o$ – Source point position
$\vec{x}$ – Observation point position
$Z_c$ – Impedance of the feed cable
$Z_0$ – Impedance of free space
$[x_{tr}, z_{tr}]$ – Transmitter coordinates
$[x_{re}, z_{re}]$ – Receiver coordinates
$[x_T, z_T]$ – Target coordinates

Introduction

Nowadays, the rescue and security of people is a very serious problem. Persons buried under ruins after an earthquake or under snow after an avalanche are very hard to find with present techniques. Many firemen have died inside buildings full of smoke with zero visibility. In all of these scenarios through wall radar can help on a large scale. "Seeing" through walls and clothes can also help considerably in airport security, police raids and many other fields. In this work I focus on imaging algorithms whose aim is to image the interior of a room, including its walls and the objects inside it. The field of algorithms for through wall imaging is still poorly developed; most algorithms for this purpose were derived from airborne radar imaging, seismology, ground penetrating radar imaging, sonar etc. There is a huge number of modifications and improvements of imaging algorithms in all of these fields.

In January 2007 the project Ultra Wideband Radio application for localisation of hidden people and detection of unauthorised objects, with the acronym RADIOTECT (COOP-CT-2006), started. It is co-funded by the European Commission within the Sixth Framework Programme and has a duration of two years. Our department is one of the research teams in this project. My contribution to the project should be to develop new methods, or to implement existing ones, that allow the interior of a room behind a wall to be imaged. One of the most promising radar devices used in this project is the M-sequence UWB radar developed at Technische Universität Ilmenau (the coordinator of the project). I made through wall measurements with this radar and used them for processing. That is why I describe the main features of the M-sequence UWB radar device in chapter 1. In chapter 2 the basic through wall radar model, data representation and preprocessing are described. Three basic imaging methods, from which most other methods are derived, are described in section 3. Section 4 reviews some improved imaging methods that can be used for through wall imaging. Simulated and real experiments with through wall imaging are reviewed in section 5. My first experience with through wall imaging measurements and processing is shown in section 5.2. The work ends with the conclusion and the proposed thesis.

This thesis originated with the support of the project Ultra Wideband Radio application for localisation of hidden people and detection of unauthorised objects (Acronym: RADIOTECT) and the project Digital Processing of UWB Radar Signals (Acronym: DSP-UWB-RAD).


1 UWB Radar Systems

1.1 History of Radar

In 1904 Christian Huelsmeyer gave public demonstrations in Germany and the Netherlands of the use of radio echoes to detect ships so that collisions could be avoided. His apparatus consisted of a simple spark gap aimed using a multipole antenna. When a reflection was picked up by the two straight antennas attached to the separate receiver, a bell sounded. The system detected the presence of ships at distances up to 3 km. It did not provide range information, only a warning of a nearby metal object, and would be periodically "spun" to check for ships in bad weather. He patented the device, called the telemobiloscope, but due to lack of interest from the naval authorities the invention was not put into production [9]. In August 1917 Nikola Tesla proposed principles regarding frequency and power levels for primitive radar units. Tesla proposed the use of standing electromagnetic waves along with pulsed reflected surface waves to determine the relative position, speed, and course of a moving object, as well as other modern concepts of radar [71]. World War II accelerated the development of airborne radars [16]. The next major development in the history of radar was the invention of the cavity magnetron by John Randall and Harry Boot of Birmingham University in the early 1940s. This was a small device which generated microwave frequencies much more efficiently. The term UWB (Ultra WideBand) was first used in the late 1960s by Harmuth at the Catholic University of America, Ross and Robbins at Sperry Rand Corporation and Paul van Etten at the USAF's Rome Air Development Center [7]. By the end of the 20th century there were many radar types for different applications, such as airborne radars, ground penetrating radars, sonars and the one discussed in detail in this work: the through wall penetrating radar.

1.2 Radars Fundamentals and Applications

The term RADAR is derived from the description of its first primary role as a RAdio Detection And Ranging system. Most natural and man-made objects reflect radio frequency waves and, depending on the radar's purpose, information can be obtained from objects such as aircraft, ships, ground vehicles, terrain features, weather phenomena, and even insects. The determination of an object's position, velocity and other characteristics, or the obtaining of information relating to these parameters by the transmission of radio waves and reception of their return, is sometimes referred to as radio determination. In most cases, a basic radar operates by generating pulses of radio frequency energy and transmitting these pulses via a directional antenna. When a pulse falls on an object in its path, a small portion of the energy is reflected back to the antenna. The radar is in receive mode between the transmitted pulses, and receives the reflected pulse if it is strong enough. The radar indicates the range to the object as a function of the elapsed time of the pulse traveling to the object and returning. The radar indicates the direction of the object by the direction of the antenna at the time the reflected pulse was received [18].

Radar is an important tool in the safe and efficient management of the airspace system. Safe and efficient air travel involves radars for short-range surveillance of air traffic and weather in the vicinity of airports, long-range surveillance and tracking of aircraft and weather on routes between airports, and surveillance of aircraft and ground vehicles on the airport surface and runways. The aircraft height above ground is determined by radar altimeters that assist in safe and efficient flight. Airborne Doppler navigation radars measure the vector velocity of the aircraft and determine the distance traveled. Weather avoidance radars identify dangerous weather phenomena and assist the pilot in avoiding them. Some military pilots, who train at low altitudes, use terrain-following and terrain-avoidance radars that allow the pilot to fly closely over the ground and to fly over or around other obstacles in the flight path. Navy and coast guard ships employ navigation radars to assist in avoiding collisions, making landfall, and piloting in restricted waters. Radars are also used on shore for harbor surveillance supporting the vessel traffic system. Spaceborne radars have also been used for altimetry, ocean observation, remote sensing, mapping, navigation, and weather forecasting. The familiar police speed-gun radars are a well-known application of radar for law enforcement.

Another big category of radar systems is ground penetrating and through wall radar systems. In general, they operate at shorter range but with better resolution than airborne radars. Ground Penetrating Radar (GPR) can be used e.g. for geological surveys or landmine detection. GPR allows the construction of subsurface 3D seismic images. Detection of landmines is a very serious problem all over the world. One hundred million uncleared landmines lie in the fields and alongside the roads and footpaths of one-third of the countries in the developing world and leave over 500 victims a week [26]. A Through Wall Radar (TWR) system is in general used for rescue and security activities. It offers the ability to detect criminals, including terrorists, obscured from view e.g. behind walls, to detect and locate people trapped after accidents or catastrophes such as collapsed buildings, earthquakes, avalanches etc., and to detect unlawful objects hidden under clothes, including non-metallic objects. Another opportunity to utilize TWR is imaging the interior of a room and the inside of walls; this work presents a review of methods for this purpose.

1.3 UWB and M-sequence Advantages

We use a UWB Maximum Length Binary Sequence (MLBS or M-sequence) radar system because it has many advantages in comparison with classical pulse or continuous wave radar. The main advantages of UWB radar are: the UWB signal can be transmitted with no carrier; producing the transmit signal requires less power; range measurement accuracy and object identification are improved (greater resolution); radar effects due to passive interference (rain, mist, aerosols, metalized strips, ...) are reduced; detectability by a hostile interceptor is decreased; low cost transceivers are available; and many others [56], [84], [54].

The idea of using the very well known M-sequence in UWB radar was first proposed in 1996 by Jürgen Sachs from the Technical University in Ilmenau and Peter Peyerl from MEODAT GmbH Ilmenau, US patent No. 6272441 [93], [90]. The main advantages of using an M-sequence are: the use of periodic signals avoids bias errors and allows linear averaging for noise suppression; the M-sequence has a low crest factor, which allows the limited dynamics of real systems to be used; and the signal acquisition may be carried out by undersampling. Thus signals of an extreme bandwidth may be sampled using low cost, commercial Analog to Digital Converters (ADC) in combination with sampling gates.

1.4 M-sequence UWB Radar System

To illustrate what an M-sequence looks like and what its properties are, a generator with 3 shift stages, producing an M-sequence with a period of 7 chips, will be used. The period follows from (1.1), where n is the number of chips and N is the number of generator shift stages [104].

$$n = 2^N - 1 \tag{1.1}$$

The generated M-sequence in the time domain is shown in Figure 1.1.

Figure 1.1: M-sequence with 7 chips in period T (amplitude, with values 1 and -1, versus time; clock period tc)

The length of one chip is tc, the clock period, and the M-sequence takes the amplitude values -1 and 1. The autocorrelation function of this M-sequence is shown in Figure 1.2. It can be seen that the maximum amplitude is $2^N - 1$, so increasing the number of chips also increases the correlation gain. The spectrum of the M-sequence can be seen in Figure 1.3.
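The period and autocorrelation properties described above can be checked numerically. The following is a minimal sketch, not the generator of the actual radar: it assumes a Fibonacci-type shift register, and the feedback taps (3, 2) and (9, 5) are assumptions chosen because they are known to give maximum length sequences; the function names are illustrative only.

```python
import numpy as np

def m_sequence(n_stages, taps):
    """Return one period (2**n_stages - 1 chips) of a bipolar M-sequence.

    taps are 1-based shift register stages whose outputs are XOR-ed and
    fed back to the first stage (Fibonacci shift register, assumed here).
    """
    state = [1] * n_stages                  # any non-zero start state works
    bits = []
    for _ in range(2 ** n_stages - 1):
        bits.append(state[-1])              # output of the last stage
        feedback = 0
        for tap in taps:
            feedback ^= state[tap - 1]
        state = [feedback] + state[:-1]     # shift by one stage
    return 1 - 2 * np.array(bits)           # map bit 0 -> +1, bit 1 -> -1

def circular_autocorrelation(seq):
    """Periodic autocorrelation of seq for every chip shift."""
    return np.array([int(np.dot(seq, np.roll(seq, k))) for k in range(len(seq))])

seq7 = m_sequence(3, taps=(3, 2))           # 3 stages -> 7 chips
acf7 = circular_autocorrelation(seq7)
seq511 = m_sequence(9, taps=(9, 5))         # 9 stages -> 511 chips
acf511 = circular_autocorrelation(seq511)

print("7 chip sequence:", seq7)
print("autocorrelation:", acf7)
print("511 chip peak:", acf511[0], "off-peak values:", set(acf511[1:].tolist()))
```

In both cases the autocorrelation has a single peak equal to the number of chips and the value -1 at all other shifts, which is the correlation gain referred to in the text.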

The UWB radar system with M-sequence generator [87], [123], [29], [93], [90], [92], [94], [120], [91], [121], [119], [1], [86] is built from 9 shift registers and produces 511 chips. Figure 1.4 shows that only 2 feedback connections and one summation are needed. For illustration, one period of an ideal 511 chip M-sequence is shown in Figure 1.5 (top), the same M-sequence delayed and corrupted by noise with 6 dB SNR is shown in the middle, and the output after correlation of these two signals (top and middle) is shown at the bottom of Figure 1.5. Even from such a noisy signal (Figure 1.5, middle) the information about the delay, which is the common problem in radar techniques, can be obtained very well. This is one of the biggest advantages of using an M-sequence in the radar field.

Figure 1.2: Autocorrelation function of M-sequence (amplitude versus time)

Figure 1.3: Spectrum of M-sequence (amplitude [dB] versus frequency, with the usable bandwidth and the clock rate fc marked)

Figure 1.4: M-sequence generator with 9 shift registers

Figure 1.5: Illustration of M-sequence correlation gain (top: one period of the 511 chip M-sequence; middle: delayed and noisy M-sequence; bottom: output of the correlation receiver)

The block schematic of the complete M-sequence UWB radar system used in our case is shown in Figure 1.6. The system clock generates the clock rate fc = 9 GHz. The 9 stage shift register generates a 511 chip M-sequence that is transmitted periodically. The reflected signal is received by two receive antennas and fed to the Track and Hold (T&H) stages. The T&H stages are controlled by the clock rate divided by a binary divider, in our case by a factor of 512. Because the number of chips is 511, this well synchronized arrangement provides undersampling with factor 512. The undersampled signals are digitized by the ADC and averaged by the summators Σ; this is called hardware averaging and its factor is mostly set to 256. The averaged signal is then correlated with the transmitted M-sequence in the signal processing block. One must not forget that very good synchronization is absolutely necessary to obtain correct information about the shift between the transmitted and received signal.

Figure 1.6: Block schematic of M-sequence UWB radar system (system clock with clock rate fc, N-stage shift register, binary divider, TX antenna, two receive channels RX1 and RX2 each with T&H, ADC and Σ, signal processing)

In the signal processing block so called software averaging is additionally performed, with a factor mostly set to 7. Both kinds of averaging are possible because the processed signal is periodic and the averaging is done with respect to this period. The first advantage of averaging is that the Additive White Gaussian Noise (AWGN) is reduced very well; the second is that the data rate is reduced to an acceptable level. With clock frequency fc = 9 GHz, 511 chips, undersampling factor 512, hardware averaging factor 256 and software averaging factor 7, the output rate is approximately 9809 chips per second. This means that in practice we receive approximately 19 impulse responses per second. This is enough for detecting moving people, or for capturing data from measurements in which the radar is moved by hand.
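The output rate quoted above follows directly from the listed parameters. A short sketch of the arithmetic, with illustrative variable names, is:

```python
def output_rates(clock_rate_hz, n_chips, undersampling, hw_averaging, sw_averaging):
    """Return (chips per second, impulse responses per second) at the radar output."""
    chip_rate = clock_rate_hz / (undersampling * hw_averaging * sw_averaging)
    impulse_response_rate = chip_rate / n_chips
    return chip_rate, impulse_response_rate

# Parameters as given in the text
chip_rate, ir_rate = output_rates(
    clock_rate_hz=9e9,      # fc = 9 GHz
    n_chips=2 ** 9 - 1,     # 511 chips from 9 shift stages
    undersampling=512,
    hw_averaging=256,
    sw_averaging=7,
)
print(f"{chip_rate:.0f} chips/s, {ir_rate:.1f} impulse responses/s")
```

Changing either averaging factor trades noise suppression against the number of impulse responses available per second.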

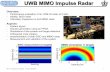

The final product is very robust and fits in one box. It includes two SMA connectors for the receive antennas, one for the transmit antenna, a standard power supply connector (230 V), a TCP/IP LAN connector and a power supply switch. The M-sequence UWB radar device is shown in Figure 1.7:

Figure 1.7: M-sequence UWB radar device

In a conventional pulse radar system one short pulse is transmitted and the impulse response of all targets, the radar device and the antenna system is received. In the M-sequence radar the M-sequence is transmitted periodically and in the receiver the received signal is correlated with the ideal M-sequence. In the ideal case, when the transmitter is directly connected to the receiver, the output after correlation is also one short pulse with a duration of one chip. In practice this means that measurement results from a pulse radar and from an M-sequence radar are similar, and all processing methods for calibration and imaging can be applied in the same way.
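The correlation step can be illustrated with a small simulation. This is only a sketch under stated assumptions: the feedback taps (9, 5), the delay of 137 chips, the random seed and the FFT-based circular correlation are illustrative choices, not details of the real receiver.

```python
import numpy as np

def m_sequence(n_stages, taps):
    """One period of a bipolar M-sequence from a Fibonacci shift register (assumed taps)."""
    state = [1] * n_stages
    bits = []
    for _ in range(2 ** n_stages - 1):
        bits.append(state[-1])
        feedback = 0
        for tap in taps:
            feedback ^= state[tap - 1]
        state = [feedback] + state[:-1]
    return 1 - 2 * np.array(bits)

rng = np.random.default_rng(0)              # fixed seed for repeatability
reference = m_sequence(9, taps=(9, 5))      # ideal 511 chip M-sequence
true_delay = 137                            # hypothetical delay in chips
snr_db = 6.0
noise_std = 10 ** (-snr_db / 20)            # signal power is 1 for +/-1 chips

# Received signal: circularly delayed M-sequence plus white Gaussian noise
received = np.roll(reference, true_delay) + rng.normal(0.0, noise_std, reference.size)

# Circular cross-correlation with the ideal M-sequence, computed via FFT
spectrum = np.fft.fft(received) * np.conj(np.fft.fft(reference))
correlation = np.fft.ifft(spectrum).real
estimated_delay = int(np.argmax(correlation))

print("true delay:", true_delay, "estimated delay:", estimated_delay)
```

The correlator output contains one narrow peak at the delay, comparable to the short pulse a pulse radar would deliver, which is why the same calibration and imaging methods apply to both.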


2 Data Preprocessing

Before any imaging method can be applied to the measured data, the data have to be preprocessed. There are many unwanted effects and unknowns that should be reduced as much as possible. The main effects, and methods to reduce them, will be described in the following sections, right after the basic TWR model and the data representation. In addition, one part of this chapter focuses on wave penetration through the wall.

2.1 Through Wall Radar Basic Model

The TWR basic model specifies all processes which contribute to the measured data. These processes are caused mainly by the measurement system, the measurement environment and, to a small extent, also by the measured objects. The model will be described for a TWR system with one RX and one TX stationary antenna.

Antenna signal effects will be shown for both the RX and TX antennas. The electric field generated by the TX antenna is called $\vec{E}_{rad}$ and can be modeled by [59], [100]:

$$\vec{E}_{rad}(r,\theta,\varphi,t) = \frac{1}{2\pi r c}\,\vec{h}_{TX}(\theta,\varphi,t) * \frac{\sqrt{Z_0}}{\sqrt{Z_c}}\,\frac{dV_S(t)}{dt} \tag{2.1}$$

where $r$, $\theta$, $\varphi$ are the spatial coordinates, $t$ is time, $Z_c$ and $Z_0$ are the impedances of the feed cable and of free space respectively, and $c$ is the speed of light in vacuum. The voltage time evolution applied to the TX antenna is denoted $V_S(t)$, $\vec{h}_{TX}$ is the vectorial transfer function of the emitting antenna and $*$ represents convolution. The received voltage from the RX antenna, $V_R$, is then:

$$V_R(t) = \frac{\sqrt{Z_c}}{\sqrt{Z_0}}\,\vec{h}_{RX}(\theta,\varphi,t) * \vec{E}_{meas}(t) \tag{2.2}$$

where $\vec{h}_{RX}$ is the transfer function of the receiving antenna, and $\vec{E}_{meas}(t)$ is the field at the RX antenna. $V_S(t)$ and $V_R(t)$ are scalars because the antenna integrates all spatial components into one signal. The process that transforms $\vec{E}_{rad}$ into $\vec{E}_{meas}$ depends on the different signal travel paths and is denoted temporarily as the unknown impulse response $\vec{X}(r,\theta,\varphi,t)$. The received voltage thus becomes:

$$V_R(t) = \frac{\sqrt{Z_c}}{\sqrt{Z_0}}\,\vec{h}_{RX}(\theta,\varphi,t) * \vec{X}(r,\theta,\varphi,t) * \vec{E}_{rad}(r,\theta,\varphi,t) \tag{2.3}$$

If we take the $1/r$ dependency out of the definition of $\vec{E}_{rad}$, we can combine all antenna terms into one term $\vec{h}_A$:

$$V_R(t) = \frac{1}{r}\,\vec{h}_A(\theta,\varphi,t) * \vec{X}(r,\theta,\varphi,t) \tag{2.4}$$

$\vec{h}_A$ contains the contributions of the measurement system to the signal. It can be measured accurately in lab conditions, but this is a very difficult and exacting challenge that requires very precise measurements.

Antenna crosstalk effects mean the signal traveling directly from the transmitter to the receiver. This signal is thus constantly present in all measurements and remains constant for a fixed antenna setup. The impulse response of the direct path between both antennas will be expressed as $\vec{C}_A$. The expression for the received signal becomes:

$$V_R(t) = \frac{1}{d_{TXRX}}\,\vec{h}_A(\theta,\varphi,t) * \vec{C}_A + \frac{1}{r}\,\vec{h}_A(\theta,\varphi,t) * \vec{X}_1 \tag{2.5}$$

where $\vec{X}_1$ represents the other (non-direct) paths between the antennas, and $d_{TXRX}$ is the direct distance between TX and RX. The first term can be measured (calibrated) by pointing the antenna to the sky or to an absorber, so that $\vec{X}_1$ becomes zero. It can thus be easily subtracted from any measured data. The second term contains the information about a possible target presence, and is thus the most interesting one.
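The subtraction of the calibrated first term can be sketched as follows. The array shapes, the synthetic pulse positions and the function name `remove_crosstalk` are assumptions made for illustration; a real calibration measurement would replace the synthetic crosstalk reference.

```python
import numpy as np

def remove_crosstalk(measurements, crosstalk_reference):
    """Subtract a calibrated crosstalk impulse response from every measurement.

    measurements: array of shape (n_measurements, n_chips)
    crosstalk_reference: array of shape (n_chips,), e.g. recorded with the
    antennas pointed to the sky or to an absorber
    """
    return measurements - crosstalk_reference[np.newaxis, :]

n_chips = 511
crosstalk = np.zeros(n_chips)
crosstalk[40] = 1.0                          # synthetic direct TX-RX pulse

target_only = np.zeros((3, n_chips))
target_only[:, 200] = 0.05                   # synthetic weak target response

measured = target_only + crosstalk           # crosstalk dominates the raw data
cleaned = remove_crosstalk(measured, crosstalk)

print("largest raw sample:", measured.max(), "largest cleaned sample:", cleaned.max())
```

The sketch relies on the crosstalk staying constant for a fixed antenna setup, as stated above; any drift between calibration and measurement would leave a residual.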

$\vec{X}_1$ contains a combination of the impulse responses of the target $\vec{h}_T$ and the clutter $\vec{h}_C$:

$$\vec{X}_1 = \vec{h}_T + \vec{h}_C \tag{2.6}$$

Here targets are the scatterers behind the wall that we want to detect, while clutter is anything else which adds misinformation to the signal. Typical examples of clutter, present in most measurements, are multiple reflections inside the wall, multiple reflections between walls inside the room, non-constant permittivity along the wave propagation path, moving trees in outdoor measurements, false objects received from behind the antennas etc. The model of the received signal (2.5) becomes:

$$V_R(t) = \frac{1}{d_{TXRX}}\,\vec{h}_A(\theta,\varphi,t) * \vec{C}_A(t) + \frac{1}{r}\,\vec{h}_A(t) * \left(\vec{h}_T(t) + \vec{h}_C(t)\right) + n \tag{2.7}$$

The last term $n$ is added to represent measurement noise, which is not added to the signal due to a reflection but due to the measurement system itself.

Even if most variables in (2.7) can be measured, it is very difficult to measure them precisely. In practical measurements only $V_R(t)$ is measured, which is the sum of all these terms; this is the first source of systematic error.

2.2 Through Wall Penetrating

Through wall imaging requires the wave to penetrate specific building materials such as concrete blocks, clay brick, drywall, asphalt shingle, fibreglass insulation etc. The transmitted signal is attenuated due to free space loss, scattering from the air-wall interface, loss in the wall, and scattering from objects. The loss due to the air-wall interface, $L_{air-wall}$, depends on the orientation of the electric field with respect to the incident plane. If the electric field is parallel to the incident plane, the loss can be calculated by [116]:

$$L_{air-wall} = 20\log_{10}\left(\frac{2\sqrt{\varepsilon_r - \sin^2\theta_i}}{\varepsilon_r\cos\theta_i + \sqrt{\varepsilon_r - \sin^2\theta_i}}\right) \tag{2.8}$$

where $\theta_i$ is the incident angle and $\varepsilon_r$ is the relative dielectric constant of the wall. The propagation loss inside the wall, $L_{wall}$, is related to the wall thickness $h$, its dielectric property, and its loss tangent. $L_{wall}$ can be estimated by:

$$L_{wall} = 20\log_{10}\left[\exp(-\alpha h)\right] \tag{2.9}$$

where $\alpha$ is the attenuation constant of the wall, given by:

$$\alpha = \frac{2\pi f}{c}\,\sqrt{\varepsilon'_r}\,\sqrt{\frac{1}{2}\left(\sqrt{1 + \tan^2\delta} - 1\right)} \tag{2.10}$$

where $\varepsilon'_r$ is the real part of the wall dielectric constant, and $\tan\delta$ is the loss tangent of the wall, given by:

$$\tan\delta = \frac{\sigma}{\omega\varepsilon} \tag{2.11}$$

where σ is the conductivity, ω is the angular frequency, and ε is the absolute permittivity of the wall. Equation (2.10) shows that the loss in the wall depends on its dielectric constant and loss tangent. From (2.11) it can be seen that the loss tangent is a function of frequency [116]. In practice, waves are able to penetrate concrete walls up to approximately 3 - 4 GHz. Higher frequencies are significantly attenuated and do not penetrate the wall. Radars with higher frequencies are used e.g. for the detection of objects under clothes, because such high frequencies penetrate clothes but do not penetrate the human body.
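Equations (2.8) to (2.11) can be evaluated with a few lines of code. The sketch below is only an illustration: the material values (relative permittivity 6, conductivity 0.05 S/m) are hypothetical and not measured properties of any wall in this work, and the absolute permittivity is assumed to be the product of the vacuum permittivity and the relative permittivity.

```python
import math

C = 299_792_458.0           # speed of light in vacuum [m/s]
EPS_0 = 8.8541878128e-12    # vacuum permittivity [F/m]

def air_wall_loss_db(eps_r, theta_i):
    """Loss at the air-wall interface, eq. (2.8); theta_i in radians."""
    root = math.sqrt(eps_r - math.sin(theta_i) ** 2)
    return 20 * math.log10(2 * root / (eps_r * math.cos(theta_i) + root))

def loss_tangent(sigma, freq_hz, eps_r):
    """Loss tangent, eq. (2.11), with eps = EPS_0 * eps_r (assumption)."""
    return sigma / (2 * math.pi * freq_hz * EPS_0 * eps_r)

def attenuation_constant(freq_hz, eps_r_real, tan_delta):
    """Attenuation constant of the wall, eq. (2.10), in 1/m."""
    inner = 0.5 * (math.sqrt(1 + tan_delta ** 2) - 1)
    return (2 * math.pi * freq_hz / C) * math.sqrt(eps_r_real) * math.sqrt(inner)

def wall_loss_db(alpha, thickness_m):
    """Propagation loss inside the wall, eq. (2.9)."""
    return 20 * math.log10(math.exp(-alpha * thickness_m))

# Hypothetical wall: eps_r = 6, sigma = 0.05 S/m, thickness 0.6 m, normal incidence
for freq_ghz in (1, 2, 3, 4):
    f = freq_ghz * 1e9
    tan_d = loss_tangent(0.05, f, 6.0)
    alpha = attenuation_constant(f, 6.0, tan_d)
    print(freq_ghz, "GHz:",
          round(air_wall_loss_db(6.0, 0.0), 2), "dB interface loss,",
          round(wall_loss_db(alpha, 0.6), 2), "dB loss inside the wall")
```

With a constant conductivity this simple model does not reproduce the steep rise of attenuation seen in real walls, so it should be read as a way to explore the formulas rather than as a prediction for a particular wall.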

An example of through wall attenuation is shown in Figure 2.1, which compares a through the air measurement with a through the wall measurement. Both measurements were made under the same conditions; the only difference was the 60 cm thick concrete wall between the antennas in the through wall measurement. Frequencies from 1 GHz to 3 GHz are attenuated almost uniformly, by approximately 20 dB. Frequencies around 4 GHz are attenuated by around 60 dB, which is more than the dynamic range of our radar device. The through wall limit of approximately 3 GHz is also a limit on image resolution: the higher the frequency used, the better the image resolution that can be obtained. This is why it is not possible to obtain high image resolution from through wall measurements. The resolution limit is around tens of centimeters.

Further diagrams and tables of wall penetration losses and frequency characteristics for different walls can be found in [2], [4], [78], [63].

Figure 2.1: Through the air and through the wall measurement (amplitude [dB] versus frequency [GHz]; curves: through the air measurement, through the wall measurement)

2.3 Through Wall Radar Data Representation

Three types of data representation are used in most bistatic radar measurements: A-scan, B-scan and C-scan [28], [59], [29]. Bistatic means that one antenna transmits and another antenna receives, unlike the monostatic case where a single antenna is used for both transmitting and receiving.

An A-scan is obtained by the acquisition of one TWR time signal (one impulse response); in our case it has 511 chips. It is assumed that the radar system is stationary or moves very slowly. In reality all signals arriving at the antenna at the same time are superposed with a weight that depends on the direction of arrival (the antenna pattern). An A-scan thus contains, for each time t, all signals arriving from all directions at that time. Figure 2.2 shows an example of an unprocessed A-scan. It is a measurement of a metal plate (1 m × 0.5 m) placed 1 meter behind a wall. The X-axis represents the propagation time of one impulse response expressed in number of chips, the Y-axis represents the amplitude of the received signal.

One A-scan provides only a very limited amount of information, therefore the information in more than one A-scan has to be combined. In order to have a more complete view of the area under study, the TWR system is moved, e.g. along the wall. This movement is said to be part of a scan, i.e. movement on a more or less regular grid. If the movement is quasi linear, with a nearly constant velocity, then a number of A-scans are acquired at positions of the measurement system which are almost equidistant. This set of 1D A-scans can thus be assembled into a two dimensional (2D) structure and visualized as an image. This 2D dataset is called a B-scan. Usually it is visualized with the scanning direction (distance) horizontally and the time vertically. Figure 2.3 shows an example of the same unprocessed B-scan displayed twice; the two images differ only in the colormap chosen in Matlab. It can be seen that the choice of colormap can be a little confusing and can emphasize or attenuate small amplitudes, so it has to be made carefully. From this point of view it is sometimes better to show A-scans step by step at points of interest, or to use a 3D image. The measurement system was placed 1 meter in front of the front wall and moved along it over 2 meters. 150 impulse responses were collected during the movement.

Figure 2.2: Example of A-scan, metal plate behind wall (amplitude versus time [chips]; labeled responses: crosstalk, wall, metal plate)

Figure 2.3: Example of B-scan, metal plate behind wall, a) Colormap Jet, b) Colormap HSV (time [chips] versus impulse responses in scanning points; labeled responses: crosstalk, front wall, metal plate, rear wall)

A B-scan can also be represented by a 3D image, where the X-axis represents the propagation time of one impulse response expressed in number of chips, the Y-axis represents the movement direction and the Z-axis represents the amplitude of the received signal. An example of such an image is shown in Figure 2.4.

Figure 2.4: Example of B-scan, metal plate behind wall, 3D image (axes: time [chips], impulse responses, amplitude; labeled responses: crosstalk, front wall, metal plate, rear wall)

The C-scan adds one more dimension to the measured data. It is possible to acquire a set of A-scans where, for each acquisition, the radar system is placed at a well known position in a regular grid. In this way a three dimensional data set is obtained, with the scanning coordinates in the X and Y directions and the time/depth coordinate in the Z direction. The problem is that there is one more dimension to show (as in the A-scan and B-scan): the amplitude of the received signal. Visualizing 4 dimensions in one image is not easy, so in practice only one X-Y plane at a specific depth Z is shown.
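The three data representations map naturally onto arrays of increasing dimension. The sketch below uses random numbers in place of measurements; the 20 × 30 scanning grid and the chosen depth index are hypothetical, while the 511 chips and 150 impulse responses follow the values given in the text.

```python
import numpy as np

rng = np.random.default_rng(1)
n_chips = 511                                   # length of one impulse response

# A-scan: one impulse response, amplitude versus time [chips]
a_scan = rng.normal(size=n_chips)

# B-scan: A-scans collected along one scanning direction,
# indexed as [scanning position, time]
n_positions = 150
b_scan = rng.normal(size=(n_positions, n_chips))

# C-scan: A-scans collected on a regular X-Y grid,
# indexed as [x position, y position, time/depth]
c_scan = rng.normal(size=(20, 30, n_chips))     # hypothetical 20 x 30 grid

# One A-scan taken out of the B-scan, and one X-Y plane at a fixed depth
a_scan_at_position_10 = b_scan[10, :]
depth_index = 200                               # hypothetical depth of interest
xy_plane = c_scan[:, :, depth_index]

print(a_scan.shape, b_scan.shape, c_scan.shape, xy_plane.shape)
```

Slicing the C-scan at a fixed depth index gives the single X-Y plane mentioned above, which can then be displayed as an ordinary 2D image.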

2.4 Data Calibration and Preprocessing

There are several types of calibration and preprocessing methods that help to remove unwanted artifacts from the measured data, such as finding time zero, crosstalk removal, oversampling, deconvolution techniques, removal of multiple echoes, target resonances etc. Most of these calibration and preprocessing operations are extremely important and affect the imaging results on a large scale. For example, the crosstalk does not contain any information about the scanned object, but it mostly represents the biggest part of the signal. Time zero estimation is very important for correctly focusing the measured hyperbolas back to one point, as will be described in section 3; if the time zero estimate is incorrect even by a few chips, the artifacts after imaging will be considerable.

It has to be mentioned that through wall imaging is much more problematic than detection or localization of moving objects. Whereas in moving object detection background subtraction can be applied, which solves a lot of problems, in imaging the antenna system is moving, everything that is measured is background, and it cannot be subtracted. Background subtraction eliminates the influence of the antennas, radar electronics, walls etc. on the measured objects. Therefore the calibration in imaging has to be done with great precision, and it significantly influences the results.

Time zero is the time instant (or the corresponding digitized sample) where the actual signal starts. The TX antenna transmits the M-sequence periodically. The


exact time at which the TX antenna starts emitting the first chip of the M-sequence is time zero ($t_0$) [59]. This time depends on the lengths of the antenna cables and, in our case, also on one unpredictable value, because the M-sequence generator starts generating at a different chip position every time the power supply is reconnected. Finding time zero in our case means rotating all received impulse responses so that their first chip corresponds to the spatial position of the TX antenna. There are several methods for finding the number of chips by which to rotate the impulse responses. The most often used method relies on the crosstalk signal and is very well described in [117]. The principle is very simple: any measured object can be used, and the position in the data that corresponds to the well-known spatial position of the object has to be found. Alternatively, since the geometry of the antenna system is known and the transmission medium of the crosstalk is air, the time needed for the signal to arrive at RX can easily be estimated as:

$$t_{TXRX} = \frac{d_{TXRX}}{c} \qquad (2.12)$$

with $t_{TXRX}$ the estimated travel time, $d_{TXRX}$ the known distance between the antennas and $c$ the velocity of propagation in air. As can be seen in Figure 2.2, the crosstalk has both a negative and a positive peak. The study [117] has shown that the best and most reliable part of the crosstalk response for estimating its position is the first peak.
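The rotation described above can be sketched as follows. This is a minimal illustration, not the procedure of [117]: the function names, the relative threshold used to find the "first peak", and the chip duration passed by the caller are assumptions of this example. It also assumes that the crosstalk is the first strong peak in array order.

```python
import numpy as np

C_AIR = 299_792_458.0  # propagation velocity in air, approximated by c [m/s]


def first_peak_index(a_scan, rel_threshold=0.5):
    """Index of the first local extremum whose magnitude exceeds
    rel_threshold * max|a_scan| (a simple stand-in for a crosstalk detector)."""
    mag = np.abs(np.asarray(a_scan, dtype=float))
    limit = rel_threshold * mag.max()
    for k in range(1, len(mag) - 1):
        if mag[k] >= limit and mag[k] >= mag[k - 1] and mag[k] > mag[k + 1]:
            return k
    return int(np.argmax(mag))


def time_zero_shift(a_scan, d_txrx, chip_duration):
    """Number of chips to rotate so that the crosstalk peak lands at t_TXRX (2.12)."""
    expected = int(round(d_txrx / C_AIR / chip_duration))
    return expected - first_peak_index(a_scan)


def apply_time_zero(b_scan, shift):
    """Circularly rotate all impulse responses (time runs along axis 0)."""
    return np.roll(b_scan, shift, axis=0)
```

A circular rotation is used because the impulse responses are periodic, so no samples are lost by the shift.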

After the crosstalk has been used to determine time zero, it may be removed from the data. The crosstalk is the part of the signal that travels directly between the emitting and receiving antennas; it is the first and often the largest peak in the A-scan signal. The removal of this crosstalk is thus important. The crosstalk can be obtained by measuring with the radar in free space, or with absorbers around it. This reference measurement can then be subtracted from the data. In practice it is very difficult to make a good quality crosstalk measurement. It can be made in an anechoic chamber, as shown in Figure 2.5 a); the result is then very close to ideal, but it is not usable, because the whole system is positioned differently than during normal measurements. Due to this change in positioning it is possible that the alignment of the antennas changes slightly because of mechanical relaxation of the system. Better results are often obtained when the crosstalk measurement is done during normal measurements, with exactly the same arrangement of the whole system. However, in this case it is very difficult to make the measurement in "free space". The crosstalk measurement inside a building is shown in Figure 2.5 b); it can be seen that lots of other artifacts from the floor, ceiling, nearby walls etc. are present. In this case only a few chips are used as the reference for crosstalk removal, e.g. from chip 80 to 150. Cutting in the time domain causes unwanted effects in the frequency domain, so a windowing function should be used. Figure 2.6 shows examples of data (metal plate behind front wall) a) before and b) after crosstalk removal.
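A windowed reference subtraction of this kind could look like the sketch below. The half-cosine taper, its length and the function name are assumptions chosen for illustration; the text only states that some windowing function should be used and gives chips 80 to 150 as an example range.

```python
import numpy as np


def remove_crosstalk(b_scan, reference, first_chip=80, last_chip=150, taper=10):
    """Subtract a windowed crosstalk reference from every A-scan.

    b_scan    : array (n_chips, n_positions), time along axis 0
    reference : array (n_chips,), crosstalk measured without a target
    Only chips first_chip..last_chip-1 of the reference are used; the edges
    are tapered with a half-cosine ramp to avoid a hard cut in time.
    """
    window = np.zeros(reference.shape[0])
    window[first_chip:last_chip] = 1.0
    ramp = 0.5 * (1.0 - np.cos(np.pi * (np.arange(taper) + 0.5) / taper))
    window[first_chip:first_chip + taper] = ramp          # rising edge
    window[last_chip - taper:last_chip] = ramp[::-1]      # falling edge
    return b_scan - (window * reference)[:, None]
```

Outside the selected chip range the data are left untouched, so artifacts present only in the reference measurement (floor, ceiling, nearby walls) are not subtracted from the scan.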

Removing the influence of the antenna and the impulse response of the whole system from the measured data is the most complex calibration process. There are many more or less complicated methods for doing it, described in [98], [28], [100], [101], [89], [5], [39]. However, the main principle is always the same. The long impulse response


[Figure 2.5 panels: a) "Crosstalk measured in anechoic chamber room", b) "Crosstalk measured inside building" with labeled features: crosstalk, other artifacts; axes: time [chips] (50 to 500), amplitude (−1 to 1)]

Figure 2.5: a) Example of crosstalk measured in anechoic chamber room with horn antennas b) Example of crosstalk measured inside building with double horn antennas

[Figure 2.6 panels: a) "Before crosstalk removing" with labeled features: crosstalk, front wall, metal plate; b) "After crosstalk removing" with labeled features: front wall, metal plate; axes: time [chips] (50 to 500), amplitude (−1 to 1)]

Figure 2.6: Metal plate behind front wall a) Before crosstalk removing b) After crosstalk removing

of the antenna causes every object that reflects the wave received by this antenna to be spread over a long interval in the data as well. The influence of the antenna impulse response on the received signal can be reduced by deconvolving the whole data set with this impulse response in the frequency domain. This is a time-consuming process and, when done manually, requires a lot of experience for adjusting many parameters. Therefore, some automatic optimization processes were developed ([98], [28] pages 298 - 310, [39]) that reduce the number of manually adjusted parameters and improve the deconvolution quality. An example of a manually made antenna deconvolution is shown in Figure 2.7. It can be seen that after deconvolution the main energy from the front wall and the metal plate is concentrated in one peak, which will improve the imaging results.
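The idea of deconvolution in the frequency domain can be sketched with a regularised inverse filter. This is only one simple variant, not the manual procedure or the optimization methods cited above: the regularisation constant `noise_level` and the function name are assumptions of this example, and the circular (FFT based) model relies on the impulse responses being periodic.

```python
import numpy as np


def deconvolve(a_scan, system_response, noise_level=1e-2):
    """Regularised frequency-domain deconvolution of one A-scan.

    a_scan          : measured impulse response (1D array)
    system_response : impulse response of antenna/system (1D array, zero-padded
                      to the A-scan length)
    noise_level     : hypothetical tuning parameter; larger values suppress
                      noise amplification at frequencies where |H| is small
    """
    n = len(a_scan)
    A = np.fft.fft(a_scan)
    H = np.fft.fft(system_response, n)
    eps = noise_level * np.max(np.abs(H)) ** 2
    # H* / (|H|^2 + eps) approaches 1/H where the system response is strong
    estimate = A * np.conj(H) / (np.abs(H) ** 2 + eps)
    return np.real(np.fft.ifft(estimate))
```

The single parameter `noise_level` stands in for the many parameters that have to be adjusted by hand in practice; a value that is too small amplifies noise, while a value that is too large leaves the reflections spread out.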

When the target is known (e.g. the land mine problem), the impulse response of this target can be measured and then used for deconvolution. There are several methods [28], such as optimum or least squares filtering, Wiener filtering, matched filtering, minimatched filtering etc., whose performance is compared by Robinson and


[Figure 2.7 panels: a) "Before deconvolution", amplitude (−1 to 1); b) "After deconvolution", amplitude (−0.4 to 1); horizontal axis: time [chips] (50 to 500); labeled features in both panels: front wall, metal plate]

Figure 2.7: Metal plate behind front wall a) Before deconvolution b) After deconvolution

Treitel [88]. The selection of a suitable filter depends on the characteristics of the signal. As the sampled data set is composed of signal, noise and clutter, the stability of the filter must be considered. Many mathematical optimization methods for finding a good solution are described in [28].

Multiple echoes or reverberations are very often produced in through wallradars. These can occur as a result of reflections between the antenna and thewall or within cables connecting the antennas to either the receiver or transmitter.The effect of these echoes can be considerably increased as a result of the applica-tion of time varying gain. This can be easily appreciated by considering the case ofan antenna spaced at distance d from the wall. Multiple reflections occur every 2d(twice the separation) and will decrease at a rate equal to the product of the wallreflection coefficient and the antenna reflection coefficient. This problem may bepartially overcome by suitable signal processing algorithms which can be applied tothe sampled time series output from either time domain or frequency domain radars.The general expectation is that all the individual reflections will be minimum delay.This expectation is generally reliable because most reflection coefficients are lessthan unity, hence the more the impulse is reflected and re-reflected the more it isattenuated and delayed [28]. As a result, the energy is concentrated at the beginningof the train of wavelets. The simplest method of removing multiple reflections is bymeans of a filter of the form:

$$F(z) = \frac{1}{1 + \alpha z^{n}} \qquad (2.13)$$

In essence, this filter subtracts a delayed (by $n$) and attenuated (by $\alpha$) value of the primary wavelet from the multiple wavelet train at a time corresponding to the arrival of the first reflection. An alternative method of resolving overlapping echoes is based on the use of the MUltiple SIgnal Classification (MUSIC) algorithm [102], [21]. It is a high resolution spectral estimation method and is used to estimate the covariance of the received signals and then perform a spectral decomposition.


Although computationally intensive, evaluation of the technique by Schmidt [102]gave promising results.
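The filter (2.13) can be applied to a sampled A-scan as a simple recursion. The sketch below reads $z$ as the unit-delay operator, which is an assumption (the text does not state the convention), and the function name is hypothetical. Under that reading, the recursion removes an echo of relative amplitude $\alpha$ that arrives $n$ samples after the primary.

```python
import numpy as np


def suppress_multiples(a_scan, alpha, n):
    """Apply F(z) = 1 / (1 + alpha * z^n), with z taken as a delay of one sample.

    Implemented as the recursion y[k] = x[k] - alpha * y[k - n].
    """
    y = np.array(a_scan, dtype=float)
    for k in range(n, len(y)):
        # y[k - n] has already been filtered when sample k is processed
        y[k] -= alpha * y[k - n]
    return y
```

In practice $\alpha$ and $n$ would have to be estimated, for example from the antenna to wall distance and the reflection coefficients mentioned above.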

The application of analytical methods of target discrimination started with the application of Prony's method [82] to target recognition. The basis of the technique is that every object possesses a unique resonant characteristic. Hence every target can be identified in terms of its resonant characteristic. In its basic form Prony's method is inherently an ill-conditioned algorithm and is highly sensitive to noise. It can, therefore, be understood that for targets behind a wall, which is a lossy medium, the high frequency signal information is low and hence the signal to noise ratio is such as to make Prony processing very vulnerable. Indeed, Dudley [36] points out that since all real data are truncated, only approximations to the resonances are ever available, even in the limit of vanishing noise. Recent developments have improved the robustness of the method, and it is very often used in practice. There are two realizations of the Prony method, the classical method and the eigenvalue method; details of these methods are discussed in [20].
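To make the idea of resonance extraction concrete, the classical form of Prony's method can be sketched as a least-squares linear prediction followed by polynomial root finding. This is a textbook-style illustration under the assumption of noise-free, uniformly sampled data; the function name is hypothetical and the sketch is not the implementation discussed in [20].

```python
import numpy as np


def prony_poles(x, order):
    """Estimate the complex poles z_i of a signal x[k] = sum_i c_i * z_i**k.

    Step 1: fit linear prediction coefficients a_1..a_p such that
            x[k] = -(a_1 x[k-1] + ... + a_p x[k-p]) for k = p..N-1.
    Step 2: the poles are the roots of z^p + a_1 z^(p-1) + ... + a_p.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    past = np.column_stack([x[order - m:n - m] for m in range(1, order + 1)])
    coeffs, *_ = np.linalg.lstsq(past, -x[order:], rcond=None)
    return np.roots(np.concatenate(([1.0], coeffs)))
```

The magnitude of each pole gives the damping and its angle gives the resonant frequency per sample. With noisy data the estimate degrades quickly, which is the sensitivity noted above.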

All of these preprocessing methods are applied individually to each A-scan. There are several B-scan or C-scan processing methods, mostly based on migration of the data; they will be described in the next chapter.


3 Basic Radar Imaging Methods

In this chapter I will try to mention some basic methods from the very broad field of radar imaging. Most basic imaging methods, like Synthetic Aperture Radar (SAR) or many of the migration methods, were developed for airborne radar systems, ground penetrating radar (GPR), tomography, seismology or even for sound waves. They have gone through a long series of successive changes, improvements and adaptations for specific uses. As a result, they often changed their names but not their fundamentals, or acquired new names without changing their basis, which can lead to confusing situations. The concept of "SAR" is a good example: in airborne radar, SAR imaging mostly refers to synthesizing a very long antenna by combining signals received by the radar as it moves along its flight track and compensating Doppler effects [27]; in GPR, on the other hand, SAR imaging is mostly described as convolving the observed data set with the inverse of the point target response [48]. At first sight these do not look like the same thing.

To obtain a corrected image from preprocessed radar data, migration techniques are most often used. The task of imaging algorithms is to transform the time domain into the depth domain, where depth means the coordinate in the direction from the antenna to the target, in order to reduce the unfocused nature of the data. The migration algorithms perform spatial positioning, focusing, and amplitude and phase adjustments to correct the effects of the spreading or convergence of raypaths as the waves propagate. All migration algorithms are based on a linearisation of the wave scattering problem. This means that the interaction of the field inside the scatterer and between different scatterers present in the scene is neglected. This approximation is known from optics as the Born approximation [38], [19].

3.1 Backprojection vs. Backpropagation

This distinction is not observed in all publications, but most often backprojection is associated with geometrically based methods, whereas backpropagation is associated with wave equation based methods. A very good subdivision of basic imaging methods into these two categories is given in [59].

• Backprojection Algorithms: This class of algorithms contains the conventional SAR algorithm as well as simple migration algorithms such as diffraction summation migration.

• Backpropagation Algorithms: This class of algorithms contains most migration algorithms (including the well known Kirchhoff migration) as well as the wave equation based, non-conventional SAR.

The first migration methods were geometric approaches. After the introductionof the computer, more complex techniques, based on the scalar wave equation wereintroduced. A good overview of these techniques is given in [118] and [11].


3.2 SAR Imaging

In this section conventional geometrically based bistatic synthetic aperture radar imaging will be described. Synthetic aperture radar means that the antenna system is moving during data acquisition.

All geometrically based imaging methods perform only spatial positioning and focusing; amplitude and phase adjustments cannot be made, because the wave equation is not taken into account.

The basic 2D SAR spatial model is shown in Figure 3.1 a). The transmitter has coordinates $[x_{tr}, z_{tr}]$, the receiver $[x_{re}, z_{re}]$ and the point target $[x_T, z_T]$. The transmitted wave is reflected from the target uniformly in all directions. Because the antenna beam is wide, the signal reflected from the target is received not only when the antenna system is exactly over the target, but at all positions from which the target can be "seen". As a result, a point target is represented in the acquired B-scan as a hyperbola, as shown in Figure 3.1 b). Time Of Arrival (TOA) is the time the wave needs to travel from the

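[Figure 3.1 panels: a) TX and RX antennas moving along the x direction (motion indicated), z direction pointing toward the point target, curves H1 and H2; b) B-scan with x direction and t direction, measured data A(x,t) and migrated image B(x,z)]

Figure 3.1: a) 2D SAR spatial model b) B-scan of point target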

transmitter to the target and from the target to the receiver. It is given by (3.1), where $v$ is the velocity of wave propagation. This holds for constant velocity, i.e. when the whole system is in a homogeneous medium.

$$TOA = \frac{\sqrt{(x_{tr}-x_T)^2+(z_{tr}-z_T)^2}+\sqrt{(x_T-x_{re})^2+(z_T-z_{re})^2}}{v} \qquad (3.1)$$

The signal $A(x,t)$ received at a given time can be reflected from any point that lies on the locus where the TOA is constant. The process that transforms the measured data set $A(x,t)$ into the real spatial data $B(x,z)$ is called migration. The computation can be organized over the measured scene $A(x,t)$ or over the output scene $B(x,z)$. In practice, when the data are sampled, the first approach is mostly used. First of all, a spatial grid with a specified resolution is created in the $B(x,z)$ scene. One point $A(x_n,t_n)$ is added to all points in $B(x,z)$ that have the corresponding constant TOA. This is done for all


points from $A(x,t)$. The points that have the same TOA lie on the curve H, an ellipse with foci at the transmitter and receiver positions (Figure 3.1 a)). Mathematically, this process can be written as:

$$B(x_i,z_i) = \frac{1}{N}\sum_{n=1}^{N} A(x_n, TOA_n) \qquad (3.2)$$

where $A(x_n, TOA_n)$ are all points that have the same TOA, computed by (3.1) for the target position $[x_i, z_i]$.

This method geometrically focuses the hyperbolas from $A(x,t)$ into one point in $B(x,z)$. Of course, this is not an ideal approach, and the method produces a lot of artifacts.
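Equations (3.1) and (3.2) can be sketched in a few lines. The version below loops over antenna positions and, for every pixel of the output grid, picks the sample of $A(x_n, t)$ at the computed TOA (nearest-sample lookup). The function name, the argument layout, the assumption that TX and RX sit symmetrically around each scan position at height `z_ant`, and the nearest-neighbour interpolation are choices made for this illustration only.

```python
import numpy as np


def backproject(a, x_ant, t, x_grid, z_grid, v=299_792_458.0,
                tx_rx_offset=0.0, z_ant=0.0):
    """Geometric SAR imaging according to (3.1) and (3.2).

    a       : measured data A(x, t), array (n_t, n_positions)
    x_ant   : antenna system positions along the scan line [m]
    t       : uniformly sampled time axis [s]
    returns : image B(x, z), array (len(z_grid), len(x_grid))
    """
    dt = t[1] - t[0]
    xx, zz = np.meshgrid(x_grid, z_grid)
    image = np.zeros_like(xx, dtype=float)
    for n, xa in enumerate(x_ant):
        x_tr = xa - tx_rx_offset / 2.0
        x_re = xa + tx_rx_offset / 2.0
        # (3.1): transmitter -> pixel -> receiver, constant velocity v
        toa = (np.hypot(x_tr - xx, z_ant - zz)
               + np.hypot(xx - x_re, zz - z_ant)) / v
        idx = np.rint((toa - t[0]) / dt).astype(int)
        valid = (idx >= 0) & (idx < a.shape[0])
        image[valid] += a[idx[valid], n]
    return image / len(x_ant)   # (3.2): average over the N antenna positions
```

For a single point target the contributions add coherently only at the target pixel, which is the focusing of the hyperbola described above; elsewhere the partial sums remain as artifacts.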

The conflict in the names of basic imaging methods becomes evident in this simple geometrical approach. The method is often also called back projection [109], [51], [58], [31], [66] or diffraction summation [75], [88], [73], [83], [76]. Moreover, this geometrical approach is often incorrectly called Kirchhoff migration [30], [23], [22], [122], [125].

Since the topic is through wall imaging, at least one wall has to be considered. Because the wall has a different permittivity and permeability than free space, the wave changes its velocity inside the wall. A wave traveling through a medium with a different permittivity or permeability undergoes diffraction and refraction. The SAR model with a wall is shown in Figure 3.2.

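[Figure 3.2: TX and RX antennas along the x direction, z direction pointing toward the target at $[x_T, z_T]$; wall with relative permittivity $\varepsilon_r$ between the antennas and the target; in-wall path segments $d_{1inwall}$ and $d_{2inwall}$]

Figure 3.2: Spatial SAR model with wall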

The accurate calculation of the total flight time is a critical step. The velocity of the signal inside the wall is lower than in free space, which makes the flight time longer. How to correct for the velocity change inside the wall is described by Defence Research and Development Canada in [44] and [43]. The time for the wave to travel a given distance $d_{inwall}$ inside a wall is given by:

$$t_{inwall} = \frac{d_{inwall}}{v_{wall}} \qquad (3.3)$$


where $v_{wall}$ is the velocity of the signal inside the wall. The velocity of the electromagnetic signal is related to the wall permittivity and permeability by [15]:

$$v_{wall} = \frac{1}{\sqrt{\mu_{wall}\varepsilon_{wall}}} = \frac{1}{\sqrt{\mu_0\varepsilon_0\mu_{rwall}\varepsilon_{rwall}}} = \frac{c}{\sqrt{\mu_{rwall}\varepsilon_{rwall}}} \qquad (3.4)$$

where $c$ is the speed of light in free space, $\mu_{wall}$ and $\varepsilon_{wall}$ are the permeability and permittivity of the wall, $\mu_0$ and $\varepsilon_0$ are the permeability and permittivity of free space, and $\mu_{rwall}$ and $\varepsilon_{rwall}$ are the relative permeability and permittivity of the wall. For non-magnetic materials such as a concrete wall, the relative permeability is equal to one. Hence the velocity in a concrete wall is given by:

$$v_{wall} = \frac{c}{\sqrt{\varepsilon_{rwall}}} \qquad (3.5)$$

and then:

$$t_{inwall} = \frac{\sqrt{\varepsilon_{rwall}}\; d_{inwall}}{c} \qquad (3.6)$$

The time delay caused by penetrating a concrete wall, compared with free space propagation, is:

$$t_{delay} = \frac{d_{inwall}}{v_{wall}} - \frac{d_{inwall}}{c} = \frac{d_{inwall}}{c}\left(\sqrt{\varepsilon_{rwall}} - 1\right) \qquad (3.7)$$

Including $t_{delay}$ in the basic SAR imaging algorithm (3.2) makes it possible to correct for the changed velocity inside the wall; the output image will be better focused and the position of the target will be corrected as well. Specific results before and after velocity correction are shown in detail in [44] and [43]. These methods assume that the relative permittivity of the wall and its thickness are known, and that there are no other objects in which the wave velocity differs from that in free space. The method is promising when small objects behind a robust wall are investigated. However, this case is a really big challenge even if the velocity model works perfectly. There is often more than one wall, and knowledge of the permittivity or thickness is not certain in practice, especially in the case of buried walls after an earthquake, or in a police or firefighter raid, when knowledge about the object is insufficient or even nonexistent.
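As a small worked illustration of (3.7), the extra delay can be added to the free-space TOA of (3.1) once for the path into the wall and once for the path back. The function names and the idea of passing the two in-wall path lengths (labelled $d_{1inwall}$ and $d_{2inwall}$ in Figure 3.2) directly as arguments are assumptions of this sketch; finding those lengths from the refraction geometry is not shown.

```python
import math

C = 299_792_458.0  # speed of light in free space [m/s]


def wall_delay(d_inwall, eps_r):
    """Extra travel time (3.7) over a path of length d_inwall inside a
    non-magnetic wall with relative permittivity eps_r."""
    return d_inwall / C * (math.sqrt(eps_r) - 1.0)


def toa_with_wall(toa_free_space, d1_inwall, d2_inwall, eps_r):
    """Free-space TOA (3.1) corrected for the two passes through the wall."""
    return (toa_free_space
            + wall_delay(d1_inwall, eps_r)
            + wall_delay(d2_inwall, eps_r))
```

For example, with a hypothetical relative permittivity of 4, each pass through the wall takes twice as long as the same distance in free space, so the delay per pass equals $d_{inwall}/c$.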

3.3 Kirchhoff Migration

Kirchhoff migration is based on solving the scalar wave equation. The method of separation of variables for partial differential equations, together with Green's theorem, is used to solve this scalar wave equation. Kirchhoff migration theory provides a detailed prescription for computing the amplitude and phase along the wavefront and, for variable velocity, the shape of the wavefront. Kirchhoff theory shows that the summation along the hyperbola must be done with specific weights and that, for variable velocity, the hyperbola is replaced by a more general shape.


Kirchhoff migration is a mathematically complicated algorithm; it is described in depth e.g. in [73] and [118] and applied to landmine detection in [40]. In this section only a short overview of the mathematical description will be provided, with a focus on the physical interpretation of the method.

Gauss's theorem [42] and Green's theorem [17] are used in the description of Kirchhoff migration. Gauss's theorem generalizes the basic integral result (3.8) to more dimensions (3.9).

$$\int_a^b \phi'(x)\,dx = \phi(x)\Big|_a^b = \phi(b)-\phi(a) \qquad (3.8)$$

$$\int_V \vec{\nabla}\cdot\vec{A}\; dvol = \oint_{\partial V} \vec{A}\cdot\vec{n}\; dsurf \qquad (3.9)$$

where $\vec{A}$ is a vector function that might physically represent something like fluid flow or electric field, and $\vec{n}$ is the outward pointing normal to the surface bounding the volume of integration (Figure 3.3). $\vec{\nabla}\cdot\vec{A}$ denotes the divergence of $\vec{A}$. (3.9) generalizes (3.8).

Figure 3.3: Volume vector and normal to the bounding surface

In many important cases, the vector function $\vec{A}$ can be calculated as the gradient of a scalar potential, $\vec{A} = \vec{\nabla}\phi$. In this case Gauss's theorem becomes:

$$\int_V \nabla^2\phi\; dvol = \oint_{\partial V} \frac{\partial\phi}{\partial n}\; dsurf \qquad (3.10)$$

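In the case when $\phi = \phi_1\phi_2$, then $\phi' = \phi_2\phi_1' + \phi_1\phi_2'$ and (3.8) becomes:

$$\int_a^b \left[\phi_2\phi_1' + \phi_1\phi_2'\right]dx = \phi_1\phi_2\Big|_a^b \qquad (3.11)$$

or

$$\int_a^b \phi_2\phi_1'\,dx = \phi_1\phi_2\Big|_a^b - \int_a^b \phi_1\phi_2'\,dx \qquad (3.12)$$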


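An analogous formula in higher dimensions arises by substituting $\vec{A} = \phi_2\vec{\nabla}\phi_1$ into (3.9). Using the identity $\vec{\nabla}\cdot(a\vec{\nabla}b) = \vec{\nabla}a\cdot\vec{\nabla}b + a\nabla^2 b$ leads to:

$$\int_V \left[\vec{\nabla}\phi_2\cdot\vec{\nabla}\phi_1 + \phi_2\nabla^2\phi_1\right]dvol = \oint_{\partial V} \phi_2\frac{\partial\phi_1}{\partial n}\; dsurf \qquad (3.13)$$

A similar result is obtained when $\vec{A} = \phi_1\vec{\nabla}\phi_2$:

$$\int_V \left[\vec{\nabla}\phi_1\cdot\vec{\nabla}\phi_2 + \phi_1\nabla^2\phi_2\right]dvol = \oint_{\partial V} \phi_1\frac{\partial\phi_2}{\partial n}\; dsurf \qquad (3.14)$$

Finally, subtracting (3.14) from (3.13) yields:

$$\int_V \left[\phi_2\nabla^2\phi_1 - \phi_1\nabla^2\phi_2\right]dvol = \oint_{\partial V} \left[\phi_2\frac{\partial\phi_1}{\partial n} - \phi_1\frac{\partial\phi_2}{\partial n}\right]dsurf \qquad (3.15)$$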

(3.15) is known as Green's theorem. It is fundamental to the derivation of Kirchhoff migration theory. It is a multidimensional generalization of the integration by parts formula from elementary calculus and is valuable for its ability to solve certain partial differential equations. $\phi_1$ and $\phi_2$ in (3.15) may be chosen as desired to conveniently express solutions to a given problem. Typically, in the solution to a partial differential equation like the wave equation, one function is chosen to be the solution to the problem at hand and the other is chosen to be the solution to a simpler reference problem. The reference problem is usually selected to have a known analytic solution, and that solution is called a Green's function [73].

In general, the scalar wavefield $\psi$ is a solution to the Helmholtz scalar wave equation:

$$\left(\nabla^2 + k^2\right)\psi = 0 \qquad (3.16)$$

where $\nabla^2$ is the Laplacian and $k$ is the wavenumber. When the wave travels through the medium from point $x_0$ to point $x$, this Helmholtz scalar wave equation can be expressed with the aid of the Green's function $G(x_0, x)$ by:

$$\left(\nabla^2 + k^2\right)G(x_0, x) = \delta(x_0 - x) \qquad (3.17)$$

where $\delta(x_0 - x)$ is the Dirac delta function. The Green's function describes how the wave changes during travel from point $x_0$ to point $x$.

Now the Kirchhoff diffraction integral will be described. Let $\psi$ be a solution to the scalar wave equation:

$$\nabla^2\psi(\vec{x},t) = \frac{1}{v^2}\frac{\partial^2\psi(\vec{x},t)}{\partial t^2} \qquad (3.18)$$

where $v$ is the wave velocity, which does not have to be constant. When Green's theorem (3.15) is applied to (3.18) with the aid of the Helmholtz equation, Hankel


functions, a bit of luck and good mathematical skill (the complete process can be found in [73]), Kirchhoff's diffraction integral is obtained:

$$\psi(\vec{x}_0,t) = \oint_{\partial V}\left[\frac{1}{r}\left[\frac{\partial\psi}{\partial n}\right]_{t+r/v_0} - \frac{1}{v_0 r}\frac{\partial r}{\partial n}\left[\frac{\partial\psi}{\partial t}\right]_{t+r/v_0} + \frac{1}{r^2}\frac{\partial r}{\partial n}\left[\psi\right]_{t+r/v_0}\right]dsurf \qquad (3.19)$$

where $r = |\vec{x} - \vec{x}_0|$, $\vec{x}_0$ is the source position and $v_0$ is a constant velocity. In many cases this integral is derived for forward modeling, with the result that all of the terms are evaluated at the retarded time $t - r/v_0$ instead of the advanced time. This Kirchhoff diffraction integral expresses the wavefield at the observation point $\vec{x}_0$ at time $t$ in terms of the wavefield on the boundary $\partial V$ at the advanced time $t + r/v_0$. It is known from Fourier theory that knowledge of both $\psi$ and $\partial_n\psi$ is necessary to reconstruct the wavefield at any internal point.

To obtain a practical migration formula, two essential tasks are required to convert (3.19). First, the apparent need to know $\partial_n\psi$ must be addressed. Second, the requirement that the integration surface must extend all the way around the volume containing the observation point must be dropped. There are various ways to make both of these arguments. Schneider [103] removed the need for $\partial_n\psi$ by using a dipole Green's function with an image source above the recording plane, which vanishes at $z = 0$ and cancels the $\partial_n\psi$ term in (3.19). Wiggins [111] adapted Schneider's technique to rough topography. Docherty [35] showed that a monopole Green's function can also lead to the accepted result, and once again challenged Schneider's argument that the integral over the infinite hemisphere can be neglected. After all, migration by summation along diffraction curves or by wavefront superposition has been done for many years. Although Schneider's derivation has been criticized, his final expressions are considered correct.

As a first step in adapting (3.19) it is usually considered appropriate to discard the term $\frac{1}{r^2}\frac{\partial r}{\partial n}[\psi]_{t+r/v_0}$. This is called the near-field term and it decays more strongly with $r$ than the other two terms. Then the surface $S = \partial V$ is taken as the $z = 0$ plane, $S_0$, plus the surface infinitesimally below the reflector, $S_z$, and finally these surfaces are joined at infinity by vertical cylindrical walls, $S_\infty$. The geometry for Kirchhoff migration is shown in Figure 3.4. The integration surface is $S_0 + S_z + S_\infty$, but only $S_0$ contributes meaningfully to the estimate of the backscattered field at $\vec{x}_0$. The integration over $S_\infty$ may contribute, but it can never be realized due to finite aperture limitations, and its neglect may introduce unavoidable artifacts. With these considerations, (3.19) becomes:

$$\psi(\vec{x}_0,t) = \oint_{S_0}\left[-\frac{1}{r}\left[\frac{\partial\psi}{\partial z}\right]_{t+r/v_0} + \frac{1}{v_0 r}\frac{\partial r}{\partial z}\left[\frac{\partial\psi}{\partial t}\right]_{t+r/v_0}\right]dsurf \qquad (3.20)$$

where the signs on the terms arise because $\vec{n}$ is the outward normal and $z$ is increasing downward, so that $\partial_n = -\partial_z$.


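[Figure 3.4: source at $X_s$ and receiver at $X$ on the surface $S_0$, scatterpoint at $X_0$ on the surface $S_z$, ray of length $r$ at angle $\theta$, image source, side surfaces $S_\infty$]

Figure 3.4: The geometry of Kirchhoff migration; from [73]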

Now, $\partial_z\psi$ must be evaluated. Figure 3.4 shows the source wavefield being scattered from the reflector at $\vec{x}_0$, which is called the scatterpoint. A simple model for $\psi$ is that it is approximately the wavefield from a point source, placed at the image source location, that passes through the scatterpoint to the receiver. This can be expressed as:

$$\psi(\vec{x},t) \sim \frac{1}{r}A(t - r/v) = \frac{[A]_{t-r/v}}{r} \qquad (3.21)$$

where $A(t)$ is the source waveform at the scatterpoint. Using the chain rule, neglecting the near-field term and substituting into (3.20), the following result is obtained:

$$\psi(\vec{x}_0,t) = \oint_{S_0}\frac{2}{vr}\frac{\partial r}{\partial z}\left[\frac{\partial\psi}{\partial t}\right]_{t+r/v_0}dsurf \qquad (3.22)$$

where $v = v_0$. Since $r = \sqrt{(x-x_0)^2 + (y-y_0)^2 + (z-z_0)^2}$, $\partial r/\partial z$ can be written as:

$$\frac{\partial r}{\partial z} = \frac{\partial}{\partial z}\sqrt{(x-x_0)^2 + (y-y_0)^2 + (z-z_0)^2} = \frac{z}{r} = \cos\theta \qquad (3.23)$$

where $\theta$ is the vertical angle between the receiver location and the ray to the scatterpoint. With this, the final formula for the scattered wavefield just above the reflector is:

$$\psi(\vec{x}_0,t) = \oint_{S_0}\frac{2\cos\theta}{vr}\left[\frac{\partial\psi}{\partial t}\right]_{t+r/v}dsurf \qquad (3.24)$$

(3.24) is a form of direct (non-recursive) wavefield extrapolation, and it is not yet a migration equation. A migration equation has to estimate reflectivity, not just the scattered wavefield, and for this purpose a model relating the wavefield to the reflectivity is required. The simplest such model is the exploding reflector model [70], which asserts that the reflectivity is identical to the downward


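continued scattered wavefield at $t = 0$, provided that the downward continuation is done with $\tilde{v} = v/2$. Thus, a wave migration equation follows immediately from equation (3.24) as:

$$\psi(\vec{x}_0,0) = \oint_{S_0}\frac{2\cos\theta}{\tilde{v}r}\left[\frac{\partial\psi}{\partial t}\right]_{r/\tilde{v}}dsurf = \oint_{S_0}\frac{4\cos\theta}{vr}\left[\frac{\partial\psi}{\partial t}\right]_{2r/v}dsurf \qquad (3.25)$$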

Finally, this is the Kirchhoff migration equation. This result was derived by many authors including Schneider [103] and Scales [99]. It expresses migration as summation along hyperbolic travel paths through the input data space. The hyperbolic summation is not obvious at first sight, but it is indicated by the notation $[\partial_t\psi]_{2r/v}$, which means that the partial derivative is to be evaluated at the time $2r/v$. As $\partial_t\psi(\vec{x},t)$ is integrated over the $z = 0$ plane, only those specific traveltime values are selected by:

$$t = \frac{2r}{v} = \frac{2\sqrt{(x-x_0)^2 + (y-y_0)^2 + (z-z_0)^2}}{v} \qquad (3.26)$$

which is the equation of a zero-offset diffraction hyperbola.

In addition to diffraction summation, (3.25) requires that the data be scaled by $4\cos\theta/(vr)$ and that the time derivative be taken before summation. These additional details were not indicated by the simple geometric theory of section 3.2 and are major benefits of Kirchhoff theory. It is this sort of correction that is necessary to move towards the goal of creating bandlimited reflectivity. The same correction procedures are contained implicitly in f-k migration, which will be described in section 3.4. Kirchhoff migration is one of the most adaptable migration schemes available. It can be easily modified to account for such difficulties as topography, irregular recording geometry, pre-stack migration, converted wave imaging etc. Computational cost is one of the few disadvantages of this method.
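The difference from the geometric summation of section 3.2 can be made concrete with a discretised form of (3.25) for a single scan line. The sketch below is an illustration only: it replaces the surface integral over the $z = 0$ plane by a sum over receiver positions along one line (so amplitudes are not those of the full 3D formula), uses a nearest-sample lookup, and assumes zero-offset data and image depths greater than zero. The function name and argument layout are hypothetical.

```python
import numpy as np


def kirchhoff_migrate(data, x_rec, t, x_grid, z_grid, v):
    """Weighted diffraction summation following (3.25) and (3.26).

    data    : zero-offset data psi(x, z=0, t), array (n_t, n_receivers)
    x_rec   : receiver positions along the line [m], uniformly spaced
    t       : uniformly sampled time axis [s]
    z_grid  : image depths [m], all > 0 (r must not vanish)
    returns : image, array (len(z_grid), len(x_grid))
    """
    dt = t[1] - t[0]
    dx = x_rec[1] - x_rec[0]
    # time derivative is taken before the summation
    dpsi_dt = np.gradient(data, dt, axis=0)
    xx, zz = np.meshgrid(x_grid, z_grid)
    image = np.zeros_like(xx, dtype=float)
    for n, xr in enumerate(x_rec):
        r = np.hypot(xx - xr, zz)
        cos_theta = zz / r
        idx = np.rint((2.0 * r / v - t[0]) / dt).astype(int)   # (3.26)
        valid = (idx >= 0) & (idx < data.shape[0])
        weight = 4.0 * cos_theta / (v * r)                     # scaling in (3.25)
        image[valid] += weight[valid] * dpsi_dt[idx[valid], n] * dx
    return image
```

Compared with the plain summation of (3.2), the only changes are the time derivative and the weight $4\cos\theta/(vr)$, which is exactly what the Kirchhoff theory adds.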

3.4 f-k Migration

Wave equation based migration can also be done in the frequency domain. In 1978, Stolt showed that the migration problem can be solved by Fourier transform [106]. The basic principle will be described for the 2D problem. The complete mathematical solution is shown very precisely in [106] and [73], so only the basic relations and the principle of the method are shown in this work.

The scalar wavefield $\psi(x,z,t)$ is a solution to:

$$\nabla^2\psi - \frac{1}{v^2}\frac{\partial^2\psi}{\partial t^2} = 0 \qquad (3.27)$$

where $v$ is the constant wave velocity. It is desired to compute $\psi(x,z,t=0)$, which is the wave at time zero, the time when the point with coordinates $[x, z]$ starts radiating.


Meanwhile, $\psi(x,z=0,t)$ is given by the measurement and expresses the wave at $z = 0$, where the antennas are positioned. The wavefield can also be expressed in the frequency $(k_x, f)$ domain; $\psi(x,z,t)$ is then computed by the inverse Fourier transform:

$$\psi(x,z,t) = \iint \phi(k_x,z,f)\,e^{2\pi i(k_x x - ft)}\,dk_x\,df \qquad (3.28)$$

where cyclical wavenumbers and frequencies are used and the Fourier transform convention uses a + sign in the complex exponential for spatial components ($k_x x$) and a − sign for temporal components ($ft$). If (3.28) is substituted into (3.27), the result after some manipulation is:

$$\iint\left\{\frac{\partial^2\phi(z)}{\partial z^2} + 4\pi^2\left[\frac{f^2}{v^2} - k_x^2\right]\phi(z)\right\}e^{2\pi i(k_x x - ft)}\,dk_x\,df = 0 \qquad (3.29)$$

If $v$ is constant, then the left-hand side of (3.29) is the inverse Fourier transform of the term in curly brackets. From Fourier theory it is known that if a signal is zero in one domain, it is zero in the other domain as well. This results in:

$$\frac{\partial^2\phi(z)}{\partial z^2} + 4\pi^2 k_z^2\,\phi(z) = 0 \qquad (3.30)$$

where the wavenumber $k_z$ is defined by

$$k_z^2 = \frac{f^2}{v^2} - k_x^2 \qquad (3.31)$$

(3.30) and (3.31) are a complete reformulation of the problem in the (kx, f) domain.The boundary condition is now φ (x, z = 0, t) which is the Fourier transform, over(x, t), of ψ (x, z, t). (3.30) is a second-order differential equation with exact solutione±2πikzz, Thus the unique solution can be written by substitution:

φ (kx, z, f) = A (kx, f) e2πikzz +B (kx, f) e−2πikzz (3.32)

where A (kx, f) and B (kx, f) are arbitrary functions of (kx, f) to be determinedfrom the boundary condition(s). The two terms on the right-hand-side of (3.32)have the interpretation of a downgoing wavefield, A (kx, f) e2πikzz, and an upgoingwavefield, B (kx, f) e−2πikzz. Downgoing is mean like in z direction and upgoing in−z direction.

It is now apparent that only one boundary condition φ (x, z = 0, t) is not enoughand the solution will be not unambiguous. To remove this ambiguity the two bound-ary condition are needed for example ψ and ∂zψ, but this is not known in our caseso the migration problem is said to be ill-posed and another trick have to be used.If both conditions were available, A and B could be found as the solutions to φ forz = 0 and it will be specified as φ0 as well as ∂φ/∂z will be specified as φz0:

$$\phi(z = 0) \equiv \phi_0 = A + B \tag{3.33}$$


and

$$\frac{\partial \phi}{\partial z}(z = 0) \equiv \phi_{z0} = 2\pi i k_z A - 2\pi i k_z B \tag{3.34}$$
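If both boundary values were known, (3.33) and (3.34) would form a linear system of two equations for $A$ and $B$. A short worked solution, valid for $k_z \neq 0$, is sketched here:

```latex
\begin{align*}
A + B &= \phi_0, &
A - B &= \frac{\phi_{z0}}{2\pi i k_z} \\
A &= \frac{1}{2}\left[ \phi_0 + \frac{\phi_{z0}}{2\pi i k_z} \right], &
B &= \frac{1}{2}\left[ \phi_0 - \frac{\phi_{z0}}{2\pi i k_z} \right]
\end{align*}
```

In this form, a purely upgoing field ($A = 0$) corresponds to $\phi_{z0} = -2\pi i k_z \phi_0$, so that $B = \phi_0$.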

This ill-posed problem is solved, and the ambiguity removed, by assuming one-way waves. At the receiver position the wave cannot be downgoing, only upgoing. This allows the solution:

$$A(k_x, f) = 0 \quad \text{and} \quad B(k_x, f) = \phi_0(k_x, f) \equiv \phi(k_x, z = 0, f) \tag{3.35}$$

Then, the wavefield can be expressed as the inverse Fourier transform of φ0:

$$\psi(x, z, t) = \iint \phi_0(k_x, f)\, e^{2\pi i (k_x x - k_z z - f t)}\, dk_x\, df \tag{3.36}$$

and the migrated solution is:

$$\psi(x, z, t = 0) = \iint \phi_0(k_x, f)\, e^{2\pi i (k_x x - k_z z)}\, dk_x\, df \tag{3.37}$$

(3.37) expresses the migration process as a double integration of $\phi_0(k_x, f)$ over $f$ and $k_x$. Although the solution looks complete, it has the disadvantage that only one of the integrations, that over $k_x$, is a Fourier transform that can be computed by a fast numerical FFT. The $f$ integration is not a Fourier transform because the Fourier kernel $e^{-2\pi i f t}$ was lost when the imaging condition (setting $t = 0$) was invoked. However, another complex exponential, $e^{-2\pi i k_z z}$, shows up in (3.37). Stolt suggested a change of variables from $(k_x, f)$ to $(k_x, k_z)$ to obtain a result in which both integrations are Fourier transforms. The change of variables is defined by (3.31) and can be solved for $f$ to give:

$$f = v \sqrt{k_x^2 + k_z^2} \tag{3.38}$$

Performing the change of variables from $f$ to $k_z$ according to the rules of calculus transforms (3.37) into:

$$\psi(x, z, t = 0) = \iint \phi_m(k_x, k_z)\, e^{2\pi i (k_x x - k_z z)}\, dk_x\, dk_z \tag{3.39}$$

where

$$\phi_m(k_x, k_z) \equiv \frac{\partial f(k_z)}{\partial k_z}\, \phi_0(k_x, f(k_z)) = \frac{v k_z}{\sqrt{k_x^2 + k_z^2}}\, \phi_0(k_x, f(k_z)) \tag{3.40}$$
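The scaling factor in (3.40) is the Jacobian of the change of variables. A short worked derivation, holding $k_x$ fixed and using only (3.37) and (3.38), is sketched here:

```latex
\begin{align*}
f(k_z) &= v \sqrt{k_x^2 + k_z^2} \\
\frac{\partial f}{\partial k_z} &= \frac{v k_z}{\sqrt{k_x^2 + k_z^2}}
  = \frac{v^2 k_z}{f} \\
df &= \frac{\partial f}{\partial k_z}\, dk_z \\
\iint \phi_0(k_x, f)\, e^{2\pi i (k_x x - k_z z)}\, dk_x\, df
  &= \iint \frac{v k_z}{\sqrt{k_x^2 + k_z^2}}\, \phi_0(k_x, f(k_z))\,
     e^{2\pi i (k_x x - k_z z)}\, dk_x\, dk_z
\end{align*}
```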

(3.39) is Stolt's expression for the migrated section and forms the basis for the f-k migration algorithm. The change of variables has recast the algorithm into one in which all of the integrations can be computed with FFTs. (3.40) results from the change of variables and is a prescription for constructing the $(k_x, k_z)$ spectrum of the migrated section from the $(k_x, f)$ spectrum of the measured data.

Figure 3.5 shows some examples of the transformation from $(k_x, k_z)$ to $(k_x, f/v)$ space and vice versa, where $v$ is the constant wave velocity. The geometric relationships between $(k_x, k_z)$ and $(k_x, f/v)$ can be explained as follows. In $(k_x, f/v)$ space, the lines of constant $k_z$ are hyperbolas that are asymptotic to the dashed boundary of the wavelike region. In $(k_x, k_z)$ space, the curves of constant $f/v$ are semicircles. At $k_x = 0$, $k_z = f/v$, so these hyperbolas and semicircles intersect when the plots are superimposed. Conceptually, this can also be viewed as a consequence of the fact that the $(k_x, f)$ data and the migrated section must agree at $z = 0$ and $t = 0$.

Figure 3.5: Comparison of images in $(k_x, f/v)$ space (left side) with $(k_x, k_z)$ space (right side); from [73]. [Left panel: $f/v$ versus $k_x$, with curves of constant $k_z$ = 0.02, 0.04, 0.06 inside the wavelike region. Right panel: $k_z$ versus $k_x$, with curves of constant $f/v$ = 0.02, 0.04, 0.06.]
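The geometric relationship between the two spaces can be checked numerically. The following is a minimal sketch based only on (3.31) and (3.38); the function names `f_over_v` and `kz_from` are illustrative and not part of the original work.

```python
import numpy as np


def f_over_v(kx, kz):
    """Curve of constant k_z in (k_x, f/v) space: a hyperbola, from (3.38)."""
    kx = np.asarray(kx, dtype=float)
    kz = np.asarray(kz, dtype=float)
    return np.sqrt(kx ** 2 + kz ** 2)


def kz_from(kx, fv):
    """Curve of constant f/v in (k_x, k_z) space: a semicircle, from (3.31).

    Returns NaN outside the wavelike region, i.e. where |k_x| > f/v.
    """
    kx = np.asarray(kx, dtype=float)
    fv = np.asarray(fv, dtype=float)
    arg = fv ** 2 - kx ** 2
    return np.where(arg >= 0.0, np.sqrt(np.maximum(arg, 0.0)), np.nan)


# Same tick values as in Figure 3.5
kx = np.linspace(-0.06, 0.06, 7)
hyperbola = f_over_v(kx, 0.04)   # k_z = 0.04 drawn in (k_x, f/v) space
semicircle = kz_from(kx, 0.04)   # f/v = 0.04 drawn in (k_x, k_z) space
```

Mapping a hyperbola through `kz_from` returns the constant $k_z$ it was built from, which is the round trip that the Stolt mapping relies on.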

On a numerical dataset, this spectral mapping is the major complexity of the Stolt algorithm. Generally, it requires an interpolation in the $(k_x, f)$ domain, since the spectral values that map to grid nodes in $(k_x, k_z)$ space cannot be expected to come from grid nodes in $(k_x, f)$ space. To achieve the computation speed that is considered the strength of the Stolt algorithm, the interpolation must always be approximate, which causes artifacts in the final result. The creation of the migrated spectrum also requires that the spectrum be scaled by $v k_z / \sqrt{k_x^2 + k_z^2}$, as shown in (3.40).

The f-k migration algorithm just described is limited to constant velocity. Its use of Fourier transforms for all of the numerical integrations means that it is computationally very efficient.
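The steps of the constant-velocity algorithm can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this work: the input is assumed to be sampled as `data[it, ix]` $= \psi(x, z = 0, t)$, the depth step is chosen as $\Delta z = v \Delta t$, the spectral mapping uses plain linear interpolation, and the function name `stolt_migrate` is hypothetical. Negative frequencies are mapped to negative $k_z$ so that real input gives a real image.

```python
import numpy as np


def stolt_migrate(data, dt, dx, v):
    """Constant-velocity f-k (Stolt) migration sketch.

    data[it, ix] is psi(x, z=0, t); the result image[iz, ix] approximates
    psi(x, z, t=0) on a depth grid with spacing dz = v * dt (an assumption).
    """
    nt, nx = data.shape
    # Forward transform with the sign convention of (3.28):
    # exp(-2 pi i kx x) over x (np.fft.fft), exp(+2 pi i f t) over t (ifft * nt).
    spec = np.fft.ifft(np.fft.fft(data, axis=1), axis=0) * nt
    # Sort the frequency axis so it can be used as interpolation abscissa.
    f = np.fft.fftshift(np.fft.fftfreq(nt, dt))
    spec = np.fft.fftshift(spec, axes=0)
    kx = np.fft.fftfreq(nx, dx)
    kz = np.fft.fftfreq(nt, v * dt)

    mig = np.zeros((nt, nx), dtype=complex)
    for ix in range(nx):
        k = np.sqrt(kx[ix] ** 2 + kz ** 2)
        # Change of variables (3.38), with f taking the sign of kz.
        f_map = np.sign(kz) * v * k
        # Jacobian of (3.40); set to zero at kx = kz = 0 (a simplification).
        scale = np.divide(v * np.abs(kz), k, out=np.zeros_like(k), where=k > 0)
        # Approximate spectral mapping: linear interpolation, zero outside band.
        re = np.interp(f_map, f, spec[:, ix].real, left=0.0, right=0.0)
        im = np.interp(f_map, f, spec[:, ix].imag, left=0.0, right=0.0)
        mig[:, ix] = scale * (re + 1j * im)

    # Inverse transform of (3.39): exp(+2 pi i kx x) over kx (ifft),
    # exp(-2 pi i kz z) over kz (fft); 1/(nt*v) collects the grid spacings.
    image = np.fft.fft(np.fft.ifft(mig, axis=1), axis=0) / (nt * v)
    return image.real


# Usage: a flat reflector recorded at time sample 20 on every trace
nt, nx = 64, 8
data = np.zeros((nt, nx))
data[20, :] = 1.0
image = stolt_migrate(data, dt=1e-9, dx=0.05, v=1.5e8)
```

For a flat reflector the event maps to depth $z = v t$, so the image peak appears at depth sample 20. The linear interpolation is the approximate step mentioned above and is the main source of artifacts; a practical implementation would typically use a more accurate interpolator.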

Stolt provided an approximate technique to adapt f-k migration to a non-constant wave velocity $v(z)$. This method uses a pre-migration step called the Stolt stretch, which is followed by classical f-k migration. The idea is to perform a one-dimensional time-to-depth conversion with $v(z)$ and then convert back to a pseudo time with a constant reference velocity. The f-k migration is then performed with this reference velocity. Stolt developed the time-to-depth conversion to be done with a special velocity function derived from $v(z)$, called the Stolt velocity. This method is now known to progressively lose accuracy with increasing dip and has lost favor. A technique that can handle this problem more precisely is the phase-shift method of Gazdag, described in [45]. Unlike direct f-k migration, phase shift is a recursive algorithm that processes $v(z)$ as a system of constant-velocity layers. In the limit where the layer thickness becomes infinitesimally small, any $v(z)$ variation can be modeled. The method has a rather complicated geometric interpretation and a lengthy mathematical derivation, which is described very precisely in [45] and in a more accessible way in [73]. Only how it works for one layer is described in this work.

The method can be derived starting from (3.36). Considering the first velocity layer only, this result is valid for any depth within that layer provided that $v$ is replaced by the first layer velocity, $v_1$. If the thickness of the first layer is $\Delta z_1$, then the wavefield just above the interface between layers 1 and 2 can be written as

$$\psi(x, z = \Delta z_1, t) = \iint \phi_0(k_x, f)\, e^{2\pi i (k_x x - k_{z1} \Delta z_1 - f t)}\, dk_x\, df \tag{3.41}$$

where

$$k_{z1} = \sqrt{\frac{f^2}{v_1^2} - k_x^2} \tag{3.42}$$

(3.41) is an expression for downward continuation or extrapolation of the wavefield to the depth $\Delta z_1$. The wavefield extrapolation expression in (3.41) is more simply written in the Fourier domain to suppress the integration that performs the inverse Fourier transform:

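$$\phi(k_x, z = \Delta z_1, f) = \phi_0(k_x, f)\, e^{-2\pi i k_{z1} \Delta z_1} \tag{3.43}$$

Now consider a further extrapolation to estimate the wavefield at the bottom of layer 2 ($z = \Delta z_1 + \Delta z_2$). This can be written as:

$$\phi(k_x, z = \Delta z_1 + \Delta z_2, f) = \phi(k_x, z = \Delta z_1, f)\, T(k_x, f)\, e^{-2\pi i k_{z2} \Delta z_2} \tag{3.44}$$

where $k_{z2}$ is defined as in (3.42) with the second layer velocity $v_2$, and $T(k_x, f)$ is a correction factor for the transmission loss endured by the wave as it crossed from layer 2 into layer 1.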
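The recursive extrapolation through a stack of constant-velocity layers can be sketched as follows. This is a minimal illustration under stated assumptions: the sign of the phase term is taken from the exponent of (3.41), the transmission factor is set to $T = 1$, evanescent components are simply zeroed, and $k_z$ takes the sign of $f$ so that real data stay real. The function name `phase_shift_extrapolate` is hypothetical.

```python
import numpy as np


def phase_shift_extrapolate(spec, f, kx, velocities, thicknesses):
    """Extrapolate a (f, kx) spectrum downward through constant-velocity layers.

    spec[i_f, i_kx] is phi_0(kx, f); the result is the spectrum at the bottom
    of the last layer. T(kx, f) = 1 is assumed (no transmission loss).
    """
    F, KX = np.meshgrid(f, kx, indexing="ij")
    out = spec.astype(complex)  # astype returns a copy
    for v, dz in zip(velocities, thicknesses):
        arg = (F / v) ** 2 - KX ** 2           # k_z^2 of the layer, as in (3.42)
        wavelike = arg > 0.0
        kz = np.sign(F) * np.sqrt(np.where(wavelike, arg, 0.0))
        # Phase shift over one layer; evanescent components are discarded.
        out = np.where(wavelike, out * np.exp(-2j * np.pi * kz * dz), 0.0)
    return out


# Usage: an event at time sample 30 on every trace, pushed down by 20 samples
nt, nx, dt, dx, v = 64, 4, 1e-9, 0.05, 1.5e8
data = np.zeros((nt, nx))
data[30, :] = 1.0
spec = np.fft.ifft(np.fft.fft(data, axis=1), axis=0) * nt  # convention of (3.28)
f = np.fft.fftfreq(nt, dt)
kx = np.fft.fftfreq(nx, dx)
one_layer = phase_shift_extrapolate(spec, f, kx, [v], [20 * v * dt])
two_layers = phase_shift_extrapolate(spec, f, kx, [v, v], [10 * v * dt, 10 * v * dt])
# Back to (t, x): exp(-2 pi i f t) over f (fft / nt), exp(+2 pi i kx x) over kx
shifted = np.real(np.fft.ifft(np.fft.fft(two_layers, axis=0), axis=1)) / nt
```

Two layers with the same velocity give the same result as one layer of double thickness, and the upgoing event is seen 20 samples earlier at the deeper level. Different layer velocities only change `kz` inside the loop.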

If this method is to be applied in practice, the phase-shift algorithm [73] has to be studied in more depth in order to avoid unwanted effects and to ensure a correct implementation.

The Fourier method discussed in this section is based upon the solution of the scalar wave equation using Fourier transforms. Though Kirchhoff methods seem superficially quite different from Fourier methods, the uniqueness theorems from partial differential equation theory guarantee that they are equivalent [73]. However, this equivalence only applies to the problem for which both approaches are exact solutions to the wave equation, and that is the case of a constant velocity medium with regular wavefield sampling. In all other cases, both methods are implemented with differing approximations and can give very distinct results. Furthermore, even in the exact case, the methods have different computational artifacts [73].


3.5 Multiple Signal Classification

MUltiple SIgnal Classification (MUSIC) is widely used in signal processing problems. It was originally introduced by Schmidt and is described in detail by Therrien (1992) [108]. It is a method for estimating the individual frequencies of multiple time-harmonic signals. The use of MUSIC in imaging was first proposed by Devaney in 2000 [32], who applied the algorithm to the problem of estimating the locations of a number of point-like scatterers. A detailed description can be found in [32], [21] and [62].

MUSIC for image processing has been developed for multistatic radar systems. It is a wave-equation-based method. The basic idea of MUSIC is the formulation of the so-called multistatic response matrix, which is then used to compute the time reversal matrix, whose eigenvalues and eigenvectors are shown to correspond to different point-like targets. The multistatic response matrix is formulated using frequency domain data, which are obtained by applying the Fourier transform to the time domain data.

$N$ antennas are centered at the space points $R_n$, $n = 1, 2, ..., N$. The positions of the antennas are not necessarily restricted to be in the same plane or regularly spaced. The $n$-th antenna radiates a scalar field $\psi_n(r, \omega)$ into a half space in which one or more scatterers (targets) are embedded. $M$ ($M \leq N$) is the number of scatterers and $X_m$ is the location of the $m$-th target in space. The Born approximation is again considered [38], [19]. The waveform radiated by the $n$-th antenna, scattered by the targets and received by the $j$-th antenna is given by:

$$\psi_n(R_j, \omega) = \sum_{m=1}^{M} G(R_j, X_m)\, \tau_m(\omega)\, G(X_m, R_n)\, e_n(\omega) \tag{3.45}$$

where $G(R_j, X_m)$ and $G(X_m, R_n)$ are the Green's functions of the Helmholtz equation in the medium in which the targets are embedded, describing propagation from the target to the receiving antenna and from the transmitting antenna to the target, respectively. $\tau_m(\omega)$ represents the strength of the $m$-th target and $e_n(\omega)$ is the voltage applied at the $n$-th antenna. In this formula, the signal is considered for a single frequency $\omega$ only; the extension to multiple frequencies is straightforward. The multistatic response matrix $K$ is defined by:

$$K_{nj} = \sum_{m=1}^{M} G(R_j, X_m)\, \tau_m(\omega)\, G(X_m, R_n) \tag{3.46}$$

The scattered waveform emitted by each antenna is measured by all the antennas, so the elements of the multistatic response matrix $K$ can be calculated by:

$$K_{nj} = \frac{\psi_n(R_j, \omega)}{e_n(\omega)} \tag{3.47}$$
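The construction of the multistatic response matrix in (3.46) can be sketched for a simulated scene. The sketch assumes a homogeneous medium without a wall and uses the free-space Green's function of the three-dimensional Helmholtz equation, $G = e^{ik|r - r'|} / (4\pi |r - r'|)$; the antenna layout, target positions, target strengths and the names `green` and `response_matrix` are illustrative assumptions.

```python
import numpy as np


def green(r, rp, k):
    """Free-space Green's function of the 3D Helmholtz equation (assumed medium)."""
    d = np.linalg.norm(np.asarray(r, float) - np.asarray(rp, float), axis=-1)
    return np.exp(1j * k * d) / (4.0 * np.pi * d)


def response_matrix(antennas, targets, tau, k):
    """Multistatic response matrix K of (3.46) for point-like targets."""
    # G[j, m] = G(R_j, X_m); by reciprocity G(X_m, R_n) = G(R_n, X_m)
    G = green(antennas[:, None, :], targets[None, :, :], k)
    # K[n, j] = sum_m G[n, m] * tau[m] * G[j, m]
    return (G * tau) @ G.T


# Hypothetical scene: 8 antennas on a line at z = 0, two targets in front
c = 3.0e8
k = 2.0 * np.pi * 2.0e9 / c                      # wavenumber at 2 GHz
x = np.linspace(-0.7, 0.7, 8)
antennas = np.column_stack([x, np.zeros(8), np.zeros(8)])
targets = np.array([[-0.3, 0.0, 1.0], [0.4, 0.0, 1.5]])
tau = np.array([1.0, 0.7])
K = response_matrix(antennas, targets, tau, k)
```

With measured data, `K` would instead be filled from (3.47), one row per transmitting antenna. In the simulated case the matrix is symmetric and its rank equals the number of targets.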

The main idea of MUSIC is to decompose the space on which a self-adjoint matrix acts into two orthogonal subspaces, which represent the signal space and the noise space. Since the multistatic response matrix is complex and symmetric but not Hermitian (i.e. it is not self-adjoint), the time reversal matrix $T$ is defined from the multistatic response matrix:

$$T = K^{*} K \tag{3.48}$$

where ∗ denotes complex conjugation. The time reversal matrix has the same range as the multistatic response matrix. Moreover, it is self-adjoint. The space on which the time reversal matrix $T$ acts can be represented as the direct sum of two orthogonal subspaces. The first one is spanned by the eigenvectors of $T$ that belong to non-zero eigenvalues. If noise is present, this subspace is spanned by the eigenvectors that belong to eigenvalues greater than a certain value, which is specified by the noise level. This space is referred to as the signal space. Note that its dimension is equal to the number of point-like targets. The other subspace, which is spanned by the eigenvectors that belong to zero eigenvalues (or to eigenvalues smaller than a certain value in the case of noisy data), is referred to as the noise subspace. That is, let

$$\lambda_1 \geq \lambda_2 \geq ... \geq \lambda_M \geq \lambda_{M+1} \geq ... \geq \lambda_N \geq 0 \tag{3.49}$$

be the eigenvalues of the time reversal matrix $T$ and let $v_n$, $n = 1, 2, ..., N$, be the corresponding eigenvectors. Then the signal subspace is spanned by $v_1, ..., v_M$ and the noise subspace is spanned by the remaining eigenvectors. To detect a target, the vector $g_p$ is formed from the Green's functions:

$$g_p = \left( G(R_1, p), G(R_2, p), ..., G(R_N, p) \right)' \tag{3.50}$$

The point $P$ is at the location of one of the point-like targets if and only if the vector $g_p$ is perpendicular to all the eigenvectors $v_n$, $n = M + 1, ..., N$. In practice, the following pseudo spectrum is usually calculated:

$$D(P) = \frac{1}{\sum_{n=M+1}^{N} \left| (v_n, g_p) \right|^2} \tag{3.51}$$

The point $P$ belongs to the locations of the targets if its pseudo spectrum is very large (theoretically, in the case of exact data, this value is infinite).
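The complete procedure, from the response matrix to the pseudo spectrum (3.51), can be sketched on simulated noise-free data. As assumptions, the sketch uses the free-space Green's function of the three-dimensional Helmholtz equation (no wall), a hypothetical antenna layout and target set, and a known number of targets. With $T = K^{*}K$ as in (3.48), the noise eigenvectors satisfy $\sum_j v_{n,j}\, G(R_j, X_m) = 0$, so the product $(v_n, g_p)$ is evaluated here without conjugating $v_n$; this is a convention choice of the sketch.

```python
import numpy as np


def green(r, rp, k):
    """Free-space Green's function of the 3D Helmholtz equation (assumed medium)."""
    d = np.linalg.norm(np.asarray(r, float) - np.asarray(rp, float), axis=-1)
    return np.exp(1j * k * d) / (4.0 * np.pi * d)


def music_pseudospectrum(K, antennas, grid, k, n_targets):
    """Pseudo spectrum D(P) of (3.51) on a set of test points."""
    T = np.conj(K) @ K                       # time reversal matrix, (3.48)
    _, V = np.linalg.eigh(T)                 # eigenvalues in ascending order
    noise = V[:, : K.shape[0] - n_targets]   # eigenvectors of the smallest ones
    G = green(grid[:, None, :], antennas[None, :, :], k)   # G[p, j] = G(R_j, p)
    proj = G @ noise                         # proj[p, n] = sum_j G(R_j, p) v_n[j]
    return 1.0 / np.sum(np.abs(proj) ** 2, axis=1)


# Hypothetical scene: 8 antennas on a line at z = 0, two targets in front
k = 2.0 * np.pi * 2.0e9 / 3.0e8
x = np.linspace(-0.7, 0.7, 8)
antennas = np.column_stack([x, np.zeros(8), np.zeros(8)])
targets = np.array([[-0.3, 0.0, 1.0], [0.4, 0.0, 1.5]])
tau = np.array([1.0, 0.7])
Gt = green(antennas[:, None, :], targets[None, :, :], k)
K = (Gt * tau) @ Gt.T                        # simulated response matrix, (3.46)

# Image grid in the plane y = 0
X, Z = np.meshgrid(np.linspace(-1.0, 1.0, 21), np.linspace(0.5, 2.0, 16), indexing="ij")
grid = np.column_stack([X.ravel(), np.zeros(X.size), Z.ravel()])
D = music_pseudospectrum(K, antennas, grid, k, n_targets=2)
strongest = grid[np.argsort(D)[-2:]]         # the two largest pseudo spectrum values
```

For exact data the two largest values of `D` fall on the grid nodes at the target positions. With noisy data the number of targets has to be estimated from the eigenvalue spectrum (3.49), and a wall would require replacing `green` by a Green's function that includes it.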

The wall can be taken into account in this case by using a Green's function that includes the wall, which is a major challenge.

3.6 The Inverse Problem