The Monash Campus Grid Programme Enhancing Research with High- Performance/High- Throughput Computing

Welcome message from author

This document is posted to help you gain knowledge. Please leave a comment to let me know what you think about it! Share it to your friends and learn new things together.

Transcript

The Monash Campus Grid Programme

Enhancing Research with High-Performance/High-Throughput Computing

What is HPC? High-performance computing is about leveraging the

best and cost-effective technologies, from processors, memory chips, disks and networks, to provide aggregated computational capabilities beyond what is typically available to the enduser

high-performance -- running a program as quickly as possible

high-throughput -- running as many programs as quickly as possible within a unit of time

HPC/HTC are enabling technologies for larger experiments, more complex data analyses, achieving higher accuracy in computational models

Information Technology Services Division

Office of the CIO

The Monash Campus Grid

LaRDS (peta-scale storage)

Monash Gigabit Network

Monash Sun Grid HPC Cluster

Monash SPONGE Condor Pool

Nimrod Grid-enabled Middleware

https://confluence-vre.its.monash.edu.au/display/mcgwiki/Monash+MCG

GT2 / GT4Secure Shell

Secure Copy

GridFTP

Information Technology Services Division

Office of the CIO

WWW

Monash Sun Grid Central high-performance compute cluster (HPC + HTC capable)

Monash eResearch Centre and Information Technology Services Division

Key features:

dedicated Linux cluster with ~205 computers providing ~1,650 CPU cores

processor configurations from 2 CPU cores to up to 48 CPU cores per computer

primary memory configurations from 4 GB to up to 1,024 GB per machine

broad range of applications and development environments

flexibility in addressing our customer requirements

https://confluence-vre.its.monash.edu.au/display/mcgwiki/Monash+Sun+Grid+Overview

Information Technology Services Division

Office of the CIO

Monash Sun Grid node types

2005

2006

2008-10

2009

2008-10

2010

Information Technology Services Division

Office of the CIO

Monash Sun Grid 2010 Very-Large RAM nodes

2010

Dell R910 - four eight-core Intel Xeon (Nehalem) CPUs per node

two nodes [ 64 cores total ]

1,024 GB RAM / node

16 x 600GB 10k RPM SAS disk drives

over 640 Mflop/s

redundant 1.1 kW PSU on each

~300 Mflop/W

http://www.dell.com/us/business/p/poweredge-r910/pd

Information Technology Services Division

Office of the CIO

Monash Sun Grid 2011 Partnership Nodes with

Engineering

2010-11

Dell R815 - four 12-core AMD Opteron CPUs per node

five nodes [ 240 cores ]

128 GB RAM / node

10G Ethernet

> 2,400 Gflop/s

redundant 1.1 kW PSUs

~400 Mflop/W

http://www.dell.com/us/business/p/poweredge-r815/pd

Information Technology Services Division

Office of the CIO

Monash Sun Grid Summary

NameVintag

eNode

Core Count

Gflop/sPower Req’t

Mflop/W

MSG-I 2005 V20z 70 336 ~17 kW 20 Mflop/W

MSG-II 2006 X2100 64 332 ~11 kW 42 Mflop/W

MSG-IIIe 2007 X6220 120 624 ~7.2 kW 65 Mflop/W

MSG-IV 2008 X4600 96 885 ~3.6 kW 250 Mflop/W

MSG-III 2009 X6250 720 7200 ~23 kW 330 Mflop/W

MSG-III 2010 X6250 240 2400 ~7 kW 330 Mflop/W

MSG-gpu 2010 Dell 80> 800 +

18,660?? ??

MSG-vlm 2010Dell

R91064 > 640 ~2.2 kW 290 Mflop/W

MSG-pn 2011Dell

R815240 > 2400 ~5.5 kW 436 Mflop/WMonash Sun Grid HPC Cluster has 1,694

cores &clocks at over 12.5 (+ 18.6) Tflops with > 5.7 TB of RAM

Information Technology Services Division

Office of the CIO

Software Stack• S/W Development, Environments,

Libraries

• gcc, Intel C/Fortran, Intel MKL, IMSL numerical library, Ox, python, openmpi, mpich2, NetCDF, java, PETsc, FFTW, BLAS, LAPACK, gsl, mathematica, octave, matlab (limited)

• Statistics, Econometrics

• R, Gauss, Stata

• Computational Chemistry

• Gaussian 09, GaussView 5, Molden, GAMESS, Siesta, Materials Studio (Discovery), AMBER 10

• Molecular Dynamics

• NAMD, LAMMPS, Gromacs

• Underworld

• CFD Codes

• OpenFOAM, ANSYS Fluent, CFX, viper (user-installed)

• CUDA toolkit, Qt, VirtualGL, itksnap, drishti, Paraview

• CrystalSpace

• FSL

• Meep

• CircuitScape

• Structure and Beast

• XMDS

• ENViMET (via wine)

• ENVI/IDL

• etc etc etc

Information Technology Services Division

Office of the CIO

Growing List!

Specialist Support and AdviseInitial EngagementAccount Creation

General Advise& Startup Tutorial

RequirementsAnalysis

Follow UpMaintenance

CustomisedSolutions

Information Technology Services Division

Office of the CIO

Specialist Support and Advise

Information Technology Services Division

Office of the CIO

• Cluster Queue Configuration and Management

• Compute job preparation

• Custom scripting

• Software installation and tuning

• Job performance and/or error diagnosis

• etc

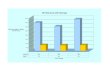

Growth of CPU Usage

2008 859K20093,300K

cpu hours

20106,863K

Information Technology Services Division

Office of the CIO

Growth of CPU Usage

2008 859K2009 3,300K cpu

hours2010 6,863K

Information Technology Services Division

Office of the CIO

783 CPU years!!!

Projected

Active Users

2008 712009 145

Active Users

2010 16924-Aug

Information Technology Services Division

Office of the CIO

What to expect in the future?

Continued refresh of hardware and software

decommissioning older machines

More grid nodes (CPU cores) to meet growing demand

Scalable and high-performance storage architecture without sacrificing data availability

Custom grid nodes &/or subclusters with special configurations to meet user requirements

Better integration with Grid tools and middleware

Information Technology Services Division

Office of the CIO

Green IT Strategy

Monash Sun Grid Beginnings

MSG-I

2005

Sun V20z AMD Opteron (dual core)

initially 32 nodes = 64 cores, with 3 new nodes added in 2007 making a total of 70 cores

4 GB RAM / node

336 Gflop/s

~17kW

20 Mflop/W

http://www.sun.com/servers/entry/v20z/index.jsp

Monash Sun Grid MSG-II

2006

Sun X2100 AMD Opteron (dual core)

initially 24 nodes = 48 cores with 8 nodes added in 2007 making 64 cores at present

4 GB RAM / node

332 Gflop/s

~11 kW

42 Mflop/W

Picture on the right was googled and found from Jason Callaway’s Flicker page: http://www.flickr.com/photos/29925031@N07/

http://www.sun.com/servers/entry/x2100/

Monash Sun Grid Big Mem Boxes

MSG-III (now named as MSG-IIIe)

2008

Sun X6220 Blades - two dual core AMD Opterons per node

currently 20 nodes = 80 cores with 10 nodes to be added in 2010 making 120 cores

40 GB RAM / node

624 Gflop/s

~7.2 kW

330 Mflop/W

http://www.sun.com/servers/blades/x6220/http://www.sun.com/servers/blades/x6220/datasheet.pdf

Monash Sun Grid 2010

MSG-III expansion and GPU nodes

2010

Sun X6250 - two quad-core Intel Xeon CPUs per node

240 cores

24 GB RAM / node

Dell nodes connected to two Tesla C1060 GPU cards

Ten nodes = 20 GPU cards

48 GB and 96 GB RAM configs

http://www.sun.com/servers/blades/x62520/http://www.nvidia.com/object/product_tesla_c1060_us.html

Monash Sun Grid 2009

MSG-III

2009

Sun X6250 - two quad-core Intel Xeon CPUs per node

as of 2009: 720 cores

16 GB RAM / node

> 7 Tflop/s

~23 kW

~330 Mflop/W

http://www.sun.com/servers/blades/x62520/

Monash Sun Grid Big SMP boxes

MSG-IV

2009

Sun X4600 - eight quad-core AMD Opterons CPUs per node

currently three nodes = 96 cores

96 GB RAM / node

885 Gflop/s

~3.6 kW

250 Mflop/W

http://www.sun.com/servers/blades/x4600/

Benefits of using a cluster

parallel

sequential

shared memory

distributed memory use multiple nodes

use 2, 4, 8, 32 coresa single node

multiple scenarios or cases?

use multiple coresuse tools like Nimrod

job

chara

cteri

stic

Information Technology Services Division

Office of the CIO

SPONGE

Introduction

Serendipitous Processing on Operating Nodes in Grid Environment (SPONGE)

Core Idea and Motivation Resource Harnessing Accessibility and Utilization

How SPONGE achieves this.

What SPONGE Can do at the Moment

What SPONGE cannot do at the moment.

Infrastructure and Usage statistics (Pretty Pictures).

Acknowledgements

Core Idea and Motivation The core idea - is to harness tremendous amount of un/under-utilized

computational power to perform high throughput computing.

Motivation - Large (Giga, Terra, Peta ??) scale computational problems that needs High throughput, generally embarrisingly parallel applications, e.g PSAs.

Latin Squares (Mathematics) – Dr. Ian Wanless and Judith Egan; Department of Mathematics.

Molecular Replacement (Biology, Chemistry) – Jason Schmidberger and Dr. Ashley Buckle; Department of Biochemistry and Molecular Biology.

Bayesian Estimation of Bandwidth in Multivariate Kernel Regression with an Unknown Error Density (Business, Economics) – Han Shang, Dr. Xibin Zhang and Dr. Maxwell King; Department of Business and Economics.

HPC Solution for Optimization of Transit Priority in Transportation Networks; Dr. Mahmoud Mesbah, Department of Civil Engineering.

Short running applications that do not require specialized software/hardware and can be easily parallelized.

Single point of submission, monitoring and control.

Core Idea and Motivation Contd…Key Focus Areas

Resource Harnessing – involves tapping “existing” (no new hardware) infrastructure that would contribute in solving the computational problem. Student Labs in different Faculties, ITS, EWS etc.. Staff Computers – Personal Contributions included.

Accessibility How to access these facilities -> Middleware. When to access these facilities -> Access and Usage

Policies.

Utilization - How to properly utilize these facilities Implementation abstraction. Single System Image. Job submission, monitoring and control.

How are we achieving this…

Caulfield Campus

Clayton Campus

Peninsula Campus

Condor Submission Node

User Submits Jobs directly to Condor Submission Node or Via Nimrod, Globus

Condor Execute Node

Condor Head Node or Central Manager

Using Condor – The goal of Condor Project us to develop, implement, deploy and evaluate mechanisms and policies that support High Throughput Computing on large collection of distributively owned computing resources.

Submission and Execution Nodes constantly updates the Central Manager

How are we achieving this…contd

Caulfield Campus

Clayton Campus

Peninsula Campus

Condor Submission Node

Sponge Works – Configuration Layer

User Submits Jobs directly to Condor Submission Node or Via Globus

Condor Execute Node

Condor Head Node Default

Configuration can be modified centrally upto node level.

•Queue Management•Resource Reservation

What SPONGE can do…

Execute large number of short running embarrassingly parallel jobs by leveraging un/under utilized existing computational resources. Sounds simple

AdvantagesLeveraging Idle CPU time that remains unused.Single point of Job Submission, Monitoring, Control

and collation of resultsRemote job submission using Nimrod/G, Globus.

What SPONGE cannot do at the moment

Sponge Pool consists

Mostly non-dedicated computers.

Distributed ownerships.

Limited availability.

This restricts execution of Jobs that:

Require Specialized Software/Hardware High Memory Large Storage Space Additional Software

Takes long time to execute (several days or weeks)

Perform Inter-Process Communication

Some StatisticsUser Name CPU Hrs Used

shikha 2012437.67

jegan 1534528.43

kylee 1166358.76

pxuser 414972.76

iwanless 371833.24

zatsepin 257631.86

hanshang 77930.72

llopes 66747.09

iwanless 30930.82

jvivian 29611.87

User Name CPU Hrs Used

jirving 13258.09

nice-user.pcha13 13205.38

wojtek 7095.26

nice-user.wojtek 6890.78

mmesbah 5562.53

transport 5379

philipc 3733.35

shahaan 3251.94

zatsepin 3069.35

kylee 2988.84

jegan 1937.55

transport 1308.44

Total 688 + CPU Years to date…

Statistics contd…

Acknowledgements

Wojtek Goscinski

Philip Chan

Jefferson Tan

35

Nimrod Tools fore-Research

Monash e-Science & Grid Engineering LaboratoryFaculty of Information Technology

36

Overview

Supporting a Software Lifecycle

Software Lifecycle Tools

The Nimrod family37

Nimrod/G

Grid Middleware

Nimrod/O Nimrod/E

Nimrod Portal

Actuators

Plan File

parameter pressure float range from 5000 to 6000 points 4parameter concent float range from 0.002 to 0.005 points 2parameter material text select anyof “Fe” “Al” task main copy compModel node:compModel copy inputFile.skel node:inputFile.skel node:substitute inputFile.skel inputFile node:execute ./compModel < inputFile > results copy node:results results.$jobnameendtask

38

From one workstation ..

39

.. Scaled Up

40

Why is this challenging?

Develop, Deploy, Test…

41

Why is this challenging?

Build, Schedule & Execute virtual application

42

Approaches to Grid programming

General Purpose WorkflowsGeneric solutionWorkflow editor Scheduler

Special purpose workflowsSolve one class of problemSpecification languageScheduler

43

Nimrod Development Cycle

Prepare Jobs using Portal

Jobs Scheduled Executed Dynamically

Sent to available machines

Results displayed &interpreted

44

Acknowledgements

Message Lab Colin Enticott Slavisa Garic Blair Bethwaite Tom Peachy Jeff Tan

MeRC Shahaan Ayyub Philip Chan

Funding & Support CRC for Enterprise Distributed Systems (DSTC) Australian Research Council GrangeNet (DCITA) Australian Research Collaboration Service (ARCS) Microsoft Sun Microsystems IBM Hewlett Packard Axceleon

Message Lab Wiki:https://messagelab.monash.edu.au/nimrod

Related Documents