A MOBILE APPLICATION FOR RECOGNITION AND TRANSLATION OF TEXT IN REAL TIME

AN INTERIM PROJECT REPORT

Submitted by

SIDDHANT SHARMA (111303)
UTKARSH KR. AGARWAL (111312)
VIKASH CHANDRA (111313)

B. TECH. (CSE)

Under the Supervision of:

MR. AMIT KUMAR SRIVASTAVA



DEPARTMENT OF COMPUTER SCIENCE & ENGINEERING
JAYPEE UNIVERSITY OF ENGINEERING & TECHNOLOGY
GUNA

NOVEMBER 2014

ABSTRACT

Inspired by the well-known iPhone app Word Lens, we intend to develop an Android-based text translation application that recognizes text captured by a mobile phone camera, translates it, and displays the translation on the phone's screen in real time. Text present in images and video contains useful information for automatic annotation, indexing, and structuring of images. We describe a system for smartphones that detects and extracts text and translates it into a language the user understands. Our Optical Character Recognition (OCR) is based on Tesseract OCR, and translation is done through Google Translate.


TABLE OF CONTENTS

CHAPTER NO. TITLE

ABSTRACT
ACKNOWLEDGMENT
DECLARATION
1. INTRODUCTION
1.1 General
2. REQUIREMENTS


ACKNOWLEDGMENT

We take this opportunity to express our deepest gratitude to those who generously shared their valuable knowledge and expertise during the course of the project. We express our sincere gratitude to Mr. Amit Kumar Srivastava for his thorough guidance, and to Dr. Shishir Kumar (H.O.D., C.S.E.) for his efforts in providing us with this project. Finally, we would like to thank each and every person who has contributed in any way.


DECLARATION

We hereby declare that the project work entitled "A MOBILE APPLICATION FOR RECOGNITION AND TRANSLATION OF TEXT IN REAL TIME" is an authentic record of our own work carried out at JAYPEE UNIVERSITY OF ENGINEERING AND TECHNOLOGY, as required for the Project Report in Computer Science and Engineering, under the guidance of Mr. Amit Kumar Srivastava.

Date: __________

SIDDHANT SHARMA
UTKARSH KUMAR AGARWAL
VIKASH CHANDRA

Certified that the above statement made by the students is correct to the best of my knowledge and belief.

Mr. Amit Kumar Srivastava (DEPARTMENT OF COMPUTER SCIENCE ENGG.)


CHAPTER 1 : INTRODUCTION

The motivation for a real-time text translation mobile application is to help tourists navigate in a foreign-language environment. The application enables users to get text translated as easily as clicking a button. The camera captures the text and the translated result is returned in real time. The system includes automatic text detection, OCR (optical character recognition), text correction, and text translation. Although the current version of our application would be limited to selected languages, it can easily be extended to a much wider range of languages.

1.1. Text in images

A variety of approaches to text information extraction (TIE) from images and video have been proposed for specific applications, including page segmentation, license plate location, and content-based image/video indexing. In spite of such extensive studies, it is still not easy to design a general-purpose TIE system. This is because there are many possible sources of variation when extracting text from a shaded or textured background, or from images with variations in font size, style, color, orientation, and alignment.

1.2. What is text information extraction (TIE)?

A TIE system receives an input in the form of a still image or a sequence of images (frames of videos). The images can be in gray scale or colour, compressed or uncompressed, and the text in the images may or may not move. The TIE problem can be divided into the following sub-problems:

i. detection,
ii. localization,
iii. tracking,
iv. extraction and enhancement,
v. recognition (OCR).

1.3 Problem Statement

Many interchangeable terms are used in the literature to refer to the text extraction process, such as text segmentation, text detection, and text localization. In this report, we follow the terminology defined above for consistency. A system that can extract text objects from image and video documents is a text information extraction system, which is composed of the following five stages, each of which constitutes an individual sub-problem:

i. detection,
ii. localization,
iii. tracking,
iv. extraction and enhancement,
v. recognition (OCR).

CHAPTER 2 : LITERATURE REVIEW

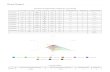

As described in the previous chapter, TIE can be divided into five sub-stages: detection, localization, tracking, extraction and enhancement, and recognition. Each sub-stage will be reviewed in this chapter.

Fig 1. Architecture of TIE system

2.1. Framing of Video

We would capture media content using a smartphone application that uses the Java Media Framework (JMF). The input to this stage would be a video containing text. The video is then split into frames using JMF at a rate of 1 frame per second. This rate could be increased or decreased depending on the speed of the video, i.e. its frame rate in fps (frames per second). The images are then scaled before being given as input to the next stage.
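The sampling step can be illustrated with a short sketch. It assumes that the decoder exposes the video's native frame rate and total frame count; the class and method names below are hypothetical and are not part of JMF.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: picks which frames of a video to pass to the next stage. */
final class FrameSampler {

    /**
     * Returns the indices of the frames to extract.
     *
     * @param totalFrames   number of frames in the video
     * @param videoFps      native frame rate of the video (frames per second)
     * @param samplesPerSec how many frames to keep per second (1.0 in this report)
     */
    static List<Integer> sampleIndices(int totalFrames, double videoFps, double samplesPerSec) {
        if (videoFps <= 0 || samplesPerSec <= 0) {
            throw new IllegalArgumentException("rates must be positive");
        }
        // Distance, in frames, between two consecutive samples (at least one frame).
        double step = Math.max(1.0, videoFps / samplesPerSec);
        List<Integer> indices = new ArrayList<>();
        for (double pos = 0.0; pos < totalFrames; pos += step) {
            indices.add((int) pos);
        }
        return indices;
    }
}
```

For a 3-second clip at 30 fps sampled once per second, this selects frames 0, 30 and 60. Raising `samplesPerSec` shortens the step, which corresponds to increasing the rate for fast-moving video.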

2.2. Pre Processing

The input to this stage is a scaled (280x90) colour image, i.e. a frame of the video extracted at 1 frame per second in the first stage. As the first step of pre-processing, it is converted into a grayscale image. This was carried out by taking the RGB colour contents (R: 11%, G: 56%, B: 33%) of each pixel and converting them to grayscale using appropriate functions of MATLAB. The image is then converted to a black-and-white image containing black text with higher contrast on a white background.

Factors like noise, fluctuations, and discontinuities are handled at this stage using various threshold values and contrast levels.

Fig 2. Pre-processing of image
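The grayscale conversion of Section 2.2 amounts to a weighted sum of the three colour channels. The following Java sketch is an illustration only, not the MATLAB code used in the project. The weights are passed as parameters, so the percentages quoted above can be used as they are. For comparison, MATLAB's `rgb2gray` uses 0.2989, 0.5870 and 0.1140 for R, G and B. The class name and the packed `0xRRGGBB` pixel layout are assumptions.

```java
/** Sketch of weighted RGB-to-grayscale conversion on packed 0xRRGGBB pixels. */
final class GrayscaleSketch {

    /** Weighted sum of the three channels, rounded and clamped to 0..255. */
    static int toGray(int rgb, double wR, double wG, double wB) {
        int r = (rgb >> 16) & 0xFF;
        int g = (rgb >> 8) & 0xFF;
        int b = rgb & 0xFF;
        long gray = Math.round(wR * r + wG * g + wB * b);
        return (int) Math.max(0, Math.min(255, gray));
    }

    /** Converts a whole frame; the input array is left untouched. */
    static int[] toGray(int[] pixels, double wR, double wG, double wB) {
        int[] out = new int[pixels.length];
        for (int i = 0; i < pixels.length; i++) {
            out[i] = toGray(pixels[i], wR, wG, wB);
        }
        return out;
    }
}
```

Keeping the weights configurable makes it easy to compare the effect of different weightings on the contrast between text and background.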

2.3. Detection and Localization

In the text detection stage, there is no prior information on whether or not the input image contains any text, so the existence or non-existence of text in the image must be determined. In the case of video, the number of frames containing text is much smaller than the number of frames without text. The text detection stage seeks to detect the presence of text in a given image. When selecting a frame containing text from the shots produced by video framing, very low threshold values are needed for scene change detection, because the portion occupied by a text region relative to the whole image is usually small. This approach is very sensitive to scene change detection. It can be a simple and efficient solution for video indexing applications that only need key words from video clips, rather than the entire text.

The localization stage localizes the text in the image after detection. In other words, the text present in the frame is tracked by identifying boxes or regions of similar pixel intensity values and returning them to the next stage for further processing. This stage uses region-based methods for text localization. Region-based methods use the properties of the color or gray scale in a text region, or their differences from the corresponding properties of the background.
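One simple way to realise scene change detection with a low threshold is to count how many pixels differ between two consecutive grayscale frames. The sketch below illustrates that idea under stated assumptions: the frame-differencing rule, the class name and the parameters `pixelDelta` and `minFraction` are hypothetical and not taken from the report.

```java
/** Sketch: flags a scene change when enough pixels differ between two frames. */
final class SceneChangeSketch {

    /** Fraction (0..1) of pixels whose gray level differs by more than pixelDelta. */
    static double changedFraction(int[] prevGray, int[] currGray, int pixelDelta) {
        if (prevGray.length != currGray.length || prevGray.length == 0) {
            throw new IllegalArgumentException("frames must be non-empty and equal in size");
        }
        int changed = 0;
        for (int i = 0; i < prevGray.length; i++) {
            if (Math.abs(prevGray[i] - currGray[i]) > pixelDelta) {
                changed++;
            }
        }
        return (double) changed / prevGray.length;
    }

    /** A low minFraction keeps the detector sensitive to small text regions. */
    static boolean isSceneChange(int[] prevGray, int[] currGray, int pixelDelta, double minFraction) {
        return changedFraction(prevGray, currGray, pixelDelta) >= minFraction;
    }
}
```

Because a caption may cover only a few percent of the frame, `minFraction` has to stay small. The price is the sensitivity noted above: camera shake or noise can also push the changed fraction over the threshold.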

2.4. Segmentation

After the text is localized, the text segmentation step separates the text pixels from the background pixels. The output of this step is a binary image in which black text characters appear on a white background. This stage includes extraction of the actual text regions by dividing pixels with similar properties into contours or segments and discarding the redundant portions of the frame.
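Discarding the redundant portions of a frame can be as simple as cropping to the bounding box of the text pixels. The sketch below assumes the binary image is available as a row-major `boolean` mask in which `true` marks a text pixel; the class and method names are hypothetical.

```java
/** Sketch: finds the smallest rectangle that contains every text pixel. */
final class TextRegionCropSketch {

    /**
     * @param text   row-major mask, true for text (black) pixels
     * @return {left, top, right, bottom}, all inclusive, or null when no text pixel exists
     */
    static int[] boundingBox(boolean[] text, int width, int height) {
        int left = width, top = height, right = -1, bottom = -1;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (text[y * width + x]) {
                    left = Math.min(left, x);
                    right = Math.max(right, x);
                    top = Math.min(top, y);
                    bottom = Math.max(bottom, y);
                }
            }
        }
        return right < 0 ? null : new int[] {left, top, right, bottom};
    }
}
```

A full implementation would compute one box per connected segment rather than a single box for the whole frame; this sketch shows only the cropping idea.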

2.5. Recognition

This stage would be the final stage of the project. It performs the actual recognition of the extracted characters by combining the features extracted in the previous stages to produce the text, with the help of a supervised neural network. The output of the segmentation stage is taken as input, and the characters contained in the image are compared with the pre-defined neural network training set. For each character appearing in the image, the character whose training-set value is closest is displayed as the recognised character.
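The idea of choosing the character whose training-set value is closest can be shown with a nearest-template classifier. This is a deliberately simplified stand-in and not a neural network: it compares a binary glyph with labelled templates by Hamming distance. The class name, the glyph encoding and the templates are assumptions made for illustration.

```java
/** Sketch: classifies a binary glyph by its nearest labelled template (not a neural network). */
final class NearestTemplateSketch {

    /** Number of positions at which two equally sized binary glyphs differ. */
    static int hammingDistance(boolean[] a, boolean[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("glyph sizes differ");
        }
        int distance = 0;
        for (int i = 0; i < a.length; i++) {
            if (a[i] != b[i]) {
                distance++;
            }
        }
        return distance;
    }

    /** Returns the label of the template closest to the glyph (first one wins on ties). */
    static char classify(boolean[] glyph, boolean[][] templates, char[] labels) {
        if (templates.length == 0 || templates.length != labels.length) {
            throw new IllegalArgumentException("need one label per template");
        }
        int best = 0;
        int bestDistance = Integer.MAX_VALUE;
        for (int i = 0; i < templates.length; i++) {
            int d = hammingDistance(glyph, templates[i]);
            if (d < bestDistance) {
                bestDistance = d;
                best = i;
            }
        }
        return labels[best];
    }
}
```

A trained network replaces the fixed distance with learned weights, but the decision rule is the same: output the label with the best match.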

2.5.1 OPTICAL CHARACTER RECOGNITION via Tesseract

Tesseract is an open-source OCR engine developed at HP Labs and now maintained by Google. It is one of the most accurate open-source OCR engines, with the ability to read a wide variety of image formats and convert them to text in over 60 languages. The Tesseract library would be used in our project. The Tesseract algorithm assumes its input is a binary image and does its own preprocessing first, followed by a recognition stage.

CHAPTER 3 : PROBLEM OF TEXT EXTRACTION FROM IMAGES AND VIDEOS

A document image usually contains text and graphics components. The types of graphics components vary with the application domain, but generally they include lines, curves, polygons, and circles. Text components consist of characters and digits, which form words and phrases used to annotate the graphics. Extraction of text components is a challenging problem because:

- Graphical components like lines can be of any length, thickness, and orientation. Text components can vary in font style and size.
- Text components may touch each other, and text and graphics components may touch or cross.
- Text strings are usually intermingled with graphics and can have any orientation.

Such inconsistencies would make the output of Tesseract OCR unpredictable. Hence, to optimise the output of Tesseract, we need to pre-process the input. In this chapter we touch upon some of the factors that need to be kept in mind when pre-processing an image.

3.1 DPI

Dots per inch (DPI, or dpi) is a measure of spatial printing or video dot density, in particular the number of individual dots that can be placed in a line within the span of 1 inch. Tesseract works best with text at a resolution of at least 300 dpi, so it may be beneficial to resize images.

Fig 3. DPI
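The resize needed to reach 300 dpi follows from a simple ratio. The sketch below assumes the resolution of the source image is known or can be estimated, which is often not the case for camera frames. The class name is hypothetical, and never downscaling is a design choice of this sketch rather than something the report prescribes.

```java
/** Sketch: computes the image size needed to reach the 300 dpi Tesseract prefers. */
final class DpiScalingSketch {

    static final int TARGET_DPI = 300;

    /** Scale factor that brings an image from sourceDpi up to TARGET_DPI (never below 1). */
    static double scaleFactor(int sourceDpi) {
        if (sourceDpi <= 0) {
            throw new IllegalArgumentException("sourceDpi must be positive");
        }
        return Math.max(1.0, (double) TARGET_DPI / sourceDpi);
    }

    /** Returns {newWidth, newHeight} after applying the scale factor. */
    static int[] scaledSize(int width, int height, int sourceDpi) {
        double factor = scaleFactor(sourceDpi);
        return new int[] {(int) Math.round(width * factor), (int) Math.round(height * factor)};
    }
}
```

For example, a 280x90 frame assumed to be at 72 dpi would be enlarged to 1167x375 pixels.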

3.2 Binarisation

Binarisation converts an image to black and white. Tesseract does this internally, but it can make mistakes, particularly if the page background is of uneven darkness. In such cases, adaptive thresholding is essential.

Fig 4. Binarisation
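Adaptive thresholding compares each pixel with the mean of its own neighbourhood instead of with one global value, which is what makes it robust to uneven backgrounds. The sketch below uses a local mean computed from an integral image. The class name, the `radius` and `offset` parameters and the choice of a mean-based rule are assumptions; other local rules exist.

```java
/** Sketch: adaptive mean thresholding using an integral image. */
final class AdaptiveThresholdSketch {

    /**
     * @param gray   row-major gray levels (0..255)
     * @param radius half-size of the square neighbourhood around each pixel
     * @param offset a pixel is text when it is darker than (local mean - offset)
     * @return true for text (black) pixels, false for background (white)
     */
    static boolean[] binarise(int[] gray, int width, int height, int radius, int offset) {
        // Integral image with a zero border: sum[y][x] covers rows < y and columns < x.
        long[][] sum = new long[height + 1][width + 1];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                sum[y + 1][x + 1] = gray[y * width + x] + sum[y][x + 1] + sum[y + 1][x] - sum[y][x];
            }
        }
        boolean[] text = new boolean[gray.length];
        for (int y = 0; y < height; y++) {
            int y0 = Math.max(0, y - radius);
            int y1 = Math.min(height, y + radius + 1);
            for (int x = 0; x < width; x++) {
                int x0 = Math.max(0, x - radius);
                int x1 = Math.min(width, x + radius + 1);
                long total = sum[y1][x1] - sum[y0][x1] - sum[y1][x0] + sum[y0][x0];
                int count = (y1 - y0) * (x1 - x0);
                // Compare pixel * count with (mean - offset) * count to avoid a division.
                text[y * width + x] = (long) gray[y * width + x] * count < total - (long) offset * count;
            }
        }
        return text;
    }
}
```

The integral image makes the cost per pixel independent of the window size, which matters on a phone where frames arrive continuously.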

3.3 Noise

Noise is a random variation of brightness or colour in an image that can make the text more difficult to read. Certain types of noise cannot be removed by Tesseract in the binarisation step, which can cause accuracy rates to drop.
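A median filter is one common way to suppress isolated noisy pixels before binarisation. The report does not name a specific technique, so the 3x3 median filter below is an assumption chosen for illustration, as is the class name.

```java
import java.util.Arrays;

/** Sketch: 3x3 median filter on a row-major grayscale image. */
final class MedianFilterSketch {

    static int[] median3x3(int[] gray, int width, int height) {
        int[] out = gray.clone();          // border pixels are copied unchanged
        int[] window = new int[9];
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int k = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        window[k++] = gray[(y + dy) * width + (x + dx)];
                    }
                }
                Arrays.sort(window);
                out[y * width + x] = window[4];   // middle of the nine sorted values
            }
        }
        return out;
    }
}
```

Unlike averaging, the median preserves sharp edges between text and background, which helps the later thresholding step.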

3.4 Orientation and skew

Skew occurs when a page has been scanned at an angle. The quality of Tesseract's line segmentation drops significantly if a page is too skewed, which severely impacts the quality of the OCR. To address this, the page image can be rotated so that the text lines are horizontal.

Fig 5. Orientation
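The rotation that makes text lines horizontal can be derived from the slope of a detected text baseline. The sketch below assumes two points on a baseline are already known; how they are found is outside its scope. The class and method names are hypothetical.

```java
/** Sketch: estimates skew from a text baseline and rotates points to undo it. */
final class DeskewSketch {

    /** Skew angle, in degrees, of a baseline running from (x0, y0) to (x1, y1). */
    static double skewDegrees(double x0, double y0, double x1, double y1) {
        return Math.toDegrees(Math.atan2(y1 - y0, x1 - x0));
    }

    /** Rotates the point (x, y) about the origin by -angleDegrees, undoing the skew. */
    static double[] deskewPoint(double x, double y, double angleDegrees) {
        double a = Math.toRadians(-angleDegrees);
        double cos = Math.cos(a);
        double sin = Math.sin(a);
        return new double[] {x * cos - y * sin, x * sin + y * cos};
    }
}
```

Applying `deskewPoint` to every pixel coordinate, with interpolation, yields the straightened image. In practice an image library's rotation routine would be used instead of a per-point loop.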

3.5 Borders

Scanned pages often have dark borders around them. These can be erroneously picked up as extra characters, especially if they vary in shape and gradation.

3.6 Spaces between words

Italics, digits, and punctuation all create special-case, font-dependent spacing. Fully justified text in narrow columns can have widely varying spacing on different lines.

Fig 6. Spaces variation

CHAPTER 4 : PLATFORM/TECHNOLOGY

4.1. Operating System

Windows XP/Vista/7

4.2. Language

Java J2SE using Worklight IDE: Java Platform, Standard Edition (Java SE) is a widely used platform for programming in the Java language. It is the Java platform used to deploy portable applications for general use. In practical terms, Java SE consists of a virtual machine, which must be used to run Java programs, together with a set of libraries (or "packages") needed to allow the use of file systems, networks, graphical interfaces, and so on, from within those programs.

MATLAB: The Image Processing Toolbox of MATLAB provides a comprehensive set of reference-standard algorithms, functions, and apps for image processing, analysis, visualization, and algorithm development. You can perform image analysis, image segmentation, image enhancement, noise reduction, geometric transformations, and image registration.

CHAPTER 5 : APPLICATIONS

5.1. Applications

1. Modern TV programs carry more and more scrolling video text that provides important information (e.g. the latest news). This text can be extracted.
2. Extracting the number of a vehicle in textual form: this can be used for tracking a vehicle. The system can extract and recognize a number written in any format or font.
3. Extracting text from a presentation video.
4. Multi-language translator for reading symbols and traffic sign boards in foreign countries.
5. Wearable or portable computers: with the rapid development of computer hardware technology, wearable computers are now a reality (Google Goggles).

CHAPTER 6 : CONCLUSION

The problem statement of the project has been discussed and explained in this report. Potential problems that could affect the result of translation have been discussed. Recognition would be the final stage of the project and would be done after the pre-processing of the image is fully complete. We would implement the project for the English language; it can be further extended to other languages. If the system is extended in future implementations, the efficiency of the algorithm could improve considerably.

CHAPTER 7 : REFERENCES & BIBLIOGRAPHY

https://hal.archives-ouvertes.fr/file/index/docid/494513/filename/DAS2010.pdf
http://code.google.com/p/tesseract-ocr/
www.elsevier.com/locate/patcog/Jung/
https://stacks.stanford.edu/file/druid:my512gb2187/Ma_Lin_Zhang_Mobile_text_recognition_and_translation.pdf
http://www.ijarcce.com/upload/2013/november/41-S-Apurva_Tayade_-TEXT.pdf
http://www.slideshare.net/vallabh7141/mobile-camera-based-text-detection?related=1
http://airccse.org/journal/sipij/papers/2211sipij09.pdf
https://stacks.stanford.edu/file/druid:cg133bt2261/Zhang_Zhang_Li_Event_info_extraction_from_mobile_camera_images.pdf

BOOK: An Introduction To Digital Image Processing With Matlab - Alasdair McAndrew
