

6.034 Artificial Intelligence

April 17, 2008, Recitation

Bayesian Networks

1. Draw a Bayesian network among the following binary variables that model the outcome of an election:

• I: candidate is Incumbent

• M: has lots of Money for advertising

• A: uses advertisements that focus on Attacking the candidate's opponent

• Q: uses advertisements that focus on the candidate's Qualifications

• L: candidate is Liked

• D: opponent is Distrusted

• E: candidate is Elected

Your network should encode the following beliefs:

• Incumbents tend to raise lots of money.

• Money can be used to buy advertising that either focuses on the candidate's qualifications or that attacks the candidate's opponent. But if one does one, there is less money to do the other.

• Attack advertisements tend to make voters distrust the opponent but they also make the voters tend not to like the candidate.

• Advertisement focusing on qualifications tends to make the voters like the candidate.

• Candidates that people like tend to get elected.

• Candidates whose opponent people distrust tend to get elected.
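The beliefs above can be turned into a parent map, with one parent set per variable. The sketch below assumes one possible structure. This is our reading of the beliefs, not an official answer:

- I → M
- M → A and M → Q
- an A → Q edge standing in for the shared money budget
- A → D, A → L, and Q → L
- D → E and L → E

It uses Python's standard `graphlib` module to confirm that the graph is acyclic and to list the factorization of the joint distribution that the structure implies. `PARENTS` and `factorization` are illustrative names.

```python
from graphlib import TopologicalSorter

# ASSUMED structure (one reading of the beliefs, not an official answer):
# each variable maps to the set of its parents.
PARENTS = {
    "I": set(),          # Incumbent
    "M": {"I"},          # incumbents tend to raise lots of Money
    "A": {"M"},          # money buys Attack ads ...
    "Q": {"M", "A"},     # ... or Qualification ads, competing for the same budget
    "D": {"A"},          # attack ads make voters Distrust the opponent
    "L": {"A", "Q"},     # attack ads lower, qualification ads raise, Liking
    "E": {"D", "L"},     # Elected depends on liking and on distrust of the opponent
}

def factorization(parents):
    """List the factors P(X | parents(X)) of the joint distribution.

    static_order() yields each variable after its parents and raises
    graphlib.CycleError if the structure is not a DAG.
    """
    terms = []
    for var in TopologicalSorter(parents).static_order():
        pa = sorted(parents[var])
        terms.append(f"P({var}|{','.join(pa)})" if pa else f"P({var})")
    return terms

print(" ".join(factorization(PARENTS)))
```

A different reading of the "less money for the other" belief (for example, Q → A instead of A → Q) changes only the `PARENTS` entries.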


2. For each of the following, say whether it is or is not asserted by the network structure you drew (without assuming anything about the numerical entries in the CPTs).

4" HEKL'6M6NJ&O&HEKL'6&MJ&


7" HE'LP6MJ&O&HE'LPJ&


$" HEK6NL'6MJ&O&HEKL'6MJ&HENL'6MJ&


More Bayesian Networks

Show a Bayesian network structure that encodes the following relationships:

• A is independent of B

• A is dependent on B given C

• A is dependent on D

• A is independent of D given C
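One structure that satisfies all four relationships is the v-structure A → C ← B with an extra edge C → D. This is our own reading, not the official answer.

The sketch below checks that structure with the standard moralized-ancestral-graph test for d-separation:

1. Keep only the query nodes and their ancestors.
2. Connect co-parents.
3. Drop edge directions.
4. Delete the conditioning nodes and test connectivity.

d-separated pairs are independent for every choice of CPTs. Pairs that are not d-separated can be dependent. The helper names are ours.

```python
from collections import deque

# ASSUMED structure: A -> C <- B, plus C -> D (node -> set of parents).
PARENTS = {"A": set(), "B": set(), "C": {"A", "B"}, "D": {"C"}}

def ancestral_set(parents, nodes):
    """The given nodes plus all of their ancestors."""
    seen, stack = set(nodes), list(nodes)
    while stack:
        for p in parents[stack.pop()]:
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen

def d_separated(parents, x, y, given=()):
    """Moralization test: True if x and y are d-separated given `given`."""
    keep = ancestral_set(parents, {x, y, *given})
    adj = {v: set() for v in keep}
    for child in keep:
        pa = sorted(parents[child])          # parents of kept nodes are kept too
        for p in pa:                         # drop edge directions
            adj[child].add(p)
            adj[p].add(child)
        for i, p in enumerate(pa):           # "marry" parents sharing a child
            for q in pa[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)
    blocked = set(given)                     # remove the conditioning nodes
    frontier, visited = deque([x]), {x}
    while frontier:
        v = frontier.popleft()
        if v == y:
            return False                     # still connected: not d-separated
        for w in adj[v] - blocked - visited:
            visited.add(w)
            frontier.append(w)
    return True

print(d_separated(PARENTS, "A", "B"))          # True:  d-separated
print(d_separated(PARENTS, "A", "B", {"C"}))   # False: not d-separated given C
print(d_separated(PARENTS, "A", "D"))          # False: not d-separated
print(d_separated(PARENTS, "A", "D", {"C"}))   # True:  d-separated given C
```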


!"""#$%&"'()%"*+,%-.+&"/%01()2-"

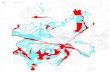

<:0=*>1(&)?*=&01)@:(AB&

&

C?*,?&:+&)?1&+:..:@*02&,:0>*)*:0-.&*0>1310>10,1&-==DE3)*:0=&-(1&)(D1F&

4" '&-0>&G&-(1&*0>1310>10)&

7" '&-0>&G&-(1&*0>1310>10)&2*H10&I&

$" J&-0>&<&-(1&*0>1310>10)&

%" J&-0>&<&-(1&*0>1310>10)&2*H10&'&

K" J&-0>&<&-(1&*0>1310>10)&2*H10&I&

!" '&-0>&G&-(1&*0>1310>10)&2*H10&J&

5" '&-0>&G&-(1&*0>1310>10)&2*H10&L&

8" J&-0>&<&-(1&*0>1310>10)&2*H10&G&


One Last Question on Bayesian Networks

The following is a list of conditional independence statements. For each statement, name all of the graph structures, G1–G4, or “none” that imply it.

-" '&*@&,:0A*)*:0-..I&*0A1310A10)&:+&J&2*K10&L&

M" '&*@&,:0A*)*:0-..I&*0A1310A10)&:+&J&2*K10&N&

," J&*@&,:0A*)*:0-..I&*0A1310A10)&:+&N&2*K10&'&

A" J&*@&,:0A*)*:0-..I&*0A1310A10)&:+&N&2*K10&L&

1" J&*@&*0A1310A10)&:+&L&

+" J&*@&,:0A*)*:0-..I&*0A1310A10)&:+&L&2*K10&'&


3 Maximal Margin Linear Separator (20 points)

[Figure: the data points below, plotted on axes f1 and f2]

Data points are: Negative: (-1, -1), (2, 1), (2, -1); Positive: (-2, 1), (-1, 1)

1. Give the equation of a linear separator that has the maximal geometric margin for the data above. Hint: Look at this geometrically; don't try to derive it formally.

(a) w =

(b) b =

2. Draw your separator on the graph above.

3. What is the value of the smallest geometric margin for any of the points?

4. Which are the support vectors for this separator? Mark them on the graph above.
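Looking at the plot geometrically, the closest approach between the classes runs from the positive point (-1, 1) to the negative edge through (-1, -1) and (2, 1). That edge lies on the line 2·f1 - 3·f2 - 1 = 0, and the distance is 6/√13.

A separator parallel to that edge and halfway between gives w = (-2/3, 1), b = -2/3. Any positive rescaling of w and b describes the same line.

The sketch below does not derive this; it only evaluates the candidate. It confirms that every point is on the correct side and that the smallest geometric margin, 3/√13 ≈ 0.83, is shared by three points. Under this reading, those three points are the support vectors.

```python
import math

NEG = [(-1, -1), (2, 1), (2, -1)]
POS = [(-2, 1), (-1, 1)]

def geometric_margins(w, b, points, label):
    """Signed distance label * (w . x + b) / ||w|| for each point."""
    norm = math.hypot(*w)
    return [label * (w[0] * x + w[1] * y + b) / norm for x, y in points]

# Candidate separator (our geometric reading): parallel to the negative
# hull edge through (-1, -1) and (2, 1), halfway to the positive point (-1, 1).
w, b = (-2 / 3, 1.0), -2 / 3

margins = geometric_margins(w, b, POS, +1) + geometric_margins(w, b, NEG, -1)
smallest = min(margins)
support = [p for p, m in zip(POS + NEG, margins) if math.isclose(m, smallest)]
print(smallest, 3 / math.sqrt(13), support)
```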


6 Naive Bayes (15 points)

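Consider a Naive Bayes problem with three features, x1 through x3. Imagine that we have seen a total of 12 training examples, 6 positive (with y = 1) and 6 negative (with y = 0). Here are the actual points:

| x1 | x2 | x3 | y |
|---|---|---|---|
| 0 | 1 | 1 | 0 |
| 1 | 0 | 0 | 0 |
| 0 | 1 | 1 | 0 |
| 1 | 1 | 0 | 0 |
| 0 | 0 | 1 | 0 |
| 1 | 0 | 0 | 0 |
| 1 | 0 | 1 | 1 |
| 0 | 1 | 0 | 1 |
| 1 | 1 | 1 | 1 |
| 0 | 0 | 0 | 1 |
| 0 | 1 | 0 | 1 |
| 1 | 0 | 1 | 1 |

Here is a table with the summary counts:

| | y = 0 | y = 1 |
|---|---|---|
| x1 = 1 | 3 | 3 |
| x2 = 1 | 3 | 3 |
| x3 = 1 | 3 | 3 |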

1. What are the values of the parameters R_i(1, 0) and R_i(1, 1) for each of the features i (using the Laplace correction)?

2. If you see the data point (1, 1, 1) and use the parameters you found above, what output would Naive Bayes predict? Explain how you got the result.

3. Naive Bayes doesn't work very well on this data; explain why.
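Here is a minimal sketch of the computation with exact fractions. It assumes the usual add-one Laplace correction for binary features, (count + 1) / (class size + 2).

Every estimate comes out to 1/2, so both classes receive the same score for (1, 1, 1).

The last line checks a property of this particular data set: the label is 1 exactly when x1 equals x3. Naive Bayes's conditional-independence assumption cannot represent that pairwise interaction, which is why it does badly here. The function names are illustrative.

```python
from fractions import Fraction

# Training data from the table above: (x1, x2, x3, y).
DATA = [
    (0, 1, 1, 0), (1, 0, 0, 0), (0, 1, 1, 0), (1, 1, 0, 0),
    (0, 0, 1, 0), (1, 0, 0, 0), (1, 0, 1, 1), (0, 1, 0, 1),
    (1, 1, 1, 1), (0, 0, 0, 1), (0, 1, 0, 1), (1, 0, 1, 1),
]

def laplace_estimate(i, y):
    """Pr(x_i = 1 | y) with the Laplace correction: (count + 1) / (n_y + 2)."""
    rows = [r for r in DATA if r[3] == y]
    ones = sum(r[i] for r in rows)
    return Fraction(ones + 1, len(rows) + 2)

def class_score(x, y):
    """Unnormalized Naive Bayes score Pr(y) * prod_i Pr(x_i | y)."""
    score = Fraction(sum(r[3] == y for r in DATA), len(DATA))   # prior Pr(y)
    for i, xi in enumerate(x):
        p1 = laplace_estimate(i, y)
        score *= p1 if xi == 1 else 1 - p1
    return score

print([laplace_estimate(i, 0) for i in range(3)])              # all 1/2
print(class_score((1, 1, 1), 0), class_score((1, 1, 1), 1))    # a tie
# The label is fully determined by a pair of features, y == (x1 == x3),
# which the per-feature conditional probabilities cannot capture.
print(all(r[3] == int(r[0] == r[2]) for r in DATA))
```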


2 Nearest Neighbors

[Figure: the data points below, plotted on axes f1 and f2]

Data points are: Negative: (-1, 0), (2, 1), (2, -2); Positive: (0, 0), (1, 0)

1. Draw the decision boundaries for 1-Nearest Neighbors on the graph above. Your drawing should be accurate enough that we can tell whether the integer-valued coordinate points in the diagram are on the boundary or, if not, which region they are in.

2. What class does 1-NN predict for the new point (1, -1.01)? Explain why.

3. What class does 3-NN predict for the new point (1, -1.01)? Explain why.
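Here is a short sketch of k-nearest-neighbor prediction with Euclidean distance, using the five training points above.

For (1, -1.01), the nearest point is (1, 0) at distance 1.01. The next two are (2, -2) at about 1.407 and (0, 0) at about 1.421. The 3-NN vote is therefore two positives against one negative.

`knn_predict` is an illustrative helper, not a library function.

```python
import math
from collections import Counter

NEG = [(-1, 0), (2, 1), (2, -2)]
POS = [(0, 0), (1, 0)]
TRAIN = [(p, "-") for p in NEG] + [(p, "+") for p in POS]

def knn_predict(query, k):
    """Majority label among the k training points closest in Euclidean distance."""
    ranked = sorted(TRAIN, key=lambda item: math.dist(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0], ranked[:k]

for k in (1, 3):
    label, neighbors = knn_predict((1, -1.01), k)
    print(k, label, [(p, round(math.dist((1, -1.01), p), 3)) for p, _ in neighbors])
```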


5 Naive Bayes (8 pts)

Consider a Naive Bayes problem with three features, x1 through x3. Imagine that we have seen a total of 12 training examples, 6 positive (with y = 1) and 6 negative (with y = 0). Here is a table with some of the counts:

| | y = 0 | y = 1 |
|---|---|---|
| x1 = 1 | 6 | 6 |
| x2 = 1 | 0 | 0 |
| x3 = 1 | 2 | 4 |

1. Supply the following estimated probabilities. Use the Laplacian correction.

• Pr(x1 = 1|y = 0)

• Pr(x2 = 1|y = 1)

• Pr(x3 = 0|y = 0)

2. Which feature plays the largest role in deciding the class of a new instance? Why?
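The three requested estimates are computed below with exact fractions, under the add-one Laplacian correction (count + 1) / (6 + 2).

The loop prints the likelihood ratio Pr(x_i = 1 | y = 1) / Pr(x_i = 1 | y = 0) for each feature. The ratio is exactly 1 for x1 and x2, so only x3 can move a prediction toward one class. `ONES` and `pr_one` are names introduced for this sketch.

```python
from fractions import Fraction

N_PER_CLASS = 6  # 6 positive and 6 negative training examples
# Counts of x_i = 1 in each class, from the table above: {feature: (y=0, y=1)}.
ONES = {"x1": (6, 6), "x2": (0, 0), "x3": (2, 4)}

def pr_one(feature, y):
    """Pr(feature = 1 | y) with the Laplacian correction (count + 1) / (n + 2)."""
    return Fraction(ONES[feature][y] + 1, N_PER_CLASS + 2)

print(pr_one("x1", 0))        # Pr(x1 = 1 | y = 0)
print(pr_one("x2", 1))        # Pr(x2 = 1 | y = 1)
print(1 - pr_one("x3", 0))    # Pr(x3 = 0 | y = 0)

# Likelihood ratio per feature: a ratio of 1 means observing x_i = 1
# cannot shift the posterior toward either class.
for f in ONES:
    print(f, pr_one(f, 1) / pr_one(f, 0))
```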


6 Learning algorithms

For each of the learning situations below, say what learning algorithm would be best to use, and why.

1. You have about 1 million training examples in a 6-dimensional feature space. You only expect to be asked to classify 100 test examples.

2. You are going to develop a classifier to recommend which children should be assigned to special education classes in kindergarten. The classifier has to be justified to the board of education before it is implemented.

3. You are working for Am*z*n as it tries to take over the retailing world. You are trying to predict whether customer X will like a particular book, as a function of the input, which is a vector of 1 million bits specifying whether each of Am*z*n's other customers liked the book. You will train a classifier on a very large data set of books, where the inputs are everyone else's preferences for that book, and the output is customer X's preference for that book. The classifier will have to be updated frequently and efficiently as new data comes in.

4. You are trying to predict the average rainfall in California as a function of the measured currents and tides in the Pacific Ocean in the previous six months.

Learning

Since the cost of using a nearest neighbor classifier grows with the size of the training set, sometimes one tries to eliminate redundant points from the training set. These are points whose removal does not affect the behavior of the classifier for any possible new point.

1. In the figure below, sketch the decision boundary for a 1-nearest-neighbor rule and circle the redundant points.

2. What is the general condition(s) required for a point to be declared redundant for a 1-nearest-neighbor rule? Assume we have only two classes (+, -). Restating the definition of redundant ("removing it does not change anything") is not an acceptable answer. Hint: think about the neighborhood of redundant points.

Classification

[Figure: eight labeled data points on a single feature axis f, marked 1 to 13]

The picture above shows a data set with 8 data points, each with only one feature value, labeled f. Note that there are two data points with the same feature value of 6. These are shown as two X's one above the other, but they really should have been drawn as two X's on top of each other, since they have the same feature value.

1. Consider using 1-Nearest Neighbors to classify unseen data points. On the line below, darken the segments of the line where the 1-NN rule would predict an O given the training data shown in the figure above.

[Answer line: feature axis f, marked 1 to 13]

2. Consider using 5-Nearest Neighbors to classify unseen data points. On the line below, darken the segments of the line where the 5-NN rule would predict an O given the training data shown in the figure above.

[Answer line: feature axis f, marked 1 to 13]

3. If we do 8-fold cross-validation using 1-NN on this data set, what would be the predicted performance? Settle ties by choosing the point on the left. Show how you arrived at your answer.
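With 8 points, 8-fold cross-validation is leave-one-out: each point is classified by 1-NN using the other 7. The figure's points are not listed in the text, so the sketch below runs on a made-up, HYPOTHETICAL data set (not the exam's). It only shows the procedure, including the rule that ties go to the point on the left.

```python
def nn_1d(train, f):
    """1-NN on one feature; ties go to the neighbor on the left (smaller f)."""
    # Sort key: distance first, then feature value, so the left point wins ties.
    return min(train, key=lambda pt: (abs(pt[0] - f), pt[0]))[1]

def leave_one_out_accuracy(data):
    """With n points, n-fold cross-validation holds out one point per fold."""
    correct = 0
    for i, (f, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        correct += nn_1d(rest, f) == label
    return correct / len(data)

# HYPOTHETICAL data: (feature value, class). NOT the points from the figure.
DATA = [(1, "O"), (3, "O"), (4, "X"), (6, "X"), (6, "X"), (8, "O"), (10, "X"), (12, "O")]
print(leave_one_out_accuracy(DATA))
```

For the exam question, replace `DATA` with the eight points read off the figure.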


Overfitting

For each of the supervised learning methods that we have studied, indicate how the method could overfit the training data (consider both your design choices as well as the training) and what you can do to minimize this possibility. There may be more than one mechanism for overfitting; make sure that you identify them all.

Nearest Neighbors

1. How does it overfit?

2. How can you reduce overfitting?

Decision Trees

1. How does it overfit?

2. How can you reduce overfitting?

3 Perceptron (7 pts)

[Figure: the data points below, plotted on axes x1 and x2]

Data points are: Negative: (-1, 0), (2, -2); Positive: (1, 0). Assume that the points are examined in the order given here.

Recall that the perceptron algorithm uses the extended form of the data points, in which a 1 is added as the 0th component.

1. The linear separator obtained by the standard perceptron algorithm (using a step size of 1.0 and a zero initial weight vector) is (0 1 2). Explain how this result was obtained.

2. What class does this linear classifier predict for the new point (2.0, -1.01)?

3. Imagine we apply the perceptron learning algorithm to the 5-point data set we used in Problem 1: Negative: (-1, 0), (2, 1), (2, -2); Positive: (0, 0), (1, 0). Describe qualitatively what the result would be.
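Here is a sketch of the standard perceptron on the extended points, cycling through them in the order given. It applies the update w ← w + rate · y · x whenever y (w · x) ≤ 0.

Traced from zero weights, it reproduces (0 1 2); the third pass is mistake-free. The dot product for the new point is 2 - 2.02 = -0.02, so the point is classified negative.

Running the same code on the 5-point data set from Problem 1 never reaches a mistake-free pass. The positive point (0, 0) lies inside the triangle formed by the three negative points, so no line separates the classes. The function name and the `max_epochs` cap are our own choices.

```python
def perceptron(points, w, rate=1.0, max_epochs=100):
    """Standard perceptron on extended points [1, x1, x2]; labels are +1 or -1.

    Cycles through the points in the given order and updates whenever
    label * (w . x) <= 0. Returns (weights, converged).
    """
    w = list(w)
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in points:
            xe = [1.0, *x]                      # prepend the constant 1 as x_0
            if label * sum(wi * xi for wi, xi in zip(w, xe)) <= 0:
                w = [wi + rate * label * xi for wi, xi in zip(w, xe)]
                mistakes += 1
        if mistakes == 0:
            return w, True
    return w, False

DATA = [((-1, 0), -1), ((2, -2), -1), ((1, 0), +1)]
w, converged = perceptron(DATA, [0.0, 0.0, 0.0])
print(w, converged)                                           # [0.0, 1.0, 2.0] True
print(sum(wi * xi for wi, xi in zip(w, [1.0, 2.0, -1.01])))   # slightly below 0

PROBLEM1 = [((-1, 0), -1), ((2, 1), -1), ((2, -2), -1), ((0, 0), +1), ((1, 0), +1)]
print(perceptron(PROBLEM1, [0.0, 0.0, 0.0])[1])              # False: never converges
```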


6 Perceptron (8 points)

The following table shows a data set and the number of times each point is misclassified during a run of the perceptron algorithm, starting with zero weights. What is the equation of the separating line found by the algorithm, as a function of x1, x2, and x3? Assume that the learning rate is 1 and the initial weights are all zero.

| x1 | x2 | x3 | y | times misclassified |
|---|---|---|---|---|
| 2 | 3 | 1 | +1 | 12 |
| 2 | 4 | 0 | +1 | 0 |
| 3 | 1 | 1 | -1 | 3 |
| 1 | 1 | 0 | -1 | 6 |
| 1 | 2 | 1 | -1 | 11 |
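Each mistake on a point adds y times its extended vector to the weights (rate 1, starting at zero). The final weights are therefore the count-weighted sum over the table, and the order of the mistakes does not matter.

The sketch assumes a bias component x0 = 1, as in the extended form used elsewhere in these problems. Under that assumption it gives w = (-8, -2, 5, -2), that is, the plane -8 - 2·x1 + 5·x2 - 2·x3 = 0. It also checks that this plane separates all five points.

```python
# Rows from the table: (x1, x2, x3, y, times misclassified).
ROWS = [
    (2, 3, 1, +1, 12),
    (2, 4, 0, +1, 0),
    (3, 1, 1, -1, 3),
    (1, 1, 0, -1, 6),
    (1, 2, 1, -1, 11),
]

# Starting from zero with learning rate 1, every mistake on a point adds
# y * [1, x1, x2, x3] to the weights (ASSUMING a bias input x0 = 1), so the
# final weights are the count-weighted sum of those vectors.
w = [0, 0, 0, 0]
for x1, x2, x3, y, count in ROWS:
    for j, xj in enumerate((1, x1, x2, x3)):
        w[j] += count * y * xj

print(w)  # w0, w1, w2, w3
# Check that every training point ends up on its own side of w . x = 0.
print(all(y * (w[0] + w[1] * x1 + w[2] * x2 + w[3] * x3) > 0
          for x1, x2, x3, y, _ in ROWS))
```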


1 Perceptron (20 points)

[Figure: the data points below, plotted on axes f1 and f2]

Data points are: Negative: (-1, -1), (2, 1), (2, -1); Positive: (-2, 1), (-1, 1)

Recall that the perceptron algorithm uses the extended form of the data points, in which a 1 is added as the 0th component.

1. Assume that the initial value of the weight vector for the perceptron is [0, 0, 1], that the data points are examined in the order given above, and that the rate (step size) is 1.0. Give the weight vector after one iteration of the algorithm (one pass through all the data points):

2. Draw the separator corresponding to the weights after this iteration on the graph at the top of the page.

3. Would the algorithm stop after this iteration or keep going? Explain.

4. If we add a positive point at (1, -1) to the other points and retrain the perceptron, what would the perceptron algorithm do? Explain.
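Here is a sketch of the trace under the stated setup: initial weights [0, 0, 1], extended points, step size 1.0, and points in the listed order.

In the first pass only (2, 1) is misclassified (w · x = 1 > 0 for a negative point), giving [-1, -2, 0]. On the next pass (-1, -1) is misclassified, so the algorithm keeps going.

With the extra positive point (1, -1), the segment from (-1, 1) to (1, -1) crosses the negative segment from (-1, -1) to (2, 1) at (0.2, -0.2). The classes are then no longer linearly separable, and the perceptron never stops updating. The `max_passes` cap is an arbitrary cutoff for the demonstration.

```python
def one_pass(points, w, rate=1.0):
    """One perceptron pass over extended points [1, f1, f2]; returns (w, mistakes)."""
    w = list(w)
    mistakes = 0
    for x, label in points:
        xe = [1.0, *x]                          # prepend the constant 1 as x_0
        if label * sum(wi * xi for wi, xi in zip(w, xe)) <= 0:
            w = [wi + rate * label * xi for wi, xi in zip(w, xe)]
            mistakes += 1
    return w, mistakes

def train(points, w, max_passes=1000):
    """Repeat passes until one makes no mistakes; give up after max_passes."""
    for _ in range(max_passes):
        w, mistakes = one_pass(points, w)
        if mistakes == 0:
            return w, True
    return w, False

DATA = [((-1, -1), -1), ((2, 1), -1), ((2, -1), -1), ((-2, 1), +1), ((-1, 1), +1)]

w1, _ = one_pass(DATA, [0.0, 0.0, 1.0])
print(w1)                                   # weights after the first pass
print(one_pass(DATA, w1)[1] > 0)            # the second pass still makes mistakes

# With a positive point at (1, -1) the classes overlap, so no pass can be
# mistake-free and training stops only because of the max_passes cap.
print(train(DATA + [((1, -1), +1)], [0.0, 0.0, 1.0])[1])   # False
```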
