
Fast Event-based Harris Corner Detection Exploiting the Advantages of Event-driven Cameras

Valentina Vasco, Student Member, IEEE, Arren Glover, Member, IEEE and Chiara Bartolozzi, Member, IEEE

Abstract— The detection of consistent feature points in an image is fundamental for various kinds of computer vision techniques, such as stereo matching, object recognition, target tracking and optical flow computation. This paper presents an event-based approach to the detection of corner points, which benefits from the high temporal resolution, compressed visual information and low latency provided by an asynchronous neuromorphic event-based camera. The proposed method adapts the commonly used Harris corner detector to event-based data, in which frames are replaced by a stream of asynchronous events produced in response to local light changes at µs temporal resolution. Responding only to changes in its field of view, an event-based camera naturally enhances edges in the scene, simplifying the detection of corner features. We characterised and tested the method on both a controlled pattern and a real scenario, using the dynamic vision sensor (DVS) on the neuromorphic iCub robot. The method detects corners with a typical error distribution within 2 pixels. The error is constant for different motion velocities and directions, indicating a consistent detection across the scene and over time. We achieve a detection rate proportional to speed, higher than that of the frame-based technique for a significant amount of motion in the scene, while also reducing the computational cost.

I. INTRODUCTION

Using conventional frame-based cameras, several tasks such as stereo vision, motion estimation, object recognition and target tracking require the robust localisation of pixels of interest in images. For instance, in the field of visual motion estimation, the well-known aperture problem makes the motion direction of an edge ambiguous, as only the motion component orthogonal to the primary axis of orientation of the object can be inferred from the visual input. Corner detection and tracking between two or more frames, instead, can be used to produce the true flow. Therefore, corner features, defined as the regions in the image where two edges intersect, must be detected consistently over time and from different orientations and viewpoints. Typical processing in the frame-based paradigm looks at the appearance of patches of pixels in each frame, where measures derived from self-similarity [1], gradients [2], color [3] and curvature [4] have been proposed to characterize corner pixels.

Conventional cameras, while widely adopted, are not always optimal for robotics tasks. During periods without motion, two or more images can contain the same redundant information, wasting a significant amount of computational resources. Furthermore, the fixed frame rate at which images are acquired is not suitable for tracking fast-moving targets. Tracking can be lost completely above Nyquist rates. Large displacements of features between frames introduce ambiguity when tracking multiple targets. Targets can also appear blurred in the direction of motion, so that tracking is lost.

V. Vasco, A. Glover and C. Bartolozzi are with the iCub Facility, Istituto Italiano di Tecnologia, Italy. {valentina.vasco, arren.glover, chiara.bartolozzi}@iit.it. V. Vasco is also with Università di Genova, Italy.

Event-based vision sensors (e.g. [5]) are biologically inspired sensors that produce an asynchronous stream of events in response to movements that occur in the sensor's field of view. In contrast to standard frame-based cameras, only the information from changing elements in the scene requires processing, thereby removing data redundancy and reducing the processing requirements. In addition, events can be conveyed with a temporal resolution of 1 µs, allowing scene dynamics to be computed precisely and orders of magnitude faster than with frame-based cameras. Event-driven sensors respond only to changes in contrast in the field of view, so they are natural contour detectors: events typically occur only on object edges. The corner detection problem is also simplified, because event-driven sensors inherently do not respond to uniform regions in the field of view. For instance, the well-known Canny edge detector was implemented in an event-driven way [6]. That implementation exploits this innate property of the sensor and achieves a more efficient and less resource-demanding detection than the frame-based counterpart.

In this paper, we applied the frame-based Harris corner detector [2] to event-driven data and showed that its performance benefits from the native properties of event-driven sensors. The innate edge enhancement facilitates corner detection. The sensors' precise timing allows dense detection of fast-moving corners, reducing the overall computational cost compared with the frame-based method. Event-based corner detection was previously proposed in [7], where events that belong to the intersection of two or more motion planes are labelled as corners. That technique requires the initial calculation of optical flow and depends on the flow direction being accurate. We propose instead that the variation around a corner can be detected directly from the pattern of active pixels in a small neighbourhood around the corner centre. The data is motion-driven, as motion inherently triggers events. Even so, we show that the resulting detection is independent of the speed and direction of the motion. The proposed algorithm is characterised and validated on the neuromorphic iCub humanoid robot [8]. Its visual system is composed of two dynamic vision sensors (DVS) mounted as eyes in the head of the iCub, with a total of 6 degrees of freedom (DoFs).

[Fig. 1 panels (a) and (b): 9 × 9 pixel grids, axes numbered 1–9]

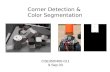

Fig. 1: A visualization of the asynchronous local contrast map when the local window includes an edge (a) and a corner (b). The black patterns correspond to "1" in the binary local map (i.e. where an event occurred). The local change in contrast is high in (b) along the two major axes, compared with (a), where it is high along one direction only.

II. THE ALGORITHM

The Harris corner detector [2] is one of the most widely used techniques to detect corner features in current frame-based vision processing, thanks to its reliability, low numerical complexity and invariance to image shift, rotation and lighting [9]. The method determines whether a pixel is a corner by shifting a local window by a small amount in different directions and measuring the average change in image intensity. This change is computed from the spatial gradient of the intensity. A corner is identified if a matrix containing the first derivatives of intensity has two large eigenvalues. This means that the major intensity gradients centred around the pixel occur in two different directions. In our approach, events are not discarded. Instead, the information carried by a single event is augmented when a corner is detected.
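The eigenvalue test can be illustrated with a minimal Python sketch. It assumes the image gradients (Ix, Iy) inside the window are already available, and it uses a uniform window instead of a Gaussian one. The helper names `structure_tensor` and `eigenvalues` are hypothetical; they are not part of any library or of the authors' code.

```python
import math


def structure_tensor(gradients):
    """Sum of outer products of (Ix, Iy) samples over a window (uniform weights).

    Returns (a, b, c) with M = [[a, b], [b, c]].
    """
    a = sum(gx * gx for gx, _ in gradients)
    b = sum(gx * gy for gx, gy in gradients)
    c = sum(gy * gy for _, gy in gradients)
    return a, b, c


def eigenvalues(a, b, c):
    """Closed-form eigenvalues (largest first) of the symmetric matrix [[a, b], [b, c]]."""
    mean = (a + c) / 2.0
    radius = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return mean + radius, mean - radius


# Edge: every gradient points along x, so only one eigenvalue is large.
edge = [(1.0, 0.0)] * 8
# Corner: gradients along x and along y, so both eigenvalues are large.
corner = [(1.0, 0.0)] * 4 + [(0.0, 1.0)] * 4

print(eigenvalues(*structure_tensor(edge)))    # (8.0, 0.0)
print(eigenvalues(*structure_tensor(corner)))  # (4.0, 4.0)
```

In a full frame-based detector, the gradients come from the intensity image, and this test is repeated at every pixel of every frame.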

A. Event-based corner detection

The DVS does not provide intensity measurements to which frame-based corner detection can be directly applied. Instead, it outputs a stream of events, each characterized by the pixel position and the timestamp at which the event occurred. Since pixels respond only to local variations of contrast in the visual scene, for this application we create an asynchronous local contrast map for each event, as shown in Fig. 1. We then use the Harris score to compare the information generated by the current active pixel with that of the surrounding pixels.

As a single event does not provide enough information on its own, events need to be accumulated to create an informative representation of the world. However, the data structure to use for processing the event stream is still an open question. A temporal window [10] depends on the velocity of the objects in the environment. Over- or under-estimating it results in a motion-blur effect or an incomplete representation. As the visual stream of events is intrinsically related to the motion of the object, we chose a representation with a fixed number of events. This representation is not affected by speed, although it is scene-dependent. We then need to integrate events over space to localize features in the scene.

Therefore, for each polarity, we consider a surface of events in the three-dimensional spatio-temporal domain, composed of the two dimensions of the sensor and an additional dimension representing time. For each incoming event, the surface maps the pixel position of the event to its timestamp, so that it represents the latest timestamps as they evolve in time [11]:

$$
\Sigma_t : \mathbb{N}^2 \to \mathbb{R}, \qquad \mathbf{p} = (x, y)^T \mapsto t = \Sigma_t(\mathbf{p}),
$$

and only the most recent $N$ events are used to asynchronously update the surface $\Sigma_t(x, y)$.
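A minimal Python sketch of such a fixed-count surface follows. The eviction policy is our assumption about how "only the most recent N events" could be implemented: a pixel is cleared once none of its events remains among the last N. The class name `FixedCountSurface` is hypothetical. One instance would be kept per polarity.

```python
from collections import deque


class FixedCountSurface:
    """Surface Sigma_t for one polarity, limited to the most recent N events.

    Hypothetical sketch: timestamps[y][x] holds the latest timestamp at pixel
    (x, y), or None once every event at that pixel has left the N-event FIFO.
    """

    def __init__(self, width, height, n_events):
        self.n_events = n_events
        self.timestamps = [[None] * width for _ in range(height)]
        self.counts = [[0] * width for _ in range(height)]  # FIFO events per pixel
        self.fifo = deque()  # pixel positions of the last N events, oldest first

    def add(self, x, y, t):
        self.timestamps[y][x] = t  # Sigma_t(p) <- t (latest timestamp wins)
        self.counts[y][x] += 1
        self.fifo.append((x, y))
        if len(self.fifo) > self.n_events:
            old_x, old_y = self.fifo.popleft()
            self.counts[old_y][old_x] -= 1
            if self.counts[old_y][old_x] == 0:  # no recent event left at that pixel
                self.timestamps[old_y][old_x] = None

    def is_active(self, x, y):
        return self.timestamps[y][x] is not None


# With N = 3, the fourth event pushes the first one off the surface.
s = FixedCountSurface(8, 8, n_events=3)
for t, x in enumerate([1, 2, 3, 4]):
    s.add(x, 1, t)
print(s.is_active(1, 1), s.is_active(4, 1))  # False True
```

Because the window is defined by an event count rather than a time span, fast and slow motions fill the surface with the same amount of data. This is the property the text relies on.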

For the current event $e_i = (\mathbf{p}_i, t_i, pol_i)$, we define a local spatial window $W$, $L$ pixels wide. We assign to each pixel position inside the window a 1 or a 0, depending on whether an event is present on the surface at that location. This gives the binarized surface $\Sigma_b$:

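$$
\Sigma_b : \mathbb{N}^2 \to \mathbb{N}, \qquad
\Sigma_b(\mathbf{p}) =
\begin{cases}
1 & \text{if } \exists\, e \text{ at } \mathbf{p} \\
0 & \text{otherwise}
\end{cases}
\tag{1}
$$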
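Equation (1) can be sketched as a function that extracts the $L \times L$ window around the current event from a timestamp surface. Treating pixels outside the sensor as empty is an assumption, and `binarize_window` is a hypothetical name.

```python
def binarize_window(surface, cx, cy, L):
    """Return the L x L binary patch Sigma_b centred on pixel (cx, cy).

    `surface` is a 2-D list indexed [y][x] holding the latest timestamp of an
    event at that pixel, or None when no event is present. Pixels outside the
    sensor are treated as empty (an assumption of this sketch). L is odd.
    """
    r = L // 2
    height, width = len(surface), len(surface[0])
    return [
        [1 if 0 <= y < height and 0 <= x < width and surface[y][x] is not None else 0
         for x in range(cx - r, cx + r + 1)]
        for y in range(cy - r, cy + r + 1)
    ]


surface = [[None] * 6 for _ in range(6)]
for x in range(6):
    surface[3][x] = 100 + x  # a horizontal edge of events at y = 3
print(binarize_window(surface, 3, 3, 3))  # [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
```

The timestamps are deliberately discarded here: only the presence of a recent event matters for $\Sigma_b$.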

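We then compute the gradients of the obtained binary surface, $\nabla\Sigma_b = \left[\frac{d\Sigma_b}{dx}, \frac{d\Sigma_b}{dy}\right]$, and use them to compute the symmetric $2 \times 2$ matrix $M$ as follows:

$$
M(e_i) = \sum_{e \in W} g(e)
\begin{bmatrix}
\left(\frac{d\Sigma_b(e)}{dx}\right)^2 & \frac{d\Sigma_b(e)}{dx}\,\frac{d\Sigma_b(e)}{dy} \\
\frac{d\Sigma_b(e)}{dy}\,\frac{d\Sigma_b(e)}{dx} & \left(\frac{d\Sigma_b(e)}{dy}\right)^2
\end{bmatrix}
\tag{2}
$$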
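The following sketch computes the entries of $M$ in eq. (2) for a binary patch. The text does not specify the derivative operator or the width of $g(e)$. Clamped central differences and an unnormalised Gaussian with σ = 1 pixel are therefore assumptions here. The name `harris_matrix` is hypothetical.

```python
import math


def harris_matrix(patch, sigma=1.0):
    """Gaussian-weighted structure tensor M (eq. 2) of a square binary patch.

    Gradients are clamped central differences; the weight of each pixel is an
    (unnormalised) Gaussian centred on the patch centre. Both choices are
    assumptions of this sketch. Returns (a, b, c) with M = [[a, b], [b, c]].
    """
    size = len(patch)
    centre, last = size // 2, size - 1
    a = b = c = 0.0
    for y in range(size):
        for x in range(size):
            dx = (patch[y][min(x + 1, last)] - patch[y][max(x - 1, 0)]) / 2.0
            dy = (patch[min(y + 1, last)][x] - patch[max(y - 1, 0)][x]) / 2.0
            g = math.exp(-((x - centre) ** 2 + (y - centre) ** 2) / (2.0 * sigma ** 2))
            a += g * dx * dx
            b += g * dx * dy
            c += g * dy * dy
    return a, b, c


edge = [[0] * 5, [0] * 5, [1] * 5, [0] * 5, [0] * 5]
corner = [[0] * 5, [0] * 5, [0, 0, 1, 1, 1], [0, 0, 1, 0, 0], [0, 0, 1, 0, 0]]
print(harris_matrix(edge))    # a = b = 0: contrast varies along y only
print(harris_matrix(corner))  # a == c up to rounding, b > 0: contrast varies along x and y
```

Because $\Sigma_b$ is binary, the gradients only take a few discrete values. The Gaussian weight is what makes events near the centre of the window dominate $M$.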

where $g(e)$ is a Gaussian window function. Similarly to the original Harris algorithm, we associate a score $R$ with each event $e_i$, computed as:

$$
R(e_i) = \det(M) - k\,\operatorname{trace}^2(M) = \lambda_1 \lambda_2 - k\,(\lambda_1 + \lambda_2)^2
\tag{3}
$$
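Eq. (3) can be evaluated directly from the entries of $M$ without an eigen-decomposition, because $\det(M) = \lambda_1\lambda_2$ and $\operatorname{trace}(M) = \lambda_1 + \lambda_2$. The sketch below checks numerically that both forms agree. The value k = 0.04 is an assumption: the text does not report k, and 0.04 is a common choice for the Harris detector. The example matrices are illustrative values, not measured data.

```python
import math


def harris_score(a, b, c, k=0.04):
    """R = det(M) - k * trace(M)^2 for M = [[a, b], [b, c]] (eq. 3)."""
    return (a * c - b * b) - k * (a + c) ** 2


def eigenvalues(a, b, c):
    """Closed-form eigenvalues of the symmetric matrix [[a, b], [b, c]]."""
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    return mean + radius, mean - radius


# Illustrative matrices (hypothetical values): an edge-like and a corner-like M.
for a, b, c in [(0.0, 0.0, 0.75), (0.63, 0.34, 0.63)]:
    l1, l2 = eigenvalues(a, b, c)
    r_from_eigenvalues = l1 * l2 - 0.04 * (l1 + l2) ** 2
    print(round(harris_score(a, b, c), 4), round(r_from_eigenvalues, 4))
```

Computing $R$ from the determinant and trace avoids a square root per event, which matters when the score is evaluated for every incoming event.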

where $\lambda_1$ and $\lambda_2$ are the eigenvalues of $M$ and $k$ is an empirically chosen constant, as in [2]. Three cases hold:

• if the patch includes only a few events due to sensor noise, the variation of contrast is negligible, so both eigenvalues $\lambda_1$ and $\lambda_2$, and thus $R$, are small;

• if the patch includes one edge (as in Fig. 1a), there is a high variation of contrast across the edge, so one eigenvalue is high and the other is small, and thus $R$ becomes negative;

• if the patch includes two edges (as in Fig. 1b), there is a high contrast variation along the two major axes, so both eigenvalues $\lambda_1$ and $\lambda_2$ are high and thus $R$ is positive: a corner event is probable. A special case is the line-ending, which can be seen as a corner if the edge is thick enough to produce a high contrast variation in both directions.

Based on these considerations, if $R(e_i)$ is greater than a set threshold $S$, the event $e_i$ is labelled as a corner event.
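The three cases and the threshold test can be combined into a per-event classifier. This self-contained sketch assumes clamped central differences, Gaussian weights with σ = 1 pixel and k = 0.04, none of which are specified in the text. The score scale depends on these choices. The threshold of 0.1 used in the example is therefore tuned for this sketch only and is not comparable to the value of $S$ reported in the experiments. The names `event_score` and `is_corner_event` are hypothetical.

```python
import math


def event_score(patch, k=0.04, sigma=1.0):
    """Harris score R (eq. 3) of a square binary patch centred on the event."""
    size = len(patch)
    last, centre = size - 1, size // 2
    a = b = c = 0.0
    for y in range(size):
        for x in range(size):
            # Clamped central differences (assumed derivative operator).
            dx = (patch[y][min(x + 1, last)] - patch[y][max(x - 1, 0)]) / 2.0
            dy = (patch[min(y + 1, last)][x] - patch[max(y - 1, 0)][x]) / 2.0
            # Unnormalised Gaussian weight g(e) centred on the current event.
            g = math.exp(-((x - centre) ** 2 + (y - centre) ** 2) / (2.0 * sigma ** 2))
            a += g * dx * dx
            b += g * dx * dy
            c += g * dy * dy
    return (a * c - b * b) - k * (a + c) ** 2


def is_corner_event(patch, threshold):
    """Label the current event as a corner when R exceeds the threshold S."""
    return event_score(patch) > threshold


noise = [[0] * 5, [0] * 5, [0, 0, 1, 0, 0], [0] * 5, [0] * 5]  # isolated event
edge = [[0] * 5, [0] * 5, [1] * 5, [0] * 5, [0] * 5]  # one straight edge
corner = [[0] * 5, [0] * 5, [0, 0, 1, 1, 1], [0, 0, 1, 0, 0], [0, 0, 1, 0, 0]]  # L-shape

for name, patch in [("noise", noise), ("edge", edge), ("corner", corner)]:
    print(name, round(event_score(patch), 4), is_corner_event(patch, threshold=0.1))
```

Under these assumptions, the edge gets a negative score and the isolated event a small positive one. The L-shaped corner gets the largest score and is the only patch labelled as a corner.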

III. EXPERIMENTS

In the event-driven paradigm, the visual stream of events depends on the amount of motion in the camera's field of view, whether objects are moving or the camera itself is moving. Therefore, the data generated by the camera is motion-dependent, and the corner detection algorithm needs to be invariant to different motion types. For example, a corner oriented at 45 degrees to the direction of motion should produce similar edge velocities and strengths evenly around the corner centre. As the orientation angle changes, the relative strengths and velocities can also vary, due to camera aperture problems affecting optical flow.

[Fig. 2 plot panels (a)–(f): score maps over x (px) and y (px), 0–120, with a colour bar from −1 to 6]

Fig. 2: Distribution of the score for different velocity magnitudes and corner orientations. Each row shows results for the datasets obtained with the same orientation and different magnitudes. The first row shows the score distribution for 15 degrees orientation and 10 deg/s (a), 40 deg/s (b) and 100 deg/s (c) magnitudes. The second row shows the score distribution for 60 degrees orientation and 10 deg/s (d), 40 deg/s (e) and 100 deg/s (f) magnitudes. Black dots represent the initial window of 1000 events. Only one polarity is shown for visualisation.

We characterised and tested the event-based corner detection on data collected from the dynamic vision sensor (DVS) mounted on the iCub robot [8], performing two different sets of experiments. The first set was performed by moving the iCub's eyes in front of a controlled pattern. Its aim was to determine the detection sensitivity to various motion speeds and to the relative orientations of corners and velocity directions. To evaluate the detection response at different velocities, we moved the cameras at several speeds and also rotated the pattern to achieve different relative orientations. We used black and white "images", which are similar to those of the frame-based approach, with the difference that the contrast change is much sharper, without any gradient. Therefore, the response to changes in angular magnitude and image rotation, as already shown in [2], should not change significantly in the event-driven method.

The second experiment mimicked a typical scenario in which the iCub is used: the robot looks around an environment where several objects are placed at different distances. Its aim was to determine the robustness of the method in a real scene and the effectiveness and consistency of the detection over time.

A. Characterization with a controlled input

The stream of events from the iCub's camera was recorded while the robot observed a static checkerboard, moving its eyes horizontally from left to right at 7 different velocities, from 10 up to 100 deg/s, with a step of 15 deg/s. For each acquisition, the checkerboard was rotated to 7 different orientations, from 0 to 90 degrees, with a step of 15 degrees. For this experiment, we empirically selected N = 1000 events and a window of L = 7 pixels.

As shown in Fig. 2, events located on a corner exhibit a significantly higher score than events located on the edges. This indicates potential for speed- and orientation-invariant detection. Events on line-endings produce a high score as well when the edge is thick (top line-endings in Fig. 2a and bottom line-endings in Fig. 2d). However, the line-ending is a feature point with a high information content and is also considered a reasonable feature point for tracking. The score at the start is higher than in the rest of the dataset. This seems related to the off polarity, as the score distribution is more uniform when we consider the on polarity. Importantly, the calculated scores are qualitatively consistent across different orientations and speeds, as seen in Fig. 2.

To quantitatively assess the detection performance with different velocities and orientations, we compared the corner events detected by the proposed algorithm with a ground truth. The ground truth was obtained by manually labelling the start and end positions of each corner. As the motion profile was very simple (horizontal motion), we only considered the corner position, disregarding any timing information. We assumed that corner positions should not deviate from the ground truth over time. To set a threshold, we considered the events located around a single corner within a slice of 1000 events (shown in Fig. 3a) and their score distribution with respect to the corresponding ground truth (shown in Fig. 3b). The distribution is bell-shaped. It has a strong peak in the centre (i.e. for the events located on the ground truth) and sharply decreases towards a steady state as we move away from the centre (i.e. for the events located on the edges). If the threshold S is set to 0.8, we can easily isolate the high scores on corners from the lower edge scores, with an estimated error distribution within 2 pixels.

[Fig. 3 plot panels: (a) x (px) vs y (px), 0–120, with ground-truth corners labelled C1–C13; (b) score (−1 to 1.5) vs distance from ground truth (px, −10 to 10)]

Fig. 3: Slice of 1000 events and ground truth corners (a) and distribution of the score compared to the distance from the ground truth (b). The threshold S is marked by the dashed black line. Blue dots represent the score around the ground truth (red line) of each event inside the dotted black square within the slice of 1000 events.

To evaluate how well this measure generalises across different corners, the threshold was applied to all the detected corners in the scene. The detection accuracy was further characterised by computing the distance between the algorithmically detected corners and the ground truths. This was done for every manually labelled corner/line-ending shown in Fig. 3a, at different speeds and orientations. As shown in Fig. 4, the error is mostly consistent for all corners in all datasets with varying velocities and orientations. The typical detection error remains below 2 pixels. Its mean value is 1.1 ± 0.3 px when varying the speed (Fig. 4a) and 1.2 ± 0.4 px when varying the orientation (Fig. 4b). The overall detection error is also comparable to the results obtained in [7]. We therefore assert that event-based Harris detection has an error distribution similar to that of the previously proposed intersection of motion constraints.
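One way to compute this error for the horizontal-motion datasets is to measure the distance from each detected corner event to the segment joining the labelled start and end positions. The sketch below assumes that reading of the ground truth. It also assumes that detections have already been associated with a single corner, and it reports the population standard deviation. The function names and the example coordinates are hypothetical.

```python
import math
import statistics


def point_to_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment from a to b (all (x, y))."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:  # degenerate segment: start == end
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, then clamp to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def detection_error(detections, start, end):
    """Mean and population standard deviation of the distances to the ground truth."""
    errors = [point_to_segment_distance(p, start, end) for p in detections]
    return statistics.mean(errors), statistics.pstdev(errors)


# Hypothetical detections of one corner moving horizontally from (10, 50) to (100, 50).
mean_err, std_err = detection_error([(20, 51), (40, 49), (60, 52)], (10, 50), (100, 50))
print(f"{mean_err:.2f} +/- {std_err:.2f} px")
```

Clamping the projection keeps detections beyond the labelled end points from being scored against the infinite line rather than the observed path.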

[Fig. 4 plot panels: (a) error (px, 0–3) vs speed magnitude (10–100 deg/s); (b) error (px, 0–3) vs corner orientation (0–90 deg)]

Fig. 4: Mean error at S = 0.8 for all the corners at a fixed orientation (60 deg) and different speed magnitudes (a), and at different orientations with respect to the corner's direction at a fixed speed (10 deg/s) (b). Blue dots represent the initial window of 1000 events.

We compared the number of Harris computations required by the frame-based and event-based methods. We assumed that the traditional camera captures frames at 30 fps with the same spatial resolution of 128 × 128 pixels as our event-based camera. The traditional method computes the Harris score for each pixel, performing a global search for the maximum response over the entire frame. The event-based technique computes the score asynchronously and locally, only when events occur. Therefore, the former requires a constant processing rate (30 × 128 × 128 = 491,520 proc/s). The latter requires a variable processing rate that depends on the number of processed events, which can vary with the total number of edges in the scene and their velocities. As shown in Fig. 5a, the event-based corner detector drastically reduced the computational demand at all speeds when viewing the checkerboard pattern. The black crosses show the computational cost for a typical scene, where several objects are placed in front of the robot and it moves its cameras at typical speeds (3, 5 and 20 deg/s). The computational cost under typical conditions is therefore approximately 94% lower than that of a frame-based camera, demonstrating the event-based advantage in terms of resource consumption.

Similar considerations apply to the number of detections per second, shown in Fig. 5b. For a fair comparison, we considered a single moving corner in the different datasets. We computed the number of detections per second, counting only the corner events that belonged to the associated ground-truth line. The frame-based detection rate is constant (∼30 det/s, assuming a single corner detection per frame). The event-based detection rate instead increases with velocity. It achieves similar results at low speed (< 10 deg/s) but clearly outperforms the frame-based approach at higher speed (> 25 deg/s), reaching on average ∼250 det/s at the largest velocity. Even a fast-moving target produces spatially dense observations. This is advantageous for tracking, as no information is lost (i.e. between frames), and it has implications for removing tracking ambiguity between multiple targets. Also, the frame-based approach suffers from 33 ms of latency, inherently due to the camera frame rate. The event-based detector processes the stream event by event, reducing the latency to a few µs.
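The frame-based figures used in this comparison follow from simple arithmetic, sketched below under the stated assumptions: 30 fps, 128 × 128 pixels, one Harris evaluation per pixel per frame, and one corner detection per frame. The event-based cost is modelled as one evaluation per processed event. The event rate passed to `relative_saving` is a hypothetical input, not a measured value, and all function names are hypothetical.

```python
def frame_based_harris_rate(fps=30, width=128, height=128):
    """Harris evaluations per second when every pixel of every frame is scored."""
    return fps * width * height


def frame_based_detection_rate(fps=30, corners_per_frame=1):
    """Detections per second for a corner that is found once in every frame."""
    return fps * corners_per_frame


def frame_period_ms(fps=30):
    """Time between consecutive frames in ms; a detection can wait up to this long."""
    return 1000.0 / fps


def relative_saving(events_per_second, fps=30, width=128, height=128):
    """Fractional cost reduction when one Harris score is computed per event."""
    return 1.0 - events_per_second / frame_based_harris_rate(fps, width, height)


print(frame_based_harris_rate())     # 491520
print(frame_based_detection_rate())  # 30
print(round(frame_period_ms(), 1))   # 33.3
```

The frame-based numbers are fixed by the camera settings. The event-based cost and detection rate scale with the event rate, which is why both grow with speed in Fig. 5.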

B. Characterization for a real scene

To test the robustness of our method in a real scenario, we performed experiments in a typical iCub environment. Several objects (a sponge, a toy car, a toy robot, a book, a tissue box and a tea box) were placed on a table in front of the robot (as in Fig. 6). The robot in turn moved its eyes along several directions (shown in Fig. 7a) at a speed of 5 deg/s while acquiring event data from the scene. We empirically selected N = 2000 events and a window of L = 5 pixels. The algorithm detected many moving corners in the scene; examples of the detected corners for different motion directions are shown in Fig. 7. The sharpest corners are consistently detected over time and along different trajectories (e.g. the four corners of the book and of the sponge). However, the low spatial resolution of the camera makes less-sharp corners more ambiguous in the binarised images. The exact central position of these corners (e.g. on the toy robot or toy car) is therefore not as well defined.

[Fig. 5 plot panels: (a) number of Harris computations (proc/s, ×10^5, 0–6) vs speed (0–100 deg/s); (b) number of detections (det/s, 0–350) vs speed (0–100 deg/s), one curve per checkerboard orientation (0–90 deg)]

Fig. 5: Comparison between the computational cost (a) and the detection rate (b) of the frame-based detector (dotted line) and the event-based detector, for different velocities of iCub's eyes and different orientations of the checkerboard. The cross markers indicate the processing rate for the complex scene (realistic velocities generally used on the robot have been tested). Both polarities are processed.

Fig. 6: Experimental setup for corner detection in typical iCub environments.

Evaluating the detection consistency over time, including changes in motion direction, is important to produce a proper signal for tracking. Given a rough estimate of ∼45 manually labelled corners in the visual scene, we grouped the algorithmically detected corner events that belonged to the same moving corner. We then computed the number of grouped corner events over time. The total number of detected corners has a mean value of 45.7 and remains mostly consistent over time, as shown in Fig. 8a. This indicates that the corner detection algorithm responds consistently even in more cluttered datasets. During periods in which the eyes are changing direction, the low motion results in a low number of events and hence of corner detections. An example of traces in (x, y, t) space from the 'b' trajectory is shown in Fig. 8b. One corner was selected from each object in the scene, and its (x, y) trajectory was manually tagged over the full dataset, as shown in Fig. 9. The ground truth was computed for each corner by accumulating events over a period of 1 s and manually labelling the central corner pixel. We visually identified a radius of 5 pixels, marked by the green circles in Fig. 7b, within which corner events were considered as possible corner candidates. The limited testing range is necessary because many corners are spatially close. This makes the analysis of actual corner accuracy difficult from a data association perspective.

[Fig. 8 plot panels: (a) number of corners (0–80) vs time (s), with trajectory segments b–h marked; (b) corner events in (x, y, t) space, x and y in px, t in s]

Fig. 8: Number of detected corners over time (a) and detected corner events over time in the (x, y, t) space (b) along the b trajectory (Fig. 7a). Blue dots represent the initial window of 2000 events corresponding to Fig. 7b, and colours indicate corner events belonging to different moving corners.

As our data is motion-driven, we get no detections during periods of no motion. Importantly, however, when motion occurs, consistent corner events occur in regions corresponding to the ground truth. The overall average error is < 3 pixels, significantly lower than the 5-pixel limit. The error increases for objects whose corners are not visually well defined: the toy robot and toy car corners are detected with an error of 2.8 ± 1.4 px and 3.1 ± 1.3 px respectively. This also happens because the spatially closest corners merge even within the selected radius of 5 pixels. For example, it is difficult to disentangle corner events that belong to different toy car corners. However, the trajectories traced by such points on the image plane appear smooth and coherent across changes in time and motion direction. We therefore propose that a suitable tracking method could be successfully applied.

IV. DISCUSSION AND FUTURE WORK

In this work, we have presented the event-driven Harris algorithm for corner detection using the event-driven DVS. It detects moving corners in visual scenes with a lower computational cost than a frame-based camera, at a detection rate that is proportional to speed. Event-based Harris detection processes each event asynchronously, i.e. whenever the corner moves by a pixel. It thus requires processing only during motion and achieves low latency. In comparison, the frame-based camera receives an observation at a set frame rate regardless of any position change, and with higher latency.

We used a data structure with a fixed number of events, tuned according to the scene complexity; this resulted in a higher value for the real-scene dataset. This parameter, however, did not affect the score, and the same threshold could be used for both datasets. Other representations are still under investigation.

We showed that, while motion is required to produce events, the algorithm was invariant to speed and to the relative orientation between the corner and the direction of its velocity. The latter matters when spatio-temporal gradients are used to compute optical flow, because in that situation the aperture problem produces erroneous flow estimates. The algorithm was competitive with previous literature, achieving similar error distributions (< 2 pixels) without the need to calculate optical flow. In natural scenes, the number of corners remained reasonably constant over time. The detection plots indicate a strong potential for robust tracking of corners on the event-driven iCub robot. In particular, we plan to use these trackable features to generate a sparse optical flow that is unaffected by the aperture problem. Such flow can be used to learn entire scene-flow statistics for characterising the ego-motion of the event-driven iCub.

[Fig. 7 panels: (a) eye trajectories b–h; (b)–(h) detected corner events]

Fig. 7: Trajectories set on iCub's cameras and examples of corner events detected along different trajectories and at different time intervals: [0.3–0.4] s (b), [3.7–3.8] s (c), [8–8.2] s (d), [12.1–12.3] s (e), [15.8–16] s (f), [19.7–19.8] s (g) and [23.5–23.7] s (h). The green circles in (b) show the ground truth radius of 5 pixels. Both polarities are shown.

[Fig. 9 plot panels: (a) x (px) and (b) y (px), 0–120, vs time (0–25 s) for the book, sponge, robot toy, car toy, tissue box and tea box corners; (c) error (px, 0–5) per object]

Fig. 9: Trajectories of the x (a) and y (b) coordinates of the detected corner events (dots) with corresponding ground truths (solid lines), and mean and standard deviation of the error for each object (c). Shaded areas indicate periods without camera motion, which do not produce detections.

REFERENCES

[1] H. P. Moravec, "Obstacle avoidance and navigation in the real world by a seeing robot rover," tech. report CMU-RI-TR-80-03, p. 175, 1980.

[2] C. Harris and M. Stephens, "A Combined Corner and Edge Detector," Proceedings of the Alvey Vision Conference 1988, pp. 147–151, 1988.

[3] J. Chu, J. Miao, G. Zhang, and L. Wang, "Color-based corner detection by color invariance," HAVE 2011 - IEEE International Symposium on Haptic Audio-Visual Environments and Games, Proceedings, no. 1, pp. 19–23, 2011.

[4] F. Mokhtarian and R. Suomela, "Robust image corner detection through curvature scale space," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 12, pp. 1376–1381, 1998.

[5] P. Lichtsteiner, C. Posch, and T. Delbruck, "A 128 × 128 120 dB 15 µs latency asynchronous temporal contrast vision sensor," IEEE Journal of Solid-State Circuits, vol. 43, no. 2, pp. 566–576, 2008.

[6] S.-H. Ieng, C. Posch, and R. Benosman, "Asynchronous Neuromorphic Event-Driven Image Filtering," Proceedings of the IEEE, vol. 102, no. 10, pp. 1485–1499, Oct. 2014.

[7] X. Clady, S.-H. Ieng, and R. Benosman, "Asynchronous event-based corner detection and matching," Neural Networks, vol. 66, pp. 91–106, 2015.

[8] G. Metta, L. Natale, F. Nori, G. Sandini, D. Vernon, L. Fadiga, C. von Hofsten, K. Rosander, M. Lopes, J. Santos-Victor, A. Bernardino, and L. Montesano, "The iCub humanoid robot: An open-systems platform for research in cognitive development," Neural Networks, vol. 23, no. 8-9, pp. 1125–1134, 2010.

[9] D. Parks and J. Gravel, "Corner Detection," International Journal of Computer Vision, pp. 1–17, 2004.

[10] R. Benosman, S.-H. Ieng, C. Clercq, C. Bartolozzi, and M. Srinivasan, "Asynchronous frameless event-based optical flow," Neural Networks, vol. 27, pp. 32–37, Mar. 2012. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/22154354

[11] R. Benosman, C. Clercq, X. Lagorce, S.-H. Ieng, and C. Bartolozzi, "Event-based visual flow," IEEE Transactions on Neural Networks and Learning Systems, vol. 25, no. 2, pp. 407–417, Feb. 2014.
