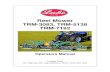




Gyorgy Denes, Kuba Maruszczyk, Rafał K. Mantiuk

Department of Computer Science and Technology

Problem

Rendering in VR is exceptionally expensive due to the requirements for display resolution, anti-aliasing and refresh rate (90Hz). However, the angular resolution of headsets is 10x lower than that of a desktop monitor. Additionally, transmitting frames to the headset requires high-bandwidth dedicated links, especially in a wireless setup.
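To give a sense of scale for the transmission cost, the raw bandwidth of an uncompressed stream can be computed directly. The inputs are assumptions, not figures from the poster: the HTC Vive's 2160×1200 combined panel resolution (1080×1200 per eye), 24-bit RGB, 90Hz, and no compression, blanking or protocol overhead.

```python
def raw_bandwidth_gbps(width, height, bits_per_pixel, refresh_hz):
    """Uncompressed video bandwidth in gigabits per second."""
    return width * height * bits_per_pixel * refresh_hz / 1e9


# Assumed: HTC Vive panel (2160x1200 in total), 24-bit RGB, 90Hz,
# no compression and no blanking or protocol overhead.
vive = raw_bandwidth_gbps(2160, 1200, 24, 90)
print(f"{vive:.2f} Gbit/s")  # 5.60 Gbit/s
```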

Current temporal approaches [Beeler et al. 2016; Vlachos 2016] exploit the coherence between consecutive frames by rendering only every k-th frame; the in-between frames are generated by transforming the previous frame. These techniques, however, are recommended only as a last resort [Vlachos 2016].
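The "render every k-th frame, transform the previous one" scheme can be sketched on a single row of pixels. This is a toy stand-in, not the actual ASW or Vlachos algorithm: the transform is a pure integer translation, and the renderer `render` and the per-frame image motion `motion_px` are hypothetical.

```python
def shift_row(row, dx):
    """Translate a 1D row of pixels by dx pixels (integer), repeating the
    edge pixels. A toy stand-in for reprojection, which warps 2D frames."""
    n = len(row)
    return [row[min(max(i - dx, 0), n - 1)] for i in range(n)]


def render_every_kth(render, k, num_frames, motion_px):
    """Run the renderer only on every k-th frame and synthesise the frames
    in between by shifting the most recently rendered frame.

    render:    hypothetical renderer, returns a row of pixels for frame t
    motion_px: hypothetical image motion in pixels per frame
    """
    frames, last, last_t = [], None, 0
    for t in range(num_frames):
        if t % k == 0:
            last, last_t = render(t), t
            frames.append(last)
        else:
            frames.append(shift_row(last, motion_px * (t - last_t)))
    return frames


# Usage: a bright dot moving one pixel per frame, rendered on every 2nd frame.
rendered_at = []

def moving_dot(t):
    rendered_at.append(t)
    return [1 if i == 2 + t else 0 for i in range(8)]

frames = render_every_kth(moving_dot, k=2, num_frames=6, motion_px=1)
print(rendered_at)  # [0, 2, 4]
```

Here the synthesised frames match what the renderer would have produced only because the dot moves by exactly the assumed translation; with any other motion, the shifted frames and the true frames differ.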

Motivation

A temporal multiplexing algorithm that takes advantage of two limitations of the visual system:

1. finite integration time, which results in the fusion of rapid temporal changes;

2. the inability to perceive high-spatial-frequency signals at high temporal frequencies.

[Figure: rendered frames pass from GPU / Rendering through Transmission to Decoding & display, producing the perceived stimulus.]

References

Method

In the binocular setup, we alternate the reduced-resolution and compensated frames off-phase for the two eyes. With a naive OpenGL/OpenVR implementation, we achieved a steady 19-25% speed-up with this setup.
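The off-phase schedule and its effect on the number of shaded pixels can be sketched as follows. Which eye starts with the reduced frame, the linear scale factor `scale`, and the cost model (rendering cost proportional to pixel count) are all assumptions for illustration.

```python
def reduced_eye(frame_index):
    """Eye that receives the reduced-resolution frame at this frame index.
    Assumption: left eye on even frames, right eye on odd frames; the poster
    only states that the two eyes are off-phase."""
    return "left" if frame_index % 2 == 0 else "right"


def frame_cost(frame_index, scale):
    """Shaded pixels for both eyes in one frame, in units of one
    full-resolution eye view. Hypothetical cost model: cost is proportional
    to pixel count, and the reduced view has `scale` of the full resolution
    along each axis."""
    reduced = reduced_eye(frame_index)
    return sum(scale * scale if eye == reduced else 1.0 for eye in ("left", "right"))


scale = 0.5
costs = [frame_cost(t, scale) for t in range(4)]
print([reduced_eye(t) for t in range(4)])  # ['left', 'right', 'left', 'right']
print(costs)                               # [1.25, 1.25, 1.25, 1.25]
print(1 - costs[0] / 2)                    # fraction of pixels saved: 0.375
```

In this model every frame pairs one full-resolution view with one reduced view, so the per-frame cost stays constant instead of alternating between expensive and cheap frames. The 37.5% reduction in shaded pixels for `scale = 0.5` ignores any work that does not scale with pixel count, so it should not be read as a prediction of the measured 19-25% speed-up.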

In a subjective user experiment, we compared the perceived quality of TRM with 90Hz, 45Hz (no reprojection), the state-of-the-art NCSFI and ASW. TRM performed consistently better on both the HTC Vive and the Oculus Rift.

Our proposed method, Temporal Resolution Multiplexing (TRM), renders even-numbered frames at reduced resolution.

Before display:

1. Odd-numbered frames are compensated for the loss of high frequencies.

2. Values lost when clamping to the dynamic range of the display are stored in a temporary residual buffer.

3. The residual is added to the next even-numbered frame with a weight mask that takes motion into account.

When the sequence is viewed at high frame rates (> 90Hz), the visual system perceives the original, full-resolution video.
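The three steps above can be sketched on a single row of linear pixel values. Several details are assumptions rather than the poster's specification: a 3-tap box blur stands in for rendering at reduced resolution and upsampling, the content is treated as static across the frame pair, the compensated frame is taken to be 2I - L (so that the two frames average to the original I), and a single scalar `weight` replaces the motion-dependent weight mask.

```python
def box_blur(row, radius=1):
    """Low-pass filter: mean over a (2*radius+1)-pixel window, edges clamped.
    A stand-in for rendering at reduced resolution and upsampling."""
    n = len(row)
    out = []
    for i in range(n):
        window = [row[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def clamp01(x):
    """Clamp to the display's dynamic range, here normalised to [0, 1]."""
    return min(max(x, 0.0), 1.0)


def trm_pair(full, residual_in, weight=1.0):
    """One reduced/compensated frame pair on a 1D row of linear pixel values.

    full:        full-resolution content (assumed static across the pair)
    residual_in: residual buffer left over from the previous pair
    weight:      hypothetical scalar in [0, 1] standing in for the per-pixel,
                 motion-dependent weight mask
    Returns (reduced, compensated, residual_out).
    """
    low = box_blur(full)
    # Even frame: reduced resolution, plus the weighted residual from before.
    reduced = [clamp01(lo + weight * r) for lo, r in zip(low, residual_in)]
    # Odd frame: restore the lost high frequencies so that the pair averages
    # to the full-resolution content: (L + (2I - L)) / 2 = I.
    wanted = [2 * f - lo for f, lo in zip(full, low)]
    compensated = [clamp01(w) for w in wanted]
    # Values cut off by clamping are kept for the next even frame.
    residual_out = [w - c for w, c in zip(wanted, compensated)]
    return reduced, compensated, residual_out


# A smooth row needs no clamping, so the pair averages back to it.
smooth = [0.2, 0.4, 0.6, 0.4, 0.2]
reduced, compensated, residual = trm_pair(smooth, [0.0] * len(smooth))
perceived = [(a + b) / 2 for a, b in zip(reduced, compensated)]  # equals smooth up to rounding

# A sharp peak overshoots [0, 1]; the clipped part goes into the residual
# and is added to the next reduced frame.
sharp = [0.0, 0.0, 1.0, 0.0, 0.0]
_, compensated, residual = trm_pair(sharp, [0.0] * len(sharp))
next_reduced, _, _ = trm_pair(sharp, residual)
```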

Our Approach

Reduce the cost of (A) rendering and (B) transmission for virtual reality (VR) without perceivable loss of quality.

Results

[Figure: Quality (JOD) of 45Hz, NCSFI, ASW, TRM ½ and 90Hz for the Car, Football and Bedroom scenes on the HTC Vive and the Oculus Rift.]

[Table: Comparison of 45Hz, NCSFI, ASW, 90Hz, BFI and TRM in terms of motion quality, ease of integration, flicker-free display, brightness, bandwidth savings and rendering savings.]

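[Figure: (A) rendering and (B) transmission. Frame sequences over time for the right and left eyes at 90Hz, TRM ¼ and TRM ½ (frames t_i and t_{i+1}), and the pipeline Render eye → TRM Post-Process → VR compositor.]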

Alex Vlachos. 2016. Advanced VR Rendering Performance. In Game Developers Conference (GDC).

Dean Beeler, Ed Hutchins, and Paul Pedriana. 2016. Asynchronous Spacewarp. https://developer.oculus.com/asynchronous-spacewarp/. Accessed: 2018-06-14.

Hanfeng Chen, Sung-soo Kim, Sung-hee Lee, Oh-jae Kwon, and Jun-ho Sung. 2005. Nonlinearity compensated smooth frame insertion for motion-blur reduction in LCD. In 2005 IEEE 7th Workshop on Multimedia Signal Processing. IEEE, 1-4. https://doi.org/10.1109/MMSP.2005.248646

Our method is designed to maximise perceived motion quality while reducing rendering cost and data transfer. On 90Hz displays, current temporal multiplexing techniques such as nonlinearity compensated smooth frame insertion (NCSFI) [Chen et al. 2005] and black frame insertion (BFI) result in either motion artifacts or flicker.
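The flicker and brightness cost of BFI can be illustrated with simple arithmetic on one pixel of a flat image region, assuming the eye integrates light linearly over the two-frame cycle. On a 90Hz display, the image/black alternation repeats every two frames, i.e. at 45Hz. For comparison, the sketch assumes TRM's compensated frame is 2I - L, where L is the low-pass (reduced-resolution) frame; NCSFI is not modelled. The pixel value is hypothetical.

```python
def temporal_stats(sequence):
    """Mean (perceived level under linear temporal integration) and
    peak-to-peak modulation of one pixel over a frame sequence."""
    mean = sum(sequence) / len(sequence)
    return mean, max(sequence) - min(sequence)


value = 0.6  # hypothetical linear value of a pixel in a flat image region

# Black frame insertion at 90Hz: image, black, image, black, ...
bfi = [value, 0.0] * 4

# TRM on a flat region: low-pass filtering a flat patch leaves it unchanged,
# so the reduced frame L equals the value and 2*value - L equals it too.
low = value
trm = [low, 2 * value - low] * 4

print(temporal_stats(bfi))  # mean ≈ 0.3 (half brightness), 0.6 swing at 45Hz
print(temporal_stats(trm))  # mean ≈ 0.6, no swing
```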

Exploiting the limitations of spatio-temporal vision for more efficient VR rendering
