Computer Music Journal, 24:4, pp. 44–63, Winter 2000
© 2000 Massachusetts Institute of Technology.

Emotional Coloring of Computer-Controlled Music Performances

Roberto Bresin and Anders Friberg
Speech Music Hearing Department (TMH)
Royal Institute of Technology (KTH)
Drottning Kristinas väg 31
100 44 Stockholm, Sweden
[roberto, andersf]@speech.kth.se
www.speech.kth.se/music/

When interpreting a musical score, performers introduce deviations in time, sound level, and timbre from the values indicated by composers. These deviations depend on the expressive, mechanical, and acoustical possibilities offered by the instrument being played, and they can vary in nature. Musical structure, biological motion, timekeeper variance and motor variance, and the performer's expressive intentions are the most common sources of deviation in a performance. In this paper, we focus on the deviations that render different emotional characteristics in music performance as part of expressive intentions.

Many important contributions to research in this area derive from Alf Gabrielsson's group at the University of Uppsala. Much of their work has centered on the so-called basic emotions (anger, sadness, happiness, and fear, sometimes complemented with solemnity and tenderness). The group found that all of these emotions, as conveyed by players, could be clearly recognized by an audience containing both musically trained and untrained listeners (Juslin 1997a, 1997b). Other researchers have shown that more complex emotional states can be successfully communicated in performance, although it is not completely clear how these states are defined, because performers and listeners often use different terms in describing intentions and perceived emotions (Canazza et al. 1997; Battel and Fimbianti 1998; De Poli, Rodà, and Vidolin 1998; Orio and Canazza 1998).

The identification of a communication code in real performances is another essential task in research on emotional aspects of music performance, and researchers have approached this problem in several ways. Gabrielsson and Juslin (1996) asked professional musicians to play a set of simple melodies with different prescribed emotions. From these renditions, the authors identified sets of potential acoustical cues that players of different instruments (violin, flute, and guitar) used to encode emotions in their performances; these cues were similar to the ones listeners used for decoding the emotions (Juslin 1997c). A difficulty with this experimental design is that conflicts may exist between the general character of the composition and the prescribed emotional quality of its performance.

Another important question is to what extent the characteristic performance variations contain information that is essential to the identification of emotion. The significance of various performance cues in the identification of emotional qualities of a performance has been tested in synthesis experiments. Automatic performances were obtained by setting certain expressive cues to greater or lesser values, either in custom-developed software (Canazza et al. 1998) or on a commercial sequencer (Juslin 1997c), and in formal listening tests, listeners were able to recognize and identify the intended emotions. In the computer program developed by Canazza et al., the expressive cues were applied to a "neutral" performance played by a live musician with no intended emotion, as opposed to a computer-generated "dead-pan" performance. Juslin manually adjusted the values of some previously identified cues by means of "appropriate settings on a Roland JX1 synthesizer that was MIDI-controlled" by a Synclavier III. No phrase markings were used, and Juslin assumes that this reduced listeners' ratings of overall expressiveness.

The present work attempts to further explore the flexibility of Director Musices (DM), a program for automatic music performance that has been developed in our group (Friberg et al. 2000). More specifically, we have explored the possibilities of using the DM program to produce performances that differ with respect to emotional expression.

Director Musices

The DM program is a LISP language implementation of the KTH performance rule system (e.g., Friberg 1991, 1995a; Sundberg 1993) for automatic
performance of music. It contains about 25 rules that model performers' renderings of, for example, phrasing, accents, or rhythmic patterns. The DM program consists of a set of context-dependent rules that introduce modifications of amplitude, duration, vibrato, and timbre. These modifications are dependent on, and thus reflect, musical structure as defined by the score, complemented by chord symbols and phrase markers.

The program is deterministic: it always introduces the same modifications given identical musical contexts. This determinism may appear counterintuitive: while DM always generates the same performance of a given composition, it is well known that a piece of music can be performed in a number of different but musically acceptable ways, depending on, among other things, the performer.

However, Friberg (1995b) demonstrated that DM can indeed produce different performances of a piece simply by varying the magnitude of the effects of a single rule that was applied to four hierarchical phrase levels. By these simple means, DM was capable of generating inter-onset interval (IOI) patterns that closely matched those observed in recordings by three famous pianists. This result indicates that most differences between performances can be explained in terms of how players emphasize and demarcate the structure.
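Friberg's point, that one rule applied with different emphasis at different phrase levels yields different timing patterns, can be illustrated with a small Python sketch. The arch shape (slow at phrase edges, fast in the middle), the `amp` scale, and the function names are our own assumptions for illustration; this is not the published Phrase Arch formula.

```python
# Hypothetical sketch: one arch-shaped tempo rule applied at several phrase levels.
# The shape and scaling are illustrative assumptions, not the DM implementation.

def arch_shape(x: float, power: float = 2.0) -> float:
    """Relative IOI deviation at normalized position x in [0, 1] of a phrase:
    +0.5 at the phrase edges (slower), -0.5 in the middle (faster)."""
    return abs(2.0 * x - 1.0) ** power - 0.5


def phrase_arch_iois(nominal, phrases_by_level, k_by_level, amp=0.1):
    """Return performed IOIs (ms).

    nominal: nominal IOIs in ms, one per note.
    phrases_by_level: {level: [(start, end), ...]}, note indices, end exclusive.
    k_by_level: {level: k}; k = 0 switches a level off, larger k exaggerates it.
    """
    factors = [1.0] * len(nominal)
    for level, phrases in phrases_by_level.items():
        k = k_by_level.get(level, 0.0)
        for start, end in phrases:
            n = end - start
            for i in range(start, end):
                x = (i - start) / (n - 1) if n > 1 else 0.5
                # Contributions from different phrase levels are simply added.
                factors[i] += k * amp * arch_shape(x)
    return [ioi * f for ioi, f in zip(nominal, factors)]


if __name__ == "__main__":
    nominal = [500.0] * 8
    levels = {5: [(0, 8)], 6: [(0, 4), (4, 8)]}  # one phrase, two sub-phrases
    # Two "performers" who differ only in how much they stress each level.
    print(phrase_arch_iois(nominal, levels, {5: 1.0, 6: 0.0}))
    print(phrase_arch_iois(nominal, levels, {5: 0.5, 6: 1.5}))
```

Running it prints two different IOI profiles for the same score; only the per-level k values differ.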

Macro-Rules for Emotional Expressive Performance

Gabrielsson (1994, 1995) and Juslin (1997a, 1997c) proposed a list of expressive cues that seemed characteristic of each of the emotions fear, anger, happiness, sadness, solemnity, and tenderness (see Table 1). The cues, described in qualitative terms, concern tempo, sound level, articulation (staccato/legato), tone onsets and decays, timbre, IOI deviations, vibrato, and final ritardando. These descriptions were used as a starting point for selecting rules and rule parameters that could model each emotion. The cues were restricted to those possible on a keyboard instrument, thereby eliminating the cues of tone onset and decay, timbre, and vibrato, although these do belong to Gabrielsson and Juslin's list of characteristic cues. The cues used here are listed in Table 1.

The method used was analysis by synthesis (Gabrielsson 1985). Rules and rule parameters modeling each emotion were selected by a panel consisting of Roberto Bresin, Anders Friberg, Johan Sundberg, and Lars Frydén, the latter an expert musician and principal advisor in designing the rules in DM. After listening to the resulting performance, the panel adjusted the parameters further and repeated the whole process. After several musical examples had been tried, a consensus was reached, resulting in a macro-rule ("rule palette" in DM) consisting of a set of rules and parameters for each emotion. Each macro-rule could be applied with the same parameters to each of the musical examples tried.

There is no model for determining the basic tempo from a musical structure. In measurements by Repp (1992, 1998), the basic tempo in commercial recordings of the same music by different pianists differed by up to a factor of two, thus indicating that such a model is not feasible. Therefore, the basic tempo corresponding to a "normal" musical performance had to be selected by the panel for each music example.

The following six existing rules were selected for modeling the considered emotions. High Loud (Friberg 1991, 1995a) increases the sound pressure level as a function of fundamental frequency. Duration Contrast Articulation (Bresin and Friberg 1998) inserts a micropause between two consecutive notes if the first note has an IOI of between 30 and 600 msec. The Duration Contrast rule (Friberg 1991, 1995a) increases the contrast between long and short notes, i.e., comparatively short notes are shortened and softened, while comparatively long notes are lengthened and made louder. Punctuation (Friberg et al. 1998) automatically locates small tone groups and marks them with a lengthening of the last note and a following micropause. In the Phrase Arch rule (Friberg 1995b), each phrase is performed with an arch-like tempo and sound level curve, starting slow/soft, becoming faster/louder in the middle, and followed by a ritardando/decrescendo at the end.


Table 1. Cue profiles for each emotion as outlined by Gabrielsson and Juslin, compared with the rule setup used for synthesis with Director Musices

| Emotion | Expressive cue | Gabrielsson and Juslin | Macro-rule in Director Musices |
|---|---|---|---|
| Fear | Tempo | Irregular | Tone IOI is lengthened by 80% |
| | Sound level | Low | Sound Level is decreased by 6 dB |
| | Articulation | Mostly staccato or non-legato | Duration Contrast Articulation rule |
| | Time deviations | Large; structural reorganizations | Duration Contrast rule; Punctuation rule |
| | Final ritardando | Final acceleration (sometimes) | Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level |
| Anger | Tempo | Very rapid | Tone IOI is shortened by 15% |
| | Sound level | Loud | Sound Level is increased by 8 dB |
| | Articulation | Mostly non-legato | Duration Contrast Articulation rule |
| | Time deviations | Moderate; structural reorganizations; increased contrast between long and short notes | Duration Contrast rule; Punctuation rule; Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level |
| Happiness | Tempo | Fast | Tone IOI is shortened by 20% |
| | Sound level | Moderate or loud | Sound Level is increased by 3 dB; High Loud rule |
| | Articulation | Airy | Duration Contrast Articulation rule |
| | Time deviations | Moderate | Duration Contrast rule; Punctuation rule; Final Ritardando rule |
| Sadness | Tempo | Slow | Tone IOI is lengthened by 30% |
| | Sound level | Moderate or loud | Sound Level is decreased by 6 dB |
| | Articulation | Legato | |
| | Time deviations | Moderate | Duration Contrast rule; Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level |
| | Final ritardando | Yes | Obtained from the Phrase Arch rule with the next parameter |
| Solemnity | Tempo | Slow or moderate | Tone IOI is lengthened by 30% |
| | Sound level | Moderate or loud | Sound Level is increased by 3 dB; High Loud rule |
| | Articulation | Mostly legato | Duration Contrast Articulation rule |
| | Time deviations | Relatively small | Punctuation rule |
| | Final ritardando | Yes | Final Ritardando rule |
| Tenderness | Tempo | Slow | Tone IOI is lengthened by 30% |
| | Sound level | Mostly low | Sound Level is decreased by 6 dB |
| | Articulation | Legato | |
| | Time deviations | Diminished contrast between long and short notes | Duration Contrast rule |
| | Final ritardando | Yes | Final Ritardando rule |


The Phrase Arch rule can be applied to different hierarchical phrase levels. Final Ritardando (Friberg and Sundberg 1999) introduces a ritardando at the end of the piece, similar to the deceleration of a stopping runner.
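Friberg and Sundberg (1999) model the final ritardando on the deceleration of stopping runners. As we understand the form of that model, normalized tempo v at normalized score position x is v(x) = [1 + (w^q − 1)x]^(1/q), where w is the final tempo relative to the initial tempo and q is the curvature parameter listed in Table 2 (default 3). The Python sketch below applies this curve to the last notes of a piece; the value w = 0.5, the choice of where the ritardando starts, and evaluating x at note onsets are assumptions, not DM's exact implementation.

```python
def ritard_tempo(x: float, w: float = 0.5, q: float = 3.0) -> float:
    """Normalized tempo at normalized score position x in [0, 1].
    v(0) = 1 (initial tempo) and v(1) = w (final tempo)."""
    return (1.0 + (w ** q - 1.0) * x) ** (1.0 / q)


def apply_final_ritard(iois, start, w=0.5, q=3.0):
    """Lengthen the IOIs from index `start` to the end of the piece.

    x is measured in nominal score time, from the first ritardando onset
    (x = 0) to the onset of the final note (x = 1); this is an assumption."""
    out = list(iois)
    tail = iois[start:]
    if not tail:
        return out
    # Nominal onset times measured from the start of the ritardando.
    onsets = [sum(tail[:j]) for j in range(len(tail))]
    span = onsets[-1] if onsets[-1] > 0 else 1.0
    for j, onset in enumerate(onsets):
        # Slower tempo means a proportionally longer IOI.
        out[start + j] = tail[j] / ritard_tempo(onset / span, w, q)
    return out


print(apply_final_ritard([400.0] * 6, start=2))
```

With w = 0.5, the last IOI is doubled, and q controls how the slowing is distributed between the first and the final notes of the ritardando.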

It was also necessary to introduce two new rules for controlling overall tempo and sound level. The Tone Duration rule shortens or lengthens all notes in the score by a constant percentage. The Sound Level rule decreases or increases the sound level (SL) of all notes in the score by a constant value in decibels. The performance variables and the input parameters of these eight rules are listed in Table 2.
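Both global rules amount to one operation per note. In this minimal Python sketch, a note is assumed to be a dict holding its inter-onset duration `dr` (ms) and its sound level `sl` (dB), names borrowed from Table 2; the data layout and function names are hypothetical. The example uses the fear settings from Table 1 (IOI lengthened by 80%, level lowered by 6 dB).

```python
def tone_duration(notes, percent):
    """Lengthen (percent > 0) or shorten (percent < 0) every IOI by a constant percentage."""
    return [{**n, "dr": n["dr"] * (1.0 + percent / 100.0)} for n in notes]


def sound_level(notes, delta_db):
    """Raise (delta_db > 0) or lower (delta_db < 0) every note's level by a constant in dB."""
    return [{**n, "sl": n["sl"] + delta_db} for n in notes]


melody = [{"dr": 500.0, "sl": 0.0}, {"dr": 250.0, "sl": 0.0}]
fear = sound_level(tone_duration(melody, 80), -6)
print(fear)  # IOIs lengthened by 80%, levels lowered by 6 dB
```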

The resulting groups of rules, i.e., the macro-rules for each intended emotion, are listed in the right column of Table 1. The parameters for each of the rules are shown graphically in Figure 1. The rules contained in a macro-rule are automatically applied in sequence, one after the other, to the input music score. The effects produced by each rule are added to the effects produced by previous rules.
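The sequential, additive combination of rules in a macro-rule can be sketched in Python as follows. Each rule here returns per-note deviations rather than new values, every deviation is scaled by the rule's emphasis k (see the note to Table 2), and later rules see the output of earlier ones. The toy `high_loud` and `constant_level` rules and their constants are hypothetical stand-ins, not the DM rules.

```python
from typing import Callable, Dict, List, Tuple

Note = Dict[str, float]                    # e.g. {"pitch": 60, "dr": 500.0, "sl": 0.0}
Rule = Callable[[List[Note]], List[Note]]  # returns per-note deltas, e.g. {"sl": 1.5}


def apply_macro_rule(notes: List[Note], palette: List[Tuple[Rule, float]]) -> List[Note]:
    """Apply (rule, k) pairs in order; each rule's deltas, scaled by k, are added."""
    current = [dict(n) for n in notes]     # copy, so the input score is untouched
    for rule, k in palette:
        deltas = rule(current)             # the rule sees the result of previous rules
        for note, delta in zip(current, deltas):
            for var, value in delta.items():
                note[var] = note.get(var, 0.0) + k * value
    return current


def high_loud(notes: List[Note]) -> List[Note]:
    """Toy stand-in: +0.1 dB per semitone above middle C (MIDI note 60)."""
    return [{"sl": 0.1 * (n["pitch"] - 60)} for n in notes]


def constant_level(notes: List[Note]) -> List[Note]:
    """Toy stand-in for a global level rule: +1 dB per note before scaling by k."""
    return [{"sl": 1.0} for _ in notes]


score = [{"pitch": 60, "dr": 500.0, "sl": 0.0}, {"pitch": 72, "dr": 500.0, "sl": 0.0}]
print(apply_macro_rule(score, [(constant_level, 3.0), (high_loud, 1.0)]))
```

Setting a rule's k to zero removes it from the palette without changing the others, which is what makes the palettes easy to compare across emotions.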

As an example, the resulting deviations for the "angry" version of a Swedish nursery rhyme (Ekorrn satt i granen, or The Squirrel Sat on the Fir Tree, composed by Alice Tegnér and henceforth referred to as Ekorrn) are shown in Figure 2. The relative time deviation for each note's IOI varied between –20% and –40%. The negative values mean that the performance was faster than the original tempo, in accordance with the observations of Gabrielsson and Juslin. The IOI deviation curve contains small and quick oscillations; this reflects our attempt to reproduce what Gabrielsson and Juslin described as "moderate variations in timing". The large time deviations associated with short notes were produced by the Duration Contrast rule, thus realizing the "increased contrast between long and short notes" noted by Gabrielsson and Juslin. The graph also shows that a slight accelerando/crescendo appears at the end instead of a final ritardando/decrescendo.
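The Duration Contrast behavior described above (short notes shortened and softened, long notes lengthened and made louder, and a negative k reducing the contrast, as for tenderness in Table 1) can be illustrated with a deliberately simple Python sketch. Judging "short" and "long" against the median IOI, and the 5% and 1 dB step sizes, are our assumptions; they are not the amounts used by the DM rule.

```python
from statistics import median


def duration_contrast(notes, k=1.0, dur=1.0, amp=1.0, step_pct=5.0, step_db=1.0):
    """Hypothetical duration-contrast sketch.

    Notes shorter than the median IOI get shorter and softer; longer ones get
    longer and louder. dur and amp scale the duration and level effects (cf.
    Table 2); k > 0 increases the contrast, k < 0 diminishes it."""
    ref = median(n["dr"] for n in notes)
    out = []
    for n in notes:
        direction = (n["dr"] > ref) - (n["dr"] < ref)  # -1 short, 0 median, +1 long
        out.append({
            **n,
            "dr": n["dr"] * (1.0 + direction * k * dur * step_pct / 100.0),
            "sl": n["sl"] + direction * k * amp * step_db,
        })
    return out


notes = [{"dr": 250.0, "sl": 0.0}, {"dr": 500.0, "sl": 0.0}, {"dr": 1000.0, "sl": 0.0}]
print(duration_contrast(notes, k=1.0))   # sharper contrast (e.g. "anger")
print(duration_contrast(notes, k=-1.0))  # diminished contrast (e.g. "tenderness")
```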

The off-time duration (DRO) reached a maximum of 110 msec at the endings of phrases and subphrases. The Duration Contrast Articulation rule introduced small articulation pauses after all comparatively short notes, thus producing something equivalent to the "mostly non-legato articulation" observed by Gabrielsson and Juslin. The Punctuation rule inserted larger articulation pauses at automatically detected structural boundaries. The overall net result was that all DRO values were positive.
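The off-time pattern described above can be mimicked in a few lines of Python. The 30–600 msec window comes from the description of the Duration Contrast Articulation rule (we treat both limits as inclusive); the pause size as a fraction of the IOI, the fixed boundary pause, and the function names are hypothetical. DRO is the off-time in ms, and positive values mean a silent gap before the next onset.

```python
def dca_offtimes(iois, k=1.0, fraction=0.1, lo=30.0, hi=600.0):
    """Hypothetical Duration Contrast Articulation sketch: a micropause (ms)
    after every note whose IOI lies within [lo, hi] msec, zero otherwise."""
    return [k * fraction * ioi if lo <= ioi <= hi else 0.0 for ioi in iois]


def add_boundary_pauses(dro, boundaries, pause_ms=50.0):
    """Add a larger pause after notes that end a tone group. Detecting the
    boundaries, the core of the Punctuation rule, is not modeled here."""
    out = list(dro)
    for i in boundaries:
        out[i] += pause_ms
    return out


iois = [20.0, 250.0, 250.0, 500.0, 800.0]
dro = add_boundary_pauses(dca_offtimes(iois), boundaries=[3])
print(dro)  # all values >= 0, i.e. no legato overlaps
```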

At the endings of phrases and subphrases, the sound level increased, an effect caused by the inversion of the Phrase Arch rule. This rule attempted to realize the "structural reorganization" proposed by Gabrielsson and Juslin. The sound level curve shows positive deviations, corresponding to the increased sound level also noted by Gabrielsson and Juslin. A more detailed description of the macro-rule used for the "angry" version of Ekorrn can be found in a previous work by the authors (Bresin and Friberg 2000).

Listening Experiment

According to Juslin (1997b), forced-choice judgments and free-labeling judgments give similar results when listeners attempt to decode a performer's intended emotional expression. Therefore, it was considered sufficient to make a forced-choice listening test to assess the efficiency of the emotional communication.

Music

Two clearly different pieces of music were used; they are given in Figures 3 and 4. One was the melody line of Ekorrn, written in a major mode. The other was a computer-generated piece in a minor mode (henceforth called Mazurka), written in an attempt to portray the musical style of Frédéric Chopin (Cope 1992). The phrase structure, with regard to the levels of sub-phrase (level 6), phrase (level 5), and piece (level 4), was marked in each score (shown in Figures 3 and 4), thus meeting the demands of the Phrase Arch rule.

Performances

Seven different versions of each of the two scores were generated by applying the macro-rules listed in Table 1 with the k-values shown in Figure 1.


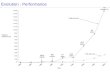

Figure 1. Values used for the indicated rule parameters in the various rules for the emotions tenderness (T), sadness (Sa), solemnity (So), happiness (H), anger (A), and fear (F). Each rule is represented by a panel.


Table 2.

(a) List of the variables affected by the rules and of the parameters used for setting up the rules

| Rule name | Affected variables | Parameters (default values) |
|---|---|---|
| High Loud | sl | – |
| Duration Contrast | dr, sl | amp 1, dur 1 |
| Duration Contrast Articulation | dro | – |
| Punctuation | dr, dro | dur 1, duroff 1 |
| Phrase Arch | dr, sl | phlevel 7, power 2, amp 1, next 1, 2next 1, turn 2, last 1, acc 1 |
| Final Ritard | dr | q 3 |

(b) Description of the affected variables

| Variable | Description |
|---|---|
| sl | Sound level in decibels relative to a constant value defined in DM (default value is sl = 0) |
| dr | Total inter-onset duration in msec |
| dro | Off-time duration, i.e., offset to next onset |

(c) Description of the parameters

| Parameter | Description |
|---|---|
| amp | Sets the sound level as a factor multiplied by the default value |
| dur | Amount of the effect on the duration |
| duroff | Amount of the effect on the off-time duration |
| phlevel | Indicates the level of phrasing at which the rule produces the effect |
| power | Determines the shape of the accelerando and ritardando functions |
| next | Used to modify the amount of ritardando for phrases that also terminate a phrase at the next higher level |
| turn | The position of the turning point between the accelerando and the ritardando |
| last | Changes the duration of the last note of each phrase |
| acc | Controls the amount of accelerando, expressed in terms of a factor multiplied by k2 |
| q | Curvature parameter |

Note: The effect of each rule can be amplified or damped by multiplying it with an emphasis parameter called k.


Figure 2. Percent IOI deviation (top), off-time deviation (middle), and sound level deviation (bottom) for the "angry" version of Ekorrn.


These versions represented our modeling of "fear", "anger", "happiness", "sadness", "solemnity", and "tenderness". In addition, a "dead-pan" version, henceforth called the "no-expression" version, was generated for the purpose of comparison. The tempo for the "no-expression" version was 187 quarter notes per minute for Ekorrn and 96 quarter notes per minute for Mazurka. The same macro-rules were used for both Ekorrn and Mazurka.

The polyphonic structure of Mazurka, represented as a multi-track MIDI file, required the use of a synchronization rule, the Melodic Sync rule. This rule generates a new track consisting of all tone onsets in all tracks. When there are several simultaneous onsets, the note with the maximum melodic charge is selected. All rules that modify the IOI are applied to this synchronization track, and the resulting IOIs are transferred back to the original tracks (Sundberg, Friberg, and Frydén 1989). Rules that act on parameters other than IOI are applied to each track independently.
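The Melodic Sync procedure described above can be sketched in Python as follows. Each track is a list of (onset, melodic charge) pairs; the melodic-charge values are taken as given inputs, ties are resolved in favor of the earlier track, and the function names are our own, so this illustrates the merging logic rather than reproducing DM.

```python
def melodic_sync(tracks):
    """Build the synchronization track: one entry per distinct onset time,
    keeping the note with the highest melodic charge among simultaneous onsets.

    tracks: list of tracks, each a list of (onset_ms, melodic_charge) pairs.
    Returns a sorted list of (onset_ms, track_index, melodic_charge)."""
    best = {}
    for t_index, track in enumerate(tracks):
        for onset, charge in track:
            if onset not in best or charge > best[onset][1]:
                best[onset] = (t_index, charge)
    return [(onset, best[onset][0], best[onset][1]) for onset in sorted(best)]


def transfer_back(tracks, sync_onsets, new_onsets):
    """Move every note in every track to the new onset time computed for the
    synchronization note that shares its original onset."""
    mapping = dict(zip(sync_onsets, new_onsets))
    return [[(mapping[onset], charge) for onset, charge in track] for track in tracks]


melody = [(0, 2.0), (500, 1.0), (1000, 3.0)]
bass = [(0, 2.5), (250, 0.5), (1000, 1.0)]
sync = melodic_sync([melody, bass])
# Suppose the IOI rules stretched the sync track by 10%; map the new times back.
stretched = [onset * 1.1 for onset, _, _ in sync]
print(transfer_back([melody, bass], [o for o, _, _ in sync], stretched))
```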

Figures A1 and A2 in the Appendix show the relative deviations of IOI and the variation of DRO and sound level for all six performances of each piece. Negative DRO values indicate a legato articulation of two subsequent notes, while positive values indicate a staccato articulation. Negative values of sound level deviation indicate a softer performance as compared with the "no-expression" performance.
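The plotted quantities can be written out explicitly. A small Python sketch, assuming the percent IOI deviation of each note is computed relative to that note's IOI in the "no-expression" version, and using the DRO sign convention just stated; the function names are our own, and treating DRO = 0 as a separate "neutral" case is our choice.

```python
def percent_ioi_deviation(performed, reference):
    """Percent deviation of each performed IOI from the reference ("no-expression") IOI.
    Negative = faster than the reference, positive = slower."""
    return [100.0 * (p - r) / r for p, r in zip(performed, reference)]


def articulation(dro_ms):
    """Classify the off-time between a note and the next onset."""
    if dro_ms < 0:
        return "legato"     # the note overlaps the next one
    if dro_ms > 0:
        return "staccato"   # a silent gap precedes the next onset
    return "neutral"        # the note ends exactly at the next onset


print(percent_ioi_deviation([400.0, 300.0], [500.0, 500.0]))  # [-20.0, -40.0]
print([articulation(d) for d in (-20.0, 0.0, 45.0)])
```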

Fourteen performances (7 emotions × 2 examples), originally stored in MIDI files, were recorded as 44.1 kHz, 16-bit uncompressed sound files. A grand piano sound (taken from the Kurzweil sound samples of the Pinnacle Turtle Beach sound card) was used for the synthesis.

Figure 3. The melody Ekorrn. The brackets above the staff correspond to different phrase levels: piece (4), phrase (5), and sub-phrase (6).

Figure 4. The first 12 (of 23) measures of Mazurka. The brackets mark the phrase structure as in Figure 3. The entire composition can be found on the Internet at arts.ucsc.edu/faculty/cope/home.

Figure 3

Figure 4


Figure 5. Ordered average percentage of "same" responses given by each subject for the repetitions of Ekorrn and Mazurka.

Listeners

Twenty subjects, 24–50 years in age and consisting of five females and 15 males, volunteered as listeners. None were professional musicians or music students, although 18 of the subjects stated that they currently or formerly played an instrument on a non-regular basis. The subjects all worked at the Speech Music Hearing Department of the Royal Institute of Technology, Stockholm.

Procedure

The subjects listened to the examples individually over Sennheiser HD435 Manhattan headphones adjusted to a comfortable level. Each subject was instructed to identify the emotional expression of each example as one out of seven alternatives: "fear", "anger", "happiness", "sadness", "solemnity", "tenderness", or "no-expression". The responses were automatically recorded with the Visor software system, specially designed for listening tests (Granqvist 1996). Visor presents the sound stimuli as anonymous boxes in random order and provides seven empty columns, corresponding to the seven different emotions, into which the boxes may be placed. Subjects were instructed to (1) double-click on each of the boxes and listen to its music, (2) identify the emotion conveyed by the performance, (3) drag the box to the corresponding column, and (4) continue until all the boxes were placed. Subjects could listen to each music sample as many times as needed, and they were given the opportunity to associate any number of boxes with the same emotion column.

Each session contained two sub-tests, each repeated once, so that listeners judged four blocks of seven performances: (1) Ekorrn, (2) Mazurka, (3) a repeat of Ekorrn, and (4) a repeat of Mazurka. The order of the stimuli within each block was automatically randomized for each subject by the Visor program. The average duration of an experimental session was approximately eleven minutes.
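The presentation scheme (four blocks of seven stimuli, with the order inside each block randomized per subject) can be sketched as follows. This is not the Visor program; seeding Python's `random.Random` per subject is our own choice, made so that a given subject's order can be reproduced.

```python
import random

EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]
BLOCKS = ["Ekorrn", "Mazurka", "Ekorrn", "Mazurka"]  # two sub-tests, each repeated


def session_order(subject_seed):
    """Return four blocks, each a freshly shuffled list of (piece, emotion) stimuli."""
    rng = random.Random(subject_seed)
    return [[(piece, emotion) for emotion in rng.sample(EMOTIONS, len(EMOTIONS))]
            for piece in BLOCKS]


for block in session_order(subject_seed=7):
    print(block)
```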

Analysis

Consistency of the subjects' answers was analyzed from the repeated stimuli in the test. Four subjects who gave the same answers for repeated stimuli in fewer than 36% of the cases were eliminated from subsequent analysis (see Figure 5).
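The exclusion criterion can be made explicit. In the Python sketch below, a listener's consistency is the percentage of the 14 stimuli that received the same label on first presentation and on repetition, and listeners below 36% are dropped; the data layout, the function names, and the example answers are hypothetical.

```python
def consistency(first, repeat):
    """Percentage of stimuli given the same label on first and repeated presentation."""
    if len(first) != len(repeat) or not first:
        raise ValueError("need two equally long, non-empty answer lists")
    same = sum(a == b for a, b in zip(first, repeat))
    return 100.0 * same / len(first)


def consistent_listeners(answers, threshold=36.0):
    """answers: {listener: (first_labels, repeat_labels)}.
    Keep listeners whose consistency is not below the threshold."""
    return [who for who, (a, b) in answers.items() if consistency(a, b) >= threshold]
```

With 14 stimuli, 5 matching answers (about 35.7%) fall below the criterion, while 6 matches (about 42.9%) pass.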

The results of the listening test were analyzed with a three-way repeated-measures analysis of variance (ANOVA), with fixed factors Intended Emotion (7 levels), Perceived Emotion (7 levels), and Piece of Music (2 levels), and with listeners' choices as the dependent variable. For each intended emotion, listeners' choices were coded by marking the emotion chosen by the subject with the value one and the remaining six emotions with the value zero. In addition, a two-way ANOVA was conducted for each intended emotion separately, with fixed factors Perceived Emotion (7 levels) and Piece of Music (2 levels).
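The binary coding of the forced-choice answers can be sketched as follows: every answer becomes seven rows, one per perceived-emotion level, with 1 for the chosen emotion and 0 elsewhere. The tuple layout and names are our own. As a rough check on the design, 16 retained listeners × 2 repetitions × 2 pieces × 7 intended emotions × 7 perceived levels gives 3,136 coded values; subtracting the 98 cells of the 7 × 7 × 2 design leaves 3,038, which appears consistent with the error degrees of freedom reported in the results.

```python
EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]


def code_choice(chosen):
    """One-hot coding: 1 for the chosen emotion, 0 for the remaining six."""
    if chosen not in EMOTIONS:
        raise ValueError(f"unknown emotion: {chosen}")
    return [1 if emotion == chosen else 0 for emotion in EMOTIONS]


def long_format(trials):
    """trials: iterable of (listener, repetition, piece, intended, chosen).
    Returns rows (listener, repetition, piece, intended, perceived, value)."""
    rows = []
    for listener, rep, piece, intended, chosen in trials:
        for perceived, value in zip(EMOTIONS, code_choice(chosen)):
            rows.append((listener, rep, piece, intended, perceived, value))
    return rows


rows = long_format([("s1", 1, "Ekorrn", "fear", "tenderness")])
print(len(rows), sum(r[-1] for r in rows))  # 7 rows, exactly one of them coded 1
```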

Each emotional performance was synthesized with its own macro-rule, each including a specific subset of DM rules, as already mentioned (see Table 1, Table 2, and Figure 1). A total of 17 parameters were involved in these macro-rules, some of which were interrelated. An attempt was made to reduce the number of dimensions of this space by means of a principal component analysis of these parameters.
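A principal component analysis of this kind can be sketched in plain Python: center each parameter, form the covariance matrix, and extract the leading eigenvalues by power iteration with deflation. The actual parameter values are those shown in Figure 1 and are not reproduced here, and whether the original analysis standardized the parameters is not stated; this sketch uses the covariance matrix, and the toy data (six rows, like the six emotion setups) are hypothetical.

```python
import math


def explained_variance(rows, n_components=2, iters=1000):
    """Fraction of total variance explained by the leading principal components.

    rows: one list of parameter values per macro-rule (observation)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    x = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(x[i][a] * x[i][b] for i in range(n)) / (n - 1) for b in range(d)]
           for a in range(d)]
    total = sum(cov[j][j] for j in range(d))
    ratios = []
    for _ in range(n_components):
        v = [1.0 / (j + 1) for j in range(d)]   # arbitrary non-zero start vector
        for _ in range(iters):                  # power iteration
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(c * c for c in w))
            if norm == 0.0:
                break
            v = [c / norm for c in w]
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        ratios.append(lam / total)
        # Deflate: remove the component just found before finding the next one.
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    return ratios


toy = [[-2, 0], [-1, 0], [1, 0], [2, 0], [0, 1], [0, -1]]
print(explained_variance(toy))  # roughly 5/6 and 1/6 of the variance
```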

Results and Discussion

The three-way ANOVA revealed that the main factor Perceived Emotion was significant (F(6, 3038) = 3.917, p < 0.0007), implying that listeners overall preferred "angry" and "happy". However, the main factors Intended Emotion and Piece of Music were not significant. The interaction between Intended Emotion and Perceived Emotion was significant (F(42, 3038) = 55.505, p < 0.0001). The interaction between Intended Emotion, Perceived Emotion, and Piece of Music was also significant (F(42, 3038) = 2.639, p < 0.0001), showing an influence of the score on the perception of the intended emotion. An interesting result emerging from the three-way ANOVA was a significant interaction between Piece of Music and Perceived Emotion (F(6, 3038) = 3.404, p < 0.0024): subjects rated performances of Mazurka as mainly "angry" and "sad", while performances of Ekorrn were perceived as more "happy" and "tender". Both pieces were perceived with almost the same rate of "solemnity" and "no-expression", as shown in Figure 6. This result confirms the typical association of "happiness" with major mode and "sadness" with minor mode.

The significance of the main factor Perceived Emotion in the three-way ANOVA led us to run a two-way ANOVA for each of the seven Intended Emotions in order to verify that each of them was significant. In each of the seven two-way ANOVAs, the effects of Perceived Emotion were, as expected, significant (p < 0.0001), and the effects of Piece of Music were not significant. Figure 7 shows the percentage of "correct" responses for the two pieces. In all cases but one, these percentages well exceeded the 14% chance level (one out of seven alternatives), and in all cases but one they were the highest value. To facilitate a more detailed analysis, the subjects' responses for each version of Ekorrn and Mazurka are presented as confusion matrices in Tables 3a and 3b. An interesting outcome in both confusion matrices is their high degree of symmetry along the main diagonal, except for a few terms, which implies consistency in the subjects' choices.
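The two claims above (correct responses above the 1/7 ≈ 14.3% chance level, and the diagonal being the highest value of its row, each in all cases but one) can be checked directly against Tables 3a and 3b. The Python sketch below hard-codes those percentages; the entries 6.5 and 9.5 (Tenderness row of Table 3a, No-Expression row of Table 3b) are decimals, and with them every row sums to 100%. The function name is our own.

```python
EMOTIONS = ["Fear", "Anger", "Happiness", "Sadness",
            "Solemnity", "Tenderness", "No Expression"]

# Rows: intended emotion; columns: perceived emotion (percent of responses).
EKORRN = [
    [44, 0, 3, 3, 0, 41, 9],
    [3, 91, 0, 0, 6, 0, 0],
    [0, 3, 97, 0, 0, 0, 0],
    [3, 0, 0, 69, 0, 28, 0],
    [3, 3, 3, 6, 72, 0, 13],
    [6.5, 0, 0, 28, 3, 53, 9.5],
    [0, 0, 9, 0, 13, 6, 72],
]
MAZURKA = [
    [47, 0, 0, 13, 3, 31, 6],
    [6, 91, 0, 3, 0, 0, 0],
    [6, 28, 63, 0, 3, 0, 0],
    [3, 0, 0, 59, 0, 38, 0],
    [3, 16, 0, 13, 59, 0, 9],
    [13, 0, 0, 50, 3, 9, 25],
    [3, 0, 9.5, 0, 22, 9.5, 56],
]
CHANCE = 100.0 / len(EMOTIONS)  # about 14.3%


def summarize(matrix):
    """Per intended emotion: (percent correct, above chance?, highest in its row?)."""
    return {
        EMOTIONS[i]: (row[i], row[i] > CHANCE, row[i] == max(row))
        for i, row in enumerate(matrix)
    }


for name, matrix in (("Ekorrn", EKORRN), ("Mazurka", MAZURKA)):
    assert all(abs(sum(row) - 100) < 1e-9 for row in matrix)
    for emotion, (pct, above, top) in summarize(matrix).items():
        if not (above and top):
            print(name, emotion, pct)  # prints: Mazurka Tenderness 9
```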

The "tenderness" version of Mazurka was the only case that was not correctly identified (9%). The two-way ANOVA for the intended emotion "tenderness" revealed a significant interaction between Piece of Music and Perceived Emotion (F(6, 434) = 7.519, p < 0.0001). To explain this significance, a post hoc comparison using the Scheffé test was applied to all possible pairs of the seven levels of the main factor Perceived Emotion, for each Piece of Music separately. The Scheffé test revealed that Ekorrn was perceived as "tender", while Mazurka was perceived as "sad". According to Juslin (1997b), the tenderness version is often performed with a higher sound level than the "sadness" version, whereas the same sound level was used for both versions in this experiment. This observation may help us achieve better macro-rules for "sadness" and "tenderness" performances in future work.

For happiness, the responses differed substantially between Ekorrn (97%) and Mazurka (63%). This was confirmed by a significant interaction between Piece of Music and Perceived Emotion (F(6, 434) = 11.453, p < 0.0001). For the remaining five Intended Emotions, the interaction between Perceived Emotion and Piece of Music was not statistically significant.

Figure 6. Percentage distribution of subjects' choices over the two pieces, Ekorrn and Mazurka.

Figure 7. Percent "correct" responses for the two test examples, obtained for each of the indicated emotions.

Table 3. Confusion matrix (%) for the classification test of seven synthesized performances of (a) Ekorrn and (b) Mazurka. Rows give the intended emotion; columns give the perceived emotion.

(a) Ekorrn

| Intended emotion | Fear | Anger | Happiness | Sadness | Solemnity | Tenderness | No Expression |
|---|---|---|---|---|---|---|---|
| Fear | 44 | 0 | 3 | 3 | 0 | 41 | 9 |
| Anger | 3 | 91 | 0 | 0 | 6 | 0 | 0 |
| Happiness | 0 | 3 | 97 | 0 | 0 | 0 | 0 |
| Sadness | 3 | 0 | 0 | 69 | 0 | 28 | 0 |
| Solemnity | 3 | 3 | 3 | 6 | 72 | 0 | 13 |
| Tenderness | 6.5 | 0 | 0 | 28 | 3 | 53 | 9.5 |
| No-Expression | 0 | 0 | 9 | 0 | 13 | 6 | 72 |

(b) Mazurka

| Intended emotion | Fear | Anger | Happiness | Sadness | Solemnity | Tenderness | No Expression |
|---|---|---|---|---|---|---|---|
| Fear | 47 | 0 | 0 | 13 | 3 | 31 | 6 |
| Anger | 6 | 91 | 0 | 3 | 0 | 0 | 0 |
| Happiness | 6 | 28 | 63 | 0 | 3 | 0 | 0 |
| Sadness | 3 | 0 | 0 | 59 | 0 | 38 | 0 |
| Solemnity | 3 | 16 | 0 | 13 | 59 | 0 | 9 |
| Tenderness | 13 | 0 | 0 | 50 | 3 | 9 | 25 |
| No-Expression | 3 | 0 | 9.5 | 0 | 22 | 9.5 | 56 |

The fear version of Ekorrn was classified both as "afraid" and as "tender": 44% of the responses classified it as "afraid" and 41% as "tender". The fear version of Mazurka was perceived as significantly more "afraid" and less "tender".

These differences in the perception of the Ekorrn and Mazurka performances could partly be because Mazurka is written in a minor mode, which in the Western music tradition is often associated with moods of sadness, fear, or anger.

From the principal component analysis, two principal factors emerged, explaining 61% (Factor 1) and 29% (Factor 2) of the total variance. Figure 8 presents the main results in terms of the distribution of the different setups in the two-dimensional space.

Factor 1 was closely related to deviations of sound level and tempo. Louder and quicker performances had negative values of Factor 1 and were delimited by the coordinates of "anger" and "solemnity". Softer and slower performances had positive values of Factor 1 and were delimited by the coordinates of "sadness" and "fear".

Factor 2 was closely related to the articulation and phrasing variables. This distribution resembles those presented in previous works and obtained using other methods (De Poli, Rodà, and Vidolin 1998; Orio and Canazza 1998; Canazza et al. 1998). The quantity values (k-values) for the Duration Contrast rule in the macro-rules, also shown in Figure 8, increased clockwise from the fourth quadrant to the first. Figure 8 also shows an attempt to qualitatively interpret the variation of this rule in the space. It shows that in the "tender" and "sad" performances the contrast between note durations was very slight (shorter notes were played longer), strong in the "angry" and "happy" performances, and strongest in the "fear" version.

The acoustical cues resulting from the six synthesized emotional performances are presented in Figure 9. The average IOI deviations are plotted against the average sound level deviations, together with their standard deviations, for all six performances of each piece. Negative values of sound level deviation indicate a softer performance compared to the "no-expression" version, and negative values of IOI deviation imply a tempo faster than the original (and vice versa for positive values). "Anger" and "happiness" performances were thus played quicker and louder, while "tenderness", "fear", and "sadness" performances were slower and softer relative to a "no-expression" rendering. The "fear" and "sadness" versions have larger standard deviations, obtained mainly by exaggerating the duration contrast but also by applying the phrasing rules. These acoustic properties fit very well with the observations of Gabrielsson and Juslin, and also with performance indications in the classical music tradition. For example, in his Versuch über die wahre Art das Clavier zu spielen (1753, reprinted 1957), Carl Philipp Emanuel Bach wrote: "... activity is expressed in general with staccato in Allegro and tenderness with portato and legato in Adagio ...". Figure 9 could be interpreted as a mapping of Figure 8 into a two-dimensional acoustical space.
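How each point and error bar in Figure 9 can be obtained is sketched below, assuming that per-note IOIs (in msec) and sound levels (in dB) are available both for an emotional performance and for the "no-expression" version of the same score. The function names are ours, and the use of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev

def ioi_deviation_percent(performed_ms, nominal_ms):
    """Per-note IOI deviation in percent relative to the no-expression rendering."""
    return [100.0 * (p - n) / n for p, n in zip(performed_ms, nominal_ms)]

def sl_deviation_db(performed_db, nominal_db):
    """Per-note sound level deviation in dB (negative = softer than no-expression)."""
    return [p - n for p, n in zip(performed_db, nominal_db)]

def summarize(performed_ms, nominal_ms, performed_db, nominal_db):
    """Mean and standard deviation of both cues: one point with error bars per performance."""
    ioi = ioi_deviation_percent(performed_ms, nominal_ms)
    sl = sl_deviation_db(performed_db, nominal_db)
    return {"ioi_mean": mean(ioi), "ioi_sd": pstdev(ioi),
            "sl_mean": mean(sl), "sl_sd": pstdev(sl)}
```

A negative "ioi_mean" together with a positive "sl_mean" would place a performance in the quicker-and-louder region occupied by "anger" and "happiness".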

The Gabrielsson and Juslin experiment included the cues tone onset and decay, timbre, and vibrato. None of these can be implemented in piano performances, and they thus had to be excluded from the present experiment. Yet our identification results were similar to, or even better than, those found by Gabrielsson and Juslin. A possible explanation is that listeners' expectations regarding expressive deviations vary depending on the instrument played.

Finally, the SL and time deviations used here in the seven performances can be compared to those observed in other studies of expressiveness in singing and speech; Figure 10 presents such a comparison. For tempo, the values were normalized with respect to "happy", because "neutral" was not included in all the studies compared. In many cases, striking similarities can be observed. For example, most investigations reported a slower tempo and a lower mean sound level in "sad" than in "happy". These similarities suggest that similar strategies are used to express emotions in instrumental music, singing, and speech.
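The normalization of tempo with respect to "happy" amounts to expressing every emotion's tempo as a percentage deviation from the "happy" tempo of the same study. A minimal sketch, assuming tempo is given as a rate (for example, beats or syllables per second); the function name and the values in the example are invented.

```python
def tempo_relative_to(tempi, reference="happy"):
    """Percent deviation of each emotion's tempo from the reference emotion's tempo."""
    base = tempi[reference]
    return {emotion: 100.0 * (t - base) / base for emotion, t in tempi.items()}

# Invented example: a "sad" tempo 25% below and an "angry" tempo 10% above "happy"
print(tempo_relative_to({"happy": 120.0, "sad": 90.0, "angry": 132.0}))
```

Because each study is normalized to its own "happy" value, studies that used different absolute tempi or units can be plotted on a common scale.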

General Discussion

The main result of the listening experiment was that the emotions associated with the DM macro-rules were correctly perceived in most cases. This suggests that DM performance rules can be grouped into macro-rules to produce performances that can be associated with different emotional states.

Figure 8. Two-dimensional space of the indicated emotions, derived from a principal component analysis of all rule parameters in all rules used for the different emotional rule setups. The dashed curve represents an approximation of how the k-value of the Duration Contrast rule varied between the setups.


Figure 9. Average IOI deviations versus average sound level deviations for all six performances of (a) Ekorrn and (b) Mazurka. The bars represent the standard deviations.


Figure 10. Relative deviations of (a) tempo and (b) sound pressure level (SPL) as reported in speech research (Mozziconacci 1998; van Bezooijen 1984; Kitahara and Tohkura 1992; House 1990; Carlson, Granström, and Nord 1992), in singing voice research (Kotlyar and Morozov 1976; Langeheinecke et al. 1999), and in the present study (indicated as "instrumental music" in the figure).


An important observation is that the same macro-rules could be applied with reasonably similar success to both Ekorrn and Mazurka, two compositions with very different styles. This is not surprising, because the performance rules have been designed to be generally applicable. On the other hand, still better results may be possible if somewhat different versions of the macro-rules are used for scores of differing styles. For example, a style-dependent variation of the quantities for the staccato/legato and the phrase-marking rules may be introduced.

The magnitude of each effect, i.e., the k-value, plays an important role in the differentiation of emotional expression. Quantities greater than unity imply an amplification of the effect of the rule, values between zero and unity a reduced effect, and negative values the inverse of the effect. While the default value is k = 1, higher values have been found optimal if a rule is applied in isolation (Sundberg, Friberg, and Frydén 1991). In general, quantities deviating from unity have been found appropriate mainly when many rules are applied in combination, and for the purpose of adapting the rules to the intrinsic expressive character of the composition. Values smaller than unity may be needed when the net effect of many rules produces exaggerated effects. In the present experiment, exaggerated values for some of the rules were found useful for producing emotionally expressive performances where emphasis or warping of the musical structure was needed. In studies comparing expressive and neutral renderings of the same musical examples, greater expressive deviations have been found in the expressive versions (Sundberg 2000).
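Because Table 2 describes k as a factor that multiplies a rule's effect, its role can be shown in a few lines. A minimal sketch, assuming that a rule's per-note deviations at k = 1 are already known; the function name and the numbers are invented for illustration. A negative k reverses the rule, which is how short notes can end up played longer, as in the "tender" and "sad" setups.

```python
def scale_rule(deviations, k=1.0):
    """Multiply every per-note deviation proposed by a rule by its emphasis parameter k."""
    return [k * d for d in deviations]

# Invented Duration Contrast output at k = 1 (msec): two short notes shortened, one long note lengthened
nominal = [-12.0, -12.0, 20.0]
print(scale_rule(nominal, 2.0))   # [-24.0, -24.0, 40.0]  amplified contrast
print(scale_rule(nominal, 0.5))   # [-6.0, -6.0, 10.0]    reduced contrast
print(scale_rule(nominal, -1.0))  # [12.0, 12.0, -20.0]   inverted: short notes now lengthened
```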

The misclassification of Mazurka as "sad" rather than the intended alternative mood of "tender" may be related to tonality. According to Meyer (1956), the minor mode is typically associated with intense feeling and "sadness, suffering, and anguish in particular". Meyer argues that this association "is a product of the deviant, unstable character of the mode and of the association of sadness and suffering with the slower tempi that tend to accompany the chromaticism prevalent in the minor mode". Associations of the minor mode with sadness and of the major mode with happiness have been experimentally confirmed in studies of both children and adults (Gerardi and Gerken 1995; Kastner and Crowder 1990). According to this reasoning, the minor mode of Mazurka may explain why this piece was much less successful than Ekorrn in its "happiness" versions.

Applications

Our findings indicate interesting new potential for the DM system. Our results clearly demonstrate that, in spite of its deterministic structure, the DM system is capable of generating performances that differ significantly in emotional quality. This invites some speculation regarding future applications.

One immediate application is the design of new plug-ins for commercial music sequencers and editors. Users would be able to produce emotionally colored performances of any MIDI file while still using their favorite software tool.

Another possibility would be to use the new macro-rules as tools for objective performance analysis: macro-rules could be used in reverse to analyze the emotional content of a performance. It has been demonstrated that rule parameters can be automatically fitted to a specific performance (cf. Friberg 1995b); this would allow deviations in various performance parameters to be classified in terms of a plausible intended emotion. This presents a new perspective in the field of music performance didactics, where DM has already been successfully applied (Friberg and Battel, forthcoming).
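The reverse use of the rules can be sketched under a strong simplifying assumption: that a rule's deviations scale linearly with k, so that for a single rule the best-fitting k has a closed-form least-squares solution. The fitting procedure used by Friberg (1995b) may well differ, and the per-emotion k-values in the example are hypothetical stand-ins for those in Figure 1.

```python
def fit_k(observed, rule_profile):
    """Least-squares k minimizing sum((observed - k * rule_profile) ** 2)."""
    num = sum(o * d for o, d in zip(observed, rule_profile))
    den = sum(d * d for d in rule_profile)
    if den == 0:
        raise ValueError("the rule produces no deviation on this score")
    return num / den

def nearest_emotion(fitted, profiles):
    """Emotion whose (hypothetical) k-values are closest, in Euclidean distance, to the fitted ones."""
    def distance(emotion):
        ks = profiles[emotion]
        return sum((fitted[rule] - ks.get(rule, 0.0)) ** 2 for rule in fitted)
    return min(profiles, key=distance)

observed = [2.0, 4.0, -2.0]    # measured IOI deviations (msec), invented
profile = [1.0, 2.0, -1.0]     # deviations of one rule at k = 1 on the same notes, invented
k = fit_k(observed, profile)   # 2.0
label = nearest_emotion({"duration_contrast": k},
                        {"anger": {"duration_contrast": 2.0},
                         "tenderness": {"duration_contrast": -1.0}})
```

With several rules acting on the same notes, a joint fit (one k per rule) would replace the single-rule closed form.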

A third possibility is to design an "emotional toolbox" capable both of recognizing the emotion of players/singers and of translating it back into an automatic performance. This could be applied to smart Karaoke applications included in the future MPEG-7 standard for improving human-machine interaction. The user might sing the melody of a song to retrieve it from large MIDI file databases on the Internet, and the Karaoke system could play back the file with the same emotions used by the singer (Ghias et al. 1995).

A fourth appealing application is that of consumer electronics involving automatic performance of synthesized sounds, for instance in cellular phones. Rule-based techniques can be used to improve the quality of the ringing-tone performance of cellular phones. This is probably the most popular music synthesizer, or at least the one with the widest diffusion, and we listen to its mechanical and dull performances every day. In a current project, we are using our technique to design an emotional cellular phone. The caller will be able to send a code to another's phone and, by influencing the performance of the receiver's ringing tone, include an emotional content. In this way, it will be possible to have a musical interaction between the caller and the receiver. Furthermore, some cellular phone producers include the possibility of using the MIDI standard as a format for ringing-tone files.

Conclusions

The results demonstrate that, in spite of the deterministic nature of the DM program, different performances of the same score can be achieved by applying different combinations of rules. By interpreting the results of Gabrielsson and Juslin in terms of rules incorporated in the DM program, emotionally differing performances can be produced. Because all DM rules are triggered by the structure as defined by the score, in terms of, for example, a combination of note values or intervals, the representation of musical structure seems to play a decisive role in creating emotional expressiveness. According to a principal component analysis applied to the rule parameters, the two most important dimensions for emotional expressiveness are mean SL and tempo (Factor 1), and phrasing and articulation (Factor 2). Therefore, the Duration Contrast rule, whose k-value varied systematically across this two-dimensional space (Figure 8), plays a particularly productive role. Mean SL and mean tempo seem to make similar contributions in the performance of instrumental music as in speech and singing. The results from the listening test reflect the typical association of happiness with major mode and sadness with minor mode, and the association of tenderness with major mode and anger with minor mode. In future research, we will examine the formulation of new rules for the rendering of expressive articulation. In fact, in a recent study, Bresin and Battel (in press) showed how legato, staccato, and repeated-tone articulation vary significantly in performances biased by different expressive adjectives.

Acknowledgments

This work was supported by the Bank of Sweden Tercentenary Foundation. The authors are grateful to Johan Sundberg, who assisted in editing the manuscript; to Peta White, who edited our English; to Diego Dall'Osto and Patrik Juslin for valuable discussions; to the reviewers; and to the twenty subjects who participated in the listening test.

References

Bach, C. P. E. 1957. Versuch über die wahre Art das Clavier zu spielen. Facsimile of first edition, 1753 (Part One) and 1762 (Part Two). Ed. L. Hoffmann-Erbrecht. Leipzig: Breitkopf & Härtel.

Battel, G. U., and R. Fimbianti. 1998. "How Communicate Expressive Intentions in Piano Performance." In A. Argentini and C. Mirolo, eds. Proceedings of the XII Colloquium on Musical Informatics. Udine: AIMI, pp. 67–70.

Bresin, R., and G. U. Battel. In press. "Articulation Strategies in Expressive Piano Performance." Journal of New Music Research.

Bresin, R., and A. Friberg. 1998. "Emotional Expression in Music Performance: Synthesis and Decoding." TMH-QPSR, Speech Music and Hearing Quarterly Progress and Status Report 4:85–94.

Bresin, R., and A. Friberg. 2000. "Rule-Based Emotional Colouring of Music Performance." Proceedings of the 2000 International Computer Music Conference. San Francisco: International Computer Music Association, pp. 364–367.

Canazza, S., et al. 1997. "Sonological Analysis of Clarinet Expressivity." In M. Leman, ed. Music, Gestalt, and Computing: Studies in Cognitive and Systematic Musicology. Berlin: Springer Verlag, pp. 431–440.

Canazza, S., et al. 1998. "Adding Expressiveness to Automatic Musical Performance." In A. Argentini and C. Mirolo, eds. Proceedings of the XII Colloquium on Musical Informatics. Udine: AIMI, pp. 71–74.


Carlson, R., B. Granström, and L. Nord. 1992. "Experiments with Emotive Speech: Acted Utterances and Synthesized Replicas." Proceedings ICSLP 92. Banff, Alberta, Canada: University of Alberta, 1:671–674.

Cope, D. 1992. "Computer Modeling of Musical Intelligence in Experiments in Musical Intelligence." Computer Music Journal 16(2):69–83.

De Poli, G., A. Rodà, and A. Vidolin. 1998. "A Model of Dynamic Profile Variation Depending on Expressive Intention in Piano Performance of Classical Music." In A. Argentini and C. Mirolo, eds. Proceedings of the XII Colloquium on Musical Informatics. Udine: AIMI, pp. 79–82.

Friberg, A. 1991. "Generative Rules for Music Performance." Computer Music Journal 15(2):56–71.

Friberg, A. 1995a. "A Quantitative Rule System for Musical Expression." Doctoral dissertation, Stockholm: Royal Institute of Technology.

Friberg, A. 1995b. "Matching the Rule Parameters of Phrase Arch to Performances of 'Träumerei': A Preliminary Study." In A. Friberg and J. Sundberg, eds. Proceedings of the KTH Symposium on Grammars for Music Performance. Stockholm: Royal Institute of Technology, Speech Music and Hearing Department, pp. 37–44.

Friberg, A., et al. 1998. "Musical Punctuation on the Microlevel: Automatic Identification and Performance of Small Melodic Units." Journal of New Music Research 27(3):271–292.

Friberg, A., et al. 2000. "Generating Musical Performances with Director Musices." Computer Music Journal 24(3):23–29.

Friberg, A., and G. U. Battel. Forthcoming. "Structural Communication: Timing and Dynamics." In R. Parncutt and G. McPherson, eds. Science and Psychology of Music Performance. Oxford: Oxford University Press.

Friberg, A., and J. Sundberg. 1999. "Does Music Performance Allude to Locomotion? A Model of Final Ritardandi Derived from Measurements of Stopping Runners." Journal of the Acoustical Society of America 105(3):1469–1484.

Gabrielsson, A. 1985. "Interplay Between Analysis and Synthesis in Studies of Music Performance and Music Experience." Music Perception 3(1):59–86.

Gabrielsson, A. 1994. "Intention and Emotional Expression in Music Performance." In A. Friberg et al., eds. Proceedings of the Stockholm Music Acoustics Conference 1993. Stockholm: Royal Swedish Academy of Music, pp. 108–111.

Gabrielsson, A. 1995. "Expressive Intention and Performance." In R. Steinberg, ed. Music and the Mind Machine: The Psychophysiology and the Psychopathology of the Sense of Music. Berlin: Springer Verlag, pp. 35–47.

Gabrielsson, A., and P. Juslin. 1996. "Emotional Expression in Music Performance: Between the Performer's Intention and the Listener's Experience." Psychology of Music 24:68–91.

Gerardi, G. M., and L. Gerken. 1995. "The Development of Affective Responses to Modality and Melodic Contour." Music Perception 12(3):279–290.

Ghias, A., et al. 1995. "Query by Humming: Musical Information Retrieval in an Audio Database." Proceedings of ACM Multimedia '95. New York: Association for Computing Machinery, pp. 231–236.

Granqvist, S. 1996. "Enhancements to the Visual Analogue Scale, VAS, for Listening Tests." Royal Institute of Technology, Speech Music and Hearing Department, Quarterly Progress and Status Report 4:61–65.

House, D. 1990. "On the Perception of Mood in Speech: Implications for the Hearing Impaired." Lund University, Dept. of Linguistics, Working Papers 36:99–108.

Juslin, P. N. 1997a. "Emotional Communication in Music Performance: A Functionalist Perspective and Some Data." Music Perception 14(4):383–418.

Juslin, P. N. 1997b. "Can Results from Studies of Perceived Expression in Musical Performances Be Generalized Across Response Formats?" Psychomusicology 16:77–101.

Juslin, P. N. 1997c. "Perceived Emotional Expression in Synthesized Performances of a Short Melody: Capturing the Listener's Judgment Policy." Musicae Scientiae 1(2):225–256.

Kastner, M. P., and R. G. Crowder. 1990. "Perception of the Major/Minor Distinction: IV. Emotional Connotation in Young Children." Music Perception 8(2):189–202.

Kitahara, Y., and Y. Tohkura. 1992. "Prosodic Control to Express Emotions for Man-Machine Interaction." IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences 75:155–163.

Kotlyar, G. M., and V. P. Morozov. 1976. "Acoustical Correlates of the Emotional Content of Vocalized Speech." Acoustical Physics 22(3):208–211.

Langeheinecke, E. J., et al. 1999. "Emotions in the Singing Voice: Acoustic Cues for Joy, Fear, Anger and Sadness." Journal of the Acoustical Society of America 105(2), part 2:1331.

Meyer, L. B. 1956. Emotion and Meaning in Music. Chicago: University of Chicago Press.


Mozziconacci, S. 1998. Speech Variability and Emotion: Production and Perception. Eindhoven: Technische Universiteit Eindhoven.

Orio, N., and S. Canazza. 1998. "How Are Expressive Deviations Related to Musical Instruments? Analysis of Tenor Sax and Piano Performances of 'How High the Moon' Theme." In A. Argentini and C. Mirolo, eds. Proceedings of the XII Colloquium on Musical Informatics. Udine: AIMI, pp. 75–78.

Repp, B. H. 1992. "Diversity and Commonality in Music Performance: An Analysis of Timing Microstructure in Schumann's 'Träumerei'." Journal of the Acoustical Society of America 92(5):2546–2568.

Repp, B. H. 1998. "A Microcosm of Musical Expression: I. Quantitative Analysis of Pianists' Timing in the Initial Measures of Chopin's Etude in E Major." Journal of the Acoustical Society of America 104:1085–1100.

Sundberg, J. 1993. "How Can Music Be Expressive?" Speech Communication 13:239–253.

Sundberg, J. 2000. "Emotive Transforms." Phonetica 57(2–4):95–112.

Sundberg, J., A. Friberg, and L. Frydén. 1989. "Rules for Automated Performance of Ensemble Music." Contemporary Music Review 3:89–109.

Sundberg, J., A. Friberg, and L. Frydén. 1991. "Threshold and Preference Quantities of Rules for Music Performance." Music Perception 9(1):71–92.

van Bezooijen, R. A. M. G. 1984. The Characteristics and Recognizability of Vocal Expressions of Emotion. Dordrecht, The Netherlands: Foris Publications.


The following is a list of World Wide Web sites relevant to this article:

Sound and MIDI files of the seven versions of Ekorrn and Mazurka: www.speech.kth.se/~roberto/emotion

KTH performance rules description: www.speech.kth.se/music/performance; www.speech.kth.se/music/publications/thesisaf/sammfa2nd.htm; www.speech.kth.se/music/publications/thesisrb

Director Musices software (Windows and Mac OS): www.speech.kth.se/music/performance/download

Figure A1. (1) Percent IOI deviation, (2) off-time deviation in msec, and (3) sound level deviation in dB for each performance of Ekorrn.


Figure A2. (1) Percent IOI deviation, (2) off-time deviation in msec, and (3) sound level deviation in dB for each performance of the first 45 notes of Mazurka.


performance of music. It contains about 25 rules that model performers' renderings of, for example, phrasing, accents, or rhythmic patterns. The DM program consists of a set of context-dependent rules that introduce modifications of amplitude, duration, vibrato, and timbre. These modifications are dependent on, and thus reflect, musical structure as defined by the score, complemented by chord symbols and phrase markers.

The program is deterministic: it always introduces the same modifications given identical musical contexts. This determinism may appear counterintuitive, because while DM always generates the same performance of a given composition, it is well known that a piece of music can be performed in a number of different but musically acceptable ways, depending on, among other things, the performer.

However, Friberg (1995b) demonstrated that DM can indeed produce different performances of a piece simply by varying the magnitude of the effects of a single rule applied to four hierarchical phrase levels. By these simple means, DM was capable of generating inter-onset interval (IOI) patterns that closely matched those observed in recordings by three famous pianists. This result suggests that most differences between performances can be explained in terms of how players emphasize and demarcate the structure.

Macro-Rules for Emotional Expressive Performance

Gabrielsson (1994, 1995) and Juslin (1997a, 1997c) proposed a list of expressive cues that seemed characteristic of each of the emotions fear, anger, happiness, sadness, solemnity, and tenderness (see Table 1). The cues, described in qualitative terms, concern tempo, sound level, articulation (staccato/legato), tone onsets and decays, timbre, IOI deviations, vibrato, and final ritardando. These descriptions were used as a starting point for selecting rules and rule parameters that could model each emotion. The cues were restricted to those possible on a keyboard instrument, therefore eliminating the cues of tone onset and decay, timbre, and vibrato, although these do belong to the Gabrielsson and Juslin list of characteristic cues. The cues used here are listed in Table 1.

The method used was analysis by synthesis (Gabrielsson 1985). Rules and rule parameters modeling each emotion were selected by a panel consisting of Roberto Bresin, Anders Friberg, Johan Sundberg, and Lars Frydén, the latter an expert musician and principal advisor in designing the rules in DM. After listening to the resulting performance, the parameters were further adjusted, and the whole process was repeated. After trying several musical examples, a consensus was obtained, resulting in a macro-rule ("rule palette" in DM) consisting of a set of rules and parameters for each emotion. Each macro-rule could be applied with the same parameters to each of the musical examples tried.

There is no model for determining the basic tempo from a musical structure. In measurements by Repp (1992, 1998), the basic tempo in commercial recordings of the same music by different pianists differed by up to a factor of two, thus indicating that such a model is not feasible. Therefore, the basic tempo corresponding to a "normal" musical performance had to be selected by the panel for each music example.

The following six of the existing rules were selected for modeling the considered emotions. High Loud (Friberg 1991, 1995a) increases the sound pressure level as a function of fundamental frequency. Duration Contrast Articulation (Bresin and Friberg 1998) inserts a micropause between two consecutive notes if the first note has an IOI between 30 and 600 msec. The Duration Contrast rule (Friberg 1991, 1995a) increases the contrast between long and short notes, i.e., comparatively short notes are shortened and softened, while comparatively long notes are lengthened and made louder. Punctuation (Friberg et al. 1998) automatically locates small tone groups and marks them with a lengthening of the last note and a following micropause. In the Phrase Arch rule (Friberg 1995b), each phrase is performed with an arch-like tempo and sound level curve, starting slow/soft, becoming faster/louder in the middle, and followed by a ritardando/decrescendo at the end.


Table 1. Cue profiles for each emotion as outlined by Gabrielsson and Juslin, compared with the rule setup used for synthesis with Director Musices.

Emotion and expressive cue | Gabrielsson and Juslin | Macro-rule in Director Musices

Fear
  Tempo | Irregular | Tone IOI is lengthened by 80%
  Sound level | Low | Sound Level is decreased by 6 dB
  Articulation | Mostly staccato or non-legato | Duration Contrast Articulation rule
  Time deviations | Large; structural reorganizations; final acceleration (sometimes) | Duration Contrast rule; Punctuation rule; Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level; Final Ritardando

Anger
  Tempo | Very rapid | Tone IOI is shortened by 15%
  Sound level | Loud | Sound Level is increased by 8 dB
  Articulation | Mostly non-legato | Duration Contrast Articulation rule
  Time deviations | Moderate; structural reorganizations; increased contrast between long and short notes | Duration Contrast rule; Punctuation rule; Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level

Happiness
  Tempo | Fast | Tone IOI is shortened by 20%
  Sound level | Moderate or loud | Sound Level is increased by 3 dB; High Loud rule
  Articulation | Airy | Duration Contrast Articulation rule
  Time deviations | Moderate | Duration Contrast rule; Punctuation rule; Final Ritardando rule

Sadness
  Tempo | Slow | Tone IOI is lengthened by 30%
  Sound level | Moderate or loud | Sound Level is decreased by 6 dB
  Articulation | Legato | (none listed)
  Time deviations | Moderate | Duration Contrast rule; Phrase Arch rule applied on phrase level; Phrase Arch rule applied on sub-phrase level
  Final ritardando | Yes | Obtained from the Phrase Arch rule with the next parameter

Solemnity
  Tempo | Slow or moderate | Tone IOI is lengthened by 30%
  Sound level | Moderate or loud | Sound Level is increased by 3 dB; High Loud rule
  Articulation | Mostly legato | Duration Contrast Articulation rule
  Time deviations | Relatively small | Punctuation rule
  Final ritardando | Yes | Final Ritardando rule

Tenderness
  Tempo | Slow | Tone IOI is lengthened by 30%
  Sound level | Mostly low | Sound Level is decreased by 6 dB
  Articulation | Legato | (none listed)
  Time deviations | Diminished contrast between long and short notes | Duration Contrast rule
  Final ritardando | Yes | Final Ritardando rule


The rule can be applied to different hierarchical phrase levels. Final Ritardando (Friberg and Sundberg 1999) introduces a ritardando at the end of the piece, similar to a stopping runner.
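Two of the rules described above have conditions simple enough to sketch. The first function applies the 30–600 msec IOI window of Duration Contrast Articulation with a hypothetical constant pause; the second computes a final ritardando tempo curve using the stopping-runner model as we recall it from Friberg and Sundberg (1999), v(x) = (1 + (w^q − 1)x)^(1/q), where x is the normalized position within the ritardando, w the final tempo relative to the initial one, and q the curvature. Both are simplified illustrations rather than the DM implementation, and the default w = 0.5 is invented.

```python
def duration_contrast_articulation(iois_ms, pause_ms=20.0, k=1.0):
    """Off-time (DRO, msec) added after each note whose IOI lies between 30 and 600 msec.

    pause_ms is a hypothetical constant; the size of the real rule's pause is not given here."""
    dro = []
    for i, ioi in enumerate(iois_ms):
        has_next = i < len(iois_ms) - 1   # a micropause needs a following note
        dro.append(k * pause_ms if has_next and 30.0 <= ioi <= 600.0 else 0.0)
    return dro

def final_ritardando_tempo(x, w=0.5, q=3.0):
    """Normalized tempo at normalized position x (0 = start, 1 = end of the ritardando).

    Assumed model: v(x) = (1 + (w**q - 1) * x) ** (1 / q); q = 3 is the default in Table 2."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    return (1.0 + (w ** q - 1.0) * x) ** (1.0 / q)
```

The curve starts at the original tempo (v = 1 at x = 0) and ends at the final tempo w (at x = 1); larger values of q keep the tempo high for longer before the final slowing.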

It was also necessary to introduce two new rules for controlling overall tempo and sound level. The Tone Duration rule shortens or lengthens all notes in the score by a constant percentage. The Sound Level rule decreases or increases the sound level (SL) of all notes in the score by a constant value in decibels. The performance variables and the input parameters of these eight rules are listed in Table 2.

The resulting groups of rules, i.e., macro-rules, for each intended emotion are listed in the right column of Table 1. The parameters for each of the rules are shown graphically in Figure 1. The rules contained in a macro-rule are automatically applied in sequence, one after the other, to the input music score. The effects produced by each rule are added to the effects produced by previous rules.
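The two new global rules and the sequential, additive application of a macro-rule can be sketched together. This is a simplified model under our own assumptions: the class, function names, and data layout are hypothetical, and the percentage is taken relative to the nominal IOI. Only the anger values (IOI shortened by 15%, sound level raised by 8 dB) come from Table 1, and 187 quarter notes per minute is the tempo used for the "no-expression" version of Ekorrn.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Note:
    ioi_ms: float      # nominal inter-onset interval from the score
    dr: float = 0.0    # accumulated IOI deviation (msec)
    sl: float = 0.0    # accumulated sound level deviation (dB)

# A rule inspects the notes (with the deviations added so far) and proposes (d_dr, d_sl) per note.
Rule = Callable[[List[Note]], List[Tuple[float, float]]]

def tone_duration(percent: float) -> Rule:
    """Lengthen (percent > 0) or shorten (percent < 0) every IOI by a constant percentage."""
    return lambda notes: [(n.ioi_ms * percent / 100.0, 0.0) for n in notes]

def sound_level(delta_db: float) -> Rule:
    """Raise or lower the sound level of every note by a constant number of decibels."""
    return lambda notes: [(0.0, delta_db) for _ in notes]

def apply_macro_rule(notes: List[Note], rules: List[Tuple[Rule, float]]) -> List[Note]:
    """Apply (rule, k) pairs in sequence, adding each k-scaled effect to the previous ones."""
    for rule, k in rules:
        for note, (d_dr, d_sl) in zip(notes, rule(notes)):
            note.dr += k * d_dr
            note.sl += k * d_sl
    return notes

def quarter_ioi_ms(qpm: float) -> float:
    """IOI of one quarter note at a tempo given in quarter notes per minute."""
    return 60000.0 / qpm

q = quarter_ioi_ms(187.0)   # about 320.9 msec
angry = apply_macro_rule([Note(q), Note(2 * q)],
                         [(tone_duration(-15.0), 1.0), (sound_level(8.0), 1.0)])
```

In this example, the first note's performed IOI, `angry[0].ioi_ms + angry[0].dr`, is about 272.7 msec, which corresponds to 220 quarter notes per minute, and every note is 8 dB louder. The remaining rules of a macro-rule would be added to the list in the same way, each with its own k.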

As an example, the resulting deviations for the "angry" version of a Swedish nursery rhyme (Ekorrn satt i granen, or The Squirrel Sat on the Fir Tree, composed by Alice Tegnér and henceforth referred to as Ekorrn) are shown in Figure 2. The relative time deviation for each note's IOI varied between –20% and –40%. The negative values imply that the original tempo was slower, i.e., that the "angry" version was played faster, in accordance with the observations of Gabrielsson and Juslin. The IOI deviation curve contains small and quick oscillations; this reflects our attempt to reproduce what Gabrielsson and Juslin described as "moderate variations in timing". The large time deviations associated with short notes were produced by the Duration Contrast rule, thus realizing the "increased contrast between long and short notes" noted by Gabrielsson and Juslin. The graph also shows that a slight accelerando/crescendo appears at the end instead of a final ritardando/decrescendo.

The off-time duration (DRO) reached a maximum of 110 msec at the endings of phrases and subphrases. The Duration Contrast Articulation rule introduced small articulation pauses after all comparatively short notes, thus producing something equivalent to the "mostly non-legato articulation" observed by Gabrielsson and Juslin. The Punctuation rule inserted larger articulation pauses at automatically detected structural boundaries. The overall net result was that all DRO values were positive.

At the endings of phrases and subphrases, the sound level increased, an effect caused by the inversion of the Phrase Arch rule. This rule attempted to realize the "structural reorganization" proposed by Gabrielsson and Juslin. The sound level curve shows positive deviations, corresponding to the increased sound level also noted by Gabrielsson and Juslin. A more detailed description of the macro-rule used for the "angry" version of Ekorrn can be found in a previous work by the authors (Bresin and Friberg 2000).

Listening Experiment

According to Juslin (1997b), forced-choice judgments and free-labeling judgments give similar results when listeners attempt to decode a performer's intended emotional expression. Therefore, it was considered sufficient to make a forced-choice listening test to assess the efficiency of the emotional communication.

Music

Two clearly different pieces of music were used; they are given in Figures 3 and 4. One was the melody line of Ekorrn, written in a major mode. The other was a computer-generated piece in a minor mode (henceforth called Mazurka), written in an attempt to portray the musical style of Frédéric Chopin (Cope 1992). The phrase structure with regard to the levels of sub-phrase (level 6), phrase (level 5), and piece (level 4) was marked in each score (shown in Figures 3 and 4), thus meeting the demands of the Phrase Arch rule.

Performances

Applying the macro-rules listed in Table 1, using the k-values shown in Figure 1, seven different versions of each of the two scores were generated.


Figure 1. Values used for the indicated rule parameters in the various rules for the emotions tenderness (T), sadness (Sa), solemnity (So), happiness (H), anger (A), and fear (F). Each rule is represented by a panel.


Table 2

(a) List of the variables affected by the rules and of the parameters used for setting up the rules

Rule name | Affected variables | Parameters (default values)
High Loud | sl | (none listed)
Duration Contrast | dr, sl | amp 1, dur 1
Duration Contrast Articulation | dro | (none listed)
Punctuation | dr, dro | dur 1, duroff 1
Phrase Arch | dr, sl | phlevel 7, power 2, amp 1, next 1, 2next 1, turn 2, last 1, acc 1
Final Ritard | dr | q 3

(b) Description of the affected variables

sl: Sound level in decibels relative to a constant value defined in DM (default value is sl = 0)
dr: Total inter-onset duration in msec
dro: Off-time duration, i.e., offset to next onset

(c) Description of the parameters

amp: Sets the sound level as a factor multiplied by the default value
dur: Amount of the effect on the duration
duroff: Amount of the effect on the off-time duration
phlevel: Indicates the level of phrasing at which the rule produces the effect
power: Determines the shape of the accelerando and ritardando functions
next: Used to modify the amount of ritardando for phrases that also terminate a phrase at the next higher level
turn: The position of the turning point between the accelerando and the ritardando
last: Changes the duration of the last note of each phrase
acc: Controls the amount of accelerando, expressed in terms of a factor multiplied by k2
q: Curvature parameter

Note: The effect of each rule can be amplified or damped by multiplying it with an emphasis parameter called k.


Figure 2. Percent IOI deviation (top), off-time deviation (middle), and sound level deviation (bottom) for the "angry" version of Ekorrn.


These versions represented our modeling of "fear", "anger", "happiness", "sadness", "solemnity", and "tenderness". In addition, a "dead-pan" version, henceforth called the "no-expression" version, was generated for the purpose of comparison. The tempo for the "no-expression" version was 187 quarter notes per minute for Ekorrn and 96 quarter notes per minute for Mazurka. The same macro-rules were used for both Ekorrn and Mazurka.

The polyphonic structure of Mazurka, represented as a multi-track MIDI file, required the use of a synchronization rule, the Melodic Sync rule. This rule generates a new track consisting of all tone onsets in all tracks. When there are several simultaneous onsets, the note with the maximum melodic charge is selected. All rules that modify the IOI are applied to this synchronization track, and the resulting IOIs are transferred back to the original tracks (Sundberg, Friberg, and Frydén 1989). Rules that act on parameters other than IOI are applied to each track independently.
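The synchronization procedure can be sketched in Python as follows. This is our reading of the description, not the DM code: melodic charge is assumed to be precomputed per note, onsets are integer score ticks, only onset times are transferred back (durations are ignored), and `toy_rule` is a hypothetical IOI rule used only to exercise the mechanism.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import accumulate
from typing import Callable, Dict, List


@dataclass
class Note:
    onset: int     # nominal onset in score ticks
    charge: float  # melodic charge, assumed to be computed beforehand


# An IOI rule receives the sync-track notes and their nominal IOIs and
# returns the modified IOIs (same units, same length).
IoiRule = Callable[[List[Note], List[int]], List[float]]


def melodic_sync(tracks: List[List[Note]]) -> List[Note]:
    """One note per distinct onset; among simultaneous onsets keep the max charge."""
    by_onset: Dict[int, List[Note]] = defaultdict(list)
    for track in tracks:
        for note in track:
            by_onset[note.onset].append(note)
    return [max(by_onset[t], key=lambda n: n.charge) for t in sorted(by_onset)]


def retime_tracks(tracks: List[List[Note]], end: int,
                  rule: IoiRule) -> List[List[float]]:
    """Apply an IOI rule to the sync track and transfer new onsets to all tracks."""
    sync = melodic_sync(tracks)
    onsets = [n.onset for n in sync] + [end]  # `end`: score end time (assumed)
    nominal = [b - a for a, b in zip(onsets, onsets[1:])]
    new_onsets = list(accumulate(rule(sync, nominal), initial=float(onsets[0])))
    # Every note onset occurs in the sync track, so this lookup is complete.
    new_time = dict(zip(onsets, new_onsets))
    return [[new_time[n.onset] for n in track] for track in tracks]


def toy_rule(sync: List[Note], iois: List[int]) -> List[float]:
    """Hypothetical rule: lengthen each IOI by 10% per unit of melodic charge."""
    return [ioi * (1 + 0.1 * n.charge) for n, ioi in zip(sync, iois)]


melody = [Note(0, 2.0), Note(480, 4.0), Note(960, 0.0)]
bass = [Note(0, 6.0), Note(960, 1.0)]
retimed = retime_tracks([melody, bass], end=1440, rule=toy_rule)
print([[round(t, 3) for t in track] for track in retimed])
# [[0.0, 768.0, 1440.0], [0.0, 1440.0]]
```

Because both tracks are retimed from the same sync track, simultaneous notes in different tracks stay simultaneous after the IOI rules are applied.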

Figures A1 and A2 in the Appendix show the relative deviations of IOI and the variation of DRO and sound level for all six performances of each piece. Negative DRO values indicate a legato articulation of two subsequent notes, while positive values indicate a staccato articulation. Negative values of sound level deviation indicate a softer performance as compared with the "no-expression" performance.
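The three quantities plotted in the Appendix figures can be computed per note as in the following Python sketch. It assumes the emotional and "no-expression" renderings are aligned note by note; the IOI, duration, and dB values are hypothetical.

```python
from typing import List


def percent_ioi_deviation(played: List[float], reference: List[float]) -> List[float]:
    """IOI deviation of each note, in percent of the no-expression IOI."""
    return [100.0 * (p - r) / r for p, r in zip(played, reference)]


def off_times(iois: List[float], durations: List[float]) -> List[float]:
    """DRO: time from a note's offset to the next onset (IOI minus duration)."""
    return [ioi - dur for ioi, dur in zip(iois, durations)]


def articulation(dro: float) -> str:
    """Negative DRO means overlapping notes (legato); positive means a gap (staccato)."""
    if dro < 0:
        return "legato"
    if dro > 0:
        return "staccato"
    return "neither"


def sound_level_deviation(played_db: List[float], reference_db: List[float]) -> List[float]:
    """Negative values mean the note is softer than in the no-expression version."""
    return [p - r for p, r in zip(played_db, reference_db)]


reference = [320.0, 320.0, 640.0]  # hypothetical no-expression IOIs (ms)
played = [300.0, 340.0, 704.0]     # hypothetical emotional-version IOIs (ms)
durations = [330.0, 250.0, 704.0]  # hypothetical sounding durations (ms)

print(percent_ioi_deviation(played, reference))                 # [-6.25, 6.25, 10.0]
print([articulation(d) for d in off_times(played, durations)])  # ['legato', 'staccato', 'neither']
```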

Fourteen performances (7 emotions × 2 examples), originally stored in MIDI files, were recorded as 44.1 kHz, 16-bit uncompressed sound files. A grand piano sound (taken from the Kurzweil sound samples of the Pinnacle Turtle Beach sound card) was used for the synthesis.

Figure 3. The melody Ekorrn. The brackets above the staff correspond to different phrase levels: piece (4), phrase (5), and sub-phrase (6).

Figure 4. The first 12 (of 23) measures of Mazurka. The brackets mark the phrase structure as in Figure 3. The entire composition can be found on the Internet at arts.ucsc.edu/faculty/cope/home.

Figure 3

Figure 4


Figure 5. Ordered average percentage of "same" responses given by each subject for the repetitions of Ekorrn and Mazurka.

Listeners

Twenty subjects, 24–50 years of age and consisting of five females and 15 males, volunteered as listeners. None were professional musicians or music students, although 18 of the subjects stated that they currently or formerly played an instrument on a non-regular basis. The subjects all worked at the Department of Speech, Music and Hearing of the Royal Institute of Technology, Stockholm.

Procedure

The subjects listened to the examples individually over Sennheiser HD435 Manhattan headphones adjusted to a comfortable level. Each subject was instructed to identify the emotional expression of each example as one of seven alternatives: "fear," "anger," "happiness," "sadness," "solemnity," "tenderness," or "no-expression." The responses were automatically recorded with the Visor software system, specially designed for listening tests (Granqvist 1996). Visor presents the sound stimuli as anonymous boxes in random order and provides seven empty columns, corresponding to the seven different emotions, into which the boxes may be placed. Subjects were instructed to (1) double-click on each of the boxes and listen to its music, (2) identify the emotion conveyed by the performance, (3) drag the box to the corresponding column, and (4) continue until all the boxes were placed. Subjects could listen to each music sample as many times as needed, and they were given the opportunity to associate any number of boxes with the same emotion column.

Each session contained two sub-tests, each repeated once, presenting the seven performances of (1) Ekorrn, (2) Mazurka, (3) Ekorrn again, and (4) Mazurka again. The order of the stimuli within each sub-test was automatically randomized for each subject by the Visor program. The average duration of an experiment session was approximately eleven minutes.
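The session structure can be written down as a small Python sketch. Visor's actual randomization method is not described, so Python's `random.shuffle` with one seed per subject stands in for it; `build_session` and the seed are our own names.

```python
import random
from typing import List, Tuple

EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]
SUBTEST_ORDER = ["Ekorrn", "Mazurka", "Ekorrn", "Mazurka"]  # (1)-(4) in the text


def build_session(seed: int) -> List[List[Tuple[str, str]]]:
    """Four sub-tests in fixed order; stimulus order shuffled within each one."""
    rng = random.Random(seed)  # one seed per subject, for reproducibility
    session = []
    for piece in SUBTEST_ORDER:
        block = [(piece, emotion) for emotion in EMOTIONS]
        rng.shuffle(block)
        session.append(block)
    return session


session = build_session(seed=7)
print(len(session), sum(len(block) for block in session))  # 4 28
```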

Analysis

Consistency of the subjects' answers was analyzed from the repeated stimuli in the test. Four subjects who gave the same answers for repeated stimuli in fewer than 36% of the cases were eliminated from subsequent analysis (see Figure 5).
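The consistency criterion amounts to the following Python sketch, assuming each subject contributes 14 repeated stimuli (7 versions of each of the two pieces). With 14 pairs, 5 matches (about 35.7%) falls just below the 36% threshold and 6 matches (about 42.9%) passes. The two subjects below are hypothetical.

```python
from typing import Dict, Hashable, Tuple

EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]
Answers = Dict[Hashable, str]  # stimulus id -> chosen emotion


def consistency(first: Answers, repeat: Answers) -> float:
    """Share of stimuli that received the same label on both presentations."""
    same = sum(first[s] == repeat[s] for s in first)
    return same / len(first)


def screen_subjects(subjects: Dict[str, Tuple[Answers, Answers]],
                    threshold: float = 0.36) -> Dict[str, float]:
    """Keep subjects whose consistency is at least the threshold."""
    kept = {}
    for sid, (first, repeat) in subjects.items():
        c = consistency(first, repeat)
        if c >= threshold:
            kept[sid] = c
    return kept


STIMULI = [(piece, e) for piece in ("Ekorrn", "Mazurka") for e in EMOTIONS]


def answers_agreeing_on(n: int) -> Tuple[Answers, Answers]:
    """Hypothetical subject whose repeat answers match the first ones on n stimuli."""
    first = {s: s[1] for s in STIMULI}
    repeat = {s: s[1] if i < n else EMOTIONS[(EMOTIONS.index(s[1]) + 1) % len(EMOTIONS)]
              for i, s in enumerate(STIMULI)}
    return first, repeat


subjects = {"A": answers_agreeing_on(5), "B": answers_agreeing_on(6)}
print(screen_subjects(subjects))  # only "B" is kept
```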

The results of the listening test were analyzed statistically. We conducted a three-way repeated-measures analysis of variance (ANOVA) with fixed factors Intended Emotion (7 levels), Perceived Emotion (7 levels), and Piece of Music (2 levels), and with listeners' choices as the dependent variable. For each intended emotion, listeners' choices were coded by marking the subject's chosen emotion with the value one and the remaining six emotions with the value zero. In addition, a two-way ANOVA was conducted for each intended emotion separately, with fixed factors Perceived Emotion (7 levels) and Piece of Music (2 levels).
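The 0/1 coding of the dependent variable can be sketched in Python as below. Averaging the coded values within a (piece, intended, perceived) cell gives the proportion of responses in that cell, that is, one entry of a confusion matrix. The last lines check the size of the coded data set: the arithmetic is consistent with the error degrees of freedom reported in the Results (3038), although how the data were actually arranged is our inference rather than something the text states.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]
Row = Tuple[str, str, str, str, int]  # subject, piece, intended, perceived, y


def code_response(chosen: str) -> List[int]:
    """1 for the emotion the subject chose, 0 for the other six."""
    return [1 if emotion == chosen else 0 for emotion in EMOTIONS]


def to_long_format(responses: List[Tuple[str, str, str, str]]) -> List[Row]:
    """Expand each (subject, piece, intended, chosen) response into 7 coded rows."""
    rows: List[Row] = []
    for subject, piece, intended, chosen in responses:
        for perceived, y in zip(EMOTIONS, code_response(chosen)):
            rows.append((subject, piece, intended, perceived, y))
    return rows


def cell_means(rows: List[Row]) -> Dict[Tuple[str, str, str], float]:
    """Mean coded value per (piece, intended, perceived) cell."""
    sums: Dict[Tuple[str, str, str], float] = defaultdict(float)
    counts: Dict[Tuple[str, str, str], int] = defaultdict(int)
    for _, piece, intended, perceived, y in rows:
        sums[(piece, intended, perceived)] += y
        counts[(piece, intended, perceived)] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


# 16 retained subjects x 2 pieces x 7 intended emotions x 2 presentations,
# each response expanded into 7 coded rows; 7 x 7 x 2 = 98 model cells.
n_rows = 16 * 2 * 7 * 2 * len(EMOTIONS)
print(n_rows, n_rows - 7 * 7 * 2)  # 3136 3038
```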

Each emotional performance was synthesized with its own macro-rule, which includes a specific subset of DM rules, as already mentioned (see Table 1, Table 2, and Figure 1). A total of 17 parameters were involved in these macro-rules, some of which were interrelated. An attempt was made to reduce the number of dimensions of this parameter space by means of a principal component analysis of these parameters.
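A principal component analysis of this kind can be sketched with NumPy's SVD. The text does not say whether the parameters were standardized before the analysis; this sketch standardizes each column as an assumption, and the 7 × 17 matrix (one row per macro-rule setup, one column per parameter) is random placeholder data, not the actual parameter values.

```python
import numpy as np


def pca(X: np.ndarray, n_components: int = 2):
    """PCA via SVD of the column-standardized data matrix.

    X has one row per macro-rule setup and one column per rule parameter.
    Returns component scores, explained-variance ratios, and loadings.
    """
    Z = X - X.mean(axis=0)
    std = Z.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # leave constant parameters unscaled
    Z = Z / std
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    scores = U[:, :n_components] * s[:n_components]
    return scores, explained[:n_components], Vt[:n_components]


# Placeholder data only: 7 setups x 17 parameters drawn at random.
rng = np.random.default_rng(0)
X = rng.normal(size=(7, 17))
scores, explained, loadings = pca(X)
print(scores.shape, explained.round(2))
```

The two columns of `scores` give each setup's coordinates in a two-dimensional factor space, and `explained` gives the share of total variance carried by each factor.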

Results and Discussion

The three-way ANOVA revealed that the main factor Perceived Emotion was significant (F(6, 3038) = 3.917, p < 0.0007), implying that, overall, listeners chose "angry" and "happy" more often than the other categories. However, the main factors Intended Emotion and


Piece of Music were not significant. The interaction between Intended Emotion and Perceived Emotion was significant (F(42, 3038) = 55.505, p < 0.0001). The interaction between Intended Emotion, Perceived Emotion, and Piece of Music was also significant (F(42, 3038) = 2.639, p < 0.0001), showing an influence of the score on the perception of the intended emotion. An interesting result emerging from the three-way ANOVA was a significant interaction between Piece of Music and Perceived Emotion (F(6, 3038) = 3.404, p < 0.0024): subjects rated performances of Mazurka as mainly "angry" and "sad," while performances of Ekorrn were perceived as more "happy" and "tender." Both pieces were perceived with almost the same rate of "solemnity" and "no-expression," as shown in Figure 6. This result confirms the typical association of "happiness" with major mode and "sadness" with minor mode.

The significance of the main factor Perceived Emotion in the three-way ANOVA led us to run a two-way ANOVA for each of the seven Intended Emotions, in order to verify that the effect of Perceived Emotion was significant for each of them. In each of the seven two-way ANOVAs, the effects of Perceived Emotion were, as expected, significant (p < 0.0001), and the effects of Piece of Music were not significant. Figure 7 shows the percentage of "correct" responses for the two pieces. In all cases but one, these percentages well exceeded the 14% chance level and were in all

cases but one the most frequent response. To facilitate a more detailed analysis, the subjects' responses for each version of Ekorrn and Mazurka are presented as confusion matrices in Tables 3a and 3b. An interesting outcome in both confusion matrices is their high degree of symmetry along the main diagonal, except for a few terms, which implies consistency in the subjects' choices.
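The "all cases but one" statement can be checked directly against the confusion matrices. The Python sketch below uses the percentages of Table 3 (each row sums to 100) and flags every intended emotion whose correct-response rate is at or below chance (100/7, about 14.3%) or is not the most frequent response in its row.

```python
EMOTIONS = ["fear", "anger", "happiness", "sadness",
            "solemnity", "tenderness", "no-expression"]

# Rows: intended emotion; columns: perceived emotion (percent), from Table 3.
TABLE_3 = {
    "Ekorrn": [
        [44, 0, 3, 3, 0, 41, 9],
        [3, 91, 0, 0, 6, 0, 0],
        [0, 3, 97, 0, 0, 0, 0],
        [3, 0, 0, 69, 0, 28, 0],
        [3, 3, 3, 6, 72, 0, 13],
        [6.5, 0, 0, 28, 3, 53, 9.5],
        [0, 0, 9, 0, 13, 6, 72],
    ],
    "Mazurka": [
        [47, 0, 0, 13, 3, 31, 6],
        [6, 91, 0, 3, 0, 0, 0],
        [6, 28, 63, 0, 3, 0, 0],
        [3, 0, 0, 59, 0, 38, 0],
        [3, 16, 0, 13, 59, 0, 9],
        [13, 0, 0, 50, 3, 9, 25],
        [3, 0, 9.5, 0, 22, 9.5, 56],
    ],
}
CHANCE = 100 / len(EMOTIONS)  # about 14.3%


def weak_cells(table):
    """Intended emotions not identified above chance or not the modal response."""
    flagged = []
    for piece, matrix in table.items():
        for i, row in enumerate(matrix):
            correct = row[i]  # diagonal entry: intended == perceived
            if correct <= CHANCE or correct < max(row):
                flagged.append((piece, EMOTIONS[i]))
    return flagged


print(weak_cells(TABLE_3))  # [('Mazurka', 'tenderness')]
```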

The "tenderness" version of Mazurka was the only case that was not correctly identified (9%). The two-way ANOVA for the intended emotion "tenderness" revealed a significant interaction between Piece of Music and Perceived Emotion (F(6, 434) = 7.519, p < 0.0001). To explain this significance, a post hoc comparison using the Scheffé test was applied to all possible pairs of the seven levels of the main factor Perceived Emotion, for each Piece of Music separately. The Scheffé test revealed that Ekorrn was perceived as "tender," while Mazurka was perceived as "sad." According to Juslin (1997b), the "tenderness" version is often performed with a higher sound level than the "sadness" version, whereas the same sound level was used for both versions in this experiment. This observation may help us achieve better macro-rules for "sadness" and "tenderness" performances in future work.

For happiness, the responses differed substantially between Ekorrn (97%) and Mazurka (63%). This was confirmed by a significant interaction between Piece of Music and Perceived Emotion (F(6, 434) = 11.453, p < 0.0001). For the remaining five Intended Emotions, the interaction between Perceived Emotion and Piece of Music was not statistically significant.

Figure 6. Percentage distribution of subjects' choices over the two pieces, Ekorrn and Mazurka.

Figure 7. Percent "correct" responses for the two test examples, obtained for each of the indicated emotions.

Table 3

Confusion matrix (%) for the classification test of seven synthesized performances of (a) Ekorrn and (b) Mazurka. Rows give the intended emotion and columns the perceived emotion.

(a) Ekorrn

Intended \ Perceived   Fear  Anger  Happiness  Sadness  Solemnity  Tenderness  No Expression
Fear                     44      0          3        3          0          41              9
Anger                     3     91          0        0          6           0              0
Happiness                 0      3         97        0          0           0              0
Sadness                   3      0          0       69          0          28              0
Solemnity                 3      3          3        6         72           0             13
Tenderness              6.5      0          0       28          3          53            9.5
No Expression             0      0          9        0         13           6             72

(b) Mazurka

Intended \ Perceived   Fear  Anger  Happiness  Sadness  Solemnity  Tenderness  No Expression
Fear                     47      0          0       13          3          31              6
Anger                     6     91          0        3          0           0              0
Happiness                 6     28         63        0          3           0              0
Sadness                   3      0          0       59          0          38              0
Solemnity                 3     16          0       13         59           0              9
Tenderness               13      0          0       50          3           9             25
No Expression             3      0        9.5        0         22         9.5             56

The fear version of Ekorrn was classified both as "afraid" and as "tender": 44% of the subjects perceived it as "afraid," and 41% perceived it as "tender." The fear version of Mazurka was significantly perceived as more "afraid" and less "tender."

These differences in the perception of the Ekorrn and Mazurka performances could be partly because Mazurka is written in a minor mode, which in the Western music tradition is often associated with moods of sadness, fear, or anger.

From the principal component analysis, two principal factors emerged, explaining 61% (Factor 1) and 29% (Factor 2) of the total variance. Figure 8 presents the main results in terms of the distribution of the different setups in the two-dimensional space.

Factor 1 was closely related to deviations of sound level and tempo. Louder and quicker performances had negative values of Factor 1 and were delimited by the coordinates of "anger" and "solemnity." Softer and slower performances had


positive values of Factor 1 and were delimited by the coordinates of "sadness" and "fear."

Factor 2 was closely related to the articulation and phrasing variables. This distribution resembles those presented in previous works and obtained using other methods (De Poli, Rodà, and Vidolin 1998; Orio and Canazza 1998; Canazza et al. 1998). The quantity values (k-values) for the Duration Contrast rule in the macro-rules, also shown in Figure 8, increased clockwise from the fourth quadrant to the first. Figure 8 also shows an attempt to interpret qualitatively the variation of this rule in the space: the contrast between note durations was very slight in the "tender" and "sad" performances (shorter notes were played longer), strong in the "angry" and "happy" performances, and strongest in the "fear" version.

The acoustical cues resulting from the six synthesized emotional performances are presented in Figure 9. The average IOI deviations are plotted against the average sound level, together with their standard deviations, for all six performances of each piece. Negative values of sound level deviation indicate a softer performance compared to the "no-expression" version. "Anger" and "happiness" performances were thus played quicker and louder, while "tenderness," "fear," and "sadness" performances were slower and softer relative to a "no-expression" rendering. Negative values of IOI

deviation imply a tempo faster than the original, and vice versa for positive values. The "fear" and "sadness" versions have larger standard deviations, obtained mainly by exaggerating the duration contrast but also by applying the phrasing rules. These acoustic properties fit very well with the observations of Gabrielsson and Juslin, and also with performance indications in the classical music tradition. For example, in his Versuch über die wahre Art das Clavier zu spielen (1753, reprinted 1957), Carl Philipp Emanuel Bach wrote: "... activity is expressed in general with staccato in Allegro and tenderness with portato and legato in Adagio ...." Figure 9 could be interpreted as a mapping of Figure 8 into a two-dimensional acoustical space.
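Each point in Figure 9 reduces a performance to two means and two standard deviations. A minimal Python sketch of that reduction, assuming per-note percent IOI deviations and sound level deviations in dB (both relative to the "no-expression" version) are already available; the input values are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List


def summarize(ioi_dev_pct: List[float], sl_dev_db: List[float]) -> Dict[str, float]:
    """Mean and standard deviation of per-note IOI and sound level deviations."""
    return {
        "ioi_mean": mean(ioi_dev_pct), "ioi_sd": stdev(ioi_dev_pct),
        "sl_mean": mean(sl_dev_db), "sl_sd": stdev(sl_dev_db),
    }


def describe(summary: Dict[str, float]) -> str:
    """Negative IOI deviation means faster, negative sound level means softer.

    For simplicity, a zero mean is reported as "slower" or "louder".
    """
    tempo = "faster" if summary["ioi_mean"] < 0 else "slower"
    level = "softer" if summary["sl_mean"] < 0 else "louder"
    return f"{tempo} and {level} than no-expression"


# Hypothetical per-note deviations for one emotional version.
s = summarize([-10.0, -6.0, -8.0], [2.0, 4.0, 3.0])
print(describe(s))  # faster and louder than no-expression
```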

The Gabrielsson and Juslin experiment included the cues tone onset and decay, timbre, and vibrato. None of these can be implemented in piano performances, and thus they had to be excluded from the present experiment. Yet our identification results were similar to, or even better than, those found by Gabrielsson and Juslin. A possible explanation is that listeners' expectations regarding expressive deviations vary depending on the instrument played.

Finally, the sound level (SL) and time deviations used here in the seven performances can be compared to those observed in other studies of expressiveness in singing and speech. Figure 10 presents such a comparison. For tempo, the values were normalized with respect to "happy," because "neutral" was not included in all the studies compared. In many cases, striking similarities can be observed. For example, most investigations reported a slower tempo and a lower mean sound level in "sad" than in "happy." The similarities indicate that similar strategies are used to express emotions in instrumental music, singing, and speech.
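The normalization step can be sketched as follows. The text does not say whether "normalized with respect to happy" means a ratio or a difference; this Python sketch assumes a ratio, and the tempo values are hypothetical.

```python
from typing import Dict


def normalize_to_happy(tempi: Dict[str, float]) -> Dict[str, float]:
    """Express each emotion's tempo as a ratio to the "happy" tempo (assumed)."""
    ref = tempi["happy"]
    return {emotion: t / ref for emotion, t in tempi.items()}


print(normalize_to_happy({"happy": 120.0, "sad": 90.0, "angry": 132.0}))
# {'happy': 1.0, 'sad': 0.75, 'angry': 1.1}
```

Using a ratio makes studies with different absolute tempi comparable, since each study's "happy" version maps to 1.0.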

General Discussion