
8/12/2019 Computer System Design By Lampson Hints



There are references for nearly all the specific examples, but for only a few of the ideas; many of these are part of the folklore, and it would take a lot of work to track down their multiple sources.

It seemed appropriate to decorate a guide to the doubtful process of system design with quotations from Hamlet. Unless otherwise indicated, they are taken from Polonius' advice to Laertes (I iii 57-82).

And these few precepts in thy memory
Look thou character.

Some quotations are from other sources, as noted. Each one is intended to apply to the text which follows it.

F u n c t i o n a l i t yW H Y ? D o e s i t w o r k ?

W H E R E ?

C o m p l e t e n e s s

I n t e r f a c e

I m p l e m e n t a t i o n

S e p a r a t e n o r m a l a n d ,,w o r s t c a s e I

Do one th ing wel l :

Don t generalizeGet it right

D o n t h i d e p o w e rU s e p r o c e d u r e a r g u m e n t sL e a v e i t t o t h e c l i e n t

K e e p b a s i c i n t e r f a c e s s t a b l eK e e p a p l a c e t o s t a n d

P l a n to t h r o w o n e a w a yK e e p s e c r e t sU s e a g o o d i d e a a g a i nD i v i d e a n d c o n q u e r

of them depend on the notion of aninterface which sepa-rates an implementation of some abstraction from theclients who use the abstraction. The interface between twoprograms consists o f the set o fassumptions that each pro-grammer needs to make about the other program in orderto demonstrate the correctness of his program (paraphras-ed from [5]). Defining interfaces is the most im portan t partof system design. Usu ally it is also the mo st difficult, sincethe interface design must satisfy three conflicting require-ments: an interface should be simple, it should be com -plete, and it should admit a sufficiently small and fastimplementation. Alas, all too often the assumptions em-bodied in an interface turn out to be misconceptions in-stead. Parnas' classic paper [38] and a mo re rec ent one o n

S p e e d F a u l t t o l e r a n c eI s i t f a s t e n o u g h ? D o e s i t k e e p w o r k i n g ?

S a f e t y f i r s tS h e d l o a dE n d - t o - e n d E n d - t o - e n d

Make i t f a s t E nd- to -end

S p l i t r e s o u r c e s L o g u p d a t e sS t a t i c a n a l y s i s M a k e a c t i o n s a t o m i cD y n a m i c t r a n s l a t i o n

C a c h e a n s w e r sU s e h i n t sU s e b r u t e f o r c eC o m p u t e i n b a c k g r o u n dBatc h p rocess ing

M a k e a c t i o ns a t o m i cU s e h i n t s

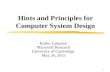

F i g u r e S u m m a r yof the s l o g a n s

Each hint is summarized by a slogan, which whenproperly interpreted reveals its essence. Figure 1 organizesthe slogans alon g two axes:

Why it helps in making a good system: withfunctionality (does it work?), speed (is it fastenough?), or fault-tolerance (does it keep working?).

Where in the system design it helps: in ensuring com-pleteness, in choosing interfaces, or in devising im-plementations.

Double lines connect repetitions of the same slogan, singlelines con nect rela ted slogans.

The body of the paper is in three sections, according to

the why headings: functionality ( 2), speg d ( 3), an dfault-tolerance ( 4).

2 . F u n c t i o n a l i t y

The most important hints, and the vaguest, have to dowith obtaining the right fimctionality from a system. Most

device interfaces [5] offer exc ellent practical advice on thissubject.

The main reason that interfaces are difficult to design isthat each interface is a small programming language: it de-f ines a set of objects, and the operations that can be usedto manipulate the objects. Concrete syntax is not an issue,but every other aspect of programm ing language design ispresent. In the l ight of this observation, many of Hoare 'shints on prog ramm ing language design [19] can be read asa supplement to the present paper.

2.1 Keep it simple

Perfection is reached, not when there is no longer anything to add, but when there is no longer anything to take away. (A. Saint-Exupery)

Those friends thou hast, and their adoption tried,
Grapple them unto thy soul with hoops of steel;
But do not dull thy palm with entertainment
Of each new-hatch'd, unfledg'd comrade.


Do one thing at a time, and do it well. An interface should capture the minimum essentials of an abstraction. Don't generalize; generalizations are generally wrong.

We are faced with an insurmountable opportunity. (W. Kelley)

When an interface undertakes to do too much, the result is an implementation which is large, slow and complicated. An interface is a contract to deliver a certain amount of service: clients of the interface depend on the functionality, which is usually documented in the interface specification. They also depend on incurring a reasonable cost (in time or other scarce resources) for using the interface; the definition of "reasonable" is usually not documented anywhere. If there are six levels of abstraction, and each costs 50% more than is "reasonable", the service delivered at the top will miss by more than a factor of 10.
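The arithmetic behind that factor can be checked directly. The following is a minimal sketch, assuming that each of the six levels multiplies the cost of the level below it by 1.5 and that the overheads compound; the function name is illustrative only.

```python
def compounded_overhead(levels: int, per_level_factor: float) -> float:
    """Total cost multiplier when every level inflates the cost of the one below it."""
    return per_level_factor ** levels


# Six levels, each 50% more expensive than "reasonable".
total = compounded_overhead(6, 1.5)
print(f"{total:.2f}")  # 1.5**6 = 11.390625, which is more than a factor of 10
```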

If in doubt, leave it out. (Anonymous)

Exterminate features. (C. Thacker)

KISS: Keep It Simple, Stupid. (Anonymous)

On the other hand,

Everything should be made as simple as possible, but no simpler. (A. Einstein)

Thus, service must have a fairly predictable cost, and the interface must not promise more than the implementer knows how to deliver. Especially, it should not promise features needed by only a few clients, unless the implementer knows how to provide them without penalizing others. A better implementer, or one who comes along ten years later when the problem is better understood, might be able to deliver, but unless the one you have can do so, it is wise to reduce your aspirations.

For example, PL/1 got into serious trouble by attempting to provide consistent meanings for a large number of generic operations across a wide variety of data types. Early implementations tended to handle all the cases inefficiently, but even with the optimizing compilers of 15 years later, it is hard for the programmer to tell what will be fast and what will be slow [31]. A language like Pascal or C is much easier to use, because every construct has a roughly constant cost which is independent of context or arguments, and in fact most constructs have about the same cost.

Of course, these observations apply most strongly to interfaces which clients use heavily: virtual memory, files, display handling, arithmetic. A seldom used interface can sacrifice some performance for functionality: password checking, interpreting user commands, printing 72 point characters. (What this really means is that though the cost must still be predictable, it can be many times the minimum achievable cost.) And such cautious rules don't apply to research whose object is learning how to make better implementations. But since research may well fail, others mustn't depend on its success.

Algol 60 was not only an improvement on its predecessors, but also on nearly all its successors. (C. Hoare)

Examples of offering too much are legion. The Alto operating system [29] has an ordinary read/write-n-bytes interface to files, and was extended for Interlisp-D [7] with an ordinary paging system which stores each virtual page on a dedicated disk page. Both have small implementations (about 900 lines of code for files, 500 for paging) and are fast (a page fault takes one disk access and has a constant computing cost which is a small fraction of the disk access time, and the client can fairly easily run the disk at full speed). The Pilot system [42] (which succeeded the Alto OS) follows Multics and several other systems in allowing virtual pages to be mapped to file pages, thus subsuming file input/output into the virtual memory system. The implementation is much larger (about 11,000 lines of code) and slower (it often incurs two disk accesses to handle a page fault, and cannot run the disk at full speed). The extra functionality is bought at a high price.

This is not to say that a good implementation of this interface is impossible, merely that it is hard. This system was designed and coded by several highly competent and experienced people. Part of the problem is avoiding circularity: the file system would like to use the virtual memory, but virtual memory depends on files. Quite general ways are known to solve this problem [22], but they are tricky and lead easily to greater cost and complication in the normal case.

And, in this upshot, purposes mistook
Fall'n on th' inventors' heads. (V ii 387)

Another example illustrates how easily generality can lead to unexpected complexity. The Tenex system [2] has the following innocent-looking combination of features:

It reports a reference to an unassigned virtual page byan interrupt to the user program.

A system call is viewed as a machine instruction for an extended machine, and any reference it makes to an unassigned virtual page is thus similarly reported to the user program.

There is a system call CONNECT to obtain access to another directory; one of its arguments is a string containing the password for the directory. If the password is wrong, the call fails after a three second delay, to prevent guessing passwords at high speed.


CONNECT is implemented by a loop of the form

    for i := 0 to Length[DirectoryPassword] do
        if DirectoryPassword[i] ≠ PasswordArgument[i] then
            Wait three seconds; return BadPassword
        end if
    end loop;
    Connect to directory; return Success

It is possible to guess a password of length n in 64n tries on the average, rather than 128^n/2 (Tenex uses 7 bit characters in strings), by the following trick. Arrange the PasswordArgument so that its first character is the last character of a page and the next page is unassigned, and try each possible character as the first. If CONNECT reports a BadPassword, the guess was wrong; if the system reports a reference to an unassigned page, it was correct. Now arrange the PasswordArgument so that its second character is the last character of the page, and proceed in the obvious way.

This obscure and amusing bug went unnoticed by the designers because the interface provided by a Tenex system call is quite complex: it includes the possibility of a reported reference to an unassigned page. Or looked at another way, the interface provided by an ordinary memory reference instruction in system code is quite complex: it includes the possibility that an improper reference will be reported to the client, without any chance for the system code to get control first.
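The trick can be simulated in a few lines. This is only a sketch under stated assumptions: the page size, the UserMemory class, the UnassignedPage exception and the crack routine are all hypothetical stand-ins, and the three second delay is omitted. It shows why the page-fault report leaks one character at a time, so that each position costs at most 128 tries (64 on the average) instead of the whole password costing 128^n/2.

```python
PAGE_SIZE = 16                               # hypothetical page size
ALPHABET = [chr(c) for c in range(128)]      # 7-bit characters, as in Tenex


class UnassignedPage(Exception):
    """Stands in for the interrupt that reports a reference to an unassigned page."""


class UserMemory:
    """One assigned page; every address beyond it is unassigned."""

    def __init__(self):
        self.page = ["\0"] * PAGE_SIZE

    def write(self, addr, text):
        for offset, ch in enumerate(text):
            self.page[addr + offset] = ch

    def read(self, addr):
        if addr >= PAGE_SIZE:
            raise UnassignedPage(addr)
        return self.page[addr]


def make_connect(directory_password):
    """Build a CONNECT that compares character by character, like the loop above."""
    def connect(memory, start):
        for i in range(len(directory_password)):
            if directory_password[i] != memory.read(start + i):
                return "BadPassword"         # the real call waits three seconds first
        return "Success"
    return connect


def crack(connect):
    """Recover the password one character at a time using the page-fault report."""
    known, tries = "", 0
    while True:
        for ch in ALPHABET:
            tries += 1
            guess = known + ch
            memory = UserMemory()
            start = PAGE_SIZE - len(guess)   # the newest character ends the page
            memory.write(start, guess)
            try:
                if connect(memory, start) == "Success":
                    return guess, tries
            except UnassignedPage:
                known = guess                # the character was right; extend the prefix
                break
        else:
            raise RuntimeError("no character matched")


password, tries = crack(make_connect("hunter2"))
print(password, tries)
```

The number of tries grows linearly with the length of the password, which is the whole point of the attack.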

An engineer is a man who can do for a dime what any fool can do for a dollar. (Anonymous)

There are times, however, when it's worth a lot of work to make a fast implementation of a clean and powerful interface. If the interface is used widely enough, the effort put into designing and tuning the implementation can pay off many times over. But do this only for an interface whose importance is already known from existing uses. And be sure that you know how to make it fast.

For example, the BitBlt or RasterOp interface for manipulating raster images [21, 37] was devised by Dan Ingalls after several years of experimenting with the Alto's high-resolution interactive display. Its implementation costs about as much microcode as the entire emulator for the Alto's standard instruction set, and required a lot of skill and experience to construct. But the performance is nearly as good as the special-purpose character-to-raster operations that preceded it, and its simplicity and generality have made a big difference in the ease of building display applications.

The Dorado memory system [8] contains a cache and a separate high-bandwidth path for fast input/output. It provides a cache read or write in every 64 ns cycle, together with 500 MBits/second of i/o bandwidth, virtual addressing from both cache and i/o, and no special cases for the microprogrammer to worry about. However, the implementation takes 850 MSI chips, and consumed several man-years of design time. This could only be justified by extensive prior experience (30 years) with this interface, and the knowledge that memory access is usually the limiting factor in performance. Even so, it seems in retrospect that the high i/o bandwidth is not worth the cost; it is used mainly for displays, and a dual-ported frame buffer would almost certainly be better.

Finally, lest this advice seem too easy to take,

Get it right. Neither abstraction nor simplicity is a substitute for getting it right. In fact, abstraction can be a source of severe difficulties, as this cautionary tale shows. Word processing and office information systems usually have provision for embedding named fields in the documents they handle. For example, a form letter might have address and salutation fields. Usually a document is represented as a sequence of characters, and a field is encoded by something like {name: contents}. Among other operations, there is a procedure FindNamedField that finds the field with a given name. One major commercial system for some time used a FindNamedField procedure that ran in time O(n^2), where n is the length of the document. This remarkable result was achieved by first writing a procedure FindIthField to find the ith field (which must take time O(n) if there is no auxiliary data structure), and then implementing FindNamedField[name] with the very natural program

    for i := 0 to NumberOfFields do
        FindIthField; if its name is name then exit
    end loop

Once the (unwisely chosen) abstraction FindIthField is available, only a lively awareness of its cost will avoid this disaster. Of course, this is not an argument against abstraction, but it is well to be aware of its dangers.
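The difference between the two approaches is easy to see in code. The sketch below assumes a document is a Python string with fields written as {name: contents}; the regular expression and the function names are illustrative, not taken from any real system. The slow version rescans the document from the start for every field, as in the tale; the fast version makes one pass.

```python
import re

# Assumed field encoding: {name: contents}
FIELD = re.compile(r"\{(\w+): ([^}]*)\}")


def find_ith_field(doc, i):
    """Scan from the start of the document on every call: O(n) each time."""
    for k, match in enumerate(FIELD.finditer(doc)):
        if k == i:
            return match.group(1), match.group(2)
    return None


def find_named_field_slow(doc, name):
    """The 'very natural program': one full scan per field, O(n^2) overall."""
    i = 0
    while True:
        field = find_ith_field(doc, i)
        if field is None:
            return None
        if field[0] == name:
            return field[1]
        i += 1


def find_named_field(doc, name):
    """A single pass over the document: O(n)."""
    for match in FIELD.finditer(doc):
        if match.group(1) == name:
            return match.group(2)
    return None


letter = "Dear {salutation: Dr. Smith}, we have your address as {address: 1 Main St}."
print(find_named_field(letter, "address"))
```

Both functions return the same answer; only the cost differs, and nothing in the interface of find_ith_field warns the caller about it.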

2.2 Corollaries

The rule about simplicity and generalization has many interesting corollaries.

Costly thy habit as thy purse can buy,
But not express'd in fancy; rich, not gaudy.

Make it fast, rather than general or powerful. If it's fast, the client can program the function it wants, and another client can program some other function. It is much better to have basic operations executed quickly than more powerful ones which are slower (of course, a fast, powerful operation is best, if you know how to get it). The trouble with slow, powerful operations is that the client who doesn't want the power pays more for the basic function. Usually it turns out that the powerful operation is not the right one.

Had I but time (as this fell sergeant, death,
Is strict in his arrest), O, I could tell you -
But let it be. (V ii 339)


For example, many studies [e.g., 23, 51, 52] have shown that programs spend most of their time doing very simple things: loads, stores, tests for equality, adding one. Machines like the 801 [41] or the RISC [39], which have instructions to do these simple operations quickly, run programs faster (for the same amount of hardware) than machines like the VAX, which have more general and powerful instructions that take longer in the simple cases. It is easy to lose a factor of two in the running time of a program, with the same amount of hardware in the implementation. Machines with still more grandiose ideas about what the client needs do even worse [18].

To find the places where time is being spent in a large system, it is necessary to have measurement tools that will pinpoint the time-consuming code. Few systems are well enough understood to be properly tuned without such tools; it is normal for 80% of the time to be spent in 20% of the code, but a priori analysis or intuition usually can't find the 20% with any certainty. The account of the performance tuning of Interlisp-D in [7] describes one set of useful tools, and gives many details of how the system was sped up by a factor of 10.
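As a present-day illustration of such a measurement tool (not one of the tools described in [7]), the sketch below uses Python's standard cProfile and pstats modules to find where a small, made-up workload spends its time. The functions cheap, expensive and workload are hypothetical.

```python
import cProfile
import io
import pstats


def cheap():
    return sum(range(100))


def expensive():
    return sum(i * i for i in range(200_000))


def workload():
    # Many calls to the cheap function, one call to the expensive one.
    for _ in range(50):
        cheap()
    return expensive()


profiler = cProfile.Profile()
profiler.enable()
workload()
profiler.disable()

# Report the five entries with the largest cumulative time.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The report attributes nearly all the time to expensive even though cheap is called far more often, which is the kind of fact that intuition tends to get wrong.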

Don't hide power. This slogan is closely related to the last one. When a low level of abstraction allows something to be done quickly, higher levels should not bury this power inside something more general. The purpose of abstractions is to conceal undesirable properties; desirable ones should not be hidden. Sometimes, of course, an abstraction is multiplexing a resource, and this necessarily has some cost. But it should be possible to deliver all or nearly all of it to a single client with only slight loss of performance.

For example, the Alto disk hardware [53] can transfer a full cylinder at disk speed. The basic file system [29] can transfer successive file pages to client memory at full disk speed, with time for the client to do some computing on each sector, so that with a few sectors of buffering the entire disk can be scanned at disk speed. This facility has been used to write a variety of applications, ranging from a scavenger which reconstructs a broken file system, to programs which search files for substrings that match a pattern. The stream level of the file system can read or write n bytes to or from client memory; any portions of the n bytes which occupy full disk sectors are transferred at full disk speed. Loaders, compilers, editors and many other programs depend for their performance on this ability to read large files quickly. At this level the client gives up the facility to see the pages as they arrive; this is the only price paid for the higher level of abstraction.

Use procedure arguments to provide flexibility in an interface. They can be restricted or encoded in various ways if necessary for protection or portability. This technique can greatly simplify an interface, eliminating a jumble of parameters whose function is to provide a small programming language. A simple example is an enumeration procedure that returns all the elements of a set satisfying some property. The cleanest interface allows the client to pass a filter procedure which tests for the property, rather than defining a special language of patterns or whatever.
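A minimal sketch of such an enumeration procedure follows. The name enumerate_matching and the file names are made up for illustration; the point is only that the interface takes one procedure argument instead of a pattern language.

```python
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def enumerate_matching(items: Iterable[T], wanted: Callable[[T], bool]) -> Iterator[T]:
    """Yield every element for which the client's filter procedure returns True."""
    for item in items:
        if wanted(item):
            yield item


files = ["notes.txt", "main.c", "util.c", "README"]

# The client expresses the property as ordinary code.
c_sources = list(enumerate_matching(files, lambda name: name.endswith(".c")))
print(c_sources)
```

Any property the client can compute is available, and the enumeration procedure itself needs no knowledge of suffixes, wildcards or dates.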

But this theme has many variations. A more interesting example is the Spy system monitoring facility in the 940 system at Berkeley [10]. This allows a more or less untrusted user program to plant patches in the code of the supervisor. A patch is coded in machine language, but the operation that installs it checks that it does no wild branches, contains no loops, is not too long, and stores only in a designated region of memory dedicated to collecting statistics. Using the Spy, the student of the system can fine-tune his measurements without any fear of breaking the system, or even perturbing its operation much.

Another unusual example that illustrates the power of this method is the FRETURN mechanism in the Cal time-sharing system for the CDC 6400 [30]. From any supervisor call C it is possible to make another one CF which executes exactly like C in the normal case, but sends control to a designated failure handler if C gives an error return. The CF operation can do more (e.g., it can extend files on a fast, limited-capacity storage device to larger files on a slower device), but it runs as fast as C in the (hopefully) normal case.
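The idea can be imitated in a modern language, although this is only an analogy and not how Cal implemented it. In the sketch below an exception stands in for the error return, and with_failure_handler, write_fast and spill_to_slow are hypothetical names; the capacity of the fast device is an arbitrary number.

```python
class ErrorReturn(Exception):
    """Stands in for the error return of a supervisor call."""


def with_failure_handler(call, handler):
    """Make CF from C: identical in the normal case, handler runs on an error return."""
    def call_f(*args):
        try:
            return call(*args)             # normal case: exactly what C does
        except ErrorReturn as err:
            return handler(err, *args)     # failure case: control goes to the handler
    return call_f


FAST_CAPACITY = 8                          # assumed size limit of the fast device
fast_store, slow_store = {}, {}


def write_fast(name, data):
    if len(data) > FAST_CAPACITY:
        raise ErrorReturn(f"{name} does not fit on the fast device")
    fast_store[name] = data
    return "fast"


def spill_to_slow(err, name, data):
    slow_store[name] = data                # extend the file onto the slower device
    return "slow"


write = with_failure_handler(write_fast, spill_to_slow)
print(write("small", b"1234"), write("large", b"x" * 20))
```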

It may be better to have a specialized language, however, if it is more amenable to static analysis for optimization. This is a major criterion in the design of database query languages, for example.

Leave it to the client. As long as it is cheap to pass control back and forth, an interface can combine simplicity, flexibility and high performance by solving only one problem and leaving the rest to the client. For example, many parsers confine themselves to doing the context-free recognition, and call client-supplied "semantic routines" to record the results of the parse. This has obvious advantages over always building a parse tree which the client must traverse to find out what happened.
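A toy recognizer shows the shape of such an interface. The sketch assumes a grammar of the form number ('+' number)*; parse_sum and the two semantic routines are made-up names. The parser only recognizes; what to do with each construct is left entirely to the client, which here chooses to evaluate the sum on a stack.

```python
def parse_sum(text, on_number, on_add):
    """Recognize number ('+' number)* and report each construct to the client."""
    pos = 0

    def number():
        nonlocal pos
        start = pos
        while pos < len(text) and text[pos].isdigit():
            pos += 1
        if start == pos:
            raise SyntaxError(f"expected a number at position {start}")
        on_number(text[start:pos])         # semantic routine supplied by the client

    number()
    while pos < len(text) and text[pos] == "+":
        pos += 1
        number()
        on_add()                           # semantic routine supplied by the client
    if pos != len(text):
        raise SyntaxError(f"unexpected character at position {pos}")


# One possible client: evaluate the expression without ever building a tree.
stack = []
parse_sum(
    "12+30+4",
    on_number=lambda digits: stack.append(int(digits)),
    on_add=lambda: stack.append(stack.pop() + stack.pop()),
)
print(stack)
```

Another client could pass routines that build a tree, count operators, or emit code, all without any change to the parser.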

The success of monitors [20, 25] as a synchronization device is partly due to the fact that the locking and signaling mechanisms do very little, leaving all the real work to the client programs in the monitor procedures. This simplifies the monitor implementation and keeps it fast; if the client needs buffer allocation, resource accounting or other frills, it provides these functions itself, or calls other library facilities, and pays for what it needs. The fact that monitors give no control over the scheduling of processes waiting on monitor locks or condition variables, often cited as a drawback, is actually an advantage, since it leaves the client free to provide the scheduling it needs (using a separate condition variable for each class of process), without having to pay for or fight with some built-in mechanism which is unlikely to do the right thing.

The Unix system [44] encourages the building of small programs which take one or more character streams as input, produce one or more streams as output, and do one operation. When this style is imitated properly, each program has a simple interface and does one thing well, leaving the client to combine a set of such programs with its own code and achieve precisely the effect desired.

The end-to-end slogan discussed in §3 is another corollary of keeping it simple.

2.3 Continuity

There is a constant tension between the desire to improve a design, and the need for stability or continuity.

Keep basic interfaces stable. Since an interface embodies assumptions which are shared by more than one part of a system, and sometimes by a great many parts, it is very desirable not to change the interface. When the system is programmed in a language without type-checking, it is nearly out of the question to change any public interface, because there is no way of tracking down its clients and checking for elementary incompatibilities, such as disagreements on the number of arguments, or confusion between pointers and integers. With a language like Mesa [15], however, which has complete type-checking and language support for interfaces, it becomes much easier to change interfaces without causing the system to collapse. But even if type-checking can usually detect that an assumption no longer holds, a programmer must still correct the assumption. When a system grows to more than 250k lines of code, the amount of change becomes intolerable; even when there is no doubt about what has to be done, it takes too long to do it. There is no choice but to break the system into smaller pieces related only by interfaces which are stable for years. Traditionally, only the interface defined by a programming language or operating system kernel is this stable.

Keep a place to stand, if you do have to change interfaces. Here are two rather different examples to illustrate this idea. One is the compatibility package, which implements an old interface on top of a new system. This allows programs that depend on the old interface to continue working. Many new operating systems (including Tenex [2] and Cal [50]) have kept old software usable by simulating the supervisor calls of an old system (TOPS-10 and SCOPE, respectively). Usually these simulators need only a small amount of effort compared to the cost of reimplementing the old software, and it is not hard to get acceptable performance. At a different level, the IBM 360/370 systems provided emulation of the instruction sets of older machines like the 1401 and 7090. Taken a little further, this leads to virtual machines, which simulate (several copies of) a machine on the machine itself [9].
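On a very small scale, a compatibility package looks like the sketch below. Both interfaces are invented for illustration: the "new system" offers positioned reads and writes of bytes, and the "old interface" offers appending text and reading a whole file. Old clients keep calling append and read_all and never see the new system.

```python
class NewFileSystem:
    """Hypothetical new interface: positioned reads and writes of bytes."""

    def __init__(self):
        self._files = {}

    def size(self, name):
        return len(self._files.get(name, b""))

    def write_at(self, name, offset, data):
        buf = bytearray(self._files.get(name, b""))
        buf[offset:offset + len(data)] = data
        self._files[name] = bytes(buf)

    def read_at(self, name, offset, length):
        return self._files.get(name, b"")[offset:offset + length]


class OldFileSystemCompat:
    """Hypothetical old interface (append text, read whole file) on top of the new one."""

    def __init__(self, new_fs):
        self._fs = new_fs

    def append(self, name, text):
        self._fs.write_at(name, self._fs.size(name), text.encode("ascii"))

    def read_all(self, name):
        return self._fs.read_at(name, 0, self._fs.size(name)).decode("ascii")


old = OldFileSystemCompat(NewFileSystem())
old.append("log", "boot ")
old.append("log", "ok")
contents = old.read_all("log")
print(contents)
```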

A rather different example is the world-swap debugger. This is a debugging system that works by writing the real memory of the target system (the one being debugged) onto a secondary storage device, and reading in the debugging system in its place. The debugger then provides its user with complete access to the target world, mapping each target memory address to the proper place on secondary storage. With some care, it is possible to swap the target back in and continue execution. This is somewhat clumsy, but allows very low levels of a system to be debugged conveniently, since the debugger does not depend on the correct function of anything in the target, except for the very simple world-swap mechanism. It is especially useful during bootstrapping. There are many variations. For instance, the debugger can run on a different machine, with a small tele-debugging nub in the target world which can interpret ReadWord, WriteWord, Stop and Go commands arriving from the debugger over a network. Or if the target is a process in a time-sharing system, the debugger can run in a different process.

2.4 Making implementations work

Perfection must be reached by degrees; she requires the slow hand of time. (Voltaire)

Plan to throw one away; you will anyhow [6]. If there is anything new about the function of a system, the first implementation will have to be redone completely to achieve a satisfactory (i.e., acceptably small, fast and maintainable) result. It costs a lot less if you plan to have a prototype. Unfortunately, sometimes two prototypes are needed, especially if there is a lot of innovation. If you are lucky, you can copy a lot from a previous system; thus, Tenex was based on the SDS 940 [2]. This can work even if the previous system was too grandiose; Unix took many ideas from Multics [44].

Even when an implementation is successful, it pays to revisit old decisions as the system evolves; in particular, optimizations for particular properties of the load or the environment (memory size, for example) often come to be far from optimal.

Give thy thoughts no tongue,
Nor any unproportion'd thought his act.

Keep secrets of the implementation. Secrets are assumptions about an implementation that client programs are not allowed to make (paraphrased from [5]). In other words, they are things that can change; the interface defines the things that cannot change (without simultaneous changes to both implementation and client). Obviously, it is easier to program and modify a system if its parts make fewer assumptions about each other. On the other hand, the system may not be easier to design: it's hard to design a good interface. And there is a tension with the desire not to hide power.

An efficient program is an exercise in logical brinkmanship. (E. Dijkstra)

There is another danger in keeping secrets. One way to improve performance is to increase the number of assumptions that one part of a system makes about another; the additional assumptions often allow less work to be done, sometimes a lot less. For instance, if a set of size n is known to be sorted, it is possible to do a membership test in time log n rather than n. This technique is very important in the design of algorithms and the tuning of small modules. In a large system the ability to improve each part separately is usually more important. But striking the right balance is an art.
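The membership test makes the trade concrete. In this sketch, which uses Python's standard bisect module, member_sorted is fast only because it assumes something the interface of a plain set would keep secret: that the elements are stored in sorted order. The function names are illustrative.

```python
from bisect import bisect_left


def member_linear(items, x):
    """No assumption about order: examine up to n elements."""
    return any(item == x for item in items)


def member_sorted(sorted_items, x):
    """Assumes sorted_items is in ascending order: about log n comparisons."""
    i = bisect_left(sorted_items, x)
    return i < len(sorted_items) and sorted_items[i] == x


evens = list(range(0, 1000, 2))            # sorted by construction
print(member_linear(evens, 500), member_sorted(evens, 500), member_sorted(evens, 501))
```

If the representation later changes so that the elements are no longer sorted, member_sorted silently gives wrong answers; that is the price of the extra assumption.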

O throw away the worser part of it,
And live the purer with the other half. (III iv 157)

Divide and conquer. This is a well known method for solving a hard problem: reduce it to several easier ones. The resulting program is usually recursive. When resources are limited the method takes a slightly different form: bite off as much as will fit, leaving the rest for another iteration.

A good example of this is in the Alto's Scavenger program, which scans the disk and rebuilds the index and directory structures of the file system from the file identifier and page number recorded on each disk sector [29]. A recent rewrite of this program has a phase in which it builds a data structure in main storage, with one entry for each contiguous run of disk pages that is also a contiguous set of pages in a file. Normally, files are allocated more or less contiguously, and this structure is not too large. If the disk is badly fragmented, however, the structure will not fit in storage. When this happens, the Scavenger discards the information for half the files and continues with the other half. After the index for these files is rebuilt, the process is repeated for the other files. If necessary the work is further subdivided; the method fails only if a single file's index won't fit.
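The halving strategy can be sketched as a short recursive procedure. This is not the Scavenger's code: the function name, the run_count table (entries needed per file) and the capacity are assumptions made for illustration, and the sketch does not model the disk scan that each pass would require.

```python
def rebuild_in_passes(files, run_count, capacity, rebuild_index):
    """Rebuild indexes for `files`, halving the set whenever the table won't fit.

    run_count[f] is the number of table entries file f needs (one per contiguous run);
    capacity is the number of entries that fit in main storage.
    """
    needed = sum(run_count[f] for f in files)
    if needed <= capacity:
        rebuild_index(files)               # everything fits: one pass does it
        return
    if len(files) == 1:
        raise MemoryError(f"index for {files[0]} alone needs {needed} entries")
    mid = len(files) // 2
    rebuild_in_passes(files[:mid], run_count, capacity, rebuild_index)
    rebuild_in_passes(files[mid:], run_count, capacity, rebuild_index)


runs = {"a": 3, "b": 5, "c": 2, "d": 6}    # a badly fragmented (made-up) disk
passes = []
rebuild_in_passes(list(runs), runs, capacity=8, rebuild_index=passes.append)
print(passes)
```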

Another interesting example arises in the Dover raster printer [26, 53], which scan-converts lists of characters and rectangles into a large m × n array of bits, in which ones correspond to spots of ink on the paper and zeros to spots without ink. In this printer m=3300 and n=4200, so the array contains fourteen million bits and is too large to store in memory. The printer consumes bits faster than the available disks can deliver them, so the array cannot be stored on disk. Instead, the printer electronics contains two band buffers, each of which can store 16 × 4200 bits. The entire array is divided into 16 × 4200 bit slices called bands, and the characters and rectangles are sorted into buckets, one for each band. A bucket receives the objects which start in the corresponding band. Scan conversion proceeds by filling one band buffer from its bucket, and then playing it out to the printer and zeroing it while the other buffer is filled from the next bucket. Objects which spill over the edge of one band are put on a left-over list which is merged with the contents of the next bucket. This left-over scheme is the trick which allows the problem to be subdivided.
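The bucket and left-over scheme can be tried on a toy page. The sketch below is a simplification under stated assumptions: the page is tiny, objects are rectangles given as (row, col, height, width), and a single band buffer is used where the Dover alternates between two. It checks that rendering band by band produces the same bits as rendering into one large array.

```python
BAND_ROWS = 4      # the Dover used 16 scan lines per band; 4 keeps the example small
WIDTH = 8
N_BANDS = 3


def render_full(rects):
    """Reference: scan-convert into one array holding the whole page."""
    page = [[0] * WIDTH for _ in range(BAND_ROWS * N_BANDS)]
    for row, col, height, width in rects:
        for r in range(row, row + height):
            for c in range(col, col + width):
                page[r][c] = 1
    return page


def render_banded(rects):
    """Scan-convert one band at a time, carrying spill-over on a left-over list."""
    buckets = [[] for _ in range(N_BANDS)]
    for rect in rects:
        buckets[rect[0] // BAND_ROWS].append(rect)   # bucket of the band where it starts

    page, leftover = [], []
    for band in range(N_BANDS):
        top = band * BAND_ROWS
        buffer = [[0] * WIDTH for _ in range(BAND_ROWS)]
        work, leftover = leftover + buckets[band], []
        for row, col, height, width in work:
            for r in range(max(row, top), min(row + height, top + BAND_ROWS)):
                for c in range(col, col + width):
                    buffer[r - top][c] = 1
            if row + height > top + BAND_ROWS:       # spills into the next band
                leftover.append((row, col, height, width))
        page.extend(buffer)                          # "play out" the band
    return page


rects = [(1, 0, 2, 3), (3, 2, 7, 4), (9, 6, 2, 2)]
print(render_banded(rects) == render_full(rects))
```

The second rectangle starts in the first band and ends in the third, so it travels on the left-over list twice.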

Sometimes it is convenient to artificially limit the resource, by quantizing it in fixed-size units; this simplifies bookkeeping and prevents one kind of fragmentation. The classical example of this is the use of fixed-size pages for virtual memory, rather than variable-size segments. In spite of the apparent advantages of keeping logically related information together, and transferring it between main storage and backing storage as a unit, paging systems have worked out better. The reasons for this are complex and have not been systematically studied.

And makes us rather bear those ills we have
Than fly to others that we know not of. (III i 81)

Use a good idea again, instead of generalizing it. A specialized implementation of the idea may be much more effective than a general one. The discussion of caching below gives several examples of applying this general principle. Another interesting example is the notion of replication of data for reliability. A small amount of data can easily be replicated locally by writing it on two or more disk drives [28]. When the amount of data is large, or it must be recorded on separate machines, it is not easy to ensure that the copies are always the same. Gifford [16] shows how the problem can be solved by building replicated data on top of a transactional storage system, which allows an arbitrarily large update to be done as an atomic operation (see §4). The transactional storage itself depends on the simple local replication scheme to store its log reliably. There is no circularity here, since only the idea is used twice, not any code. Yet a third possible use of replication in this context is to store the commit record on several machines [27].

The user interface for the Star office system [47] has a small set of operations (type text, move, copy, delete, show properties) which are applied to nearly all the objects in the system: text, graphics, file folders and file drawers, record files, printers, in and out baskets, etc. The exact meaning of an operation varies with the class of object, within the limits of what the user is likely to consider natural. For instance, copying a document to an outbasket causes it to be sent as a message; moving the endpoint of a line causes the line to follow like a rubber band. Certainly the implementations are quite different in many cases. But the generic operations do not simply make the system easier to use; they represent a view of what operations are possible and how the implementation of each class of object should be organized.

2.5 Handling all the cases

Diseases desperate grown,
By desperate appliance are reliev'd,
Or not at all. (III vii 9-11)

This project
Should have a back or second, that might hold,
If this should blast in proof (IV iii 149-153)

Handle normal and worst case separately as a rule, because the requirements for the two are quite different:

the normal case must be fast;

the worst case must make some progress.

In most systems it is all right to schedule unfairly and give no service to some process, or to deadlock the entire system, as long as this event is detected automatically, and doesn't happen too often. The usual recovery is by crashing some processes, or even the entire system. At first this sounds terrible, but usually one crash a week is a cheap price to pay for 20% better performance. Of course the system must have decent error recovery (an application of the end-to-end principle; see §4), but that is required in any case, since there are so many other possible causes of a crash.

Caches and hints (§3) are examples of special treatment for the normal case, but there are many others.

The Interlisp-D and Cedar programming systems use a reference-counting garbage collector [11] which has an important optimization of this kind. Pointers in the local frames or activation records of procedures are not counted; instead, the frames are scanned whenever garbage is collected. This saves a lot of reference-counting, since most pointer assignments are to local variables; also, since there are not many frames, the time to scan them is small, and the collector is nearly real-time. Cedar goes farther and does not keep track of which local variables contain pointers; instead, it assumes that they all do, so an integer which happens to contain the address of an object which is no longer referenced will prevent that object from being freed. Measurements show that less than 1% of the storage is incorrectly retained [45].

Reference-counting makes it easy to have an incremental collector, so that computation need not stop during collection. However, it cannot reclaim circular structures which are no longer reachable. Cedar therefore has a conventional trace-and-sweep collector as well. This is not suitable for real-time applications, since it stops the entire system for many seconds, but in interactive applications it can be used during coffee breaks to reclaim accumulated circular structures.

Another problem with reference-counting is that the count may overflow the space provided for it. This happens very seldom, since only a few objects have more than two or three references. It is simple to make the maximum value sticky. Unfortunately, in some applications the root of a large structure is referenced from many places; if the root becomes sticky, a lot of storage will unexpectedly become permanent. An attractive solution is to have an overflow count table, which is a hash table keyed on the address of an object. When the count reaches its limit, it is reduced by half, the overflow count is increased by one, and an overflow flag is set in the object. When the count reaches zero, if the overflow flag is set the process is reversed. Thus even with as few as four bits, there is room to count up to seven, and the overflow table is touched only when the count swings by more than four, which happens very seldom.
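
The overflow count table can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Cedar implementation: the names Obj, incref, decref and true_count are hypothetical, the in-object field is taken to be a three-bit count (0 to 7) plus one flag bit, and Python's id() stands in for the address of an object.

```python
HALF = 4                 # amount moved to or from the overflow table at a time
LIMIT = 2 * HALF         # the 3-bit count field holds 0 .. LIMIT - 1

overflow_table = {}      # address of object -> number of HALF-sized units held here

class Obj:
    def __init__(self):
        self.count = 0          # small in-object reference count
        self.overflow = False   # set while the table has an entry for this object

def incref(obj):
    if obj.count + 1 == LIMIT:                  # count would reach its limit
        obj.count = LIMIT - HALF                # reduce it by half
        key = id(obj)
        overflow_table[key] = overflow_table.get(key, 0) + 1
        obj.overflow = True
    else:
        obj.count += 1

def decref(obj):
    """Drop one reference; return True if the object is now unreferenced."""
    obj.count -= 1
    if obj.count == 0 and obj.overflow:         # reverse the process
        key = id(obj)
        overflow_table[key] -= 1
        obj.count = HALF
        if overflow_table[key] == 0:
            del overflow_table[key]
            obj.overflow = False
    return obj.count == 0

def true_count(obj):
    """Total references: the small count plus what the table holds."""
    return obj.count + HALF * overflow_table.get(id(obj), 0)
```

After an overflow the small count sits in the middle of its range, so the hash table is consulted again only after the count has moved by HALF in one direction.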

There are many cases when resources are dynamically allocated and freed (e.g., real memory in a paging system), and sometimes additional resources are needed temporarily to free an item (some table might have to be swapped in to find out where to write out a page). Normally there is a cushion (clean pages which can be freed with no work), but in the worst case the cushion may disappear (all pages are dirty). The trick here is to keep a little something in reserve under a mattress, bringing it out only in a crisis. It is necessary to bound the resources needed to free one item; this determines the size of the reserve under the mattress, which must be regarded as a fixed cost of the resource multiplexing. When the crisis arrives, only one item should be freed at a time, so that the entire reserve is devoted to that job; this may slow things down a lot, but it ensures that progress will be made.

Sometimes radically different strategies are appropriate in the normal and worst cases. The Bravo editor [24] uses a piece table to represent the document being edited. This is an array of pieces: pointers to strings of characters stored in a file; each piece contains the file address of the first character in the string, and its length. The strings are never modified during normal editing. Instead, when some characters are deleted, for example, the piece containing the deleted characters is split into two pieces, one pointing to the first undeleted string and the other to the second. When characters are inserted from the keyboard, they are appended to the file, and the piece containing the insertion point is split into three pieces: one for the preceding characters, a second for the inserted characters, and a third for the following characters. After hours of editing there are hundreds of pieces and things start to bog down. It is then time for a cleanup, which writes a new file containing all the characters of the document in order. Now the piece table can be replaced by a single piece pointing to the new file, and editing can continue. Cleanup is a specialized kind of garbage collection.
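
A piece table is small enough to sketch directly. The Python below is a minimal illustration, not Bravo's code: the class and method names are hypothetical, and a Python string stands in for the append-only file of characters.

```python
class PieceTable:
    def __init__(self, text):
        self.file = text                                   # append-only store of characters
        self.pieces = [(0, len(text))] if text else []     # (file address, length)

    def _split(self, pos):
        """Make a piece boundary at document position pos; return the index after it."""
        offset = 0
        for i, (addr, length) in enumerate(self.pieces):
            if pos == offset:
                return i
            if pos < offset + length:
                cut = pos - offset
                self.pieces[i:i + 1] = [(addr, cut), (addr + cut, length - cut)]
                return i + 1
            offset += length
        if pos == offset:
            return len(self.pieces)
        raise IndexError(pos)

    def insert(self, pos, s):
        if not s:
            return
        i = self._split(pos)
        self.pieces.insert(i, (len(self.file), len(s)))    # new piece for the inserted text
        self.file += s                                     # characters are only appended

    def delete(self, start, end):
        i = self._split(start)
        j = self._split(end)
        del self.pieces[i:j]                               # stored strings are never modified

    def text(self):
        return "".join(self.file[a:a + n] for a, n in self.pieces)

    def cleanup(self):
        """Worst-case strategy: rewrite the file in order and start with one piece."""
        self.file = self.text()
        self.pieces = [(0, len(self.file))] if self.file else []
```

Normal editing touches only the small array of pieces; cleanup is the slow path that restores the table to a single piece.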

3. Speed

This section describes hints for making systems faster, forgoing any further discussion of why this is important.

Neither a borrower, nor a lender be;
For loan oft loses both itself and friend,
And borrowing dulleth edge of husbandry.

Split resources in a fixed way if in doubt, rather than sharing them. It is usually faster to allocate dedicated resources, it is often faster to access them, and the behavior of the allocator is more predictable. The obvious disadvantage is that more total resources are needed, ignoring multiplexing overheads, than if all come from a common pool. In many cases, however, the cost of the extra resources is small, or the overhead is larger than the fragmentation, or both.

For example, it is always faster to access information in the registers of a processor than to get it from memory, even if the machine has a high-performance cache. Registers have gotten a bad name because it can be tricky to allocate them intelligently, and because saving and restoring them across procedure calls may negate their speed advantages. When programs are written in the approved modern style, however, with lots of small procedures, 16 registers are nearly always enough for all the local variables and temporaries, so that there are usually enough registers and allocation is not a problem. And with n sets of registers arranged in a stack, saving is needed only when there are n successive calls without a return [14, 39].

Input/output channels, floating-point co-processors and similar specialized computing devices are other applications of this principle. When extra hardware is expensive these services are provided by multiplexing a single processor, but as it becomes cheap, static allocation of computing power for various purposes becomes worthwhile.

The Interlisp virtual memory system mentioned earlier [7] needs to keep track of the disk address corresponding to each virtual address. This information could itself be held in the virtual memory (as it is in several systems, including Pilot [42]), but the need to avoid circularity makes this rather complicated. Instead, real memory is dedicated to this purpose. Unless the disk is ridiculously fragmented, the space thus consumed is less than the space for the code to prevent circularity.

Use static analysis if you can; this is another way of stating the last slogan. The result of static analysis is known properties of the program which can usually be used to improve its performance. The hooker is "if you can"; when a good static analysis is not possible, don't delude yourself with a bad one, but fall back on a dynamic scheme.

The remarks about registers above depend on the fact that the compiler can decide how to allocate them, simply by putting the local variables and temporaries there. Most machines lack multiple sets of registers or lack a way of stacking them efficiently. Efficient allocation is then much more difficult, requiring an elaborate inter-procedural analysis which may not succeed, and in any case must be redone each time the program changes. So a little bit of dynamic analysis (stacking the registers) goes a long way. Of course the static analysis can still pay off in a large procedure if the compiler is clever.

A program can read data much faster when the data is read sequentially. This makes it easy to predict what data will be needed next and read it ahead into a buffer. Often the data can be allocated sequentially on a disk, which allows it to be transferred at least an order of magnitude faster. These performance gains depend on the fact that the programmer has arranged the data so that it is accessed according to some predictable pattern, i.e. so that static analysis is possible. Many attempts have been made to analyse programs after the fact and optimize the disk transfers, but as far as I know this has never worked. The dynamic analysis done by demand paging is always at least as good.

Some kinds of static analysis exploit the fact that some invariant is maintained. A system that depends on such facts may be less robust in the face of hardware failures or bugs in software which falsify the invariant.

Dynamic translation from a convenient (compact, easily modified or easily displayed) representation to one which can be quickly interpreted is an important variation on the old idea of compiling. Translating a bit at a time is the idea behind separate compilation, which goes back at least to Fortran II. Incremental compilers do it automatically when a statement, procedure or whatever is changed. Mitchell investigated smooth motion on a continuum between the convenient and the fast representation [34]. A simpler version of his scheme is to always do the translation on demand and cache the result; then only one interpreter is required, and no decisions are needed except for cache replacement.

For example, an experimental Smalltalk implementation [12] uses the bytecodes produced by the standard Smalltalk compiler as the convenient (in this case, compact) representation, and translates a single procedure from bytecodes into machine language when it is invoked. It keeps a cache with room for a few thousand instructions of translated code. For the scheme to pay off, the cache must be large enough that on the average a procedure is executed at least n times, where n is the ratio of translation time to execution time for the untranslated code.

A rather different example is provided by the C-machine stack cache [14]. In this device, instructions are fetched into an instruction cache; as they are loaded, any operand address which is relative to the local frame pointer FP is converted into an absolute address, using the current value of FP (which remains constant during execution of the procedure). In addition, if the resulting address is in the range of addresses currently in the stack data cache, the operand is changed to register mode; later execution of the instruction will then access the register directly in the data cache. The FP value is concatenated with the instruction address to form the key of the translated instruction in the cache, so that multiple activations of the same procedure will still work.

If thou did'st ever hold me in thy heart. (V ii 349)

Cache answers to expensive computations, rather than doing them over. By storing the triple [f, x, f(x)] in an associative store with f and x as keys, we can retrieve f(x) with a lookup. This wins if f(x) is needed again before it gets replaced in the cache, which presumably has limited capacity. How much it wins depends on how expensive it is to compute f(x). A serious problem is that when f is not functional (can give different results with the same arguments), we need a way to invalidate or update a cache entry if the value of f(x) changes. Updating depends on an equation of the form f(x + Δ) = g(x, Δ, f(x)) in which g is much cheaper to compute than f. For example, x might be an array of 1000 numbers, f the sum of the array elements, and Δ a new value for one of them, that is, a pair [i, v]. Then g(x, [i, v], sum) is sum - x[i] + v.
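
The array-sum example can be written out as a short Python sketch. The class name CachedSum and its methods are hypothetical; the change Δ is assumed to be a pair (i, v) that replaces element i with the value v.

```python
class CachedSum:
    """Caches f(x) = sum(x) and updates it with the cheap function g."""

    def __init__(self, values):
        self.x = list(values)
        self.total = sum(self.x)          # f(x): one pass over the whole array

    def update(self, i, v):
        # g(x, (i, v), f(x)) = f(x) - x[i] + v: constant time, no pass over the array
        self.total += v - self.x[i]
        self.x[i] = v
```

Recomputing f after each change costs time proportional to the array length; updating the cached value costs the same small amount however long the array is.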

If a cache is too small to hold all the "active" values, it will thrash. If recomputing f is expensive, performance will suffer badly. Thus it is wise to choose the cache size adaptively, if possible, increasing it when the hit rate declines, and reducing it when many entries go unused for a long time.

The classic example is a hardware cache which speeds up access to main storage; its entries are triples [Fetch, address, contents of address]. The Fetch operation is certainly not functional: Fetch(x) gives a different answer after Store(x) has been done. Hence the cache must be updated or invalidated after a store. Virtual memory systems do exactly the same thing: main storage plays the role of the cache, disk plays the role of main storage, and the unit of transfer is the page, segment or whatever. But nearly every non-trivial system has more specialized applications of caching.

This is especially true for interactive or real-time systems, in which the basic problem is to incrementally update a complex state in response to frequent small changes. Doing this in an ad-hoc way is extremely error-prone. The best organizing principle is to recompute the entire state after each change, but cache all the expensive results of this computation. A change must invalidate at least the cache entries which it renders invalid; if these are too hard to identify precisely, it may invalidate more entries, at the price of more computing to reestablish them. The secret of success is to organize the cache so that small changes only invalidate a few entries.

For example, the Bravo editor [24] has a function DisplayLine[document, characterNumber] which returns the bitmap for the line of text in the displayed document with document[characterNumber] as its first character. It also returns lastCharDisplayed and lastCharUsed, the numbers of the last character displayed, and the last character examined in computing the bitmap (these are usually not the same, since it is necessary to look past the end of the line in order to choose the line break). This function computes line breaks, does justification, uses font tables to map characters into their raster pictures, etc. There is a cache with an entry for each line currently displayed on the screen, and sometimes a few lines just above or below. An edit which changes characters i through j invalidates any cache entry for which [characterNumber .. lastCharUsed] intersects [i .. j]. The display is recomputed by

    c := firstChar;
    loop
        [bitMap, lastC, ] := DisplayLine[document, c]; Paint[bitMap];
        c := lastC + 1
    endloop

The call of DisplayLine is short-circuited by using the cache entry for [document, c] if it exists. At the end, any cache entry which has not been used is discarded; these entries are not invalid, but they are no longer interesting, because the line breaks have changed so that a line no longer begins at these points.
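
The cache discipline just described can be sketched in Python. Everything here is hypothetical and much simpler than Bravo: toy_layout stands in for DisplayLine and breaks lines at a fixed width, a string slice stands in for the bitmap, and edits are assumed to replace characters in place so that character numbers do not shift.

```python
def toy_layout(doc, c, width=10):
    """Stand-in for DisplayLine: returns (bitmap, last_displayed, last_used)."""
    last_displayed = min(c + width, len(doc)) - 1
    last_used = min(last_displayed + 1, len(doc) - 1)   # looks one character past the line
    return doc[c:last_displayed + 1], last_displayed, last_used

class LineCache:
    def __init__(self, layout):
        self.layout = layout
        self.entries = {}       # first character number -> (bitmap, last_displayed, last_used)

    def display_line(self, doc, c):
        if c not in self.entries:                 # miss: do the expensive computation
            self.entries[c] = self.layout(doc, c)
        return self.entries[c]

    def invalidate(self, i, j):
        """Drop entries whose examined range [c .. last_used] intersects [i .. j]."""
        self.entries = {c: e for c, e in self.entries.items()
                        if e[2] < i or c > j}

def redisplay(cache, doc, first_char=0):
    lines, used, c = [], set(), first_char
    while c < len(doc):
        bitmap, last_c, _ = cache.display_line(doc, c)
        lines.append(bitmap)
        used.add(c)
        c = last_c + 1
    # Entries not used this time are no longer interesting; discard them.
    cache.entries = {k: e for k, e in cache.entries.items() if k in used}
    return lines
```

The redisplay loop always recomputes the whole screen in principle; the cache is what makes an edit to one line cost about one call of the layout function.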

The same idea can be applied in a very different setting. Bravo allows a document to be structured into paragraphs, each with specified left and right margins, inter-line leading, etc. In ordinary page layout, all the information about the paragraph that is needed to do the layout can be represented very compactly:

the number of lines;

the height of each line (normally all lines are the same height);

any keep properties;

the pre and post leading.

In the usual case this can be encoded in three or four bytes. A 30 page chapter has perhaps 300 paragraphs, so about 1k bytes are required for all this data. This is less information than is required to specify the characters on a page. The layout computation is comparable to the line layout computation for a page. Therefore it should be possible to do the pagination for this chapter in less time than is required to render one page. Layout can be done independently for each chapter.

What makes this work is a cache of [paragraph, ParagraphShape(paragraph)] entries. If the paragraph is edited, the cache entry is invalid and must be recomputed. This can be done at the time of the edit (reasonable if the paragraph is on the screen, as is usually the case, but not so good for a global substitute), in background, or only when repagination is requested.

For the apparel oft proclaims the man.

Use hints to speed up normal execution. A hint, like a cache entry, is the saved result of some computation. It is different in two ways: it may be wrong, and it is not necessarily reached by an associative lookup. Because a hint may be wrong, there must be a way to check its correctness before taking any unrecoverable action. It is checked against the truth, information which must be correct, but which can be optimized for this purpose and need not be adequate for efficient execution. Like a cache entry, the purpose of a hint is to make the system run faster. Usually this means that it must be correct nearly all the time.

For example, in the Alto [29] and Pilot [42] operating systems, each file has a unique identifier, and each disk page has a label field whose contents can be checked before reading or writing the data, without slowing down the data transfer. The label contains the identifier of the file which contains the page, and the number of that page in the file. Page zero of each file is called the leader page and contains, among other things, the directory in which the file resides and its string name in that directory. This is the truth on which the file systems are based, and they take great pains to keep it correct. With only this information, however, there is no way to find the identifier of a file from its name in a directory, or to find the disk address of page i, except to search the entire disk, a method which works but is unacceptably slow.

Therefore, each system maintains hints to speed up these operations. For each directory there is a file which contains triples [string name, file identifier, address of first page]. For each file there is a data structure which maps a page number into the disk address of the page. In the Alto system, this structure is a link in each label to the next label; this makes it fast to get from page n to page n + 1. In Pilot, it is a B-tree which implements the map directly, taking advantage of the common case in which consecutive file pages occupy consecutive disk pages. Information obtained from any of these hints is checked when it is used, by checking the label or reading the file name from the leader page. If it proves to be wrong, all of it can be reconstructed by scanning the disk. Similarly, the bit table which keeps track of free disk pages is a hint; the truth is represented by a special value in the label of a free page, which is checked when the page is allocated before the label is overwritten with a file identifier and page number.
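
The pattern of checking a hint against the truth can be sketched as follows. This is a toy model, not the Alto or Pilot code: the names Disk, scan_for and read_page are hypothetical, a dictionary stands in for the disk, and the label on each page is the pair (file identifier, page number).

```python
class Disk:
    """Toy disk: every page carries a label, which is the truth."""

    def __init__(self, pages):
        self.pages = pages      # disk address -> ((file_id, page_no), data)
        self.scans = 0          # how often the slow path was taken

    def scan_for(self, file_id, page_no):
        self.scans += 1         # slow but always correct: search the entire disk
        for addr, (label, _) in self.pages.items():
            if label == (file_id, page_no):
                return addr
        raise FileNotFoundError((file_id, page_no))

def read_page(disk, hints, file_id, page_no):
    """Read a page through the hint table, checking the label before trusting it."""
    addr = hints.get((file_id, page_no))
    entry = disk.pages.get(addr)
    if entry is None or entry[0] != (file_id, page_no):   # hint missing or wrong
        addr = disk.scan_for(file_id, page_no)
        hints[(file_id, page_no)] = addr                  # reconstruct the hint
        entry = disk.pages[addr]
    return entry[1]
```

A wrong hint costs one scan and is then repaired; it never causes the wrong page to be returned, because the label is checked on every access.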

Another example of hints is the store and forward routing first used in the Arpanet [32]. Each node in the network keeps a table which gives the best route to each other node. This table is updated by periodic broadcasts in which each node announces to all the other nodes its opinion about the quality of its links to its nearest neighbors. These broadcast messages are not synchronized, and are not guaranteed to be delivered. Thus there is no guarantee that the nodes have a consistent view at any instant. The truth in this case is that each node knows its own identity, and hence knows when it receives a packet destined for itself. For the rest, the routing does the best it can; when things aren't changing too fast it is nearly optimal.

A more curious example is the Ethernet [33], in which lack of a carrier on the cable is used as a hint that a packet can be sent. If two senders take the hint simultaneously, there is a collision which both can detect, and both stop, delay for a randomly chosen interval, and then try again. If n successive collisions occur, this is taken as a hint that the number of senders is 2^n, and each sender lengthens the mean of his random delay interval accordingly, to ensure that the net does not become overloaded.
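
The way the delay grows with the number of collisions can be sketched in Python. The function names and the slot time are assumptions made for illustration; the sketch shows only the choice of delay, not carrier sensing or collision detection.

```python
import random

SLOT = 51.2e-6    # assumed slot time in seconds

def backoff_delay(collisions, rng=random):
    """Random delay before the next attempt after `collisions` successive collisions."""
    senders = 2 ** collisions                 # the hint: about 2^n stations are contending
    return rng.randrange(senders) * SLOT      # uniform over 0 .. 2^n - 1 slots

def mean_delay(collisions):
    """Mean of the delay above; it roughly doubles with each further collision."""
    return (2 ** collisions - 1) / 2 * SLOT
```

If the hint overestimates the number of senders, the cost is some idle time on the cable; if it underestimates, another collision follows and the estimate doubles.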

A very different application of hints is used to speed up execution of Smalltalk programs [12]. In Smalltalk the code executed when a procedure is called is determined dynamically, based on the type of the first argument. Thus Print[x, format] invokes the Print procedure which is part of the type of x. Since Smalltalk has no declarations, the type of x is not known statically. Instead, each object contains a pointer to a table which contains a set of pairs [procedure name, address of code], and when this call is executed, Print is looked up in this table for x (I have normalized the unusual Smalltalk terminology and syntax, and oversimplified a bit). This is expensive. It turns out that usually the type of x is the same as it was last time. So the code for the call Print[x, format] can be arranged like this:

    push format; push x; push lastType; call lastProc

and each Print procedure begins with

    lt := Pop[]; x := Pop[]; t := type of x;
    if t ≠ lt then LookupAndCall[x, "Print"] else the body of the procedure.

Here lastType and lastProc are immediate values stored in the code. The idea is that LookupAndCall should store the type of x and the code address it finds back into the lastType and lastProc fields respectively. If the type is the same next time, the procedure will be called directly. Measurements show that this cache hits about 96% of the time. In a machine with an instruction fetch unit, this scheme has the nice property that the transfer to lastProc can proceed at full speed; thus, when the hint is correct, the call is as fast as an ordinary subroutine call. The check of t ≠ lt can be arranged so that it normally does not branch.
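
The per-call-site hint can be imitated in Python. This is a sketch under assumptions, not the Smalltalk implementation: CallSite is a hypothetical name, getattr on the type plays the part of the expensive table lookup, and the type check is made by the caller rather than at the start of the called procedure.

```python
class CallSite:
    """One call of `selector`; last_type and last_proc are the hint kept at the site."""

    def __init__(self, selector):
        self.selector = selector
        self.last_type = None
        self.last_proc = None
        self.lookups = 0        # number of times the slow lookup was needed

    def call(self, x, *args):
        t = type(x)
        if t is not self.last_type:                       # hint is wrong: full lookup
            self.lookups += 1
            self.last_proc = getattr(t, self.selector)
            self.last_type = t                            # store the new hint
        return self.last_proc(x, *args)                   # hint is right: direct call
```

The check against the actual type of x is what makes the stored procedure a hint rather than an assumption; a stale value costs one lookup and nothing more.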

The same idea in a different guise is used in the S-1 [48], which has an extra bit for each instruction in its instruction cache. The bit is cleared when the instruction is loaded, set when the instruction causes a branch to be taken, and used to govern the path that the instruction fetch unit follows. If the prediction turns out to be wrong, the bit is changed and the other path is followed.
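
The behavior of such a one-bit hint is easy to simulate. The Python function below is a hypothetical model of a single branch instruction, not a description of the S-1 hardware; it counts how often the bit gives the wrong prediction over a sequence of outcomes.

```python
def mispredictions(outcomes):
    """Count wrong guesses of a one-bit predictor over a list of branch outcomes."""
    bit = False                 # cleared when the instruction is loaded: predict not taken
    wrong = 0
    for taken in outcomes:
        if taken != bit:        # prediction was wrong: follow the other path
            wrong += 1
            bit = taken         # and change the bit
    return wrong
```

For a loop-closing branch that is taken nine times and then falls through, the bit is wrong only on the first and last iterations of each pass through the loop.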

When in doubt, use brute force. Especially as the cost of hardware declines, a straightforward, easily analyzed solution which requires a lot of special-purpose computing cycles is better than a complex, poorly characterized one which may work well if certain assumptions are satisfied. For example, Ken Thompson's chess machine, Belle, relies mainly on special-purpose hardware to generate moves and evaluate positions, rather than on sophisticated chess strategies. Belle has won the world computer chess championships several times. Another instructive example is the success of personal computers over time-sharing systems; the latter include much more cleverness and have many fewer wasted cycles, but the former are increasingly recognized as the most cost-effective way of providing interactive computing.

Even an asymptotically faster algorithm is not necessarily better. It is known how to multiply two n × n matrices faster than O(n^2.5), but the constant factor is prohibitive. On a more mundane note, the 7040 Watfor compiler used linear search to look up symbols; student programs have so few symbols that the setup time for a better algorithm couldn't be recovered.

Compute in background when possible. In an interactive or real-time system, it is good to do as little work as possible before responding to a request. The reason is twofold: first, a rapid response is better for the users, and second, the load usually varies a great deal, so that there is likely to be idle processor time later, which is wasted unless there is background work to do. Many kinds of work can be deferred to background. The Interlisp and Cedar garbage collectors [7, 11] do nearly all their work this way. Many paging systems write out dirty pages and prepare candidates for replacement in background. Electronic mail can be delivered and retrieved by background processes, since delivery within an hour or two is usually acceptable. Many banking systems consolidate the data on accounts at night and have it ready the next morning. These are four examples with successively less need for synchronization between foreground and background tasks. As the amount of synchronization increases, more care is needed to avoid subtle errors; an extreme example is the on-the-fly garbage collection algorithm given in [13]. But in most cases a simple producer-consumer relationship between two otherwise independent processes is possible.
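
A simple producer-consumer arrangement of this kind can be sketched with Python's standard queue and threading modules. The function start_background and the names it returns are hypothetical; a single worker thread is assumed, so results appear in the order the work was submitted.

```python
import queue
import threading

def start_background(work):
    """Start one background worker; return (submit, finish)."""
    q = queue.Queue()
    results = []

    def worker():
        while True:
            item = q.get()          # blocks until the foreground produces something
            if item is None:        # sentinel: no more work
                break
            results.append(work(item))

    t = threading.Thread(target=worker, daemon=True)
    t.start()

    def finish():
        q.put(None)                 # tell the worker to stop after the queued items
        t.join()
        return results

    return q.put, finish
```

The foreground only pays for putting an item on the queue; the queue is the sole point of synchronization between the two otherwise independent tasks.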

Use batch processing if possible. Doing things incrementally almost always costs more, even aside from the fact that disks and tapes work much better when accessed sequentially. And batch processing permits much simpler error recovery. The Bank of America has an interactive system which allows tellers to record deposits and check withdrawals. It is loaded with current account balances in the morning, and does its best to maintain them during the day. But the next morning the on-line data is discarded and replaced with the results of the night's batch run. This design made it much easier to meet the bank's requirements for trustworthy long-term data, and there is no significant loss in function.

Be wary then; best safety lies in fear. (I iii 43)

Safety first. In allocating resources, strive to avoid disaster, rather than to attain an optimum. Many years of experience with virtual memory, networks, disk allocation, database layout and other resource allocation problems has made it clear that a general-purpose system cannot optimize the use of resources. On the other hand, it is easy enough to overload a system and drastically degrade the service. A system cannot be expected to function well if the demand for any resource exceeds two-thirds of the capacity (unless the load can be characterized extremely well). Fortunately hardware is cheap and getting cheaper; we can afford to provide excess capacity. Memory is especially cheap, which is especially fortunate, since to some extent plenty of memory can allow other resources, such as processor cycles or communication bandwidth, to be utilized more fully.

The sad truth about optimization was brought home when the first paging systems began to thrash. In those days memory was very expensive, and people had visions of squeezing the most out of every byte by clever optimization of the swapping: putting related procedures on the same page, predicting the next pages to be referenced from previous references, running jobs which share data or code together, etc. No one ever learned how to do this. Instead, memory got cheaper, and systems spent it to provide enough cushion that simple demand paging would work. It was learned that the only important thing is to avoid thrashing, or too much demand for the available memory. A system that thrashes spends all its time waiting for the disk. The only systems in which cleverness has worked are those with very well-known loads. For instance, the 360/50 APL system [4] had the same size workspace for each user, and common system code for all of them. It made all the system code resident, allocated a contiguous piece of disk for each user, and overlapped a swap-out and a swap-in with each unit of computation. This worked fine.

The nicest thing about the Alto is that it doesn't run faster at night. (J. Morris)

A similar lesson was learned about processor time. With interactive use the response time to a demand for computing is important, since a person is waiting for it. Many attempts were made to tune the processor scheduling as a function of priority of the computation, working set size, memory loading, past history, likelihood of an i/o request, etc.; these efforts failed. Only the crudest parameters produce intelligible effects: e.g., interactive vs non-interactive computations; high, foreground and background priority. The most successful schemes give a fixed share of the cycles to each job, and don't allocate more than 100%; unused cycles are wasted or, with luck, consumed by a background job. The natural extension of this strategy is the personal computer, in which each user has at least one processor to himself.

Give every man thy ear, but few thy voice;
Take each man's censure, but reserve thy judgment.

Shed load to control demand, rather than allowing the system to become overloaded. This is a corollary of the previous rule. There are many ways to shed load. An interactive system can refuse new users, or if necessary deny service to existing ones. A memory manager can limit the jobs being served so that their total working sets are less than the available memory. A network can discard packets. If it comes to the worst, the system can crash and start over, hopefully with greater prudence.

Bob Morris once suggested that a shared interactive system should have a large red button on each terminal, which the user pushes if he is dissatisfied with the service. When the button is pushed, the system must either improve the service, or throw the user off; it makes an equitable choice over a sufficiently long period. The idea is to keep people from wasting their time in front of terminals which are not delivering a useful amount of service.

The original specification for the Arpanet [32] was that a packet, once accepted by the net, is guaranteed to be delivered unless the recipient machine is down, or a network node fails while it is holding the packet. This turned out to be a bad idea. It is very hard to avoid deadlock in the worst case with this rule, and attempts to obey it lead to many complications and inefficiencies even in the normal case. Furthermore, the client does not benefit, since he still has to deal with packets lost by host or network failure (see §4). Eventually the rule was abandoned. The Pup internet [3], faced with a much more variable set of transport facilities, has always ruthlessly discarded packets at the first sign of congestion.

4. Fault-tolerance

The unavoidable price of reliability is simplicity. (C. Hoare)

Making a system reliable is not really hard, if you know how to go about it. But retrofitting reliability to an existing design is very difficult.

This above all: to thine own self be true
And it must follow, as the night the day,
Thou canst not then be false to any man.

End-to-end error recovery is absolutely necessary for a reliable system, and any other error detection or recovery is not logically necessary, but is strictly for performance. This observation is due to Saltzer [46], and is very widely applicable.

For example, consider the operation of transferring a file from a file system on a disk attached to machine A, to another file system on another disk attached to machine B. The minimum procedure which inspires any confidence that the right bits are really on B's disk, is to read the file from B's disk, compute a checksum of reasonable length (say 64 bits), and find that it is equal to a checksum computed by reading the bits from A's disk. Checking the transfer from A's disk to A's memory, from A over the network to B, or from B's memory to B's disk is not sufficient, since there might be trouble at some other point, or the bits might be clobbered while sitting in memory, or whatever. Furthermore, these other checks are not necessary either, since if the end-to-end check fails the entire transfer can be repeated. Of course this is a lot of work, and if errors are frequent, intermediate checks can reduce the amount of work that must be repeated. But this is strictly a question of performance, and is irrelevant to the reliability of the file transfer. Indeed, in the ring-based system at Cambridge, it is customary to copy an entire disk pack of 58 MBytes with only an end-to-end check; errors are so infrequent that the 20 minutes of work very seldom needs to be repeated [36].
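
The end-to-end check for a file transfer can be sketched with Python's standard library. The function names, the choice of BLAKE2b truncated to 64 bits, and the retry limit are all assumptions made for illustration; copy stands for whatever mechanism actually moves the bits, and reading the destination through the file system is assumed to reflect what is really stored there.

```python
import hashlib
import shutil

def checksum(path):
    """64-bit checksum of the bits read back from a file."""
    h = hashlib.blake2b(digest_size=8)
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(1 << 16), b""):
            h.update(block)
    return h.digest()

def transfer(src, dst, copy=shutil.copyfile, attempts=3):
    """Copy src to dst; succeed only if the bits read from dst match those read from src."""
    want = checksum(src)
    for _ in range(attempts):
        copy(src, dst)                  # any intermediate checks are purely for performance
        if checksum(dst) == want:       # the only check that reliability depends on
            return True
    return False
```

Nothing inside copy needs to be trusted: if it corrupts the data, the comparison fails and the whole transfer is repeated.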

Many uses of hints are applications of this idea. In the Alto file system described earlier, for example, it is the check of the label on a disk sector before writing the sector that ensures the disk address for the write is correct. Any precautions taken to make it more likely that the address is correct may be important, or even critical, for performance, but they do not affect the reliability of the file system.


The Pup internet [3] adopts the end-to-end strategy at several levels. The main service offered by the network is transport of a data packet from a source to a destination. The packet may traverse a number of networks with widely varying error rates and other properties. Internet nodes which store and forward packets may run short of space and be forced to discard packets. Only rough estimates of the best route for a packet are available, and these may be wildly erroneous when parts of the network fail or resume operation. In the face of these uncertainties, the Pup internet provides good service with a simple implementation by attempting only "best efforts" delivery. A packet may be lost with no notice to the sender, and it may be corrupted in transit. Clients must provide their own error control to deal with these problems, and indeed higher-level Pup protocols provide more complex services such as reliable byte streams. However, the packet transport does attempt to report problems to its clients, by providing a modest amount of error control (a 16-bit checksum), notifying senders of discarded packets when possible, etc. These services are intended to improve performance in the face of unreliable communication and overloading; since they too are best efforts, they don't complicate the implementation much.
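
The division of labor between a best-efforts transport and its clients might look like the sketch below. Everything in it is an assumption made for illustration: checksum16 is a plain additive sum and not the Pup checksum algorithm, and best_effort_send is a hypothetical transport that returns what the receiver saw (the packet, a damaged packet, or None if it was dropped), which folds the acknowledgement into the return value.

```python
from typing import Callable, Optional


def checksum16(payload: bytes) -> int:
    """Simple 16-bit additive checksum (a stand-in, not the Pup algorithm)."""
    return sum(payload) & 0xFFFF


def make_packet(payload: bytes) -> bytes:
    """Append the 16-bit checksum to the payload."""
    return payload + checksum16(payload).to_bytes(2, "big")


def open_packet(packet: bytes) -> Optional[bytes]:
    """Return the payload, or None if the checksum does not match."""
    payload, stored = packet[:-2], int.from_bytes(packet[-2:], "big")
    return payload if checksum16(payload) == stored else None


def send_reliably(payload: bytes,
                  best_effort_send: Callable[[bytes], Optional[bytes]],
                  max_tries: int = 5) -> int:
    """Client-side error control: retransmit until an intact copy arrives.

    Returns the number of tries used; raises TimeoutError if none succeed.
    """
    packet = make_packet(payload)
    for attempt in range(1, max_tries + 1):
        received = best_effort_send(packet)   # may be lost or corrupted
        if received is not None and open_packet(received) == payload:
            return attempt
    raise TimeoutError("no intact delivery within max_tries")
```

The transport stays simple because it never promises delivery; the retry loop in the client is the only place where correctness is decided.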

There are two problems with the end-to-end strategy. First, it requires a cheap test for success. Second, it can lead to working systems with severe performance defects, which may not appear until the system becomes operational and is placed under heavy load.

Remember thee?
Yea, from the table of my memory
I'll wipe away all trivial fond records,
All saws of books, all forms, all pressures past,
That youth and observation copied there;
And thy commandment all alone shall live
Within the book and volume of my brain,
Unmix'd with baser matter. (I v 97)

Log updates to record the truth about the state of an object. A log is a very simple data structure which can be reliably written and read, and cheaply forced out onto disk or other stable storage that can survive a crash. Because it is append-only, the amount of writing is minimized, and it is easy to ensure that the log is valid no matter when a crash occurs. It is easy and cheap to duplicate the log, write copies on tape, or whatever. Logs have been used for many years to ensure that information in a data base is not lost [17], but the idea is a very general one and can be used in ordinary file systems [35, 49] and in many other less obvious situations. When a log holds the truth, the current state of the object is very much like a hint (it isn't exactly a hint because there is no cheap way to check its correctness).

To use the technique, record every update to an object as a log entry, consisting of the name of the update procedure and its arguments. The procedure must be functional: when applied to the same arguments it must always have the same effect. In other words, there is no state outside the arguments that affects the operation of the procedure. This means that the (procedure call specified by the) log entry can be re-executed later, and if the object being updated is in the same state as when the update was first done, it will end up in the same state as after the update was first done. By induction, this means that a sequence of log entries can be re-executed, starting with the same objects, and produce the same objects that were produced in the original execution.
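
A minimal sketch of the technique, assuming an in-memory list as the log and a toy account object; the names PROCEDURES, LoggedObject, deposit, withdraw and replay are invented for the illustration. Each log entry holds only the name of an update procedure and its arguments, and the procedures depend on nothing but the object state and those arguments.

```python
from typing import Any, Callable, Dict, List, Tuple

# Registry of update procedures, looked up by the name stored in the log.
PROCEDURES: Dict[str, Callable[..., Any]] = {}


def procedure(fn):
    """Register an update procedure under its own name."""
    PROCEDURES[fn.__name__] = fn
    return fn


@procedure
def deposit(balance: int, amount: int) -> int:
    return balance + amount


@procedure
def withdraw(balance: int, amount: int) -> int:
    return balance - amount


LogEntry = Tuple[str, Tuple[Any, ...]]   # (procedure name, arguments)


class LoggedObject:
    def __init__(self, initial: Any):
        self.state = initial
        self.log: List[LogEntry] = []

    def update(self, name: str, *args: Any) -> None:
        self.log.append((name, args))    # record the truth first
        self.state = PROCEDURES[name](self.state, *args)


def replay(initial: Any, log: List[LogEntry]) -> Any:
    """Re-execute the log from the same starting state."""
    state = initial
    for name, args in log:
        state = PROCEDURES[name](state, *args)
    return state
```

After acct = LoggedObject(100), acct.update("deposit", 50) and acct.update("withdraw", 30), calling replay(100, acct.log) rebuilds the same balance that acct.state holds, so the state could be discarded and recovered from the log alone.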

For this to work, two requirements must be satisfied:

The update procedure must be a true function:

Its result does not depend on any state outside its arguments;

It has no side effects, except on the object in whose log it appears.

The arguments must be values, one of:

Immediate values, such as integers, strings etc. An immediate value can be a large thing, like an array or even a list, but the entire value must be copied into the log entry.

References to immutable objects.

Most objects of course are not immutable, since they are updated. However, a particular version of an object is immutable; changes made to the object change the version. A simple way to refer to an object version unambiguously is with the pair [object identifier, number of updates]. If the object identifier leads to the log for that object, then replaying the specified number of log entries yields the particular version. Of course, doing this replay may require finding some other object versions, but as long as each update refers only to existing versions, there won't be any cycles and this process will terminate.
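
The version pair can be sketched as below, under invented assumptions: objects are text strings, LOGS maps each object identifier to its log, and the two update procedures append and append_version are hypothetical. The first takes an immediate value; the second takes a reference to an immutable version of another object.

```python
from typing import Dict, List, Tuple

Version = Tuple[str, int]          # [object identifier, number of updates]

# One append-only log per object; each entry is (procedure name, arguments).
LOGS: Dict[str, List[tuple]] = {}


def update(obj_id: str, name: str, *args) -> Version:
    """Append a log entry and return the version it produces."""
    log = LOGS.setdefault(obj_id, [])
    log.append((name, args))
    return (obj_id, len(log))


def value_of(version: Version) -> str:
    """Rebuild a version by replaying the first n entries of the object's log."""
    obj_id, n = version
    text = ""
    for name, args in LOGS.get(obj_id, [])[:n]:
        if name == "append":             # argument is an immediate value
            text += args[0]
        elif name == "append_version":   # argument names an immutable version
            text += value_of(args[0])
    return text
```

Because an entry can only name a version that already existed when the entry was written, the recursion in value_of always reaches entries with immediate values and stops. Later updates to the referenced object do not change what an earlier reference yields.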

For example, in the Bravo editor [24] there are exactly two update functions for editing a document:

Replace[old: Interval, new: Interval]
ChangeProperties[where: Interval, what: FormattingOp]

An Interval is a triple [document version, first character, last character]. A FormattingOp is a function from properties to properties; a property might be italic or leftMargin, and a FormattingOp might be leftMargin := leftMargin + 10 or italic := TRUE. Thus only two kinds of log entries are needed. All the editing commands reduce to applications of these two functions.

Beware
Of entrance to a quarrel, but, being in,
Bear't that th' opposed may beware of thee.


Make actions atomic or restartable. An atomic action (often called a transaction) is one which either completes or has no effect. For example, in most main storage systems fetching or storing a word is atomic. The advantages of atomic actions for fault-tolerance are obvious: if a failure occurs during the action, it has no effect, so that in recovering from a failure it is not necessary to deal with any of the intermediate states of the action [28]. Atomicity has been provided in database systems for some time [17], using a log to store the information needed to complete or cancel an action. The basic idea is to assign a unique identifier to each atomic action, and use it to label all the log entries associated with that action. A commit record for the action [42] tells whether it is in progress, committed (i.e., logically complete, even if some cleanup work remains to be done), or aborted (i.e., logically canceled, even if some cleanup remains); changes in the state of the commit record are also recorded as log entries. An action cannot be committed unless there are log entries for all of its updates. After a failure, recovery applies the log entries for each committed action, and undoes the updates for each aborted action. Many variations on this scheme are possible [54].
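
One of the many possible variations can be sketched as follows. It is a simplification chosen for brevity, not the scheme of any cited system: the log is a Python list, updates are "store this value under this key", and the store is rebuilt from empty during recovery, so the updates of aborted or unfinished actions are skipped instead of being undone. The entry layouts and the name recover are assumptions.

```python
from typing import Dict, List, Tuple

# Log entries, each labeled with the identifier of its atomic action:
#   ("update", action_id, key, value)
#   ("status", action_id, "in progress" | "committed" | "aborted")
Entry = Tuple


def recover(log: List[Entry]) -> Dict[str, int]:
    """Rebuild the store, applying only the updates of committed actions."""
    # Pass 1: find the final state of each action's commit record.
    status: Dict[int, str] = {}
    for entry in log:
        if entry[0] == "status":
            _, action_id, state = entry
            status[action_id] = state        # the last status entry wins

    # Pass 2: apply updates, in log order, for committed actions only.
    store: Dict[str, int] = {}
    for entry in log:
        if entry[0] == "update":
            _, action_id, key, value = entry
            if status.get(action_id) == "committed":
                store[key] = value
    return store
```

An action whose commit record never reached "committed" before the crash leaves no trace in the recovered store, which is exactly the "either completes or has no effect" property.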

For this to work, a log entry usually needs to be restartable. This means that it can be partially executed any number of times before a complete execution, without changing the result; sometimes such an action is called idempotent. For example, storing a set of values into a set of variables is a restartable action; incrementing a variable by one is not. Restartable log entries can be applied to the current state of the object; there is no need to recover an old state.
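
The two examples in this paragraph can be made concrete with a small sketch. The function names and the stop_after parameter, which simulates a crash partway through, are invented for the illustration.

```python
from typing import Dict, Optional


def store_values(variables: Dict[str, int], values: Dict[str, int],
                 stop_after: Optional[int] = None) -> None:
    """Store a set of values into a set of variables.

    If stop_after is given, return after that many stores, as if a crash
    had interrupted the action.
    """
    for i, (name, value) in enumerate(values.items()):
        if stop_after is not None and i >= stop_after:
            return
        variables[name] = value


def increment(variables: Dict[str, int], name: str) -> None:
    """Add one to a variable; the result depends on how often this runs."""
    variables[name] += 1
```

Running store_values partially any number of times and then once completely leaves the variables exactly as a single complete run would. Running increment twice leaves the variable one higher than running it once, so recovery could not safely re-execute it against the current state.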

This basic method can be used for any kind of permanent storage. If things are simple enough, a rather distorted version will work. The Alto file system described above, for example, in effect uses the disk labels and leader pages as a log, and rebuilds its other data structures from these if necessary. Here, as in most file systems, it is only the file allocation and directory actions that are atomic; the file system does not help the client to make its updates atomic. The Juniper file system [35, 49] goes much further, allowing each client to make an arbitrary set of updates as a single atomic action. It uses a trick known as shadow pages, in which data pages are moved from the log into the files simply by changing the pointers to them in the B-tree that implements the map from file addresses to disk addresses; this trick was first used in the Cal system [50]. Cooperating clients of an ordinary file system can also implement atomic actions, by checking whether recovery is needed before each access to a file, and when it is, carrying out the entries in specially named log files [40].

Atomic actions are not trivial to implement in general, although the preceding discussion tries to show that they are not nearly as hard as their public image suggests. Sometimes a weaker but cheaper method will do. The Grapevine mail transport and registration system [1], for example, maintains a replicated data base of names and distribution lists on a large number of machines in a nationwide network. Updates are made at one site, and propagated to other sites using the mail system itself. This guarantees that the updates will eventually arrive, but as sites fail and recover, and the network partitions, the order in which they arrive may vary greatly. Each update message is timestamped, and the latest one wins. After enough time has passed, all the sites will receive all the updates and will all agree. During the propagation, however, the sites may disagree, e.g. about whether a person is a member of a certain distribution list. Such occasional disagreements and delays are not very important to the usefulness of this particular system.
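
The "latest one wins" rule can be sketched in a few lines. This is an illustration of the rule only and makes no claim about how Grapevine is implemented: the Site class and its receive method are invented names, and the sketch assumes that no two updates to the same key carry the same timestamp (a real system would need a tie-breaking rule).

```python
from typing import Any, Dict, Tuple


class Site:
    """One replica; keeps the newest timestamped value seen for each key."""

    def __init__(self) -> None:
        self.entries: Dict[str, Tuple[float, Any]] = {}

    def receive(self, key: str, timestamp: float, value: Any) -> None:
        """Apply an update message; an older message never overwrites a newer one."""
        current = self.entries.get(key)
        if current is None or timestamp > current[0]:
            self.entries[key] = (timestamp, value)
```

Because receive keeps only the newest value, two sites that have received the same set of messages hold the same entries whatever the arrival order was. Between arrivals they may disagree, which is the weaker guarantee the text describes.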

5. Conclusion

Most humbly do I take my leave, my lord.

Such a collection of good advice and anecdotes is rather tiresome to read; perhaps it is best taken in small doses at bedtime. In extenuation I can only plead that I have ignored most of these rules at least once, and nearly always regretted it. The references tell fuller stories about the systems or techniques, which I have only sketched. Many of them also have more complete rationalizations.

The slogans in the paper are collected in Figure 1.

Acknowledgements

I am indebted to many sympathetic readers of earlier drafts of this paper, and to the comments of the program committee.

References

1. Birrell, A.D. et al. Grapevine: an exercise in distributed computing. Comm. ACM 25, 4, April 1982, p 260-273.

2. Bobrow, D.G. et al. Tenex: a paged time-sharing system for the PDP-10. Comm. ACM 15, 3, March 1972, p 135-143.

3. Boggs, D.R. et al. Pup: an internetwork architecture. IEEE Trans. Communications COM-28, 4, April 1980, p 612-624.

4. Breed, L.M. and Lathwell, R.H. The implementation of APL/360. In Interactive Systems for Experimental Applied Mathematics, Klerer and Reinfelds, eds., Academic Press, 1968, p 390-399.

5. Britton, K.H. et al. A procedure for designing abstract interfaces for device interface modules. Proc. 5th Int'l. Conf. Software Engineering, 1981, p 195-204.

6. Brooks, F.B. The Mythical Man-Month. Addison-Wesley, 1975.

7. Burton, R.R. et al. Interlisp-D overview. In Papers on Interlisp-D, Technical report SSL-80-4, Xerox Palo Alto Research Center, 1981.


8. Clark, D.W. et al. The memory system of a high-performance personal computer. IEEE Trans. Computers TC-30, 10, Oct. 1981, p 715-733.

9. Creasy, R.J. The origin of the VM/370 time-sharing system. IBM J. Res. Develop. 25, 5, Sep. 1981, p 483-491.

10. Deutsch, L.P. and Grant, C.A. A flexible measurement tool for software systems. Proc. IFIP Cong. 1971, North-Holland.

11. Deutsch, L.P. and Bobrow, D.G. An efficient incremental automatic garbage collector. Comm. ACM 19, 9, Sept. 1976.

12. Deutsch, L.P. Private communication, February 1982.

13. Dijkstra, E.W. et al. On-the-fly garbage collection: an exercise in cooperation. Comm. ACM 21, 11, Nov. 1978, p 966-975.

14. Ditzel, D.R. and McLellan, H.R. Register allocation for free: the C machine stack cache. SIGPLAN Notices 17, 4, April 1982, p 48-56.

15. Geschke, C.M. et al. Early experience with Mesa. Comm. ACM 20, 8, Aug. 1977, p 540-553.

16. Gifford, D.K. Weighted voting for replicated data. Operating Systems Review 13, 5, Dec. 1979, p 150-162.

17. Gray, J. et al. The recovery manager of the System R database manager. Comput. Surveys 13, 2, June 1981, p 223-242.

18. Hansen, P.M. et al. A performance evaluation of the Intel iAPX 432. Computer Architecture News 10, 4, June 1982, p 17-26.

19. Hoare, C.A.R. Hints on programming language design. SIGACT/SIGPLAN Symposium on Principles of Programming Languages, Boston, Oct. 1973.

20. Hoare, C.A.R. Monitors: an operating system structuring concept. Comm. ACM 17, 10, Oct. 1974, p 549-557.

21. Ingalls, D. The Smalltalk graphics kernel. Byte 6, 8, Aug. 1981, p 168-194.

22. Janson, P.A. Using type-extension to organize virtual-memory mechanisms. Operating Systems Review 15, 4, Oct. 1981, p 6-38.

23. Knuth, D.E. An empirical study of Fortran programs. Software - Practice and Experience 1, 2, Mar. 1971, p 105-133.