Chapter 3. Lexical Analysis (1)

Welcome message from author

This document is posted to help you gain knowledge. Please leave a comment to let me know what you think about it! Share it to your friends and learn new things together.

Transcript

Chapter 3.

Lexical Analysis (1)

2

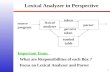

Interaction of lexical analyzer with parser.

sourceprogram

lexicalanalyzer

parser

symboltable

token

get nexttoken

3

Lexical Analysis

Issues – Simpler design is preferred – Compiler efficiency is improved– Compiler portability is improved

Terms– Tokens terminal symbols in a grammar– Patterns rules to describing strings of a token

– Lexemes a set of strings matched by the pattern

4

TOKEN SAMPLE LEXEMESINFORMAL DESCRIPTION OF

PATTERN

const

if

relation

id

num

literal

const

if

<, <=, =, <>, >, >=

pi, count, D2

3.1416, 0, 6.02E23

"core dumped"

const

if

< or <= or = or < > or >= or >

letter followed by letters and digits

any numeric constant

any characters between " and " except "

Examples of tokens.

5

Difficulties in implementing lexical

analyzers FORTRAN

– No delimiter is used– DO 5 I=1.25 DO 5 I=1,25 DO 5 I= 1 25

PL/I– Keywords are not reserved– IF THEN THEN THEN = ELSE; ELSE ELSE=THEN;

6

Attributes for tokens

A lexical analyzer collects information about tokens into their associated attributes

Example – E = M * C ** 2

• <id, pointer to symbol-table entry for E>• <assign_op,>• <id, pointer to symbol-table entry for M>• <mult_op,_>• <id, pointer to symbol-table entry for C>• <exp_op,>• <num, integer value 2> generally stored in constant table

7

Lexical Errors

Rules for error recovery– Deleting an extraneous character– Inserting a missing character– Replacing an incorrect character by a correct character– Transposing two adjacent characters

Minimum-distance erroneous correction Example

– Detectable : 2as3, 2#31, …– Undetectable : fi(a == f(x)) …

8

Input Buffering

A single buffer could make a big difficulty– 두 버퍼 사이에 있는 word– Declare (arg1, …. , argn) array or function

Buffer pairs– A good solution– Sentinels 을 쓰면 매번 버퍼의 끝인지와

파일의 끝인지를 동시에 검사할 필요가 없음

9

Sentinels at end of each buffer half.

: : : E : : = : : M : * : eof C : * : * : 2 : eof : : : : : eof

lexeme_beginning

forward

10

Specification of Tokens

Strings and languages – Alphabet or character class finite set of symbols

– String sentence word

– |s| length of a string s

– ε : empty string, Ф ={ε} : empty set

– x, y are strings • xy : concatenation, εx = x ε = x

Operations on languages

11

Terms for parts of a string.

TERM DEFINTION

prefix of sA string obtained by removing zero or more trailing symbols of string s; e.g., ban is a prefix of banana.

suffix of sA string formed by deleting zero or more of the leading symbols of s; e.g., nana is a suffix of banana.

substring of s

A string obtained by deleting a prefix and a suffix from s; e.g., nan is a substring of banana. Every prefix and every suffix of s is a substring of s, but not every substring of s is a prefix or a suffix of s. For every string s, both s and are prefixes, suffixes, and substrings of s.

proper prefix, suffix, or substring of s

Any nonempty string x that is, respectively, a prefix, suffix, or substring of s such that s x.

subsequence of sAny string formed by deleting zero or more not necessarily contiguous symbols from s; e.g., baaa is a subsequence of banana.

12

Definitions of operations on languages.

OPERATION DEFINITION

union of L and M written

L M.L M = {s | s is in L or s is in M}

concatenation of L and M written LM

LM = { st | s is in L and t is in M }

Kleene closure of L

written L* L* denotes “zero or more concatenations of” L.

positive closure of L

written L+

L+ denotes “one or more concatenations of” L.

13

Regular Expressions

1. is a regular expression that denotes {}, that is, the set containing the empty string.

2. If a is symbol in , then a is a regular expression that denotes {a}, i.e., the set containing the string a. Although we use the same notation for all three, technically, the regular expression a is different from the string a or the symbol a. It will be clear from the context whether we are talking about a as a regular expression, string, or symbol.

3. Suppose r and s are regular expressions denoting the language L(r) and L(s). Then,a) (r)|(s) is a regular expression denoting L(r) L(s).

b) (r)(s) is a regular expression denoting L(r)L(s).

c) (r)* is a regular expression denoting (L(r))*.

d) (r) is a regular expression denoting L(r).

14

Examples on operations in regular expressions

Σ ={a,b} alphabets– a | b {a,b}– (a|b)(c|d) {ac, ad, bc, bd}– a* {ε, a, aa, aaa, …}

– (a|b)* (a*|b*)*

– aa* = a+, ε|a+ = a*

– (a|b) = (b|a)

15

Algebraic properties of regular expressions.

AXIOM DESCRIPTION

r|s = s|r | is commutative

r|(s|t) = (r|s)|t | is associative

(rs)t = r(st) concatenation is associative

r(s|t) = rs|rt

(s|t)r = sr|trconcatenation distributes over |

r = r

r = r is the identity element for concatenation

r* = (r|)* relation between * and

r** = r* * is idempotent

16

Regular Definitions

Regular definition– d1 r1 d2 r2 …. dn rn

• 예• letter A|B| … |Z|a|b| … |z• digit 0|1| … | 9• id letter (letter|digit)*

17

Unsigned numbers

Pascal digit 0|1| … |9

digits digit digit*

operational_fraction . digits | ε optional_exponent (E(+|-| ε) digits | ε

num digits operational_fraction optional_exponent

18

Notational Shorthands (1/2)

1. One or more instances. The unary postfix operator + means “one or more instances of.” If r is a regular expression that denotes the language L(r), then (r)+ is a regular expression that denotes the language (L(r))+. Thus, the regular expression a+ denotes the set of all strings of one or more a’s. The operator + has the same precedence and associativity as the operator *. The two algebraic identities r* = r+| and r+ = rr* relate the Kleene and positive closure operators.

2. Zero or one instance. The unary postfix operator ? means “zero or one instance of.” The notation r? is a shorthand for r|. If r is a regular expression, then, (r)? is a regular expression that denotes the language L(r) {}. For example, using the + and ? operators, we can rewrite the regular definition for num in Example 3.5 as

19

Notational Shorthands (2/2)

3. Character classes. The notation [abc] where a, b, and c are alphabet symbols denotes the regular expression a | b | c. An abbreviated character class such as [a – z] denotes the regular expression a | b | ··· | z. Using character classes, we can describe identifiers as being strings generated by the regular expression

[A – Za – z][A – Za – z0 – 9]*

digit

digits

optional _fraction

optional_exponent

num

0 | 1 | ··· | 9

digit+

( . digits )?

( E ( + | - )? digits )?

Digits optional_fraction optional_exponent

20

Nonregular set

{wcw-1|w is a string of a’s and b’s}

context-free grammar is required to

represent the string

21

Regular-expression patterns for tokens.

REGULAR

EXPRESSIONTOKEN ATTRIBUTE-VALUE

wsif

thenelseid

num<

<==

< >>

>=

-if

thenelseid

numreloprelopreloprelopreloprelop

----

pointer to table entrypointer to table entry

LTLEEQNEGTGE

22

Transition diagram

Finite-state automata states and edges 몇 가지 예를 보여줌 … . 다음 페이지 , 그림 3.14 는 앞의 예를 바탕으로 그림

23

9 10 1011letter otherstart

return(gettoken(), install_id())

letter or digit

*

Transition diagram for identifiers and keywords.

24

Lex 에 의한 구현

Regular definition finite automata, transition diagram

C 프로그램으로 출력 Lexical analysis, pattern matching, …

25

Creating a lexical analyzer with Lex.

Lexcompiler

lex.yy.c

Lexsource

programlex.l

Ccompiler

a.outlex.yy.c

a.outsequence

oftokens

inputstream

26

Lex program for the tokens of Fig. 3. 10. (1/2)

%{

/*definitions of manifest constants

LT, LE, EQ, NE, GT, GE,

IF, THEN, ELSE, ID, NUMBER, RELOP */

%}

/*regular definitions */

delim [ \ t \ n ]

ws { delim }+

letter [ A-Za-z ]

digit [ 0 – 9 ]

id { letter } ( { letter } | { digit } )*

number { digit } + ( \ .{ digit } + ) ? ( E [ + \ - ] ? { digit } + ) ?

27 Lex program for the tokens of Fig. 3. 10. (2/2)

%%{ ws } { /* no action and no return */ }if { return(IF); }then { return(THEN); }else { return(ELSE); }{ id } { yylval = install_id(); return(ID); }{ number } { yylval = install_num(); return(NUMBER); }“<” { yylval = LT; return(RELOP); }“<=” { yylval = LE; return(RELOP); }“=” { yylval = EQ; return(RELOP); }“<>” { yylval = NE; return(RELOP); }“>” { yylval = GT; return(RELOP); }“>=” { yylval = GE; return(RELOP); }%%

install_id() {/* procedure to install the lexeme, whose first character is pointed to by yytext and whose length is yyleng, into the symbol table and return a pointer thereto */

}install_num() {

/* similar procedure to install a lexeme that is a number */}

28

Lookahead operator

DO 5 I = 1.25 DO 5 I=1,25– DO/({letter | digit})* = ({letter} | {digit})*,– DO/{id}* = {digit}*,

IF(I,J)=3 IF(condition) statement– IF/ \( .* \) {letter}

Related Documents