Blue Waters and PPL’s Role Celso Mendes & Eric Bohm Parallel Programming Laboratory Dep. Computer Science, University of Illinois


Dec 13, 2015



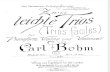

Blue Waters and PPL’s Role

Celso Mendes & Eric Bohm

Parallel Programming Laboratory

Dept. of Computer Science, University of Illinois

Outline

• Blue Waters System
  • NCSA Project
  • Petascale Computing Facility
  • Machine Characteristics
• PPL Participation
  • PPL’s Role in Blue Waters
  • Object-Based Virtualization
  • BigSim Simulation System
  • NAMD Petascale Application
• Conclusion
  • How to Join Us
  • Acknowledgements

Blue Waters System

• Comes online in 2011 at NCSA
• World’s first sustained petascale system for open scientific research
• Hundreds of times more powerful than today’s typical supercomputer
• 1 quadrillion calculations per second sustained
• Collaborators:
  • University of Illinois/NCSA
  • IBM
  • Great Lakes Consortium for Petascale Computation

The Blue Waters Project

• Will enable unprecedented science and engineering advances
• Supports:
  • Application development
  • System software development
  • Interaction with business and industry
  • Educational programs
• Includes Petascale Application Collaboration Teams (PACTs) that will help researchers:
  • Port, scale, and optimize existing applications
  • Create new applications

Blue Waters – How We Won

• Two years from start to finish to develop the proposal and go through an intense competition and peer-review process
• Rivals from across the country (universities and national labs), including California, Tennessee, and Pennsylvania
• Offered an excellent, open site; unparalleled technical team; collaborators from across the country; and an intense focus on scientific research
• Leverages $300M DARPA investment in IBM technology
• 3 years of development, followed by 5 years of operations. Blue Waters will come online in 2011 and be retired or upgraded in 2016

Blue Waters – What’s a Petaflop?

One quadrillion calculations per second!

If we multiplied two 14-digit numbers together at a rate of one multiplication per second, it would take:
• 32 years to complete 1 billion calculations
• 32 thousand years to complete 1 trillion calculations
• 32 million years to complete 1 quadrillion calculations

For scale:
• 32 years ago, America celebrated its bicentennial
• 32 thousand years ago, early cave paintings were completed
• 32 million years ago, the Alps were rising in Europe
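The "32 years" figures above can be checked with a short calculation. This is a minimal sketch that assumes a year of 365.25 days; the function name is illustrative and not from the slides.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumed Julian year, about 3.156e7 seconds


def years_at_one_per_second(num_calculations: float) -> float:
    """Years needed to finish num_calculations at one calculation per second."""
    return num_calculations / SECONDS_PER_YEAR


for label, count in [("billion", 1e9), ("trillion", 1e12), ("quadrillion", 1e15)]:
    # Each result is close to 32, 32 thousand, and 32 million years respectively.
    print(f"1 {label} calculations: about {years_at_one_per_second(count):,.0f} years")
```

A sustained petaflop machine does the last of these workloads, one quadrillion calculations, every second.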

Blue Waters – The lay of the land

| System | Site | Performance |
|---|---|---|
| Blue Waters | NCSA/Illinois | 1 petaflop sustained |
| Roadrunner | DOE/Los Alamos | 1.3 petaflops peak |
| Ranger | TACC/Texas | 504 teraflops peak |
| Kraken | NICS/Tennessee | 166 teraflops peak (with upgrade to come) |
| Campuses across the U.S. | Several sites | 50-100 teraflops peak |

Blue Waters is the powerhouse of the National Science Foundation’s strategy to support supercomputers for scientists nationwide

[Figure labels: T1, T2, T3]

Petascale Computing Facility

• Future home of Blue Waters and other NCSA hardware
• 88,000 square feet, 20,000 square foot machine room
• Water-cooled computers are 40 percent more efficient
• Onsite cooling towers save even more energy

Blue Waters – Interim Systems

An interesting challenge: The IBM POWER7 hardware on which Blue Waters will be based isn’t available yet. NCSA has installed four systems to prepare for Blue Waters:
• “BluePrint,” an IBM POWER575+ cluster for studying the software environment
• Two IBM POWER6 systems for developing the archival storage environment and scientific applications
• An x86 system running “Mambo,” an IBM system simulator that allows researchers to study the performance of scientific codes on Blue Waters’ POWER7 hardware

Selection Criteria for Petascale Computer

• Maximize Core Performance
  …to minimize number of cores needed for a given level of performance as well as lessen impact of sections of code with limited scalability
• Incorporate Large, High-bandwidth Memory Subsystem
  …to enable the solution of memory-intensive problems
• Maximize Interconnect Performance
  …to facilitate scaling to the large numbers of processors required for sustained petascale performance
• High-performance I/O Subsystem
  …to enable solution of data-intensive problems
• Maximize System Integration, Leverage Mainframe Reliability, Availability, Serviceability (RAS) Technologies
  …to assure reliable operation for long-running, large-scale simulations

Blue Waters - Main Characteristics

• Hardware:
  • Processor: IBM Power7 multicore architecture
  • More than 200,000 cores will be available
  • Capable of simultaneous multithreading (SMT)
  • Vector multimedia extension capability (VMX)
  • Four or more floating-point operations per cycle
  • Multiple levels of cache – L1, L2, shared L3
  • 32 GB+ memory per SMP, 2 GB+ per core
  • 16+ cores per SMP
  • 10+ Petabytes of disk storage
  • Network interconnect with RDMA technology

Blue Waters - Main Characteristics

• Software:
  • C, C++ and Fortran compilers
  • UPC and Co-Array-Fortran compilers
  • MASS, ESSL and parallel ESSL libraries
  • MPI, MPI2, OpenMP
  • Low level active messaging layer
  • Eclipse-based application development framework
  • HPC and HPCS toolkits
  • Cactus framework
  • Charm++/AMPI infrastructure
  • Tools for debugging at scale
  • GPFS file system
  • Batch and interactive access

Blue Waters Project Leadership

• Thom H. Dunning, Jr. – NCSA
  • Project Director
• Bill Kramer – NCSA
  • Deputy Project Director
• Wen-mei Hwu – UIUC/ECE
  • Co-Principal Investigator
• Marc Snir – UIUC/CS
  • Co-Principal Investigator
• Bill Gropp – UIUC/CS
  • Co-Principal Investigator

To learn more about Blue Waters: http://www.ncsa.uiuc.edu/BlueWaters

PPL Participation in Blue Waters

• Since the very beginning…

Current PPL Participants in Blue Waters

• Leadership:
  • Prof. Laxmikant (Sanjay) Kale
• Research Staff:
  • Eric Bohm *
  • Celso Mendes *
  • Ryan Mokos
  • Viraj Paropkari
  • Gengbin Zheng #
• Grad Students:
  • Filippo Gioachin
  • Chao Mei
  • Phil Miller
• Admin. Support:
  • JoAnne Geigner *

* Partially funded   # Unfunded

PPL’s Role in Blue Waters

• Three Activities:
  a) Object-Based Virtualization – Charm++ & AMPI
  b) BigSim Simulation System
  c) NAMD Application Porting and Tuning
• Major Effort Features:
  • Deployments specific to Blue Waters
  • Close integration with NCSA staff
  • Leverages PPL’s other research funding
    • DOE & NSF (Charm++), NSF (BigSim), NIH (NAMD)

Object-Based Virtualization

• Charm++ is widely used software that has been ported to a number of different parallel machines
  • OS platforms including Linux, AIX, MacOS, Windows,…
  • Object and thread migration is fully supported on those platforms
• Many existing applications based on Charm++ have already scaled beyond 20,000 processors
  • e.g. NAMD, ChaNGa, OpenAtom
• Adaptive MPI (AMPI): designed for legacy MPI codes
  • MPI implementation based on Charm++; supports C/C++/Fortran
  • Usability has been continuously enhanced
• Scope of PPL Work:
  • Deploy and optimize Charm++, AMPI and possibly other virtualized GAS languages on Blue Waters
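The idea behind object-based virtualization is that a program is split into many more migratable objects than there are processors, so the runtime is free to move objects to balance load. The toy below is only a sketch of that idea under stated assumptions: it is not the Charm++ API (Charm++ is a C++ system), the function names are hypothetical, and the greedy heaviest-first strategy is just one simple balancing policy chosen for illustration.

```python
import heapq


def greedy_map(object_loads, num_processors):
    """Assign each object to the currently least-loaded processor, heaviest first."""
    heap = [(0.0, proc) for proc in range(num_processors)]  # (total load, processor)
    heapq.heapify(heap)
    placement = {}
    for obj, load in sorted(enumerate(object_loads), key=lambda item: -item[1]):
        total, proc = heapq.heappop(heap)
        placement[obj] = proc
        heapq.heappush(heap, (total + load, proc))
    return placement


def processor_loads(object_loads, placement, num_processors):
    """Sum the load of the objects placed on each processor."""
    loads = [0.0] * num_processors
    for obj, proc in placement.items():
        loads[proc] += object_loads[obj]
    return loads


# Eight objects with uneven (made-up) loads mapped onto two processors.
example_loads = [5, 3, 3, 2, 2, 1, 1, 1]
example_placement = greedy_map(example_loads, 2)
print(processor_loads(example_loads, example_placement, 2))
```

With only two coarse pieces of work, an imbalance could not be corrected; with eight smaller objects, the two processors end up with equal totals in this example.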

Current Charm++/SMP Performance

• Improvement on K-Neighbor Test (24 cores, Mar. 2009)

BigSim Simulation System

• Two-Phase Operation:
  • Emulation: Run actual program with AMPI, generate logs
  • Simulation: Feed logs to a discrete-event simulator
• Multiple Fidelity Levels Available
  • Computation: scaling factor; hardware counters; processor-simulator
  • Communication: latency-bandwidth only; full contention-based
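The simulation phase at its lowest fidelity levels (a computation scaling factor and a latency-bandwidth network) can be sketched as a small discrete-event loop. This is an illustrative toy, not BigSim's log format or code: the task dictionary stands in for emulation logs, every task is assumed to be triggered by exactly one message, and all names and numbers are hypothetical.

```python
import heapq


def simulate(tasks, start_task, cpu_scale, latency, bandwidth):
    """Replay logged tasks and return the predicted completion time.

    tasks maps task_id -> (processor, logged_duration, [(dest_task_id, nbytes), ...]).
    Computation is rescaled by cpu_scale; each message costs latency + nbytes / bandwidth.
    """
    proc_free = {}                 # processor -> time at which it becomes idle
    finish = {}                    # task_id -> predicted finish time
    events = [(0.0, start_task)]   # (arrival time of triggering message, task_id)
    while events:
        arrival, task_id = heapq.heappop(events)
        proc, duration, sends = tasks[task_id]
        begin = max(arrival, proc_free.get(proc, 0.0))
        end = begin + duration * cpu_scale
        proc_free[proc] = end
        finish[task_id] = end
        for dest, nbytes in sends:
            heapq.heappush(events, (end + latency + nbytes / bandwidth, dest))
    return max(finish.values())


# Hypothetical log: task "a" on processor 0 triggers "b" and "c" on processor 1.
example_tasks = {
    "a": (0, 1.0, [("b", 1000), ("c", 1000)]),
    "b": (1, 2.0, []),
    "c": (1, 1.0, []),
}
predicted = simulate(example_tasks, "a", cpu_scale=0.5, latency=0.1, bandwidth=1e4)
print(f"predicted time: {predicted:.2f} s")
```

Changing `cpu_scale`, `latency`, or `bandwidth` re-predicts the run from the same logs without re-running the program, which is the point of separating emulation from simulation.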

Combined BigSim/Processor-Simulator Use

    void func(…)
    {
        StartSim()
        …
        EndSim()
    }

[Figure: flow diagram with boxes labeled Cycle-accurate Simulator (e.g. Mambo), BigSim Emulator, Parameter files, Log files, interpolation (+ replace sequential timings), New log files, BigSim Simulator]
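The interpolation step named in the figure can be sketched as follows: timings measured by a cycle-accurate simulator at a few parameter values are interpolated to replace the sequential timings recorded in the emulation logs. The record layout, the choice of piecewise-linear interpolation, and all names and numbers here are assumptions made for illustration; the slides do not specify them.

```python
from bisect import bisect_left


def interpolate(samples, x):
    """Piecewise-linear interpolation over sorted (x, time) samples, clamped at the ends."""
    xs = [sample[0] for sample in samples]
    i = bisect_left(xs, x)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]
    (x0, t0), (x1, t1) = samples[i - 1], samples[i]
    return t0 + (t1 - t0) * (x - x0) / (x1 - x0)


def replace_sequential_timings(log, parameter_model):
    """Return a new log whose timings come from the model where one exists."""
    new_log = []
    for entry in log:
        samples = parameter_model.get(entry["block"])
        if samples is None:
            new_log.append(dict(entry))  # no model for this block: keep logged time
        else:
            new_log.append({**entry, "time": interpolate(samples, entry["param"])})
    return new_log


# Hypothetical data: "func" was timed by the processor simulator at two sizes.
parameter_model = {"func": [(100, 1.0), (200, 3.0)]}
log = [
    {"block": "func", "param": 150, "time": 9.9},
    {"block": "other", "param": 0, "time": 0.5},
]
new_log = replace_sequential_timings(log, parameter_model)
print(new_log)
```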

Recent BigSim Enhancements

• Incremental Reading of Log Files
  • Enables handling large log files that might not fit in memory
• Creation of Out-of-Core Support for Emulation
  • Enables emulating applications with large memory footprint
• Tests with Memory-Reuse Schemes (in progress)
  • Enables reusing memory data of emulated processors
  • Applicable to codes without data-dependent behavior
• Flexible Support for Non-Contention Network Model
  • Network parameters easily configured at runtime
• Development of Blue Waters Network Model (in progress)
  • Contention-based model specific for Blue Waters network
  • NOT a part of BigSim’s public distribution
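Incremental reading keeps only a bounded window of a log in memory at any time. The generator below is a generic sketch of that technique, assuming a line-oriented text log; BigSim's actual log format and reader are not shown in the slides, and the function name is hypothetical.

```python
import io


def read_batches(stream, batch_size):
    """Yield lists of at most batch_size non-empty records from a line-oriented stream."""
    batch = []
    for line in stream:  # file objects are read lazily, one line at a time
        record = line.strip()
        if not record:
            continue
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch


# Usage with an in-memory stand-in for a large log file.
fake_log = io.StringIO("event 1\nevent 2\n\nevent 3\n")
batches = list(read_batches(fake_log, batch_size=2))
print(batches)
```

Peak memory is governed by `batch_size` rather than by the size of the log, which is what makes logs larger than memory tractable.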

Petascale Problems from NSF

• NSF Solicitation (June 5, 2006):
  • http://www.nsf.gov/pubs/2006/nsf06573/nsf06573.html
• Three applications selected for sustained petaflop performance:
  • Turbulence
  • Lattice-gauge QCD
  • Molecular Dynamics
• Turbulence and QCD cases defined by problem specification
• Molecular dynamics:
  • Defined with problem specification
  • Required use of NAMD code

MD Problem Statement from NSF

“A molecular dynamics (MD) simulation of curvature-inducing protein BAR domains binding to a charged phospholipid vesicle over 10 ns simulation time under periodic boundary conditions. The vesicle, 100 nm in diameter, should consist of a mixture of dioleoylphosphatidylcholine (DOPC) and dioleoylphosphatidylserine (DOPS) at a ratio of 2:1. The entire system should consist of 100,000 lipids and 1000 BAR domains solvated in 30 million water molecules, with NaCl also included at a concentration of 0.15 M, for a total system size of 100 million atoms. All system components should be modeled using the CHARMM27 all-atom empirical force field. The target wall-clock time for completion of the model problem using the NAMD MD package with the velocity Verlet time-stepping algorithm, Langevin dynamics temperature coupling, Nose-Hoover Langevin piston pressure control, the Particle Mesh Ewald algorithm with a tolerance of 1.0e-6 for calculation of electrostatics, a short-range (van der Waals) cut-off of 12 Angstroms, and a time step of 0.002 ps, with 64-bit floating point (or similar) arithmetic, is 25 hours. The positions, velocities, and forces of all the atoms should be saved to disk every 500 timesteps.”
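The numbers in the problem statement imply a per-timestep budget and an output volume that can be worked out directly. The sketch below uses only figures from the statement, plus one labeled assumption: each saved position, velocity, and force is three 64-bit values per atom with no file-format overhead.

```python
SIM_TIME_PS = 10 * 1000         # 10 ns expressed in picoseconds
TIMESTEP_PS = 0.002             # time step from the problem statement
WALL_CLOCK_S = 25 * 3600        # 25-hour target in seconds
ATOMS = 100_000_000             # total system size
OUTPUT_INTERVAL = 500           # save every 500 timesteps
BYTES_PER_VALUE = 8             # assumption: raw 64-bit values, no file overhead

steps = round(SIM_TIME_PS / TIMESTEP_PS)         # number of timesteps
seconds_per_step = WALL_CLOCK_S / steps           # wall-clock budget per timestep
frames = steps // OUTPUT_INTERVAL                 # number of saved snapshots
# positions, velocities, forces: 3 vectors x 3 components per atom
bytes_per_frame = ATOMS * 3 * 3 * BYTES_PER_VALUE
total_bytes = frames * bytes_per_frame

print(f"timesteps:        {steps:,}")
print(f"budget per step:  {seconds_per_step * 1000:.0f} ms")
print(f"output frames:    {frames:,}")
print(f"data per frame:   {bytes_per_frame / 1e9:.1f} GB")
print(f"total output:     {total_bytes / 1e12:.0f} TB")
```

Under these assumptions the target works out to 5 million timesteps at 18 ms each, with 10,000 snapshots of 7.2 GB, about 72 TB in total, which is why parallel output matters as much as the force computation.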

NAMD Challenges

• At the time the NSF benchmark was proposed, the largest atom systems being run in NAMD (or similar applications) had fewer than 4 million atoms.
  • Standard file formats (PSF, PDB) could not even express systems larger than 10 million atoms.
  • Startup, input, and output were all handled on one processor
  • The NAMD toolset (NAMD, VMD, and PSFGen) required significant enhancements so that atom systems of this size could be executed.
• Blue Waters hardware not available

NAMD Progress

• New file formats to support 100 Million atom systems
• New I/O framework to reduce memory footprint
• New output framework to parallelize output
• New input framework to parallelize input and startup
• New PME communication framework
• Performance analysis of sequential blocks in MAMBO
• Performance prediction via BigSim and MAMBO
• Performance analysis of extremely large systems

NAMD Progress (cont.)

• NAMD 2.7b1 and Charm-6.1 released
  • Support execution of 100M atom systems
  • New plug-in system to support arbitrary file formats
  • Limit on number of atoms in PSF file fixed
  • Tested using 116 Million atom BAR domain and water systems
• Parallel Output
  • Parallel output is complete
  • Performance tuning is ongoing

NAMD Progress (cont.)

• Parallel Input
  • Work delayed by complexities in file formats
  • Worked with John Stone and Jim Phillips (Beckman Inst.) to revise file formats
  • New plug-in system integrated
  • Demonstrated 10x-20x performance improvement for 116 M atom
• 10M, 50M, 100M BAR systems
  • PSFgen couldn’t make them with old format (see above)
  • Currently have 10M, 20M, 50M, 100M, 150M water boxes
  • 116M BAR Domain constructed, solvated, run in NAMD.
• Analysis of 10M, 50M, 100M
  • Comparative analysis of overheads from fine decomposition and molecule size ongoing

Parallel Startup in NAMD

Table 1: Parallel Startup for 10 Million water on BlueGene/P

| Nodes | Start (sec) | Memory (MB) |
|---|---|---|
| 1 | NA | 4484.55 * |
| 8 | 446.499 | 865.117 |
| 16 | 424.765 | 456.487 |
| 32 | 420.492 | 258.023 |
| 64 | 435.366 | 235.949 |
| 128 | 227.018 | 222.219 |
| 256 | 122.296 | 218.285 |
| 512 | 73.2571 | 218.449 |
| 1024 | 76.1005 | 214.758 |

Table: Parallel Startup for 116 Million BAR domain on Abe

| Nodes | Start (sec) | Memory (MB) |
|---|---|---|
| 1 | 3075.6 * | 75457.7 * |
| 50 | 340.361 | 1008 |
| 80 | 322.165 | 908 |
| 120 | 323.561 | 710 |
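The gains in the Abe table can be expressed relative to its single-node row. The sketch below just divides the table values; note that the single-node values carry an asterisk whose meaning the slides do not explain, so the ratios should be read as indicative only. The variable and function names are illustrative.

```python
# (nodes, startup seconds, memory MB) copied from the Abe table above
abe_rows = [
    (1, 3075.6, 75457.7),
    (50, 340.361, 1008.0),
    (80, 322.165, 908.0),
    (120, 323.561, 710.0),
]


def relative_to_baseline(rows):
    """Return (nodes, startup speedup, memory reduction) versus the first row."""
    _, base_time, base_mem = rows[0]
    return [(nodes, base_time / t, base_mem / mem) for nodes, t, mem in rows[1:]]


abe_ratios = relative_to_baseline(abe_rows)
for nodes, speedup, mem_reduction in abe_ratios:
    print(f"{nodes:4d} nodes: startup {speedup:.1f}x faster, "
          f"memory per node {mem_reduction:.0f}x smaller")
```

Startup time improves by roughly 9x and then flattens between 50 and 120 nodes, while memory per node keeps shrinking as nodes are added.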


Current NAMD Performance

Summary

• Blue Waters arriving at Illinois in 2011
  • First sustained-Petaflop system
• PPL early participation
  • NSF proposal preparation
  • Application studies
• Illinois’ HPC tradition continues…
• PPL current participation
  • Charm++/AMPI deployment
  • BigSim simulation
  • NAMD porting and tuning

Conclusion

Want to Join Us?
• NCSA has a few open positions
  • Visit http://www.ncsa.uiuc.edu/AboutUs/Employment
• PPL may have PostDoc and RA positions in the near future
  • E-mail to [email protected]

Acknowledgments - Blue Waters funding
• NSF grant OCI-0725070
• State of Illinois funds
