University of Southern Denmark Master’s thesis MSc in Engineering - Robot Systems Autonomous Airborne Tool-carrier Authors: Hjalte B. L. Nygaard Peter E. Madsen Supervisors: Ulrik P. Schultz Rasmus N. Jørgensen Kjeld Jensen May 30, 2012

University of Southern Denmark

Master's thesis

MSc in Engineering - Robot Systems

Autonomous Airborne Tool-carrier

Authors:

Hjalte B. L. Nygaard

Peter E. Madsen

Supervisors:

Ulrik P. Schultz

Rasmus N. Jørgensen

Kjeld Jensen

May 30, 2012

Abstract

The field of Unmanned Aerial Vehicles has been under rapid development during the last decade. Eyes in the sky have proven to be a powerful tool in a variety of domains. Hence many different approaches have been taken to create these types of systems. However, the foundation of these varying approaches differs mainly in the task they complete and thus the payload they carry. This thesis proposes a modular approach to the fixed wing UAV domain, aiming to induce reusability of the same flying platform in a multitude of applications. This is done by the use of swappable software as well as hardware structures, utilizing ROS as a common communication basis. Although a completely autonomous flying prototype is not yet implemented, promising results have been achieved. A hardware base has been constructed, using MEMS sensors and an ARM processor. As state estimation is a common problem for all airborne platforms, this has been a main concern of this thesis. A cascaded Extended Kalman Filter has been implemented, and some of its performance measures have been verified, using vision based horizon tracking. Aided flights have been conducted, utilizing simple PID loops to maintain plane attitude, based on the pilot commands and feedback from the state estimator.

Acknowledgments

We owe our deepest gratitude to a number of people who have been involved in this project, one way or the other. First of all our beloved and patient sweethearts. Without their love and support, this thesis would have remained a dream. We really appreciate the time, patience and especially the steady hand and eagle eye of Carsten Albertsen, in assisting us with SMD soldering and bug-fixing during the PCB prototype manufacturing. In the same breath, gratitude shall be expressed to David Brandt, for helping us out on Gumstix related hardware design issues and ad hoc components. Morten Larsen should be thanked for his tireless support on Linux and CMake related issues. We have benefited from his ability to decipher illegible compiler errors. We also thank Stig Hansen and Kold College for kindly lending us field and airspace to perform flight tests. We are deeply grateful for Jimmi Friis' guidance into the world of RC model planes. Your patience and kind instructions were invaluable. Without the initial help of Henning Porsby we would never have gotten in the air. Andreas Rune Fugl's input and ideas have proved inspirational in our implementation and future work. We owe our gratitude to Lars Peter Ellekilde for breaking the ice on the subjects of quaternions and Kalman filtering, and Dirk Kraft for suggesting vision procedures. Lastly we would like to thank Bent Bennedsen and Henrik Midtiby for their input on the use of imagery in agriculture.

Flexible is much too rigid, in aviation you have to be fluid. - Verne Jobst


Contents

Introduction

1 Background & Analysis
1.1 Related Work
1.2 Application areas
1.2.1 Conditions
1.2.2 Regulations
1.2.3 Practicalities
1.3 System requirements & Architecture

2 Auto Pilot
2.1 Low level control loops
2.1.1 PID controller
2.1.2 Bank and Heading control
2.1.3 Climb and Altitude control
2.1.4 Miscellaneous control
2.2 Flight Management System
2.2.1 Trajectory Smoothing
2.2.2 Trajectory Tracking
2.2.3 Tool Interaction
2.3 Flight Computer
2.4 Conclusion

3 State Feedback
3.1 Sensors
3.1.1 Velocities and course parameters
3.1.2 Position
3.1.3 Altitude
3.1.4 AHRS
3.2 Sensor fusion
3.2.1 Methods
3.2.2 Estimator architecture
3.2.3 Kinematic models
3.3 Noise considerations
3.4 Conclusion

4 Visualization and Simulation
4.1 Telemetry
4.1.1 Link hardware
4.1.2 Message passing
4.2 Visualization
4.3 Simulator
4.4 Conclusion

5 Implementation
5.1 Airframe
5.2 Autopilot PCB
5.2.1 Processor and data buses
5.2.2 Airspeed sensor
5.2.3 Accelerometer
5.2.4 Gyroscope
5.2.5 Magnetometer
5.2.6 Barometer
5.2.7 GPS
5.2.8 Zigbee
5.2.9 Ultrasonic proximity
5.2.10 R/C interface & Failsafe operation
5.2.11 Power management
5.2.12 Auxiliary components
5.3 Software building blocks
5.3.1 fmFusion
5.3.2 fmController
5.3.3 GPS
5.3.4 fmTeleAir and fmTeleGround
5.4 Ground Control system
5.5 Simulator
5.6 Flight test
5.6.1 Vision based post flight roll verification
5.6.2 Aided Flight

6 Perspective
6.1 Future work
6.2 Project analysis
6.3 Conclusion

Bibliography

Nomenclature

Appendix A Attitude kinematics
Appendix B Heading kinematics
Appendix C Position and Wind kinematics
Appendix D Wind statistics
Appendix E Wikis and how-tos
Appendix F PCB design
Appendix G Thesis proposal
Appendix H Sensor Fusion for Miniature Aerial Vehicles
Appendix I Project Log

Introduction

During the past decade, the field of small scale autonomous airborne vehicles has undergone a rapid development. This development has become possible through new sensor technologies. Small, light-weight, integrated circuit components now replace larger, heavier mechanical sensors. Development has been seen in a wide variety of applications, including military, education, commercial and recreation. Example uses can be seen in agriculture, aerial photography, disaster management and inspection systems. Common to these areas are specialized systems, with integrated sensors for very specific areas of application. Through this thesis, the state of the art will be assessed. Automated flight and ways to control unmanned aerial vehicles will be reviewed. By implementing state estimation and low level control on a highly capable embedded platform, we aim to break with the single purpose paradigm seen in today's solutions. We propose a single, modular system, applicable to various use cases and airframes. Through increased computational capabilities, the same autopilot can be used for many different tools, and thereby application areas. We call this the Autonomous Tool Carrier. It is hypothesized that, through aided control, unmanned aerial vehicles can be controlled by inexperienced pilots, and that the implementation of such a system can be used as the first step towards realizing this new concept.


Chapter 1 Background & Analysis

This chapter analyses existing systems in the domain of Unmanned Aerial Vehicles (UAVs), identifies application areas and assesses the available technologies. Based on this analysis, it is formulated how an Autonomous Tool Carrier can supplement the current state of the art. Furthermore, the overall building blocks of such a system are identified and introduced.

The remainder of this introductory chapter is structured as follows: Section 1.1 gives an overview of the existing commercial and open source based projects, Section 1.2 surveys applications for Unmanned Aerial Vehicles and finally Section 1.3 describes the proposed system and its architecture. The latter section also outlines the structure of this thesis.

1.1 Related Work

A review of existing autopilot systems is conducted in this section. The available systems have been divided into two groups: commercial and open source autopilots. A select number of open source autopilot projects are presented in Table 1.1. Common to these systems are the relatively low hardware costs compared to the commercial units. The majority of the projects use complementary filtering for state estimation, as it is easier to implement than the Kalman filters used by the commercial units. These projects generally seem to lack coherent documentation and scientific foundation. None of the systems provide tool interaction, as the purpose of the projects is limited to enabling automated flight with R/C model planes.

A number of commercial autopilots are available, as can be seen in Table 1.2. Based on various sales documents, it can be deduced that these autopilots are commonly based on Extended Kalman Filters. The turn-key prices are relatively high, ranging from 4,000 EUR and up. System information beyond sales documents is generally sparse. The Kestrel autopilot[12] is an exception. This autopilot originates from Brigham Young University[23] and has been used comprehensively by Beard et al. in their research on various aspects of automated flight of miniature aerial vehicles[19, 45, 54, 59]. Due to the academic history of this autopilot, some insight into its internal workings is available. Most of the commercial autopilots carry some sort of tool and most of them are indeed capable of communicating the position and orientation of the airframe to the tool. However, none of them suggest capabilities of involving the tool in the flight itself. Others simply fix a regular camera to the airframe.

Table 1.1: Table of open source autopilot projects

| Name | Computer | State Est. | HW Price [EUR] | Payload comm. |
|---|---|---|---|---|
| Paparazzi[14] | 60 MHz ARM | Complementary | 400 | no |
| GluonPilot[4] | 80 MHz dsPIC | EKF | 480 | no |
| ArduPilot[1] | 20 MHz AVR | Complementary | 160 | no |
| OpenPilot[6] | 150 MIPS Cortex-M3 | Complementary | 70 | no |

Table 1.2: Table of commercial autopilot systems

| Name | Payload | Computer | State Est. | Price [EUR] | Payload comm. |
|---|---|---|---|---|---|
| Kestrel[12] | - | 29 MHz 8-bit | EKF | 4,000 | one-way |
| SenseFly[8] | 12 MP Canon PowerShot | - | - | 8,500 | no |
| MicroPilot[5] | Gimbal Camera, EUR 19,250 | 150 MIPS | 12 state EKF | 6,400 | one-way |
| Piccolo II[7] | - | - | - | 6,000 | one-way (a) |
| Avior 100[2] | - | 600 MHz | 15 state EKF | N/A | one-way |
| Gatewing x100[3] | 10 MP digital camera | - | - | N/A | no |

(a) Might have two-way communication. No specific information found.

When surveying the field, it is soon recognized that state estimation plays a major role for the overall system performance. This is due to the fact that this subsystem provides the feedback for the actual controller to react on. Thus, a bad state estimate yields poor overall autopilot performance. This is reflected in the hobbyist projects using relatively simple methods, as opposed to the more complex, and thus more expensive, commercial systems.

1.2 Application areas

The primary role of UAVs is to provide an eye in the sky. This is useful for ad hoc data collection in many domains. This section surveys some application areas of UAVs and delimits the requirements in terms of payloads, versatility and various other aspects.

Aerial photography in agriculture

In recent decades there has been an increasing interest in remote sensing-based precision agriculture[44, 52, 70]. Especially Site Specific Weed Management (SSWM) has great potential for reducing the amount of pesticides needed for adequate weed management[33]. Historically, weed management has involved a vast amount of manual labor. In the past decades the use of herbicides has greatly reduced the amount of manual labor. However, these chemical agents impact both the environment and the yield of the crop. Gutjahr and Gerhards show that the use of herbicides can be reduced by approximately 80% by spraying selectively in weed infested areas, rather than broad spraying the entire field. Further optimization can be achieved by selectively activating relevant spraying nozzles when driving a spraying boom over a weed plant[40]. The two examples require different vision system approaches; the former needs to localize weed patches and the latter individual plants within the patch. As weed control is relevant in the germination period, weed patches can be identified by excessive biomaterial density. This can be measured using the Normalized Difference Vegetation Index [70] (NDVI). This indexing method exploits the fact that vegetation absorbs most red light, with a wavelength of λ ≈ 680 nm, for photosynthesis, and reflects most near-infrared light, with a wavelength of λ ≈ 750 nm, as illustrated in Figure 1.1a. This steep rise in reflection is known as the red edge.
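The index itself is not written out in the text above. As a minimal sketch, assuming the standard definition NDVI = (NIR - Red) / (NIR + Red), it can be computed per pixel as shown below. The function name and the reflectance values are hypothetical and chosen for illustration only.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for a single pixel.

    nir and red are reflectances (or intensities on a common scale)
    in the near-infrared and red bands.
    """
    total = nir + red
    if total == 0:
        # No signal in either band: the index is undefined, report 0.
        return 0.0
    return (nir - red) / total


# Hypothetical reflectances, not measurements from the thesis.
vegetation = ndvi(nir=0.50, red=0.05)  # strong red edge, index close to 1
bare_soil = ndvi(nir=0.30, red=0.25)   # weak red edge, index close to 0
```

A pixel with dense vegetation thus scores markedly higher than bare soil, which is what allows weed patches to be separated from their surroundings by thresholding the index.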

(a) Reflection curves for soil and different plant species, illustrating typical red edges [70, figure 8.1]

(b) Weed detection by row relative placement [22, figure 10.c]

Figure 1.1: Reprinted figures, illustrating two different approaches to image based weed detection.

Another method, when dealing with row crops, is identification of biomass between rows, as seen in [22] and depicted in Figure 1.1b.

As pointed out by Weis and Sokefeld [70], successful crop to weed discrimination is essential for SSWM. Therefore spatial and temporal variations of weed populations need to be assessed if treatment is to vary within the field. Satellite based image data have proven useful for localizing big weed patches[39], by combining RGB (red, green & blue) and NIR (Near Infra Red) images with NDVI. Weed patches are detected in images from the increased biomass compared to the surrounding areas. However, due to the limited spatial resolution of the satellite based images, small patches remain undetectable. Furthermore, satellite imagery is expensive, and the revisiting time of the QuickBird satellite used in [39] is up to 3.5 days.


Disaster overview and search & rescue aid

As noted by Bendea et al. [20], small scale unmanned aerial vehicles (UAVs) are ideal for disaster overview. In a multitude of situations, be that floods, earthquakes or post hurricane situations, an immediate overview of the situation can prove very helpful. The revisiting times of satellites and their limited spatial resolution limit their use in these cases, thus supplementary imagery from a UAV can aid disaster management.

An eye in the sky could also be invaluable in the search for missing persons. Combined with thermal cameras, an effective search could be conducted without the cost of a manned aircraft. In addition, a UAV requires less space for storage and can easily be kept on standby for immediate dispatch. Additionally, multiple UAVs can be launched to perform a swift overflight of an area without additional personnel requirements.

Other applications

The need to inspect gas pipelines is explained by Hausamann et al. [34], where it is concluded that the remote sensing possibilities of UAVs could potentially be applied. Espinar and Wiese [29] use a UAV to collect dust samples from tropical trade winds from North Africa's Sahara, in an effort to link the dust with health effects on humans and ecosystems. Data could also be sampled from other, more inhospitable environments, such as the gamma radiation of the recent Fukushima disaster in Japan. Furthermore, regular civil aerial photography is also an area of application. This can be used for producing pictures for sale, wildlife monitoring, cattle inspection and counting.

The different application areas place different constraints on the UAV and particularly on the payload it carries. In agriculture, RGB and IR images are required to map fields and extract NDVI maps, which can be used to localize weed patches that need treatment. The spatial resolution should be high enough to clearly distinguish row from inter-row areas. In applications such as search and rescue, thermal cameras are deemed useful, as they can detect body heat. Thermal cameras are likewise useful in agriculture, as they can be used to spot deer[69] in the field before harvesting. The majority of the application areas utilize cameras to provide 'the grand overview'. However, the specifications of these cameras vary. As has been pointed out, other applications require very different sensing tools.

1.2.1 Conditions

In order to identify the requirements of UAVs in Denmark, a number of practical and regulatory considerations must be addressed.

1.2.2 Regulations

The Civil Aviation Administration - Denmark has a number of regulations regarding recreational model plane flying and automated unmanned aerial vehicles. The most essential parts of the regulations, contained in article BL 9-4 [48], have been identified as:


• Weight: BL 9-4 §4.2, model planes with a take-off weight of 7-25 kg require special permission and registration of the aircraft. Therefore a maximum weight of 7 kg is required for anyone not possessing this permit.

• Flying height: BL 9-4 §2.2.e, model planes are limited to a maximum altitude of 100 m.

• Line of sight: BL 9-4, a model plane must always be in line of sight (without the use of aids such as binoculars), and if the plane is autonomous, the person operating the plane must always be able to change to manual mode in flight, in order to avert potential collisions.

1.2.3 Practicalities

Apart from the regulatory considerations, a number of other practicalities must be assessed. When selecting an aerial platform, a number of requirements and desired capabilities must be defined. The platform needs to operate outdoors and has to be operable on any regular day, weather-wise. When dealing with small, light-weight aircraft, the main concern before takeoff is the wind.

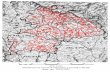

DMI [42] provides an average wind rose spanning 1993-2002, see Figure 1.2. From the wind rose it can be deduced, see Appendix D, that if a UAV can fly in winds up to 10.7 m/s, it can operate 347 days of the year. The wind tends to slow down at night, in the morning and in the evening. Thus, for all practical purposes, a plane that flies stably in 10.7 m/s wind can be used all year in Denmark.

| Wind speed [m/s] | Days a year [%] |
|---|---|
| 0.3 - 5.4 | 58 |
| 5.5 - 10.7 | 37 |
| > 10.8 | 5 |

Figure 1.2: DMI wind rose [42]. Average wind speeds and direction in the period from 1993 to 2002 at Odense Airport.
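The figure of 347 flyable days follows from the two lowest wind classes in Figure 1.2. A small sketch of the arithmetic, assuming a 365 day year and rounding to whole days (the variable names are ours):

```python
# Share of the year in each wind speed class, as listed in Figure 1.2 [%].
wind_classes = {
    "0.3 - 5.4 m/s": 58,
    "5.5 - 10.7 m/s": 37,
    "> 10.8 m/s": 5,
}

# A UAV that handles winds up to 10.7 m/s can fly in the two lowest classes.
flyable_percent = wind_classes["0.3 - 5.4 m/s"] + wind_classes["5.5 - 10.7 m/s"]

# Assumption: a 365 day year, rounded to the nearest whole day.
flyable_days = round(365 * flyable_percent / 100)
```

With 95% of the year below the limit, 365 · 0.95 = 346.75, which rounds to 347 days.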

It is desirable to use electrical propulsion rather than fuel driven motors, as electric motors require less maintenance and are cleaner, quieter and simpler to work with and control. As the plane will be used as a tool carrier, it has to be able to carry extra weight. It is estimated that a typical tool, like a camera, would weigh up to 1 kg; this would be the minimum extra weight capability of the plane. It is desirable to have as low a stall speed as possible. A low stall speed is essential for slow flight and allows for added weight while still maintaining realistic take-off and landing speeds.

When adding weight to the plane, the flight dynamics will change. The added mass increases the inertia of the system, and as a result the aircraft's step response to control input will be slower. As seen in (1.1), the lift force of a wing, L, is dependent on wing area, A, travelling speed, v, lift coefficient, C_L, and air density, ρ:

$$
\frac{L}{A} = \frac{1}{2} \cdot v^2 \cdot \rho \cdot C_L \qquad (1.1)
$$

Thus, as an effect of added weight, the take-off and landing speed will increase in proportion to the square root of the total weight, as seen in (1.2) through (1.3), where W_S denotes wing loading:

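$$
\begin{aligned}
v^2 &= \left. \frac{2 \cdot L}{A \cdot \rho \cdot C_L} \right|_{\frac{L}{A} = \frac{M \cdot g}{A} = W_S \cdot g} && (1.2) \\
    &= \frac{2 \cdot g \cdot W_S}{\rho \cdot C_L} && (1.3)
\end{aligned}
$$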
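To illustrate (1.3), the sketch below computes the airspeed at which lift equals weight and compares an airframe with and without a 1 kg tool. The airframe numbers (3 kg, 0.5 m² wing area, lift coefficient 1.0) and the sea level air density of 1.225 kg/m³ are assumptions made for this example only, not properties of the prototype.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def min_airspeed(mass_kg, wing_area_m2, c_l, rho=1.225):
    """Airspeed [m/s] at which lift balances weight, following (1.3)."""
    wing_loading = mass_kg / wing_area_m2  # W_S = M / A [kg/m^2]
    return math.sqrt(2.0 * G * wing_loading / (rho * c_l))


# Hypothetical airframe, with and without a 1 kg tool.
v_empty = min_airspeed(3.0, 0.5, 1.0)
v_loaded = min_airspeed(4.0, 0.5, 1.0)
speed_ratio = v_loaded / v_empty  # equals sqrt(4 / 3), independent of A, rho and C_L
```

Under these assumptions the unloaded airframe needs just under 10 m/s, and adding one third to the mass raises the required speed by a factor of the square root of 4/3, i.e. roughly 15%.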

When dealing with UAVs a number of issues must be dealt with. The foremost challenge is that a fixed wing platform must be in motion in order to maintain lift. Thus, stopping in the air in case of an unforeseen event is clearly not an option. As a result it must be ensured that a pilot or operator is always ultimately in control of the airframe; even when it is flying autonomously, he or she must be ready to manually take over the flight.

The fact that the platform is airborne also introduces a number of challenges in the development phase. First of all, it is non-trivial to do a flight test. A number of conditions must be met: 1) one must have a capable pilot, 2) the weather must be right, 3) one must have an airspace to perform the test in. As debugging directly on the flying platform is not feasible, every piece of data that might be, or become, relevant in later analysis must be logged or transmitted wirelessly to the ground during flights.

That being said, the domain of autonomous flight is an inspiring area to do development in, and the many challenges inspire long working hours.

1.3 System requirements & Architecture

Based on the above analysis, it is concluded that a modular system capable of interfacing with a range of tools would enable future developers to focus on the task, and thus the payload, at hand, rather than on building the vehicle carrying it. Furthermore, by providing bi-directional platform to tool interfacing, new possibilities could arise. In order to provide such a system, it is of the utmost importance that basic building blocks like state estimation, autopilot and telemetry link are in place. Providing these fundamental subsystems will be the main focus of this thesis.

In order to provide modularity, it was chosen to take the abstraction to a higher level than seen in any of the existing UAV systems. This has been done by building the system using ROS[58] as middleware. By utilizing ROS, a well established open source community with a broad code and sensor base is drawn upon. In order to accommodate ROS, it is necessary to provide a hardware platform capable of running the system.

Figure 1.3 depicts the suggested structure of such a system. As seen in this block diagram, a simulator is capable of replacing the entire airframe. This is done in order to ease development and debugging, which is necessary as flight tests are not trivially conducted, as concluded in Subsection 1.2.3.

[Block diagram. Blocks: Autopilot (Chapter 2), Control (Chapter 2), State Estimator (Chapter 3), Flight Plan (Chapter 2), Tool (Chapter 2), Telemetry (Chapter 4), Ground Control Station (Chapter 4), Simulator (Chapter 4), Airframe (Chapter 5), Sensors (Chapter 3), Actuators (Chapter 5).]

Figure 1.3: Overview of information flow in the proposed system structure. It should be noted that chapter references are to the main discussion of the topic.

The remainder of this thesis is structured as follows. In Chapter 2, 'Auto Pilot', the subject of autonomous flight is discussed along with the processing needs of the flight computer. Chapter 3, 'State Feedback', surveys sensors, methods and algorithms for determining quantities such as position and orientation of the moving UAV. Various tools developed for aiding debugging and monitoring are discussed in Chapter 4, 'Visualization and Simulation'. Chapter 5, 'Implementation', condenses all these aspects into a flying prototype of the proposed system and evaluates some overall performance aspects. Finally Chapter 6, 'Perspective', debriefs this thesis and outlines the future of the project.


Chapter 2 Auto Pilot

The overall purpose of the autopilot is to enable automated, purposeful flight. The purpose of the flight depends on the payload and task at hand, but will typically be some sort of overflight of a predefined area. When dealing with aircraft control, a number of standard aviation terms are used. The most important ones are listed in the Nomenclature.

Different approaches to autopilot design are found in the literature. Commonly, they are built in some sort of hierarchy, with the lowest layer stabilising the roll axis, pitch axis and velocity of the airframe. On top of this, methods for controlling altitude and heading can be built. The higher abstraction layers utilize these abilities to execute a plan, in one format or another. The plan is typically composed of a set of waypoints, i.e. 'dots' which the airframe must 'connect' during the flight, in a specified order. As fixed wing aircraft need velocity to maintain lift, turning on the spot is not an option. Hence, some sort of smoothing must be applied when interpolating the waypoints, to provide a flyable path.

Although the autopilot is not fully implemented, an overview of the proposed structure is given here and depicted in Figure 2.1.

• The top level is Planning - the act of creating a set of waypoints, i.e. a list of chronologically ordered places, velocities, measurements and other conditions which must be satisfied, one by one. This list will be referred to as the flight plan. As planning involves the operator and can be done pre-flight, it will be done on the Ground Control Station (GCS).

• The intermediate level is the Flight Management System (FMS), which carries out the flight plan on a higher abstraction level, waypoint by waypoint.

• The actual real time control of the airframe is done by a set of controllers, which aim to position the airframe correctly in terms of attitude, altitude, velocity etc., according to the current setpoints given by the FMS. Typically one controller is devoted to each set of control surfaces. Such a controller can work in different modes, depending on what condition the FMS requires it to maintain.

[Block diagram. Blocks: Operator, Planning, Flight Management System (Section 2.2), Tool / Payload (Section 2.2.3), Controllers (Section 2.1), Actuation (Section 5.2.10), Flight Computer (Section 2.3), State Feedback (see Chapter 3). Signal labels: Flight plan; Control modes and setpoints; Control efforts.]

Figure 2.1: Overview of the autopilot sub-system structure. The operator is in control in the planning phase, but is also capable of overriding the control efforts and thus manually controlling the airframe.
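The flight plan described in the list above can be pictured as an ordered list of waypoints that the FMS works through one at a time. The following is a minimal sketch of such a data structure. All class and field names are hypothetical and do not reflect the message formats of the actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Waypoint:
    """One entry in the flight plan (hypothetical fields)."""
    latitude: float                   # [deg]
    longitude: float                  # [deg]
    altitude: float                   # [m]
    airspeed: Optional[float] = None  # desired airspeed [m/s]; None keeps cruise speed
    trigger_tool: bool = False        # request a payload sample at this waypoint


@dataclass
class FlightPlan:
    """Ordered waypoints, satisfied one by one."""
    waypoints: List[Waypoint] = field(default_factory=list)
    current: int = 0  # index of the waypoint currently being flown towards

    def active(self) -> Optional[Waypoint]:
        """Return the waypoint being pursued, or None when the plan is complete."""
        if self.current < len(self.waypoints):
            return self.waypoints[self.current]
        return None

    def advance(self) -> None:
        """Mark the active waypoint as satisfied and move on to the next one."""
        if self.current < len(self.waypoints):
            self.current += 1
```

In this picture the GCS would build the `FlightPlan` before take-off, while the FMS repeatedly reads `active()` to derive setpoints for the controllers and calls `advance()` once the conditions of the waypoint are met.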

As pointed out by Low [47], the separation between the FMS and the controllers introduces an abstraction which allows the FMS to control different types of airframes without modification. The controllers must be tuned to the airframe in question, but the way of controlling it is in essence the same. It should be noted that key parameters of the airframe and its controllers, such as turn radius and cruising speed, need to be known in the planning phase, as they vary with the type and proportions of the airframe.

The FMS also communicates with the payload tool. This communication provides the tool with the information required for sampling data points when a set of conditions is met (i.e. when the airframe is at the appropriate position). It is likewise possible to change flight parameters during flight, based on observations acquired by the tool. For example, if the tool misses a data sample, it can request the FMS to fly back to the point in question for another attempt. Other interesting scenarios could be following a 'rabbit' or path monitored by the tool, or expanding the search area based on the tool's observations.

The final layer of the system is actuation. In this layer, the servos are signalled to position the control surfaces at the deflection commanded by the controllers. Here too the operator can get involved, as he or she must at all times be able to disengage the autopilot system and take over control via a remote control.

The technical details presented in the remainder of this chapter are structured bottom-up: Section 2.1 describes the low level controllers, and Section 2.2 describes how the low level controllers can be utilized by the FMS when executing a flight plan. Section 2.3 evaluates the need for computational power and other constraints associated with the UAV domain and finally chooses a platform. Section 5.2.10 describes the subsystem for interacting with the R/C components, which performs the actual execution of the flight.

It should be noted that throughout this chapter, the state of the airframe is assumed to be known and correct. Chapter 3, 'State Feedback', describes methods for acquiring this state.

[Block diagram. The Setpoint (desired value) and the Feedback (actual value) each pass a low pass filter and are subtracted to form the error e(t). The terms Kp · e(t), Ki · ∫e(t)dt and Kd · d/dt e(t) are summed and pass a third low pass filter to form the Output to the system.]

Figure 2.2: Block diagram of a basic PID loop with bandwidth limited inputs and output. Please note that implementations might deviate from the depicted version.

2.1 Low level control loops

The low level controllers serve to control flight parameters such as roll, pitch, yaw and velocity. They do so by continuously controlling the deflection of the control surfaces. The output to these surfaces is based on the requirements from the FMS and the feedback from the state estimator.

2.1.1 PID controller

The lowest level of control is stabilisation of the roll and pitch axes of the airframe. For this form of control, the PID scheme is applicable, as it is generally sufficient for controlling most types of systems and can be tuned with relative ease. Figure 2.2 depicts such a controller. It takes two input signals, referred to as setpoint and feedback. The setpoint is the desired value of the plant under control. The actual value of the plant must be measured and returned as feedback. By subtracting the current plant value (feedback) from the desired value (setpoint), the error is found. The controller outputs a single value, output, which must be connected to an actuator or subsystem controlling the plant. The output of the controller is the weighted sum of the current error, the integral of the error and the derivative of the error. The applied weights are referred to as Kp, Ki and Kd in the ideal form, for proportional, integral and derivative respectively.
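The weighted sum described above can be sketched in discrete time as follows. This is a minimal illustration that assumes a fixed sample time `dt`, a rectangular approximation of the integral and a backward difference for the derivative. It omits the low pass filters of Figure 2.2, and the class name is ours rather than that of the implemented controller.

```python
class PID:
    """Discrete PID: output = Kp*e + Ki*integral(e) + Kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0         # running integral of the error
        self.previous_error = None  # error from the previous update

    def update(self, setpoint, feedback, dt):
        error = setpoint - feedback
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Example with arbitrary gains and a 10 Hz update rate.
pid = PID(kp=2.0, ki=0.5, kd=0.1)
first_output = pid.update(setpoint=1.0, feedback=0.0, dt=0.1)
second_output = pid.update(setpoint=1.0, feedback=0.5, dt=0.1)
```

Note how the derivative term counteracts the proportional term in the second update, since the error is shrinking.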

Various schemes exist for tuning the three parameters of a PID controller without modelling the system under control. Most notable is Ziegler-Nichols [72]. This method is a step-by-step recipe for tuning a PID controller for an arbitrary system, by applying various gains and inputs and observing the system reaction.

In Figure 2.2, the PID controller is depicted with bandwidth limiting low pass filters on setpoint, feedback and output. These are not strictly necessary, but can be applied to reduce the bandwidths, in order to filter out noise in feedback and setpoint. Such a filter can likewise limit the rate of change of the output. If the cut-off frequencies of these filters are not too low, they will not compromise the functionality of the PID controller, as it does not make any sense to control the airframe any faster than its natural frequency. Moving average filtering can also be applied to e.g. the setpoint to reshape a step input into a linear ramp.
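As a rough illustration of the filtering described above, the following Python sketch shows a first-order low pass filter and a moving average filter that reshapes a step on the setpoint into a linear ramp. The class names, the smoothing factor `alpha` and the window length are illustrative assumptions and are not taken from the implemented system.

```python
from collections import deque


class LowPass:
    """First-order (exponential) low pass filter; 0 < alpha <= 1 (assumed form)."""

    def __init__(self, alpha, initial=0.0):
        self.alpha = alpha
        self.y = initial

    def update(self, x):
        # Move the output a fraction alpha of the way towards the input.
        self.y += self.alpha * (x - self.y)
        return self.y


class MovingAverage:
    """Moving average over the last `window` samples, pre-filled with `initial`."""

    def __init__(self, window, initial=0.0):
        self.buf = deque([initial] * window, maxlen=window)

    def update(self, x):
        self.buf.append(x)
        return sum(self.buf) / len(self.buf)


# A unit step on the setpoint leaves a 4-sample moving average as a ramp.
ma = MovingAverage(window=4)
ramp = [ma.update(1.0) for _ in range(6)]
```

With a window of four samples, the step reaches its final value after four updates, rising by a quarter of the step per sample.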

Integral wind-up is the situation where the controller aims to drive the output to an unreachable setpoint, such that the integrator term never gets ’satisfied’ and keeps integrating. This scenario will make the output stick to the upper limit, even when the setpoint is back in the reachable region. Integral wind-up is handled by limiting the value of the integrator.
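A minimal sketch of an ideal-form PID controller with a limited integrator could look as follows. The class layout, the symmetric limits `i_limit` and `out_limit` and the backward-difference derivative are assumptions made for illustration; as noted for Figure 2.2, an actual implementation might deviate from this.

```python
class PID:
    """Ideal-form PID controller with a clamped integrator and output (sketch)."""

    def __init__(self, kp, ki, kd, i_limit, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.i_limit = i_limit      # bound on the integrated error (assumed symmetric)
        self.out_limit = out_limit  # bound on the controller output (assumed symmetric)
        self.integral = 0.0
        self.prev_error = None

    @staticmethod
    def _clamp(value, limit):
        return max(-limit, min(limit, value))

    def update(self, setpoint, feedback, dt):
        error = setpoint - feedback
        # Anti wind-up: the integrated error is limited.
        self.integral = self._clamp(self.integral + error * dt, self.i_limit)
        # No derivative action on the very first sample.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return self._clamp(output, self.out_limit)
```

Because the integrator saturates at `i_limit`, it needs only a bounded time to unwind once the setpoint returns to the reachable region.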

By nesting PID controllers, more complex controller structures can be built. This is done by letting one controller output the setpoint for the next. This way, e.g. the heading of the airframe can be controlled by outputting the desired roll angle to another controller. When dealing with nested controller loops, the controller being controlled by another is referred to as the inner control loop and the controller controlling it as the outer control loop. It should be noted that the innermost controller should always be the fastest. Controlling a slow controller with a fast one will result in an unstable system.
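The nesting can be sketched as below, with an outer heading loop that runs less often than the inner roll loop. For brevity both loops are proportional only. The gains, limits, the update ratio `outer_every` and all function names are hypothetical values chosen for illustration.

```python
import math


def clamp(value, limit):
    return max(-limit, min(limit, value))


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def outer_heading_loop(heading_d, heading_a, kp_outer, max_roll):
    """Slow outer loop: heading error -> limited roll setpoint [rad]."""
    return clamp(kp_outer * wrap_angle(heading_d - heading_a), max_roll)


def inner_roll_loop(roll_d, roll_a, kp_inner, max_aileron):
    """Fast inner loop: roll error -> limited aileron command."""
    return clamp(kp_inner * (roll_d - roll_a), max_aileron)


def cascade_step(k, state, heading_d, heading_a, roll_a, outer_every=5):
    """Run the inner loop every step and the outer loop every `outer_every` steps."""
    if k % outer_every == 0:
        state["roll_d"] = outer_heading_loop(
            heading_d, heading_a, 1.0, math.radians(30))
    return inner_roll_loop(state["roll_d"], roll_a, 2.0, 1.0)
```

Between outer updates the inner loop keeps tracking the most recent roll setpoint, which is what makes the inner loop the faster of the two.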

2.1.2 Bank and Heading control

The bank angle of the airframe is controlled using a PID loop, as seen in Figure 2.3a on the next page. The feedback is the actual roll angle, φa, and the setpoint is given by outer control loops as the desired roll angle, φd. The output of the controller is connected to the ailerons, with opposite signs for left and right, to induce roll.

An outer control loop can control the heading of the airframe by banking the airframe, under the assumption that a lateral controller is used to maintain the altitude and that the velocity is constant. The turn radius is then:

$$ r = \frac{V_a^2}{g \cdot \tan(\phi)} \tag{2.1} $$

Where Va is the velocity through air, r is the turn radius and g is the gravitational acceleration. Based on this, the angular velocity around the yaw axis, $\dot{\psi}$ (i.e. the rate of turning), can be controlled, as:

$$ \dot{\psi} \cdot r = V_a \Leftrightarrow \dot{\psi} = \frac{V_a}{r} \tag{2.2} $$

$$ \dot{\psi} = \frac{V_a}{\frac{V_a^2}{g \cdot \tan(\phi)}} = \frac{g \cdot \tan(\phi)}{V_a} \tag{2.3} $$

When using a PID loop for controlling the heading, the output must be limited. If the airframe rolls too much, the lift is compromised, such that the lateral controller is unable to maintain the altitude.
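To give a feeling for the magnitudes involved, equations (2.1) and (2.3) can be evaluated numerically. The sketch below also inverts (2.3) to obtain a bank angle for a requested turn rate and limits it. The function names and the value g = 9.81 m/s² are assumptions for illustration.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2] (assumed value)


def turn_radius(v_a, phi):
    """Turn radius r [m] from (2.1) for speed v_a [m/s] and bank angle phi [rad]."""
    return v_a ** 2 / (G * math.tan(phi))


def turn_rate(v_a, phi):
    """Yaw rate [rad/s] from (2.3)."""
    return G * math.tan(phi) / v_a


def bank_for_turn_rate(v_a, psi_dot, max_bank):
    """Invert (2.3) and limit the resulting bank angle to +/- max_bank [rad]."""
    phi = math.atan(psi_dot * v_a / G)
    return max(-max_bank, min(max_bank, phi))
```

At 15 m/s and a bank angle of 30°, these expressions give a turn radius of about 39.7 m and a turn rate of about 0.38 rad/s.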

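[Figure 2.3 block diagrams, each built from PID blocks with setpoint (SP) and feedback (FB) inputs acting on the airframe:
(a) A Heading PID (setpoint χd, feedback χa) outputs the desired roll angle φd to a Roll PID (feedback φa), which drives the ailerons.
(b) An Altitude PID (setpoint Altd, feedback Alta) outputs the desired pitch angle θd to a Pitch PID (feedback θa), which drives the elevator.
(c) A Turn Coordinator PID (setpoint 0, feedback αy) drives the rudder.
(d) A Velocity PID (setpoint Vd, feedback Va) drives the throttle.]

(a) Longitudinal low level controller loops.

(b) Lateral low level controller loops.

(c) Vertical low level controller / turn coordinator.

(d) Throttle controller / Cruise control.

Figure 2.3: Low level PID controllers.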

By controlling the yaw of the airframe, the course over ground can be controlled, as the two headings covary:

χ = ψ + β (2.4)

Where χ is the course over ground and β is the side slip angle, due to the wind component. This time and heading varying offset can be trimmed away by the integral term of the controller.

2.1.3 Climb and Altitude control

The lateral controller, depicted in Figure 2.3b on the preceding page, is similar to the longitudinal one: the inner PID loop controls the pitch of the airframe by outputting the elevator control effort. The feedback is the current pitch angle, θa, and the setpoint is given by an outer control loop as the desired pitch angle, θd.

An outer loop delivers the setpoint for the inner loop, in order to maintain a given altitude. The coupling between pitch and altitude is more intuitive, compared to φ → ψ, as:

Alt ≈ Va · cos(γ) · cos(φ) (2.5)

Where the course climb angle, γ, is given by the pitch angle, θ, and the angle of attack, α:

γ = θ − α (2.6)

Strictly speaking, (2.5) is an approximation, as the wind is neglected. Please refer to Figure 3.2b on page 29. However, this approximation is viable for altitude control purposes.

Similarly to the longitudinal controller, the output of the outer loop must be limited, as it is undesirable to climb or descend too steeply, at least under normal circumstances.

2.1.4 Miscellaneous control

The vertical controller outputs the rudder control effort, see Figure 2.3c on the preceding page. This controller will by default maintain the y-acceleration of the airframe at zero [38], such that coordinated turns are performed. As the servo used to control the rudder also controls the steering wheel of the airframe, a special controller must be used to handle take-off, landing and taxiing situations.

Figure 2.3d on the previous page depicts a simple PID controller for maintaining the airframe’s velocity, referred to as throttle or cruise control. It should be noted that either the indicated airspeed, Vi, or the true airspeed, Va, can be utilized as feedback for this controller. By using the true airspeed as feedback, time-of-flight and ground speed can be controlled. But if the airframe experiences strong tailwind, the velocity relative to the air might get too small to produce proper lift, possibly resulting in stall situations. A solution could be to use the true airspeed by default and incorporate a safety mechanism based on the indicated airspeed.

Figure 2.4: Examples of circle fitting trajectory smoothing. Reprinted from [17, Fig. 13].

2.2 Flight Management System

The foremost task of the Flight Management System (FMS) is to ensure that the flight is executed correctly, by keeping the flight plan and its state of execution up-to-date. This includes a set of subtasks:

1. Interpolate the flight plan, into a smooth, flyable trajectory.

2. Ensure that the low level controllers do in fact fly the trajectory.

3. Communicate state of plan and airframe to the tool and receive updates from it.

2.2.1 Trajectory Smoothing

Trajectory smoothing is the task of interpolating the waypoints from the flight plan into a continuous, flyable trajectory. Normally, it is desirable to fly as straight as possible in between waypoints, as these will be the lines where data must be sampled by the tool. Thus, flying straight lines in between the waypoints and turning in minimum circles in the line segment transitions intuitively seems like a good idea. However, one must carefully consider how the circle segments are used: in Figure 2.4, Anderson et al. [17] illustrate three different approaches to this, as the FMS can either:

a) Fly the minimum distance path (left path)

b) Force the trajectory through the waypoint (center path)

c) Fly the equal length distance (right path)

(a) Nested PID loops can be used for trajectory tracking, by minimising the distance from the desired path. Partial reprint from [38, Fig. 17].

(b) Non-linear approach to trajectory tracking. The method aims to intersect the desired path a fixed distance ahead of the airframe. Reprinted from [57, Figure 1].

(c) A vector field can be described that guides the UAV towards the desired trajectory and gradually towards the direction of it. Partial reprint from [54, Fig. 1].

Figure 2.5: Reprinted figures of different approaches to trajectory tracking.

If the purpose of the flight is to gather data along the line segments, option b) seems advantageous, as it maximizes the length of the line segments. If, on the other hand, the purpose is to get from A to B fast, option a) is the way to go. Finally, option c) is advantageous if the flight time is important, as the flown distance is easily calculated from the sum of waypoint distances. The information on which scheme to use should be part of the flight plan description, to ensure that the planner and FMS share their interpretation of the trajectory.

2.2.2 Trajectory Tracking

A number of different approaches to trajectory tracking can be found in the literature. A few examples are picked and reviewed here. Commonly, they rely on the longitudinal low level controller, but differ in the way the desired heading is produced.

Ippolito [38] proposes a controller based on nested PID controllers. This controller essentially extends the longitudinal controller depicted in Figure 2.3a on page 18, by letting an additional PID loop control the desired heading. The controller has two modes, as it can either track a line, by controlling the desired heading from the orthogonal distance between the line and the airframe, or track a circle segment, by comparing the distance from the circle center to the desired circle radius. The controller is depicted in Figure 2.5a on the preceding page. This scheme is temptingly simple, but not quite flawless. As the orthogonal distance between the airframe and the trajectory is used, the controller can only work reactively. This will inevitably result in the airframe overshooting the trajectory, before any error will be present for the PID to react on.

Park et al. [57] propose a non-linear controller, which follows an imaginary rabbit a fixed distance ahead of the airframe, on the trajectory. A conceptual illustration is given in Figure 2.5b on the previous page. The turn radius is continuously set such that the airframe will intercept the trajectory at that very distance. Thus, the airframe will smoothly approach the trajectory and adjust the turn radius such that the curve will be tracked.
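The geometry behind such a look-ahead scheme can be sketched as follows. A circle that is tangent to the current velocity vector and passes through a reference point at distance L1 has radius L1 / (2 · sin η), where η is the angle between the velocity vector and the line of sight to the reference point. The function name, argument layout and sign convention below are assumptions for illustration and are not taken from [57].

```python
import math


def lookahead_turn(pos, course, ref_point, speed):
    """Return (turn_radius, lateral_acceleration) steering towards ref_point.

    pos, ref_point: (north, east) in metres; course in radians from north;
    speed in m/s. Positive values mean a turn to the right (clockwise).
    """
    d_n = ref_point[0] - pos[0]
    d_e = ref_point[1] - pos[1]
    l1 = math.hypot(d_n, d_e)        # distance to the reference point
    bearing = math.atan2(d_e, d_n)   # direction of the line of sight
    # Angle from the velocity vector to the line of sight, wrapped to [-pi, pi).
    eta = (bearing - course + math.pi) % (2.0 * math.pi) - math.pi
    a_lat = 2.0 * speed ** 2 / l1 * math.sin(eta)
    radius = math.inf if a_lat == 0.0 else speed ** 2 / a_lat
    return radius, a_lat
```

As an example, an airframe at the origin flying north with the reference point at (10, 10) is commanded onto a right-hand circle of radius 10 m, which indeed passes through both points.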

Nelson et al. [54] propose a vector field based navigation and control scheme for fixed wing UAVs. The general idea of this scheme is that various ’interest’ maps can be combined. E.g. the UAV can be directed away from densely populated areas, towards unexplored areas etc. Thus this scheme is not necessarily classified strictly as a trajectory tracker, but is interesting indeed. In Figure 2.5c on the preceding page such a vector field is depicted for a straight line segment of the trajectory.
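One way to express a vector field for a straight line segment is sketched below: far from the line the desired course points towards it at an approach angle, and the field turns gradually into the line direction as the cross-track error vanishes. The arctangent shaping, the approach angle `chi_inf`, the gain `k` and the function names are assumptions chosen for illustration; the field in [54] may be parametrised differently. The signed cross-track error is the same orthogonal distance used by the nested PID approach above.

```python
import math


def cross_track_error(pos, line_point, line_course):
    """Signed orthogonal distance [m] from the line; positive to the right of it."""
    d_n = pos[0] - line_point[0]
    d_e = pos[1] - line_point[1]
    return -math.sin(line_course) * d_n + math.cos(line_course) * d_e


def vector_field_course(pos, line_point, line_course,
                        chi_inf=math.pi / 2, k=0.05):
    """Desired course [rad]: approach angle chi_inf far away, line_course on the line."""
    e = cross_track_error(pos, line_point, line_course)
    return line_course - chi_inf * (2.0 / math.pi) * math.atan(k * e)
```

For a line heading north through the origin, a UAV 20 m to the west gets a desired course of 45° (north-east) with the values above, while a UAV on the line is simply directed north.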

2.2.3 Tool Interaction

Beyond the capabilities of a regular autopilot in a UAV, the autopilot of an ATC must be coupled with the tool it is carrying. This coupling is bidirectional, as the tool affects the dynamics of the airplane, while the autopilot provides information about the current state of the airframe. A use-case could be a camera tool: mounting a camera under the belly of the plane increases its weight and thus changes its flight characteristics, i.e. the take-off and landing speeds are increased, as well as the step response time to control input. Therefore the tool must provide information about its size and weight to the autopilot, such that these parameters can be accounted for. Furthermore, the tool needs information from the autopilot. Assume the camera is mounted in a gimbal and the mission goal is to photograph a specific location on the ground from 360° around. The tool would then need to know where to point the gimbal, and when to take a photo. Should the tool fail, it could inform the autopilot, such that a second circle could be flown. The publish/subscribe [30] model is ideal for accommodating this sort of feature, as the tool can just subscribe to this information. The autopilot could likewise subscribe to the information provided by the tool.
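The bidirectional coupling can be illustrated with a very small in-process publish/subscribe bus. This is only a stand-in for the real message transport; the class `MessageBus`, the topic names and the message contents are hypothetical and chosen purely for illustration.

```python
from collections import defaultdict


class MessageBus:
    """Tiny in-process publish/subscribe bus (illustrative stand-in only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered for the topic.
        for callback in self._subscribers[topic]:
            callback(message)


bus = MessageBus()
photos = []    # positions at which the camera tool took a picture
failures = []  # failure reports received by the autopilot

# The tool subscribes to autopilot state; the autopilot subscribes to tool status.
bus.subscribe("autopilot/state", lambda state: photos.append(state["position"]))
bus.subscribe("tool/status", lambda status: failures.append(status))

bus.publish("autopilot/state", {"position": (10.0, 20.0), "alt": 100.0})
bus.publish("tool/status", "capture_failed")
```

Neither side needs to know who consumes its messages, which is what allows a tool to be swapped without changing the autopilot.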

Various tools can be contemplated, and a theoretical classification has been made:

1) Passive tool: Works independently of the airframe state. Ex. a camera filming the entire flight. Requires no autopilot support or interfacing.

2) Interactive tool: Executes commands sent from the FMS. Ex. take picture, turn gimbal here. Requires the tool to subscribe to the autopilot commands.

3) Controlling tool: Tool provides autopilot control commands. Ex. tool tracks a red car and provides target coordinates to the autopilot.

The various tool categories require different levels of support. 1) Passive requires little implementation in the autopilot software; as long as its dimensions and weight are known, it is just a matter of fixating it in the tool mount. 2) Interactive requires some support. Typical message types must be predefined, such that the tool can subscribe to them. 3) Controlling requires a more complete integration, as it must be ensured that the commands sent from the tool do not result in hazardous control, i.e. the autopilot should supply failsafe mechanisms.

At this stage of the project, tool interfacing has not been implemented. It has however played a major role in the design and architecture of both hardware and software, such that interfaces are provided and the software is module based.

2.3 Flight Computer

At the heart of the ATC lies the main computer. Its tasks include, but are not limited to, state estimation, control, tool interfacing and telemetry linkage. In those regards a number of requirements have to be met.

The ATC being intended as a development platform outlines the requirements for the main computer. It is desirable to have ’enough’ processing power to try out new ideas, without compromising the main task of keeping the platform airborne. It is likewise desired to use the publish/subscribe model, as described earlier. This inherently includes some amount of computational message transport overhead, which must be accommodated. The performance requirements are similar to those of a modern desktop PC. Due to space and power constraints, carrying a desktop PC around is not an option. Furthermore, a variety of hardware interfaces must be available, including SPI, I2C, UART, USB and Ethernet. High performance embedded computers have these interfaces.

As is seen in for example the Paparazzi project¹, a dedicated processor like the STM32/LPC214x can handle the inertial navigational system. This approach yields a fine solution for a dedicated autopilot. However, it limits expandability and modularity, as all resources are dedicated to keeping the airframe in the air. Thus, there are very limited options for adding extra sensors and tools.

Another approach is to use a processor like ARM, and run a Linux kernel on it. There are various distributors of this type of processor board. Most notable are the Beagleboard and Gumstix. A comparison of the features offered by the two is listed in Table 2.1 on the next page.

¹ http://paparazzi.enac.fr/wiki/Umarimv10

Feature                        Beagleboard xM         Gumstix FE

Dimensions                     78.74 x 76.2 mm        58 x 17 x 4.2 mm
Weight                         37 g                   5.6 g
Processor                      DM3730                 OMAP3530
Clock                          1000 MHz               600 MHz
RAM                            512 MB                 512 MB
Flash                          0 MB                   512 MB
Power                          5 V USB or DC power    3.3 V DC
Video                          DVI/LCD/S-Video        DVI/LCD
DSP                            800 MHz                220 MHz
JTAG                                         ✓
UART                                         ✓
USB OTG                                      ✓
USB HOST                                     ✓
Ethernet                       ✓                      ✗
Audio I/O                                    ✓
Camera connector                             ✓
microSD                                      ✓
Bluetooth                      ✗                      ✓
Wi-Fi                          ✗                      ✓
Expansion boards               ✗                      ✓
I2C - SPI - ADC - PWM - GPIO                 ✓

Table 2.1: Comparison of BeagleBoard xM and Gumstix FE

BeagleBoard has the advantage of integrated Ethernet. However, it comes at the price of a rather large motherboard type PCB, similar to that of a standalone PC, whereas the Gumstix provides WiFi and a processor-only board, meant for use in expansion boards. Thus the design of the Gumstix is more in line with the design criteria of this system, allowing for module based hardware.

Gumstix has been deemed the optimal compromise between size, interfaces and computational power. Gumstix offers small self-contained computers, capable of running higher abstraction operating systems, such as Linux. The form factor allows for easy installation in custom designed boards. Furthermore, Gumstix has a vibrant open source based user community, using OpenEmbedded² and BitBake. This allows for quick access to support on numerous hardware and software related issues.

2.4 Conclusion

In this Chapter, various aspects of automated flight have been examined, with emphasis on modularity and independence of specific fixed wing airframes. By splitting the executive autopilot into a Flight Management System and a controller layer, airframe specific tunings only need to be handled at the lowest layer. Abstract tasks, such as tool interfacing, trajectory smoothing and flight control can be handled by the generic Flight Management System. Although such a system has not been implemented, the lower control layer it relies on has been. The PID controller scheme was found useful for this task, which inherently means that well known methods can be used to tune the controller for a specific airframe. In Section 5.6.2 on page 65 it will be verified by field tests that the implemented parts of the controller are indeed capable of controlling the airframe. A Gumstix has been chosen as the main flight computer, such that interfacing with various tools can be done with minor effort, utilizing Linux and ROS. The lack of realtime capabilities in the Linux operating system is dealt with by letting a small coprocessor interface with the R/C components. This coprocessor also makes sure that the operator can reclaim manual control of the airframe in case of an operating system crash.

² http://www.openembedded.org/

Chapter 3

State Feedback

To enable control of the airframe, state feedback is essential. Together with setpoints, this feedback will enable real time control of the airframe, as discussed in Chapter 2. Several state variables have to be monitored, to enable low level as well as higher level control. The airframe’s bank, climb and heading need to be known. Rotating from world to plane body frame is done using Euler angles, in the order Z-Y-X. This de facto standard intuitively relates ψ to heading, θ to elevation and φ to bank, as seen in Figure 3.1b on page 28. Combined, these three will be referred to as the pose of the airframe. A subset of the pose, excluding heading information, will be referred to as the attitude of the airframe.

The airframe coordinate frame is positioned (xa, ya, za) = Nose, Starboard, Down. The global coordinate frame used is Universal Transverse Mercator (UTM). The Cartesian UTM coordinate system is chosen over the spherical latitude / longitude system, as it eases calculation. The UTM frame is positioned (x0, y0, z0) = North, East, Down. However, as it is unintuitive that negative movement on the z0-axis will map to positive climb, the altitude is referred to as positive above ground or sea level, as seen in Figure 3.1a on page 28. This figure also shows the relation of the position of the airframe, (Pn, Pe), to the global UTM frame. It is assumed that the UTM frame is a valid inertial reference frame. Although this is not exactly true, due to the rotation of the Earth and the solar system, the massive scale of these motions makes their impact on our comparatively small and fast moving system negligible, and they are thus neglected.

It is vital to know the velocity of the airframe for control purposes, but also for attitude estimation, as will be discussed in Section 3.2 on page 33. In aeronautics, velocity is a more complicated issue compared to ground based vehicles, as a total of three different velocities must be considered:

1. The Indicated airspeed, Vi, is the velocity of the airframe with respect to the surrounding air.

2. The True airspeed, Va, is the total 3D velocity of the airframe, with respect to the inertial reference frame. On a completely windless day, Va and Vi are identical.

3. The Speed over ground or Course speed, Vg, is the total 2D (x-y) velocity of the airframe, with respect to the inertial reference frame.

Please refer to Figures 3.2a and 3.2b on page 29 for a graphical visualisation of the different velocities and their relations. Especially Vi and Va must be evaluated separately, as Vi is important with respect to flight dynamics (e.g. stall speed, control surface response) and Va with respect to inertial measurements.

For control purposes, it is convenient to know the direction and magnitude of the wind. E.g. this will allow for better dead reckoning navigation in the case of a lost GPS fix. Likewise, the Flight Management System will be capable of controlling the course heading, χ, rather than the airframe heading, ψ.

A complete list of variables used to describe the state of the airframe is given in Table 3.1.

Variable   Description               Type             Unit

Vi         Indicated Airspeed        Direct           [m/s]
Va         True Airspeed             Derived          [m/s]
Vg         Speed over Ground         Direct           [m/s]

φ          Roll/bank angle           Fused            [rad]
θ          Pitch/elevation angle     Fused            [rad]
ψ          Yaw/heading angle         Fused            [rad]

Pn         Position north            Fused / Direct   [m]
Pe         Position east             Fused / Direct   [m]
Alt        Altitude                  Fused / Direct   [m]

Wn         Wind in north direction   Unobservable     [m/s]
We         Wind in east direction    Unobservable     [m/s]
α          Angle of Attack           Unobservable     [rad]
β          Side slip angle           Derived          [rad]

χ          Course heading            Derived          [rad]
γ          Course climb              Derived          [rad]

Table 3.1: Table of state variables that need to be determined. Quantities marked with Direct are directly measurable; Fused are deduced by fusing various measurements. Unobservable quantities are not measurable and must be estimated. Derived quantities are redundant and can be calculated from other variables.

The remainder of this Chapter will be structured as follows. Section 3.1 on the following page will examine sensors applicable for measuring the quantities listed in Table 3.1. In Section 3.2 on page 33, methods and kinematic equations for fusing noisy data and estimating unobserved quantities will be explored. Noise modelling will briefly be discussed in Section 3.3 on page 42. Finally, Section 3.4 on page 43 will assess the overall performance of the implemented methods.

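[Figure 3.1 diagrams: (a) shows the world frame with origin UTMO and axes UTMn = x0, UTMe = y0 and Down = z0 (Up = −z0), the airframe position (Pn, Pe) with Alt = −z, and the body axes xa, ya, za. (b) shows the rotations ψ, θ and φ taking the world axes x0, y0, z0 to the body axes xa, ya, za.]

(a) The airframe positioned in the world frame.

(b) ZYX Euler rotation. First ψ around the world frame z-axis, then θ around the new intermediate y-axis and finally φ around the body frame x-axis.

Figure 3.1: Illustrations of position and orientation variables, relating the body frame to the world frame.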

3.1 Sensors

A number of different sensors must be combined to provide the information required. This section will review the different sensor types capable of measuring the variables. Later sections will review methods for extracting the information from the noisy sensor data streams.

3.1.1 Velocities and course parameters

Vi can be measured using a pitot tube. This sensor is a specialized anemometer and operates by comparing the static air pressure with the total pressure, which includes the dynamic pressure induced by the airframe’s velocity through the surrounding air. A variety of other types of anemometers can be used to measure the airspeed, but the pitot tube is the most commonly used in aeronautics.

If the airframe pose, angle-of-attack and the wind components are known, the true airspeed, Va, can be calculated from the indicated airspeed, Vi:

$$ V_a = \sqrt{(V_i \cdot c_\gamma \cdot c_\psi + W_n)^2 + (V_i \cdot c_\gamma \cdot s_\psi + W_e)^2 + (V_i \cdot s_\gamma)^2} \tag{3.1} $$

where the course climb angle γ = θ − α, and c and s abbreviate the cosine and sine of the subscripted angle. Similarly, the speed over ground, Vg, can be determined from the indicated airspeed:

$$ V_g = \sqrt{(V_i \cdot c_\gamma \cdot c_\psi + W_n)^2 + (V_i \cdot c_\gamma \cdot s_\psi + W_e)^2} \tag{3.2} $$

GPS based sensors can also be used to determine Vg directly, and from this measurement Va can be determined, if the airframe’s attitude is known:

$$ V_a = \frac{V_g}{c_\gamma} \tag{3.3} $$

Which also implies that:

$$ V_g = V_a \cdot c_\gamma \tag{3.4} $$

From the wind components, indicated airspeed and attitude, the course over ground can be determined [19]:

$$ \chi = \tan^{-1}\left(\frac{V_i \cdot s_\psi \cdot c_\gamma + W_e}{V_i \cdot c_\psi \cdot c_\gamma + W_n}\right) \tag{3.5} $$

$$ \chi = \psi - \beta \tag{3.6} $$

Which also implies that β = ψ − χ.
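Equations (3.1), (3.2) and (3.5) share the same north and east velocity components, so they can be evaluated together. The sketch below does this. The function name and argument order are illustrative assumptions, and `atan2` is used in place of the plain inverse tangent so that the course is resolved in the correct quadrant.

```python
import math


def velocities_and_course(v_i, psi, theta, alpha, w_n, w_e):
    """Evaluate (3.1), (3.2) and (3.5); angles in rad, speeds in m/s."""
    gamma = theta - alpha                              # course climb angle
    v_n = v_i * math.cos(gamma) * math.cos(psi) + w_n  # north component
    v_e = v_i * math.cos(gamma) * math.sin(psi) + w_e  # east component
    v_v = v_i * math.sin(gamma)                        # vertical component
    v_g = math.hypot(v_n, v_e)                         # (3.2)
    v_a = math.sqrt(v_n ** 2 + v_e ** 2 + v_v ** 2)    # (3.1)
    chi = math.atan2(v_e, v_n)                         # (3.5)
    return v_a, v_g, chi
```

With no wind, the function returns Va = Vi and χ = ψ, in line with the remark that the two airspeeds are identical on a windless day.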

As the aforementioned velocities refer to the inertial reference frame, they can also be estimated over short time spans by integration of the airframe acceleration, but long term integration will soon lose precision due to various noise sources.

(a) Top view, showing the relation between yaw ψ, wind components Wn and We, side slip angle β, course heading χ, indicated airspeed Vi and true airspeed Va.

(b) Side view, showing the relation between wind W, angle of attack α, course climb angle γ, indicated airspeed Vi, true airspeed Va and speed over ground Vg.

Figure 3.2: Illustration of angles and velocities related to the airframe movement.

Pitot model

The pitot tube is connected to a differential pressure sensor, such that Bernoulli’s equation (3.7) can be applied:

$$ P_t = P_s + \left(\frac{\rho_{air} \cdot V_i^2}{2}\right) \tag{3.7} $$

Where Pt is the total pressure, Ps is the static pressure (the two pressures given by the pitot tube) and ρair is the air density. Thus all variables are accounted for, as the differential pressure sensor measures the dynamic pressure Pd = Pt − Ps. Therefore:

$$ V_i = \sqrt{\frac{2 \cdot P_d}{\rho_{air}}} \tag{3.8} $$

The air density ρair varies with temperature, humidity and pressure, but is in the vicinity of 1.22 kg/m³ under ’normal’ conditions.
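Equation (3.8) translates directly into code. In the sketch below, the function name and the clamping of negative readings to zero are assumptions added for illustration; the clamping is only there because a noisy differential pressure sensor can report slightly negative values near zero airspeed.

```python
import math

RHO_AIR = 1.22  # air density [kg/m^3] under 'normal' conditions


def indicated_airspeed(p_d, rho_air=RHO_AIR):
    """Indicated airspeed [m/s] from dynamic pressure p_d [Pa], equation (3.8)."""
    # Clamp at zero so that sensor noise cannot produce a negative radicand.
    return math.sqrt(2.0 * max(p_d, 0.0) / rho_air)
```

As an example, a dynamic pressure of 244 Pa corresponds to an indicated airspeed of 20 m/s at the default density.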

3.1.2 Position

The position of the airframe within the reference frame can be measured by GPS (Global Positioning System). This system uses global landmarks, in the form of satellites. Satellites with known positions and synchronised clocks frequently transmit radio signals containing timestamps. By receiving timestamps from multiple satellites, the difference in time-of-flight of the radio signals can be determined and thus the position of the receiver. Integrated GPS receivers are available in small modules and can be interfaced via a serial communication line. The update rate is typically 1 to 10 Hz. The GPS outputs the position in spherical longitude/latitude coordinates. These coordinates need to be converted to UTM northing and easting coordinates. This conversion is somewhat complex, but is already an integrated part of FroboMind and thus available without further effort.

3.1.3 Altitude

The altitude of the airframe can be measured using GPS. However, the resolution of the altitude is somewhat limited from this sensor, due to satellite versus receiver geometry. The static air pressure, Ps, can also be used, as the pressure decreases up through the atmosphere [19].

$$ P_s = \rho_{air} \cdot Alt \cdot g \Leftrightarrow Alt = \frac{P_s}{\rho_{air} \cdot g} \tag{3.9} $$

Specialized barometric altimeters are available in IC sized packages. These sensors precisely measure air pressure and temperature, from which the altitude can be calculated. However, as atmospheric pressure varies with the weather, the pressure at sea level must be known to calculate the absolute altitude above sea level. If on the other hand the altitude above the ground station is adequate, a series of measurements can be conducted on the ground before take-off and used as reference. During long flights or changes in weather, the pressure at the surface will vary and this reference will not be accurate.

In certain situations, the barometric altimeter is not precise enough. For instance in take-off and landing situations, the distance to the runway must be known in cm-scale. An ultrasonic range finder is useful for this scale and is mounted on the belly of the airframe. The sensor operates on the time-of-flight principle: an ultrasonic chirp is transmitted and detected when it is reflected by the surface of the runway. The time of travel is proportional to the distance travelled and inversely proportional to the speed of sound. The range of this type of sensor is however limited to approximately 7 meters.
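The two altitude sources described above can be sketched as follows. The first function applies the hydrostatic relation to the pressure difference relative to a reference taken on the ground before take-off, assuming constant air density. The second converts an ultrasonic round-trip time into a distance. Both function names, the reference pressure argument and the speed of sound of 343 m/s are assumptions made for illustration.

```python
RHO_AIR = 1.22          # air density [kg/m^3], assumed constant
G = 9.81                # gravitational acceleration [m/s^2]
SPEED_OF_SOUND = 343.0  # [m/s] in air at roughly 20 degrees C (assumed)


def relative_altitude(p_s, p_ref, rho_air=RHO_AIR):
    """Altitude [m] above the point where p_ref [Pa] was recorded."""
    # Pressure drops with height, so a lower p_s means a higher altitude.
    return (p_ref - p_s) / (rho_air * G)


def ultrasonic_range(echo_time, c=SPEED_OF_SOUND):
    """Distance [m] to the reflecting surface from the round-trip time [s]."""
    # The chirp travels to the surface and back, hence the division by two.
    return c * echo_time / 2.0
```

Under these assumptions, a pressure drop of 120 Pa relative to the ground reference corresponds to roughly 10 m of altitude, and a round-trip time of 10 ms to a distance of about 1.7 m.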


3.1.4 AHRS

The Attitude and Heading Reference System (AHRS for short) serves, as the name suggests, as a reference for the airframe’s orientation in 3D.

Historically, mechanical gimbal-mounted spinning mass gyroscopes have been useful for this type of instrumentation, as they maintain their orientation with reference to the inertial reference frame as the airframe is maneuvered. While these mechanical systems are bulky, expensive and heavy, MEMS (Micro-Electro-Mechanical Systems) technology has introduced much cheaper and smaller rate gyroscopes. These devices are based on vibrating micro structures and are available in IC-sized packages for direct PCB mounting. As the airframe rotates, the vibrating mass tends to maintain its direction of travel and will therefore exert a force (the Coriolis force) on its supports. This force can be measured and is proportional to the rate of rotation [53]. The devices do not sense the orientation of the airframe, but the rate of rotation. Integration of the rate of rotation will yield the actual orientation. The integration must however be done in Euler sequence (3.11), as rotations are not commutative.

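$$ \begin{bmatrix} \dot{\phi}_k \\ \dot{\theta}_k \\ \dot{\psi}_k \end{bmatrix} = \begin{bmatrix} 1 & s_{\phi_k} \cdot t_{\theta_k} & c_{\phi_k} \cdot t_{\theta_k} \\ 0 & c_{\phi_k} & -s_{\phi_k} \\ 0 & \frac{s_{\phi_k}}{c_{\theta_k}} & \frac{c_{\phi_k}}{c_{\theta_k}} \end{bmatrix} \cdot \omega_k \tag{3.10} $$

$$ \begin{bmatrix} \phi_n \\ \theta_n \\ \psi_n \end{bmatrix} = \int_0^n \begin{bmatrix} \dot{\phi} \\ \dot{\theta} \\ \dot{\psi} \end{bmatrix} dt \tag{3.11} $$

$$ \begin{bmatrix} \phi_n \\ \theta_n \\ \psi_n \end{bmatrix} \approx \sum_{k=0}^{n} \begin{bmatrix} \dot{\phi}_k \\ \dot{\theta}_k \\ \dot{\psi}_k \end{bmatrix} \cdot \Delta t_k \tag{3.12} $$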

Where ωk is the angular rotation rate at time k and ∆tk is the sampling period at time k. Sensor imperfections, discrete time resolution, vibrations and integration errors will accumulate over time and eventually render the information useless. Hence, a way of measuring the attitude and heading with absolute reference is necessary and will be reviewed here.
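The discrete integration in (3.10) and (3.12) can be sketched as below. The function names and the representation of the gyroscope data as a sequence of (ω, ∆t) pairs are assumptions for illustration. Note that the mapping in (3.10) is singular at a pitch angle of ±90°, where cos θ is zero.

```python
import math


def euler_rates(phi, theta, omega):
    """Map body rates omega = (p, q, r) [rad/s] to Euler angle rates, as in (3.10)."""
    p, q, r = omega
    s_phi, c_phi = math.sin(phi), math.cos(phi)
    t_theta, c_theta = math.tan(theta), math.cos(theta)
    phi_dot = p + s_phi * t_theta * q + c_phi * t_theta * r
    theta_dot = c_phi * q - s_phi * r
    psi_dot = (s_phi * q + c_phi * r) / c_theta
    return phi_dot, theta_dot, psi_dot


def integrate_gyro(samples, phi=0.0, theta=0.0, psi=0.0):
    """Accumulate (3.12) over an iterable of (omega, dt) samples."""
    for omega, dt in samples:
        phi_dot, theta_dot, psi_dot = euler_rates(phi, theta, omega)
        phi += phi_dot * dt
        theta += theta_dot * dt
        psi += psi_dot * dt
    return phi, theta, psi
```

Since every step uses the previously integrated angles, any bias in ω is carried forward, which is the drift that the absolute references in the following subsections are meant to correct.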

Attitude sensing

The direction of Earth’s gravitational field, relative to the airframe, can be used to determine the attitude (pitch and roll). This acceleration can be measured using MEMS accelerometers which, like rate gyroscopes, are available in IC-sized packages. These devices consist of micro machined masses, suspended by cantilever beams acting as spring elements. Accelerations acting on the mass will deflect the suspending beams and move the proof mass relative to the base. This displacement is typically measured by the changing capacitance between the mass and the end walls. During stable, level flight, the created lift is equal to gravity, such that the acceleration is exactly −g. Maneuvering will change the direction of the airframe, by using the control surfaces to produce centripetal acceleration in the desired direction, according to Newton’s 3rd law of motion. As these sources of acceleration are indistinguishable, the sensor is useless if the centripetal accelerations are not estimated. Additionally, accelerations from various noise sources, such as vibrations and wind gusts, will affect the airframe. These noise sources must be filtered out.

Alternatively, infrared thermopile sensors can be used to detect the horizon and thus the attitude of the airframe. This method works by a pair of thermopiles facing opposite directions in a collinear configuration (see Figure 3.3). As the airframe banks, one thermopile ’sees’ more sky and the other more Earth. As the Earth is relatively warmer than the sky, the attitude angle can be estimated from this temperature difference. However, it is not trivial to extract the exact magnitude of the roll and pitch angles from this information, as the temperatures of Earth and the sky vary. Hilly terrain will likewise distort the information provided by the sensors. Apart from these imperfections, the system is simple and intuitive. Some issues might arise in the implementation. The sensors need to be mounted externally on the airframe, with clear sight of the Earth and sky. This will constrain the mounting possibilities. While the system is attractively simple, it is dismissed on the basis of the aforementioned imperfections.
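For the accelerometer approach, roll and pitch can be recovered from the measured vector when the airframe is not otherwise accelerating. The sketch below assumes body axes x nose, y starboard, z down, and a sensor that reads (0, 0, −g) in level flight, as stated above. The function name and the sign conventions derived from those assumptions are illustrative.

```python
import math


def attitude_from_accel(f_x, f_y, f_z):
    """Roll and pitch [rad] from a body-frame accelerometer reading.

    Assumes unaccelerated flight, body axes x nose, y starboard, z down,
    and a reading of (0, 0, -g) when level.
    """
    phi = math.atan2(-f_y, -f_z)
    theta = math.atan2(f_x, math.hypot(f_y, f_z))
    return phi, theta
```

Any centripetal or vibration-induced acceleration adds directly to the reading and is interpreted as tilt, which is why this estimate cannot be used on its own during maneuvers.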

Figure 3.3: Two thermopiles on opposite sides of the airframe can be used to determine the roll angle. Reprinted from http://paparazzi.enac.fr/wiki/Sensors/IR [14].

Heading sensing

As the gravitational field tends to point to the center of Earth, it does not contribute any information on the airframe’s heading. However, Earth’s magnetic field is useful for determining the airframe’s heading. A digital magnetometer can be used to measure the field strength along three orthogonal axes and thus provide a three dimensional vector pointing towards the North Magnetic Pole. A number of constraints must be considered when using Earth’s magnetic field for heading determination:

1. Earth’s magnetic field is not uniform across the planet.

2. Other (high power) electrical equipment and soft iron masses affect the magnetic field around the airframe.

3. As the airframe pitches and rolls, the sensed field rotates, as it is measured with respect to the base frame on all axes, not only heading.


If the location is known, the first problem is easily overcome by a look-up table based on the World Magnetic Model[16]. Ignoring the declination of the magnetic field will be a reasonable approximation in some areas of the world. In other areas, especially near Earth’s magnetic poles, the magnetometer will be useless if the exact location is not known and the properties of the magnetic field cannot be looked up. Electromagnetic noise from on-board power components is harder to eliminate. Shielding the sensor is clearly not a possibility, as such a shield would not discriminate between signal and noise. Shielding the power system will probably prove cumbersome. However, by positioning the magnetometer as far as possible from the power system, the magnitude of this issue can be reduced.
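The first and third constraints in the list can be illustrated with a minimal Python sketch. It assumes a body frame with x forward, y right and z down, a roll-pitch-yaw (x-y-z) rotation sequence, and that roll and pitch are already known from the attitude estimate; none of these conventions are stated in this section, and the function names are hypothetical. The measured field is rotated back to a level frame before the heading is extracted, and a declination (east positive), e.g. taken from a look-up table, is added afterwards.

```python
import numpy as np

def rot_x(phi):
    """Frame rotation about the x axis (roll)."""
    c, s = np.cos(phi), np.sin(phi)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def rot_y(theta):
    """Frame rotation about the y axis (pitch)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def rot_z(psi):
    """Frame rotation about the z axis (yaw/heading)."""
    c, s = np.cos(psi), np.sin(psi)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def heading_from_magnetometer(m_body, roll, pitch, declination=0.0):
    """Return the heading in [0, 2*pi) from a body-frame field vector.

    Assumption: roll and pitch [rad] come from a separate attitude
    estimate; declination [rad] is positive towards east.
    """
    # Undo roll and pitch so that only the heading rotation remains.
    m_level = rot_y(pitch).T @ rot_x(roll).T @ np.asarray(m_body, dtype=float)
    magnetic_heading = np.arctan2(-m_level[1], m_level[0])
    return (magnetic_heading + declination) % (2.0 * np.pi)
```

Without the de-rotation step, any bank or pitch angle would leak the vertical field component into the horizontal plane and corrupt the heading.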

Alternatively, a GPS based sensor can be used to measure heading. As this sensor calculates the heading from changes in position, it will obviously only work when the airframe is in motion. Also, the sensor measures the course over ground, rather than the actual orientation of the airframe. These two headings are not necessarily the same, due to wind and thus the side slip angle, as described in Section 3.1.1 on page 28. As a further alternative, two GPS receivers can be used in a differential configuration. This would provide heading information, even when standing still.
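As a minimal sketch of why a single GPS receiver only yields a heading while moving, the course over ground can be computed from two consecutive positions in a local east/north grid such as UTM. The function name and the `min_distance` threshold are hypothetical and only serve the illustration.

```python
import math

def course_over_ground(easting_prev, northing_prev, easting, northing,
                       min_distance=0.5):
    """Return the course over ground in [0, 2*pi), measured from north
    towards east, or None if the receiver has not moved far enough.

    Assumption: positions are in metres in a local east/north grid.
    """
    d_east = easting - easting_prev
    d_north = northing - northing_prev
    if math.hypot(d_east, d_north) < min_distance:
        # Standing (almost) still: the direction is dominated by noise.
        return None
    return math.atan2(d_east, d_north) % (2.0 * math.pi)
```

The result describes the direction of travel only; in wind, the difference between this value and the magnetometer heading reflects the side slip.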

3.2 Sensor fusion

In order to obtain a precise and fast responding state estimate, suitable as feedback for the control loops, some of the aforementioned sensors must be fused. Sensor fusion is the discipline of combining multiple noisy sensor data streams into a combined estimate of the actual state. E.g. both accelerometers and gyroscopes are capable of measuring the attitude of the airframe, but neither of them is capable of doing so very well. Vibrations and un-modelled accelerations impact the accelerometer measurements, such that short-term extraction of the state is unsuitable. Similar sources of noise apply to magnetometer measurements. Integration errors, on the other hand, render the gyroscopes’ long-term precision worthless. The authors have conducted a survey of suitable sensor fusion methods for UAVs (see Appendix H, ’Sensor Fusion for Miniature Aerial Vehicles’ on page 105). This section will describe the major findings of this survey and derive the mathematical formulation of an applicable state estimator.

3.2.1 Methods

Several methods of varying complexity are capable of sensor fusion. The simplest method is complementary filtering[35, 49]. Complementary filtering is useful for combining fast and accurate, but biased, data with slow and noisy, but absolute, data. This is done by a pair of complementary filters, one high pass and the other low pass, as seen in Figure 3.4. Pilot experiments have shown that this filter type is indeed capable of fusing gyroscope and accelerometer data into a viable attitude estimate. The complementary filter is however not a state estimator, and is as such not capable of incorporating unobserved variables, such as the wind components, angle of attack, gyroscope biases etc.


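[Figure 3.4: block diagram with inputs u and z, branch outputs x_u = f(u, x) and x_z = f(z, x), a summation Σ and output x; each filter is drawn as an amplitude (A) versus frequency (f) response.]

Figure 3.4: Basic complementary filter. The high pass filter (top) and low pass filter (bottom) filter out the weakness of each sensor. As the sensors have opposite weaknesses, their strengths can be combined.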
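A first-order discrete complementary filter for a single attitude angle can be sketched as follows. This is an illustration only, not the filter used in the pilot experiments: the blending factor α = τ/(τ + Δt), the time constant `tau` and the function name are assumptions.

```python
def complementary_filter(gyro_rates, accel_angles, dt, tau=1.0, angle0=0.0):
    """Fuse gyroscope rates [rad/s] and accelerometer-derived angles [rad].

    The integrated gyroscope signal is effectively high pass filtered and
    the accelerometer angle low pass filtered, with crossover set by tau [s].
    """
    alpha = tau / (tau + dt)  # weight of the integrated gyroscope path
    angle = angle0
    estimates = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates
```

With this update rule a constant gyroscope bias b does not make the estimate drift without bound, as pure integration would, but it leaves a constant offset of b·τ. This illustrates the limitation noted above: the filter has no state with which to estimate and remove the bias.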

Bayes’ filters[18, 21, 71], on the other hand, are recursive state estimators. Bayes’ filters work under the assumption that the true state, $x$, is not directly observable; it is a hidden Markov process. However, sensor measurements, $z$, are derived from the true state. Because of the Markov assumption, the probability distribution function (PDF) of the current state, given the previous state, $p(x_k|x_{k-1})$, is independent of the history of states, such that

$$p(x_k|x_{k-1}, x_{k-2}, \cdots, x_0) = p(x_k|x_{k-1}) \tag{3.13}$$

As the observation, $z$, is derived from the unobserved true state, it is likewise fair to conclude that it depends only on the current state and not the history of states.

$$p(z_k|x_k, x_{k-1}, \cdots, x_0) = p(z_k|x_k) \tag{3.14}$$

Bayes’ theorem[51, 71] provides the mathematical foundation for describing the probability of the state given a measurement, based on the probability of the measurement given the state. According to (3.15), it is necessary to describe the probabilities of the state and the measurement.

$$p(x|z) = \frac{p(z|x) \cdot p(x)}{p(z)} \tag{3.15}$$

Implementations of Bayes’ filter work in a two-step recursion. First, the PDF is propagated, using the current system input, $u_k$, and a model of the system in question. As the PDF is propagated, it is smeared a bit in order to reflect the uncertainty associated with the state transition, $w_k$, referred to as the state transition noise. The projected state estimate is referred to as the a priori state estimate, $x_k^-$, as this is the estimate prior to incorporation of a measurement. The second step is to incorporate a measurement, $z_k$. Based on Bayes’ theorem, this measurement can describe a PDF of the state. As the measurement based state estimate is incorporated, the PDF is focused to reflect that knowledge has been acquired. The new estimate is referred to as the a posteriori state estimate, $x_k^+$.
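The two-step recursion can be illustrated for a small discrete state space, where the PDF is simply a vector of probabilities. The sketch below is not part of the implemented estimator; the three-state example, the transition matrix and the likelihood values are made up for illustration.

```python
import numpy as np

def predict(belief, transition):
    """A priori belief. transition[i, j] = p(x_k = i | x_{k-1} = j)."""
    return transition @ belief

def update(prior, likelihood):
    """A posteriori belief via Bayes' theorem (3.15).

    likelihood[i] = p(z_k | x_k = i); the sum in the denominator is p(z_k).
    """
    unnormalised = likelihood * prior
    return unnormalised / unnormalised.sum()

# Hypothetical example: the state is known to be 0, then "smeared" by the
# transition model, then sharpened again by a measurement favouring state 1.
belief = np.array([1.0, 0.0, 0.0])
transition = np.array([[0.8, 0.0, 0.2],
                       [0.2, 0.8, 0.0],
                       [0.0, 0.2, 0.8]])
prior = predict(belief, transition)
posterior = update(prior, np.array([0.1, 0.8, 0.1]))
```

The prediction spreads probability mass to neighbouring states, while the update concentrates it again where the measurement agrees with the prior.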

Many different implementations of Bayes’ filters exist. They mainly differ in the way they represent the PDF of the state estimate. Generally speaking, the more detailed the description of the state estimate needs to be, the more computation is required. E.g. particle filters[68] are capable of representing an arbitrary PDF, but in limited resolution. The Kalman filter[27, 43] represents the PDF as a Gaussian distribution and can thus use a parametric description, containing the mean and covariance. This representation does limit the PDF to be symmetric and unimodal (i.e. it only keeps one hypothesis of the state). The original formulation[43] uses the state space model to propagate the state estimate forward in time. The filter can however be extended to work for nonlinear systems as well. The Kalman filter is chosen, as the complexity of multimodal PDFs is not required in this application. The Kalman filter will be reviewed here.

As mentioned, the Kalman filter uses the linear state space model to project the state estimate and covariance forward in time:

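$$x_k^- = x_{k-1}^+ + \left(A \cdot x_{k-1}^+ + B \cdot u_k\right) \cdot \Delta t_k \tag{3.16}$$

$$P_k^- = P_{k-1}^+ + \left(A \cdot P_{k-1}^+ \cdot A^\top + Q\right) \cdot \Delta t_k \tag{3.17}$$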

Here the state transition model, $A$, is used to project the state estimate, $x$, and the state covariance matrix, $P$, forward in time, by the time step $\Delta t_k = t_k - t_{k-1}$. The system input, $u$, is projected into the state vector by the input model, $B$, and the transition noise covariance, $Q$, is added to the state covariance matrix. Note that $A$ and $B$ are not step-dependent, as the system is linear. A measurement is incorporated in the measurement update step:

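$$K_k = P_k^- \cdot H^\top \cdot \left(H \cdot P_k^- \cdot H^\top + R\right)^{-1} \tag{3.18}$$

$$x_k^+ = x_k^- + K_k \cdot \left(z_k - H \cdot x_k^-\right) \tag{3.19}$$

$$P_k^+ = \left(I - K_k \cdot H\right) \cdot P_k^- \tag{3.20}$$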
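Equations (3.16) to (3.20) translate almost directly into code. The following NumPy sketch follows the equations as stated above; the function and argument names are hypothetical and it is not the implementation used on the airframe.

```python
import numpy as np

def kf_time_update(x, P, u, A, B, Q, dt):
    """Time update, equations (3.16) and (3.17)."""
    x_prior = x + (A @ x + B @ u) * dt
    P_prior = P + (A @ P @ A.T + Q) * dt
    return x_prior, P_prior

def kf_measurement_update(x_prior, P_prior, z, H, R):
    """Measurement update, equations (3.18) to (3.20)."""
    S = H @ P_prior @ H.T + R               # innovation covariance
    K = P_prior @ H.T @ np.linalg.inv(S)    # Kalman gain (3.18)
    x_post = x_prior + K @ (z - H @ x_prior)
    P_post = (np.eye(x_prior.size) - K @ H) @ P_prior
    return x_post, P_post
```

When the measurement noise is as large as the a priori state uncertainty, the gain becomes 0.5 and the a posteriori estimate lands halfway between the prediction and the measurement.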

Here $K_k$ is the Kalman gain, which is calculated from the state covariance matrix, the observation model, $H$, and the observation covariance matrix, $R$. Based on the Kalman gain and the innovation term, $z_k - H \cdot x_k^-$, the a posteriori state estimate is calculated. Finally, the state covariance matrix is corrected to reflect the information gain introduced by the measurement.

As mentioned, the Kalman filter is limited to linear systems by the linear state space model. However, it can be extended[63] to work for non-linear systems as well. This is done by using a pair of non-linear models $f(x,u,w)$ and $h(x,v)$:

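\begin{align}
\dot{x}_k &= f(x_k, u_k, w_k) \tag{3.21} \\
          &= A_k \cdot x_k + B_k \cdot u_k + W_k \cdot w_k \tag{3.22} \\
z_k &= h(x_k, v_k) \tag{3.23} \\
    &= H_k \cdot x_k + V_k \cdot v_k \tag{3.24}
\end{align}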

$f(x,u,w)$ models the state transition. It takes the current state vector, $x_k$, the current system input vector, $u_k$, and the noise vector, $w_k$, as inputs. $w_k$ is a random noise vector, drawn from a zero-mean Gaussian distribution with covariance $Q$. $f(x,u,w)$ need not be formulated in the linear form (3.22), but the linear models $A_k$ and $W_k$ must be calculated for each time update. These can be calculated as the Jacobians, i.e. by linearising around the state and noise vectors respectively, such that:

$$A_{k[i,j]} = \frac{\partial f_{[i]}}{\partial x_{[j]}}(x_k, u_k) \tag{3.25}$$

and

$$W_{k[i,j]} = \frac{\partial f_{[i]}}{\partial w_{[j]}}(x_k, u_k) \tag{3.26}$$

$h(x,v)$ models the sensor output, based on the current state estimate, $x_k$, and the measurement noise vector, $v_k$, which is drawn from a zero-mean Gaussian distribution with covariance $R$. $h(x,v)$ need not be in the linear form (3.24), but the linear models $H_k$ and $V_k$ need to be known. They can be found by linearising the model around the state and noise vectors respectively, such that:

$$H_{k[i,j]} = \frac{\partial h_{[i]}}{\partial x_{[j]}}(x_k) \tag{3.27}$$

and

$$V_{k[i,j]} = \frac{\partial h_{[i]}}{\partial v_{[j]}}(x_k) \tag{3.28}$$
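The Jacobians in (3.25) to (3.28) are normally derived analytically. As a hedged aid for checking such derivations, they can also be approximated numerically by central differences. The helper below and its step size `eps` are assumptions for illustration and not part of the described system.

```python
import numpy as np

def numerical_jacobian(func, x, eps=1e-6):
    """Approximate J[i, j] = d func[i] / d x[j] at x by central differences."""
    x = np.asarray(x, dtype=float)
    n_out = np.asarray(func(x), dtype=float).size
    J = np.zeros((n_out, x.size))
    for j in range(x.size):
        step = np.zeros_like(x)
        step[j] = eps
        f_plus = np.asarray(func(x + step), dtype=float)
        f_minus = np.asarray(func(x - step), dtype=float)
        J[:, j] = (f_plus - f_minus) / (2.0 * eps)
    return J
```

Comparing an analytically derived $H_k$ with `numerical_jacobian(lambda x: h(x, 0), x_k)` at a few states is a cheap way to catch sign and index errors.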

The time update step of the extended Kalman filter[18, 21, 71] (EKF) is formulated as:

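$$x_k^- = x_{k-1}^+ + f(x_{k-1}^+, u_k, 0) \cdot \Delta t_k \tag{3.29}$$

$$P_k^- = P_{k-1}^+ + \left(A_k \cdot P_{k-1}^+ \cdot A_k^\top + W_k \cdot Q \cdot W_k^\top\right) \cdot \Delta t_k \tag{3.30}$$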

Here the state is propagated using the state transition function $f(x,u,w)$. Note that the noise input is set to zero, as no intentional noise is added. The state transition noise covariance matrix, $Q$, is projected by the state transition noise model, $W_k$, before it is added to the state covariance matrix. The measurement update step is formulated as:

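$$K_k = P_k^- \cdot H_k^\top \cdot \left(H_k \cdot P_k^- \cdot H_k^\top + V_k \cdot R \cdot V_k^\top\right)^{-1} \tag{3.31}$$

$$x_k^+ = x_k^- + K_k \cdot \left(z_k - h(x_k^-, 0)\right) \tag{3.32}$$

$$P_k^+ = \left(I - K_k \cdot H_k\right) \cdot P_k^- \tag{3.33}$$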

Here the measurement noise model, $V_k$, is used to project the measurement noise covariance matrix, $R$, and the measurement model, $h(x,v)$, is used in the innovation term. Also here, no intentional noise is injected, so $v_k = 0$.
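A compact sketch of the EKF recursion (3.29) to (3.33) is given below. The models and their Jacobians are passed in as callables with hypothetical names and signatures, and the Jacobians are evaluated at the most recent estimate, which is an assumption about the linearisation point rather than something stated above.

```python
import numpy as np

def ekf_time_update(x, P, u, f, jac_A, jac_W, Q, dt):
    """Time update, equations (3.29) and (3.30)."""
    A = jac_A(x, u)                      # (3.25)
    W = jac_W(x, u)                      # (3.26)
    w_zero = np.zeros(Q.shape[0])        # no intentional noise
    x_prior = x + f(x, u, w_zero) * dt
    P_prior = P + (A @ P @ A.T + W @ Q @ W.T) * dt
    return x_prior, P_prior

def ekf_measurement_update(x_prior, P_prior, z, h, jac_H, jac_V, R):
    """Measurement update, equations (3.31) to (3.33)."""
    H = jac_H(x_prior)                   # (3.27)
    V = jac_V(x_prior)                   # (3.28)
    v_zero = np.zeros(R.shape[0])
    S = H @ P_prior @ H.T + V @ R @ V.T
    K = P_prior @ H.T @ np.linalg.inv(S)
    x_post = x_prior + K @ (z - h(x_prior, v_zero))
    P_post = (np.eye(x_prior.size) - K @ H) @ P_prior
    return x_post, P_post
```

Passing the models as functions keeps the recursion itself independent of the kinematics, so the same two routines could in principle serve several estimators with different state vectors.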

This concludes the theoretical description of the EKF used in the system. It was found that the EKF was most suitable for state estimation. The formulation of this filter has been reviewed and explained. The state estimator can be configured in various ways. This will be investigated further in the next subsection. After this, the kinematic models needed for the filtering are derived.

3.2.2 Estimator architecture

The architecture of the state estimator is vital to performance in terms of calculation efficiency and precision. From a debugging and development point of view, some constructions are also easier to work with than others. Three different architectures have been considered.


The first scheme is the obvious single 9-state filter[61], with the measurement vector composed of acceleration, α, magnetic field strength, β, UTM position and altitude, utm, as depicted in Figure 3.5a. However, as the four sensors (accelerometer, magnetometer, GPS receiver and barometric altimeter) needed to compose the measurement vector have very different update rates, the lowest frequency must be used. In this case, that is the GPS receiver with an update frequency of approximately 5 Hz. This measurement frequency is not acceptable for attitude estimation.

It is however possible to use separate measurement vectors[56] within the same estimator, by defining different $H$, $V$ and $R$ matrices for each of the measurement vectors, as illustrated in Figure 3.5b. With this arrangement, the measurement rate dependency between the sensors can be eliminated.

To simplify the problem even more, a third approach can be taken: splitting the estimator into smaller sub-estimators[28, 60], each responsible for a coherent part of the state vector. Such a scheme is depicted in Figure 3.5c. The advantage of this decoupling is that debugging and development can be done in a segmented fashion. This structure also reduces the size of the matrices remarkably, which is advantageous as it reduces the need for computational resources. However, this segmentation results in lost coupling between measurement and state, i.e. the magnetometer cannot aid attitude estimation, and the GPS cannot aid heading estimation. But as the primary and intended sensor data is still available to the respective estimators, this is not considered a major setback.
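The second scheme, with one set of measurement matrices per sensor, can be sketched as a loop that runs the time update at a fixed base rate and applies a measurement update only when a sensor has delivered new data. The two-state model, the sensor names, their rates and the `read_sensor` callback are all hypothetical and chosen only to keep the example small; the time update follows the linear form of (3.16) and (3.17) without input.

```python
import numpy as np

def measurement_update(x, P, z, H, R):
    """Linear measurement update with sensor-specific H and R."""
    K = P @ H.T @ np.linalg.inv(H @ P @ H.T + R)
    return x + K @ (z - H @ x), (np.eye(x.size) - K @ H) @ P

# Hypothetical sensors for a [position, velocity] state at a 100 Hz base rate:
# "slow" observes position every 20th step (5 Hz), "fast" velocity every 2nd.
SENSORS = {
    "slow": {"H": np.array([[1.0, 0.0]]), "R": np.array([[4.0]]), "every": 20},
    "fast": {"H": np.array([[0.0, 1.0]]), "R": np.array([[0.25]]), "every": 2},
}

def run(steps, read_sensor, x, P, A, Q, dt, sensors=SENSORS):
    """Run the filter; read_sensor(name, k) returns the measurement vector."""
    updates = {name: 0 for name in sensors}
    for k in range(1, steps + 1):
        x = x + (A @ x) * dt                  # time update at the base rate
        P = P + (A @ P @ A.T + Q) * dt
        for name, s in sensors.items():
            if k % s["every"] == 0:           # only when new data is due
                x, P = measurement_update(x, P, read_sensor(name, k),
                                          s["H"], s["R"])
                updates[name] += 1
    return x, P, updates
```

The slow sensor no longer dictates the rate of the fast one: each measurement is incorporated as soon as it arrives.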

3.2.3 Kinematic models

The extended Kalman filter, which was chosen for state estimation earlier in this section, requires kinematic models of the system. These models are used to propagate the estimate and its covariance forward in time, as discussed in subsection 3.2.1 on page 33. A total of three different estimators are needed, as explained in the previous subsection. The outlines of the models for each estimator will be given in the following subsections. Appendices A to C on pages 80–88 describe the derivations in more detail.

Attitude kinematic model