1 A Web Search Engine-based Approach to Measure Semantic Similarity between Words Danushka Bollegala, Yutaka Matsuo, and Mitsuru Ishizuka, Member, IEEE Abstract—Measuring the semantic similarity between words is an important component in various tasks on the web such as relation extraction, community mining, document clustering, and automatic meta data extraction. Despite the usefulness of semantic similarity measures in these applications, accurately measuring semantic similarity between two words (or entities) remains a challenging task. We propose an empirical method to estimate semantic similarity using page counts and text snippets retrieved from a Web search engine for two words. Specifically, we define various word co-occurrence measures using page counts and integrate those with lexical patterns extracted from text snippets. To identify the numerous semantic relations that exist between two given words, we propose a novel pattern extraction algorithm and a pattern clustering algorithm. The optimal combination of page counts-based co-occurrence measures and lexical pattern clusters is learned using support vector machines. The proposed method outperforms various baselines and previously proposed web-based semantic similarity measures on three benchmark datasets showing a high correlation with human ratings. Moreover, the proposed method significantly improves the accuracy in a community mining task. Index Terms—Web Mining, Information Extraction, Web Text Analysis ✦ 1 I NTRODUCTION A CCURATELY measuring the semantic similarity be- tween words is an important problem in web min- ing, information retrieval, and natural language pro- cessing. Web mining applications such as, community extraction, relation detection, and entity disambiguation, require the ability to accurately measure the semantic similarity between concepts or entities. In information retrieval, one of the main problems is to retrieve a set of documents that is semantically related to a given user query. Efficient estimation of semantic similarity between words is critical for various natural language processing tasks such as word sense disambiguation (WSD), textual entailment, and automatic text summa- rization. Semantically related words of a particular word are listed in manually created general-purpose lexical on- tologies such as WordNet 1 , In WordNet, a synset contains a set of synonymous words for a particular sense of a word. However, semantic similarity between entities changes over time and across domains. For example, apple is frequently associated with computers on the Web. However, this sense of apple is not listed in most general-purpose thesauri or dictionaries. A user who searches for apple on the Web, might be interested in this sense of apple and not apple as a fruit. New words are constantly being created as well as new senses are assigned to existing words. Manually maintaining on- tologies to capture these new words and senses is costly if not impossible. • The University of Tokyo, Japan. [email protected] 1. http://wordnet.princeton.edu/ We propose an automatic method to estimate the semantic similarity between words or entities using Web search engines. Because of the vastly numerous documents and the high growth rate of the Web, it is time consuming to analyze each document separately. Web search engines provide an efficient interface to this vast information. Page counts and snippets are two useful information sources provided by most Web search engines. Page count of a query is an estimate of the num- ber of pages that contain the query words. In general, page count may not necessarily be equal to the word frequency because the queried word might appear many times on one page. Page count for the query P AND Q can be considered as a global measure of co-occurrence of words P and Q. For example, the page count of the query “apple” AND “computer” in Google is 288, 000, 000, whereas the same for “banana” AND “computer” is only 3, 590, 000. The more than 80 times more numerous page counts for “apple” AND “computer” indicate that apple is more semantically similar to computer than is banana. Despite its simplicity, using page counts alone as a measure of co-occurrence of two words presents sev- eral drawbacks. First, page count analysis ignores the position of a word in a page. Therefore, even though two words appear in a page, they might not be actually related. Secondly, page count of a polysemous word (a word with multiple senses) might contain a combination of all its senses. For an example, page counts for apple contains page counts for apple as a fruit and apple as a company. Moreover, given the scale and noise on the Web, some words might co-occur on some pages without being actually related [1]. For those reasons, page counts alone are unreliable when measuring semantic similarity. Snippets, a brief window of text extracted by a search engine around the query term in a document, provide

Welcome message from author

This document is posted to help you gain knowledge. Please leave a comment to let me know what you think about it! Share it to your friends and learn new things together.

Transcript

1

A Web Search Engine-based Approach toMeasure Semantic Similarity between Words

Danushka Bollegala, Yutaka Matsuo, and Mitsuru Ishizuka, Member, IEEE

Abstract—Measuring the semantic similarity between words is an important component in various tasks on the web such as relationextraction, community mining, document clustering, and automatic meta data extraction. Despite the usefulness of semantic similaritymeasures in these applications, accurately measuring semantic similarity between two words (or entities) remains a challenging task.We propose an empirical method to estimate semantic similarity using page counts and text snippets retrieved from a Web searchengine for two words. Specifically, we define various word co-occurrence measures using page counts and integrate those with lexicalpatterns extracted from text snippets. To identify the numerous semantic relations that exist between two given words, we propose anovel pattern extraction algorithm and a pattern clustering algorithm. The optimal combination of page counts-based co-occurrencemeasures and lexical pattern clusters is learned using support vector machines. The proposed method outperforms various baselinesand previously proposed web-based semantic similarity measures on three benchmark datasets showing a high correlation with humanratings. Moreover, the proposed method significantly improves the accuracy in a community mining task.

Index Terms—Web Mining, Information Extraction, Web Text Analysis

F

1 INTRODUCTION

A CCURATELY measuring the semantic similarity be-tween words is an important problem in web min-

ing, information retrieval, and natural language pro-cessing. Web mining applications such as, communityextraction, relation detection, and entity disambiguation,require the ability to accurately measure the semanticsimilarity between concepts or entities. In informationretrieval, one of the main problems is to retrieve a setof documents that is semantically related to a givenuser query. Efficient estimation of semantic similaritybetween words is critical for various natural languageprocessing tasks such as word sense disambiguation(WSD), textual entailment, and automatic text summa-rization.

Semantically related words of a particular word arelisted in manually created general-purpose lexical on-tologies such as WordNet1, In WordNet, a synset containsa set of synonymous words for a particular sense ofa word. However, semantic similarity between entitieschanges over time and across domains. For example,apple is frequently associated with computers on theWeb. However, this sense of apple is not listed in mostgeneral-purpose thesauri or dictionaries. A user whosearches for apple on the Web, might be interested inthis sense of apple and not apple as a fruit. New wordsare constantly being created as well as new senses areassigned to existing words. Manually maintaining on-tologies to capture these new words and senses is costlyif not impossible.

• The University of Tokyo, [email protected]

1. http://wordnet.princeton.edu/

We propose an automatic method to estimate thesemantic similarity between words or entities usingWeb search engines. Because of the vastly numerousdocuments and the high growth rate of the Web, it istime consuming to analyze each document separately.Web search engines provide an efficient interface tothis vast information. Page counts and snippets are twouseful information sources provided by most Web searchengines. Page count of a query is an estimate of the num-ber of pages that contain the query words. In general,page count may not necessarily be equal to the wordfrequency because the queried word might appear manytimes on one page. Page count for the query P AND Qcan be considered as a global measure of co-occurrenceof words P and Q. For example, the page count of thequery “apple” AND “computer” in Google is 288, 000, 000,whereas the same for “banana” AND “computer” is only3, 590, 000. The more than 80 times more numerous pagecounts for “apple” AND “computer” indicate that apple ismore semantically similar to computer than is banana.

Despite its simplicity, using page counts alone as ameasure of co-occurrence of two words presents sev-eral drawbacks. First, page count analysis ignores theposition of a word in a page. Therefore, even thoughtwo words appear in a page, they might not be actuallyrelated. Secondly, page count of a polysemous word (aword with multiple senses) might contain a combinationof all its senses. For an example, page counts for applecontains page counts for apple as a fruit and apple asa company. Moreover, given the scale and noise on theWeb, some words might co-occur on some pages withoutbeing actually related [1]. For those reasons, page countsalone are unreliable when measuring semantic similarity.

Snippets, a brief window of text extracted by a searchengine around the query term in a document, provide

2

useful information regarding the local context of thequery term. Semantic similarity measures defined oversnippets, have been used in query expansion [2], per-sonal name disambiguation [3], and community min-ing [4]. Processing snippets is also efficient because itobviates the trouble of downloading web pages, whichmight be time consuming depending on the size of thepages. However, a widely acknowledged drawback ofusing snippets is that, because of the huge scale of theweb and the large number of documents in the resultset, only those snippets for the top-ranking results fora query can be processed efficiently. Ranking of searchresults, hence snippets, is determined by a complexcombination of various factors unique to the underlyingsearch engine. Therefore, no guarantee exists that allthe information we need to measure semantic similaritybetween a given pair of words is contained in the top-ranking snippets.

We propose a method that considers both page countsand lexical syntactic patterns extracted from snippetsthat we show experimentally to overcome the abovementioned problems. For example, let us consider thefollowing snippet from Google for the query JaguarAND cat.

“The Jaguar is the largest cat in Western Hemisphere andcan subdue larger prey than can the puma”

Fig. 1. A snippet retrieved for the query Jaguar AND cat.

Here, the phrase is the largest indicates a hypernymicrelationship between Jaguar and cat. Phrases such as alsoknown as, is a, part of, is an example of all indicate varioussemantic relations. Such indicative phrases have beenapplied to numerous tasks with good results, such ashypernym extraction [5] and fact extraction [6]. From theprevious example, we form the pattern X is the largest Y,where we replace the two words Jaguar and cat by twovariables X and Y.

Our contributions are summarized as follows.• We present an automatically extracted lexical syn-

tactic patterns-based approach to compute the se-mantic similarity between words or entities usingtext snippets retrieved from a web search engine.We propose a lexical pattern extraction algorithmthat considers word subsequences in text snippets.Moreover, the extracted set of patterns are clusteredto identify the different patterns that describe thesame semantic relation.

• We integrate different web-based similarity mea-sures using a machine learning approach. We extractsynonymous word-pairs from WordNet synsets aspositive training instances and automatically gen-erate negative training instances. We then train atwo-class support vector machine to classify syn-onymous and non-synonymous word-pairs. Theintegrated measure outperforms all existing Web-based semantic similarity measures on a benchmark

dataset.• We apply the proposed semantic similarity measure

to identify relations between entities, in particularpeople, in a community extraction task. In thisexperiment, the proposed method outperforms thebaselines with statistically significant precision andrecall values. The results of the community miningtask show the ability of the proposed method tomeasure the semantic similarity between not onlywords, but also between named entities, for whichmanually created lexical ontologies do not exist orincomplete.

2 RELATED WORK

Given a taxonomy of words, a straightforward methodto calculate similarity between two words is to find thelength of the shortest path connecting the two words inthe taxonomy [7]. If a word is polysemous then multiplepaths might exist between the two words. In such cases,only the shortest path between any two senses of thewords is considered for calculating similarity. A problemthat is frequently acknowledged with this approach isthat it relies on the notion that all links in the taxonomyrepresent a uniform distance.

Resnik [8] proposed a similarity measure using infor-mation content. He defined the similarity between twoconcepts C1 and C2 in the taxonomy as the maximum ofthe information content of all concepts C that subsumeboth C1 and C2. Then the similarity between two wordsis defined as the maximum of the similarity between anyconcepts that the words belong to. He used WordNet asthe taxonomy; information content is calculated usingthe Brown corpus.

Li et al., [9] combined structural semantic informationfrom a lexical taxonomy and information content from acorpus in a nonlinear model. They proposed a similaritymeasure that uses shortest path length, depth and localdensity in a taxonomy. Their experiments reported ahigh Pearson correlation coefficient of 0.8914 on theMiller and Charles [10] benchmark dataset. They didnot evaluate their method in terms of similarities amongnamed entities. Lin [11] defined the similarity betweentwo concepts as the information that is in common toboth concepts and the information contained in eachindividual concept.

Cilibrasi and Vitanyi [12] proposed a distance metricbetween words using only page-counts retrieved froma web search engine. The proposed metric is namedNormalized Google Distance (NGD) and is given by,

NGD(P,Q) =max{logH(P ), logH(Q)} − logH(P,Q)

logN −min{logH(P ), logH(Q)}.

Here, P and Q are the two words between which dis-tance NGD(P,Q) is to be computed, H(P ) denotes thepage-counts for the word P , and H(P,Q) is the page-counts for the query P AND Q. NGD is based on normal-ized information distance [13], which is defined using

3

Kolmogorov complexity. Because NGD does not takeinto account the context in which the words co-occur,it suffers from the drawbacks described in the previoussection that are characteristic to similarity measures thatconsider only page-counts.

Sahami et al., [2] measured semantic similarity be-tween two queries using snippets returned for thosequeries by a search engine. For each query, they collectsnippets from a search engine and represent each snippetas a TF-IDF-weighted term vector. Each vector is L2

normalized and the centroid of the set of vectors is com-puted. Semantic similarity between two queries is thendefined as the inner product between the correspondingcentroid vectors. They did not compare their similaritymeasure with taxonomy-based similarity measures.

Chen et al., [4] proposed a double-checking modelusing text snippets returned by a Web search engineto compute semantic similarity between words. For twowords P and Q, they collect snippets for each word froma Web search engine. Then they count the occurrences ofword P in the snippets for word Q and the occurrencesof word Q in the snippets for word P . These values arecombined nonlinearly to compute the similarity betweenP and Q. The Co-occurrence Double-Checking (CODC)measure is defined as,

CODC(P,Q) ={0 if f(P@Q) = 0,

exp(

log[f(P@QH(P ) ×

f(Q@P )H(Q)

]α)otherwise.

Here, f(P@Q) denotes the number of occurrences of Pin the top-ranking snippets for the query Q in Google,H(P ) is the page count for query P , and α is a con-stant in this model, which is experimentally set to thevalue 0.15. This method depends heavily on the searchengine’s ranking algorithm. Although two words P andQ might be very similar, we cannot assume that onecan find Q in the snippets for P , or vice versa, becausea search engine considers many other factors besidessemantic similarity, such as publication date (novelty)and link structure (authority) when ranking the resultset for a query. This observation is confirmed by theexperimental results in their paper which reports zerosimilarity scores for many pairs of words in the Millerand Charles [10] benchmark dataset.

Semantic similarity measures have been used in var-ious applications in natural language processing suchas word-sense disambiguation [14], language model-ing [15], synonym extraction [16], and automatic the-sauri extraction [17]. Semantic similarity measures areimportant in many Web-related tasks. In query expan-sion [18] a user query is modified using synonymouswords to improve the relevancy of the search. Onemethod to find appropriate words to include in a queryis to compare the previous user queries using semanticsimilarity measures. If there exist a previous query thatis semantically related to the current query, then it can

gem

jewel

SearchEngine

WebJaccardWebOverlap

WebDiceWebPMI

H(“gem”)H(“jewel”)H(“gem” AND “jewel”)

page-counts

“gem” (X)

“jewel” (Y)

snippets

frequency oflexical patternsin snippetsX is a Y: 10

X, Y: 7X and Y: 12

......

SupportVectorMachine

SemanticSimilarity

AND

patternclusters

Fig. 2. Outline of the proposed method.

be either suggested to the user, or internally used by thesearch engine to modify the original query.

3 METHOD

3.1 Outline

Given two words P and Q, we model the problem ofmeasuring the semantic similarity between P and Q, asa one of constructing a function sim(P,Q) that returns avalue in range [0, 1]. If P and Q are highly similar (e.g.synonyms), we expect sim(P,Q) to be closer to 1. Onthe other hand, if P and Q are not semantically similar,then we expect sim(P,Q) to be closer to 0. We definenumerous features that express the similarity between Pand Q using page counts and snippets retrieved from aweb search engine for the two words. Using this featurerepresentation of words, we train a two-class supportvector machine (SVM) to classify synonymous and non-synonymous word pairs. The function sim(P,Q) is thenapproximated by the confidence score of the trainedSVM.

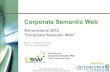

Fig. 2 illustrates an example of using the proposedmethod to compute the semantic similarity between twowords, gem and jewel. First, we query a Web searchengine and retrieve page counts for the two words andfor their conjunctive (i.e. “gem”, “jewel”, and “gem ANDjewel”). In section 3.2, we define four similarity scoresusing page counts. Page counts-based similarity scoresconsider the global co-occurrences of two words on theWeb. However, they do not consider the local context inwhich two words co-occur. On the other hand, snippetsreturned by a search engine represent the local context inwhich two words co-occur on the web. Consequently, wefind the frequency of numerous lexical-syntactic patternsin snippets returned for the conjunctive query of thetwo words. The lexical patterns we utilize are extractedautomatically using the method described in Section 3.3.However, it is noteworthy that a semantic relation can beexpressed using more than one lexical pattern. Group-ing the different lexical patterns that convey the samesemantic relation, enables us to represent a semanticrelation between two words accurately. For this purpose

4

we propose a sequential pattern clustering algorithm inSection 3.4. Both page counts-based similarity scores andlexical pattern clusters are used to define various featuresthat represent the relation between two words. Usingthis feature representation of word pairs, we train a two-class support vector machine (SVM) [19] in Section 3.5.

3.2 Page-count-based Co-occurrence Measures

Page counts for the query P AND Q can be consideredas an approximation of co-occurrence of two words (ormulti-word phrases) P and Q on the Web. However,page counts for the query P AND Q alone do not accu-rately express semantic similarity. For example, Googlereturns 11, 300, 000 as the page count for “car” AND“automobile”, whereas the same is 49, 000, 000 for “car”AND “apple”. Although, automobile is more semanticallysimilar to car than apple is, page counts for the query“car” AND “apple” are more than four times greater thanthose for the query “car” AND “automobile”. One mustconsider the page counts not just for the query P ANDQ, but also for the individual words P and Q to assesssemantic similarity between P and Q.

We compute four popular co-occurrence measures;Jaccard, Overlap (Simpson), Dice, and Pointwise mutualinformation (PMI), to compute semantic similarity us-ing page counts. For the remainder of this paper weuse the notation H(P ) to denote the page counts forthe query P in a search engine. The WebJaccard coeffi-cient between words (or multi-word phrases) P and Q,WebJaccard(P,Q), is defined as,

WebJaccard(P,Q) (1)

=

{0 if H(P ∩Q) ≤ c,

H(P∩Q)H(P )+H(Q)−H(P∩Q) otherwise.

Therein, P ∩ Q denotes the conjunction query P ANDQ. Given the scale and noise in Web data, it is possiblethat two words may appear on some pages even thoughthey are not related. In order to reduce the adverseeffects attributable to such co-occurrences, we set theWebJaccard coefficient to zero if the page count for thequery P ∩ Q is less than a threshold c2. Similarly, wedefine WebOverlap, WebOverlap(P,Q), as,

WebOverlap(P,Q) (2)

=

{0 if H(P ∩Q) ≤ c,

H(P∩Q)min(H(P ),H(Q)) otherwise.

WebOverlap is a natural modification to the Overlap(Simpson) coefficient. We define the WebDice coefficientas a variant of the Dice coefficient. WebDice(P,Q) isdefined as,

WebDice(P,Q) =

{0 if H(P ∩Q) ≤ c,

2H(P∩Q)H(P )+H(Q) otherwise.

(3)

2. we set c = 5 in our experiments

Pointwise mutual information (PMI) [20] is a measurethat is motivated by information theory; it is intended toreflect the dependence between two probabilistic events.We define WebPMI as a variant form of pointwise mutualinformation using page counts as,

WebPMI(P,Q) (4)

=

0 if H(P ∩Q) ≤ c,

log2(H(P∩Q)

NH(P )

NH(Q)

N

) otherwise.

Here, N is the number of documents indexed by thesearch engine. Probabilities in (4) are estimated accord-ing to the maximum likelihood principle. To calculatePMI accurately using (4), we must know N , the numberof documents indexed by the search engine. Althoughestimating the number of documents indexed by a searchengine [21] is an interesting task itself, it is beyondthe scope of this work. In the present work, we setN = 1010 according to the number of indexed pages re-ported by Google. As previously discussed, page countsare mere approximations to actual word co-occurrencesin the Web. However, it has been shown empiricallythat there exist a high correlation between word countsobtained from a Web search engine (e.g. Google andAltavista) and that from a corpus (e.g. British Nationalcorpus) [22]. Moreover, the approximated page countshave been sucessfully used to improve a variety oflanguage modelling tasks [23].

3.3 Lexical Pattern Extraction

Page counts-based co-occurrence measures described inSection 3.2 do not consider the local context in whichthose words co-occur. This can be problematic if one orboth words are polysemous, or when page counts areunreliable. On the other hand, the snippets returned bya search engine for the conjunctive query of two wordsprovide useful clues related to the semantic relationsthat exist between two words. A snippet contains awindow of text selected from a document that includesthe queried words. Snippets are useful for search be-cause, most of the time, a user can read the snippetand decide whether a particular search result is relevant,without even opening the url. Using snippets as contextsis also computationally efficient because it obviates theneed to download the source documents from the Web,which can be time consuming if a document is large.For example, consider the snippet in Figure 3.3. Here,

“Cricket is a sport played between two teams,each with eleven players.”

Fig. 3. A snippet retrieved for the query “cricket” AND“sport”.

the phrase is a indicates a semantic relationship betweencricket and sport. Many such phrases indicate semanticrelationships. For example, also known as, is a, part of, is

5

Ostrich, a large, flightless bird that lives in the drygrasslands of Africa.

Fig. 4. A snippet retrieved for the query “ostrich * * * * *bird”.

an example of all indicate semantic relations of differenttypes. In the example given above, words indicatingthe semantic relation between cricket and sport appearbetween the query words. Replacing the query wordsby variables X and Y we can form the pattern X is a Yfrom the example given above.

Despite the efficiency of using snippets, they pose twomain challenges: first, a snippet can be a fragmentedsentence, second a search engine might produce a snip-pet by selecting multiple text fragments from differ-ent portions in a document. Because most syntactic ordependency parsers assume complete sentences as theinput, deep parsing of snippets produces incorrect re-sults. Consequently, we propose a shallow lexical patternextraction algorithm using web snippets, to recognizethe semantic relations that exist between two words.Lexical syntactic patterns have been used in variousnatural language processing tasks such as extractinghypernyms [5], [24], or meronyms [25], question an-swering [26], and paraphrase extraction [27]. Althougha search engine might produce a snippet by selectingmultiple text fragments from different portions in adocument, a pre-defined delimiter is used to separatethe different fragments. For example, in Google, thedelimiter “...” is used to separate different fragments in asnippet. We use such delimiters to split a snippet beforewe run the proposed lexical pattern extraction algorithmon each fargment.

Given two words P and Q, we query a web searchengine using the wildcard query “P * * * * * Q” anddownload snippets. The “*” operator matches one wordor none in a web page. Therefore, our wildcard queryretrieves snippets in which P and Q appear withina window of seven words. Because a search enginesnippet contains ca. 20 words on average, and includestwo fragments of texts selected from a document, weassume that the seven word window is sufficient tocover most relations between two words in snippets.In fact, over 95% of the lexical patterns extracted bythe proposed method contain less than five words. Weattempt to approximate the local context of two wordsusing wildcard queries. For example, Fig. 4 shows asnippet retrieved for the query “ostrich * * * * * bird”.

For a snippet δ, retrieved for a word pair (P,Q),first, we replace the two words P and Q, respectively,with two variables X and Y. We replace all numericvalues by D, a marker for digits. Next, we generateall subsequences of words from δ that satisfy all of thefollowing conditions.

(i). A subsequence must contain exactly one occur-

rence of each X and Y(ii). The maximum length of a subsequence is L words.

(iii). A subsequence is allowed to skip one or morewords. However, we do not skip more than gnumber of words consecutively. Moreover, the totalnumber of words skipped in a subsequence shouldnot exceed G.

(iv). We expand all negation contractions in a context.For example, didn’t is expanded to did not. Wedo not skip the word not when generating subse-quences. For example, this condition ensures thatfrom the snippet X is not a Y, we do not producethe subsequence X is a Y.

Finally, we count the frequency of all generated subse-quences and only use subsequences that occur more thanT times as lexical patterns.

The parameters L, g, G and T are set experimentally,as explained later in Section 3.6. It is noteworthy that theproposed pattern extraction algorithm considers all thewords in a snippet, and is not limited to extracting pat-terns only from the mid-fix (i.e., the portion of text in asnippet that appears between the queried words). More-over, the consideration of gaps enables us to capture rela-tions between distant words in a snippet. We use a mod-ified version of the prefixspan algorithm [28] to generatesubsequences from a text snippet. Specifically, we use theconstraints (ii)-(iv) to prune the search space of candidatesubsequences. For example, if a subsequence has reachedthe maximum length L, or the number of skipped wordsis G, then we will not extend it further. By pruning thesearch space, we can speed up the pattern generationprocess. However, none of these modifications affect theaccuracy of the proposed semantic similarity measurebecause the modified version of the prefixspan algorithmstill generates the exact set of patterns that we wouldobtain if we used the original prefixspan algorithm (i.e.without pruning) and subsequently remove patterns thatviolate the above mentioned constraints. For example,some patterns extracted form the snippet shown in Fig. 4are: X, a large Y, X a flightless Y, and X, large Y lives.

3.4 Lexical Pattern Clustering

Typically, a semantic relation can be expressed usingmore than one pattern. For example, consider the twodistinct patterns, X is a Y, and X is a large Y. Boththese patterns indicate that there exists an is-a relationbetween X and Y. Identifying the different patternsthat express the same semantic relation enables us torepresent the relation between two words accurately.According to the distributional hypothesis [29], wordsthat occur in the same context have similar meanings.The distributional hypothesis has been used in variousrelated tasks, such as identifying related words [16], andextracting paraphrases [27]. If we consider the wordpairs that satisfy (i.e. co-occur with) a particular lexicalpattern as the context of that lexical pair, then fromthe distributional hypothesis it follows that the lexical

6

Algorithm 1 Sequential pattern clustering algorithm.Input: patterns Λ = {a1, . . . ,an}, threshold θOutput: clusters C

1: SORT(Λ)2: C ← {}3: for pattern ai ∈ Λ do4: max← −∞5: c∗ ← null6: for cluster cj ∈ C do7: sim← cosine(ai, cj)8: if sim > max then9: max← sim

10: c∗ ← cj11: end if12: end for13: if max > θ then14: c∗ ← c∗ ⊕ ai15: else16: C ← C ∪ {ai}17: end if18: end for19: return C

patterns which are similarly distributed over word pairsmust be semantically similar.

We represent a pattern a by a vector a of word-pair frequencies. We designate a, the word-pair frequencyvector of pattern a. It is analogous to the document fre-quency vector of a word, as used in information retrieval.The value of the element corresponding to a word pair(Pi, Qi) in a, is the frequency, f(Pi, Qi, a), that the patterna occurs with the word pair (Pi, Qi). As demonstratedlater, the proposed pattern extraction algorithm typicallyextracts a large number of lexical patterns. Clusteringalgorithms based on pairwise comparisons among allpatterns are prohibitively time consuming when thepatterns are numerous. Next, we present a sequentialclustering algorithm to efficiently cluster the extractedpatterns.

Given a set Λ of patterns and a clustering similaritythreshold θ, Algorithm 1 returns clusters (of patterns)that express similar semantic relations. First, in Algo-rithm 1, the function SORT sorts the patterns intodescending order of their total occurrences in all wordpairs. The total occurrence µ(a) of a pattern a is the sumof frequencies over all word pairs, and is given by,

µ(a) =∑i

f(Pi, Qi, a). (5)

After sorting, the most common patterns appear at thebeginning in Λ, whereas rare patterns (i.e., patterns thatoccur with only few word pairs) get shifted to the end.Next, in line 2, we initialize the set of clusters, C, tothe empty set. The outer for-loop (starting at line 3),repeatedly takes a pattern ai from the ordered set Λ, andin the inner for-loop (starting at line 6), finds the cluster,

c∗ (∈ C) that is most similar to ai. First, we represent acluster by the centroid of all word pair frequency vectorscorresponding to the patterns in that cluster to computethe similarity between a pattern and a cluster. Next,we compute the cosine similarity between the clustercentroid (cj), and the word pair frequency vector of thepattern (ai). If the similarity between a pattern ai, andits most similar cluster, c∗, is greater than the thresholdθ, we append ai to c∗ (line 14). We use the operator ⊕to denote the vector addition between c∗ and ai. Thenwe form a new cluster {ai} and append it to the setof clusters, C, if ai is not similar to any of the existingclusters beyond the threshold θ.

By sorting the lexical patterns in the descending orderof their frequency and clustering the most frequentpatterns first, we form clusters for more common re-lations first. This enables us to separate rare patternswhich are likely to be outliers from attaching to oth-erwise clean clusters. The greedy sequential nature ofthe algorithm avoids pair-wise comparisons between alllexical patterns. This is particularly important becausewhen the number of lexical patterns is large as in ourexperiments (e.g. over 100, 000), pair-wise comparisonsbetween all patterns is computationally prohibitive. Theproposed clustering algorithm attempts to identify thelexical patterns that are similar to each other more thana given threshold value. By adjusting the threshold wecan obtain clusters with different granularity.

The only parameter in Algorithm 1, the similaritythreshold, θ, ranges in [0, 1]. It decides the purity of theformed clusters. Setting θ to a high value ensures thatthe patterns in each cluster are highly similar. However,high θ values also yield numerous clusters (increasedmodel complexity). In Section 3.6, we investigate, exper-imentally, the effect of θ on the overall performance ofthe proposed relational similarity measure.

The initial sort operation in Algorithm 1 can be carriedout in time complexity of O(nlogn), where n is thenumber of patterns to be clustered. Next, the sequentialassignment of lexical patterns to the clusters requirescomplexity of O(n|C|), where |C| is the number ofclusters. Typically, n is much larger than |C| (i.e. n�|C|).Therefore, the overall time complexity of Algorithm 1is dominated by the sort operation, hence O(nlogn).The sequential nature of the algorithm avoids pairwisecomparisons among all patterns. Moreover, sorting thepatterns by their total word-pair frequency prior toclustering ensures that the final set of clusters containsthe most common relations in the dataset.

3.5 Measuring Semantic Similarity

In Section 3.2 we defined four co-occurrence measuresusing page counts. Moreover, in Sections 3.3 and 3.4we showed how to extract clusters of lexical patternsfrom snippets to represent numerous semantic rela-tions that exist between two words. In this section,we describe a machine learning approach to combine

7

both page counts-based co-occurrence measures, andsnippets-based lexical pattern clusters to construct arobust semantic similarity measure.

Given N clusters of lexical patterns, first, we representa pair of words (P,Q) by an (N+4) dimensional featurevector fPQ. The four page counts-based co-occurrencemeasures defined in Section 3.2 are used as four distinctfeatures in fPQ. For completeness let us assume that (N+1)-st, (N + 2)-nd, (N + 3)-rd, and (N + 4)-th features areset respectively to WebJaccard, WebOverlap, WebDice,and WebPMI. Next, we compute a feature from each ofthe N clusters as follows. First, we assign a weight wijto a pattern ai that is in a cluster cj as follows,

wij =µ(ai)∑t∈cj µ(t)

. (6)

Here, µ(a) is the total frequency of a pattern a in all wordpairs, and it is given by (5). Because we perform a hardclustering on patterns, a pattern can belong to only onecluster (i.e. wij = 0 for ai /∈ cj). Finally, we compute thevalue of the j-th feature in the feature vector for a wordpair (P,Q) as follows,∑

ai∈cj

wijf(P,Q, ai). (7)

The value of the j-th feature of the feature vector fPQrepresenting a word pair (P,Q) can be seen as theweighted sum of all patterns in cluster cj that co-occurwith words P and Q. We assume that all patterns in acluster to represent a particular semantic relation. Con-sequently, the j-th feature value given by (7) expressesthe significance of the semantic relation represented bycluster j for word pair (P,Q). For example, if the weightwij is set to 1 for all patterns ai in a cluster cj , thenthe j-th feature value is simply the sum of frequen-cies of all patterns in cluster cj with words P and Q.However, assigning an equal weight to all patterns in acluster is not desirable in practice because some patternscan contain misspellings and/or can be grammaticallyincorrect. Equation (6) assigns a weight to a patternproportionate to its frequency in a cluster. If a patternhas a high frequency in a cluster, then it is likely tobe a canonical form of the relation represented by allthe patterns in that cluster. Consequently, the weightingscheme described by Equation (6) prefers high frequentpatterns in a cluster.

To train a two-class SVM to detect synonymousand non-synonymous word pairs, we utilize a trainingdataset S = {(Pk, Qk, yk)} of word pairs. S consistsof synonymous word pairs (positive training instances)and non-synonymous word pairs (negative training in-stances). Training dataset S is generated automaticallyfrom WordNet synsets as described later in Section 3.6.Label yk ∈ {−1, 1} indicates whether the word pair(Pk, Qk) is a synonymous word pair (i.e. yk = 1) ora non-synonymous word pair (i.e. yk = −1). For eachword pair in S, we create an (N+4) dimensional featurevector as described above. To simplify the notation, let

us denote the feature vector of a word pair (Pk, Qk) byfk. Finally, we train a two-class SVM using the labeledfeature vectors.

Once we have trained an SVM using synonymous andnon-synonymous word pairs, we can use it to computethe semantic similarity between two given words. Fol-lowing the same method we used to generate featurevectors for training, we create an (N + 4) dimensionalfeature vector f∗ for a pair of words (P ∗, Q∗), betweenwhich we must measure semantic similarity. We definethe semantic similarity sim(P ∗, Q∗) between P ∗ and Q∗

as the posterior probability, p(y∗ = 1|f∗), that the fea-ture vector f∗ corresponding to the word-pair (P ∗, Q∗)belongs to the synonymous-words class (i.e. y∗ = 1).sim(P ∗, Q∗) is given by,

sim(P ∗, Q∗) = p(y∗ = 1|f∗). (8)

Because SVMs are large margin classifiers, the outputof an SVM is the distance from the classification hy-perplane. The distance d(f∗) to an instance f∗ from theclassification hyperplane is given by,

d(f∗) = h(f∗) + b.

Here, b is the bias term and the hyperplane, h(f∗), isgiven by,

h(f∗) =∑i

ykαkK(fk, f∗).

Here, αk is the Lagrange multiplier corresponding tothe support vector fk

3, and K(fk, f∗) is the value of

the kernel function for a training instance fk and theinstance to classify, f∗. However, d(f∗) is not a cali-brated posterior probability. Following Platt [30], we usesigmoid functions to convert this uncalibrated distanceinto a calibrated posterior probability. The probability,p(y = 1|d(f)), is computed using a sigmoid functiondefined over d(f) as follows,

p(y = 1|d(f)) =1

1 + exp(λd(f) + µ).

Here, λ and µ are parameters which are determinedby maximizing the likelihood of the training data. Log-likelihood of the training data is given by,

L(λ, µ) =

N∑k=1

log p(yk|fk;λ, µ) (9)

=

N∑k=1

{tk log(pk) + (1− tk) log(1− pk)}.

Here, to simplify the notation we have used tk = (yk +1)/2 and pk = p(yk = 1|fk). The maximization in (9)with respect to parameters λ and µ is performed usingmodel-trust minimization [31].

3. From K.K.T. conditions it follows that the Lagrange multiplierscorresponding to non-support vectors become zero.

8

TABLE 1No. of patterns extracted for training data.

word pairs synonymous non-synonymous# word pairs 3000 3000# extracted patterns 5365398 515848# selected patterns 270762 38978

3.6 Training

To train the two-class SVM described in Section 3.5, werequire both synonymous and non-synonymous wordpairs. We use WordNet, a manually created Englishdictionary, to generate the training data required by theproposed method. For each sense of a word, a set ofsynonymous words is listed in WordNet synsets. Werandomly select 3000 nouns from WordNet, and extracta pair of synonymous words from a synset of eachselected noun. If a selected noun is polysemous, then weconsider the synset for the dominant sense. Obtaininga set of non-synonymous word pairs (negative traininginstances) is difficult, because there does not exist a largecollection of manually created non-synonymous wordpairs. Consequently, to create a set of non-synonymousword pairs, we adopt a random shuffling technique.Specifically, we first randomly select two synonymousword pairs from the set of synonymous word pairscreated above, and exchange two words between wordpairs to create two new word pairs. For example, fromtwo synonymous word pairs (A,B) and (C,D), wegenerate two new pairs (A,C) and (B,D). If the newlycreated word pairs do not appear in any of the wordnet synsets, we select them as non-synonymous wordpairs. We repeat this process until we create 3000 non-synonymous word pairs. Our final training dataset con-tains 6000 word pairs (i.e. 3000 synonymous word pairsand 3000 non-synonymous word pairs).

Next, we use the lexical pattern extraction algorithmdescribed in Section 3.3 to extract numerous lexicalpatterns for the word pairs in our training dataset. Weexperimentally set the parameters in the pattern extrac-tion algorithm to L = 5, g = 2, G = 4, and T = 5. Table 1shows the number of patterns extracted for synonymousand non-synonymous word pairs in the training dataset.As can be seen from Table 1, the proposed patternextraction algorithm typically extracts a large numberof lexical patterns. Figs. 5 and 6 respectively, show thedistribution of patterns extracted for synonymous andnon-synonymous word pairs. Because of the noise inweb snippets such as, ill-formed snippets and misspells,most patterns occur only a few times in the list ofextracted patterns. Consequently, we ignore any patternsthat occur less than 5 times. Finally, we de-duplicatethe patterns that appear for both synonymous and non-synonymous word pairs to create a final set of 302286lexical patterns. The remainder of the experiments de-scribed in the paper use this set of lexical patterns.

We determine the clustering threshold θ as follows,

0.5 1 1.5 2 2.5 3 3.5 4 4.5 5 5.5

0

2

4

6

8

10

12

14

16

18x 10

4

log pattern frequency

no

. o

f u

niq

ue

pa

tte

rns

Fig. 5. Distribution of patterns extracted from synony-mous word pairs.

0.5 1 1.5 2 2.5 3 3.5 4 4.5

0

0.5

1

1.5

2

2.5x 10

4

log pattern frequency

no

. o

f u

niq

ue

pa

tte

rns

Fig. 6. Distribution of patterns extracted from non-synonymous word pairs.

First, we we run Algorithm 1 for different θ values, andwith each set of clusters we compute feature vectorsfor synonymous word pairs as described in Section 3.5.Let W denote the set of synonymous word pairs (i.e.W = {(Pi, Qi)|(Pi, Qi, yi) ∈ S, yi = 1}). Moreover, let fWbe the centroid vector of all feature vectors representingsynonymous word pairs, which is given by,

fW =1

|W |∑

(P,Q)∈W

fPQ. (10)

Next, we compute the average Mahalanobis distance,D(θ), between fW and feature vectors that representsynonymous as follows,

D(θ) =1

|W |∑

(P,Q)∈WMahala(fW, fPQ). (11)

Here, |W | is the number of word pairs in W , and

9

mahala(fW, fPQ) is the Mahalanobis distance defined by,

mahala(fw, fPQ) = (fw − fPQ)TC−1(fw − fPQ) (12)

Here, C−1 is the inverse of the inter-cluster correlationmatrix, C, where the (i, j) element of C is defined to bethe inner-product between the vectors ci, cj correspond-ing to clusters ci and cj . Finally, we set the optimumvalue of clustering threshold, θ̂, to the value of θ thatminimizes the average Mahalanobis distance as follows,

θ̂ = arg minθ∈[0,1]

D(θ).

Alternatively, we can define the reciprocal of D(θ) asaverage similarity, and minimize this quantity. Notethat the average in (11) is taken over a large numberof synonymous word pairs (3000 word pairs in W ),which enables us to determine θ robustly. Moreover,we consider Mahalanobis distance instead of Euclideandistances, because a set of pattern clusters might notnecessarily be independent. For example, we wouldexpect a certain level of correlation between the twoclusters that represent an is-a relation and a has-a relation.Mahalanobis distance consider the correlation betweenclusters when computing distance. Note that if we takethe identity matrix as C in (12), then we get the Eu-clidean distance.

Fig. 7 plots average similarity between centroid featurevector and all synonymous word pairs for differentvalues of θ. From Fig. 7, we see that initially averagesimilarity increases when θ is increased. This is becauseclustering of semantically related patterns reduces thesparseness in feature vectors. Average similarity is stablewithin a range of θ values between 0.5 and 0.7. However,increasing θ beyond 0.7 results in a rapid drop of averagesimilarity. To explain this behavior consider Fig. 8 wherewe plot the sparsity of the set of clusters (i.e. the ratiobetween singletons to total clusters) against thresholdθ. As seen from Fig. 8, high θ values result in a highpercentage of singletons because only highly similarpatterns will form clusters. Consequently, feature vectorsfor different word pairs do not have many features incommon. The maximum average similarity score of 1.31is obtained with θ = 0.7, corresponding to 32, 207 totalclusters out of which 23, 836 are singletons with exactlyone pattern (sparsity = 0.74). For the remainder of theexperiments in this paper we set θ to this optimal valueand use the corresponding set of clusters.

We train an SVM with a radial basis function (RBF)kernel. Kernel parameter γ and soft-margin trade-off Cis respectively set to 0.0078125 and 131072 using 5-foldcross-validation on training data. We used LibSVM4 asthe SVM implementation. Remainder of the experimentsin the paper use this trained SVM model.

4. http://www.csie.ntu.edu.tw/∼cjlin/libsvm/

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

1.1

1.2

1.3

1.4

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

Av

erag

e S

imil

arit

y

Clustering Threshold

Fig. 7. Average similarity vs. clustering threshold θ

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

Clu

ster

Sp

arsi

ty

Clustering Threshold

Fig. 8. Sparsity vs. clustering threshold θ

4 EXPERIMENTS

4.1 Benchmark DatasetsFollowing the previous work, we evaluate the pro-posed semantic similarity measure by comparing it withhuman ratings in three benchmark datasets: Miller-Charles (MC) [10], Rubenstein-Goodenough (RG) [32],and WordSimilarity-353 (WS) [33]. Each dataset containsa list of word pairs rated by multiple human annotators(MC: 28 pairs, 38 annotators, RG: 65 pairs, 36 annotators,WS: 353 pairs, 13 annotators). A semantic similaritymeasure is evaluated using the correlation between thesimilarity scores produced by it for the word pairsin a benchmark dataset and the human ratings. BothPearson correlation coefficient and Spearman correlationcoefficient have been used as evaluation measures in pre-vious work on semantic similarity. It is noteworthy thatPearson correlation coefficient can get severely affectedby non-linearities in ratings. Contrastingly, Spearmancorrelation coefficient first assigns ranks to each list ofscores, and then computes correlation between the twolists of ranks. Therefore, Spearman correlation is moreappropriate for evaluating semantic similarity measures,

10

which might not be necessarily linear. In fact, as we shallsee later, most semantic similarity measures are non-linear. Previous work that used RG and WS datasetsin their evaluations have chosen Spearman correlationcoefficient as the evaluation measure. However, for theMC dataset, which contains only 28 word pairs, Pearsoncorrelation coefficient has been widely used. To be ableto compare our results with previous work, we useboth Pearson and Spearman correlation coefficients forexperiments conducted on MC dataset, and Spearmancorrelation coefficient for experiments on RG and WSdatasets. It is noteworthy that we exclude all wordsthat appear in the benchmark dataset from the trainingdata created from WordNet as described in Section 3.6.Benchmark datasets are reserved for evaluation purposesonly and we do not train or tune any of the parametersusing benchmark datasets.

4.2 Semantic SimilarityTable 2 shows the experimental results on MC datasetfor the proposed method (Proposed); previously pro-posed web-based semantic similarity measures: Sahamiand Heilman [2] (SH), Co-occurrence double check-ing model [4] (CODC), and Normalized Google Dis-tance [12] (NGD); and the four page counts-based co-occurrence measures in Section 3.2. No Clust baseline ,which resembles our previously published work [34], isidentical to the proposed method in all aspects exceptfor that it does not use cluster information. It can beunderstood as the proposed method with each extractedlexical pattern in its own cluster. No Clust is expected toshow the effect of clustering on the performance of theproposed method. All similarity scores in Table 2 are nor-malized into [0, 1] range for the ease of comparison, andFisher’s confidence intervals are computed for Spearmanand Pearson correlation coefficients. NGD is a distancemeasure and was converted to a similarity score by tak-ing the inverse. Original papers that proposed NGD andSH measures did not present their results on MC dataset.Therefore, we re-implemented those systems followingthe original papers. The proposed method achieves thehighest Pearson and Spearman coefficients in Table 2and outperforms all other web-based semantic similar-ity measures. WebPMI reports the highest correlationamong all page counts-based co-occurrence measures.However, methods that use snippets such as SH andCODC, have better correlation scores. MC dataset con-tains polysemous words such as father (priest vs. parent),oracle (priest vs. database system) and crane (machinevs. bird), which are problematic for page counts-basedmeasures that do not consider the local context of aword. The No Clust baseline which combines both pagecounts and snippets outperforms the CODC measure bya wide margin of 0.2 points. Moreover, by clustering thelexical patterns we can further improve the No Clustbaseline.

Table 3 summarizes the experimental results on RGand WS datasets. Likewise on the MC dataset, the

TABLE 3Correlation with RG and WS datasets.

Method WS RGWebJaccard 0.26 [0.16, 0.35] 0.51 [0.30, 0.67]WebDice 0.26 [0.16, 0.35] 0.51 [0.30, 0.67]WebOverlap 0.27 [0.17, 0.36] 0.54 [0.34, 0.69]WebPMI 0.36 [0.26, 0.45] 0.49 [0.28, 0.66]CODC [4] 0.55 [0.48, 0.62] 0.65 [0.49, 0.77]SH [2] 0.36 [0.26, 0.45] 0.31 [0.07, 0.52]NGD [12] 0.40 [0.31, 0.48] 0.56 [0.37, 0.71]No Clust 0.53 [0.45, 0.60] 0.73 [0.60, 0.83]Proposed 0.74 [0.69, 0.78] 0.86 [0.78, 0.91]

TABLE 4Comparison with previous work on MC dataset.

Method Source PearsonWikirelate! [35] Wikipedia 0.46Sahami & Heilman [2] Web Snippets 0.58Gledson [36] Page Counts 0.55Wu & Palmer [37] WordNet 0.78Resnik [8] WordNet 0.74Leacock [38] WordNet 0.82Lin [11] WordNet 0.82Jiang & Conrath [39] WordNet 0.84Jarmasz [40] Roget’s 0.87Li et al. [9] WordNet 0.89Schickel-Zuber [41] WordNet 0.91Agirre et al [42] WordNet+Corpus 0.93Proposed WebSnippets+Page Counts 0.87

proposed method outperforms all other methods onRG and WS datasets. In contrast to MC dataset, theproposed method outperforms the No Clust baseline bya wide margin in RG and WS datasets. Unlike the MCdataset which contains only 28 word pairs, RG and WSdatasets contain a large number of word pairs. Therefore,more reliable statistics can be computed on RG and WSdatasets. Fig. 9 shows the similarity scores produced bysix methods against human ratings in the WS dataset.We see that all methods deviate from the y = x line,and are not linear. We believe this justifies the use ofSpearman correlation instead of Pearson correlation byprevious work on semantic similarity as the preferredevaluation measure.

Tables 4, 5, and 6 respectively compare the proposedmethod against previously proposed semantic similaritymeasures. Despite the fact that the proposed methoddoes not require manually created resources such asWordNet, Wikipedia or fixed corpora, the performanceof the proposed method is comparable with methodsthat use such resources. The non-dependence on dic-tionaries is particularly attractive when measuring thesimilarity between named-entities which are not well-covered by dictionaries such as WordNet. We furtherevaluate the ability of the proposed method to computethe semantic similarity between named-entities in Sec-tion 4.3.

In Table 7 we analyze the effect of clustering. Wecompare No Clust (i.e. does not use any clustering in-formation in feature vector creation), singletons excluded(remove all clusters with only one pattern), and single-

11

TABLE 2Semantic similarity scores on MC dataset

word pair MC WebJaccard WebDice WebOverlap WebPMI CODC [4] SH [2] NGD [12] No Clust Proposedautomobile-car 1.00 0.65 0.66 0.83 0.43 0.69 1.00 0.15 0.98 0.92journey-voyage 0.98 0.41 0.42 0.16 0.47 0.42 0.52 0.39 1.00 1.00gem-jewel 0.98 0.29 0.30 0.07 0.69 1.00 0.21 0.42 0.69 0.82boy-lad 0.96 0.18 0.19 0.59 0.63 0.00 0.47 0.12 0.97 0.96coast-shore 0.94 0.78 0.79 0.51 0.56 0.52 0.38 0.52 0.95 0.97asylum-madhouse 0.92 0.01 0.01 0.08 0.81 0.00 0.21 1.00 0.77 0.79magician-wizard 0.89 0.29 0.30 0.37 0.86 0.67 0.23 0.44 1.00 1.00midday-noon 0.87 0.10 0.10 0.12 0.59 0.86 0.29 0.74 0.82 0.99furnace-stove 0.79 0.39 0.41 0.10 1.00 0.93 0.31 0.61 0.89 0.88food-fruit 0.78 0.75 0.76 1.00 0.45 0.34 0.18 0.55 1.00 0.94bird-cock 0.77 0.14 0.15 0.14 0.43 0.50 0.06 0.41 0.59 0.87bird-crane 0.75 0.23 0.24 0.21 0.52 0.00 0.22 0.41 0.88 0.85implement-tool 0.75 1.00 1.00 0.51 0.30 0.42 0.42 0.91 0.68 0.50brother-monk 0.71 0.25 0.27 0.33 0.62 0.55 0.27 0.23 0.38 0.27crane-implement 0.42 0.06 0.06 0.10 0.19 0.00 0.15 0.40 0.13 0.06brother-lad 0.41 0.18 0.19 0.36 0.64 0.38 0.24 0.26 0.34 0.13car-journey 0.28 0.44 0.45 0.36 0.20 0.29 0.19 0.00 0.29 0.17monk-oracle 0.27 0.00 0.00 0.00 0.00 0.00 0.05 0.45 0.33 0.80food-rooster 0.21 0.00 0.00 0.41 0.21 0.00 0.08 0.42 0.06 0.02coast-hill 0.21 0.96 0.97 0.26 0.35 0.00 0.29 0.70 0.87 0.36forest-graveyard 0.20 0.06 0.06 0.23 0.49 0.00 0.00 0.54 0.55 0.44monk-slave 0.12 0.17 0.18 0.05 0.61 0.00 0.10 0.77 0.38 0.24coast-forest 0.09 0.86 0.87 0.29 0.42 0.00 0.25 0.36 0.41 0.15lad-wizard 0.09 0.06 0.07 0.05 0.43 0.00 0.15 0.66 0.22 0.23cord-smile 0.01 0.09 0.10 0.02 0.21 0.00 0.09 0.13 0.00 0.01glass-magician 0.01 0.11 0.11 0.40 0.60 0.00 0.14 0.21 0.18 0.05rooster-voyage 0.00 0.00 0.00 0.00 0.23 0.00 0.20 0.21 0.02 0.05noon-string 0.00 0.12 0.12 0.04 0.10 0.00 0.08 0.21 0.02 0.00

Spearman 1.00 0.39 0.39 0.40 0.52 0.69 0.62 0.13 0.83 0.85Lower 1.00 0.02 0.02 0.04 0.18 0.42 0.33 −0.25 0.66 0.69Upper 1.00 0.67 0.67 0.68 0.75 0.84 0.81 0.48 0.92 0.93

Pearson 1.00 0.26 0.27 0.38 0.55 0.69 0.58 0.21 0.83 0.87Lower 1.00 −0.13 −0.12 0.01 0.22 0.42 0.26 −0.18 0.67 0.73Upper 1.00 0.58 0.58 0.66 0.77 0.85 0.78 0.54 0.92 0.94

TABLE 5Comparison with previous work on RG dataset.

Method Source SpearmanWikirelate! [35] Wikipedia 0.56Gledson [36] Page Counts 0.55Jiang & Conrath [39] WordNet 0.73Hirst & St. Onge [43] WordNet 0.73Resnik [8] WordNet 0.80Lin [11] WordNet 0.83Leacock [38] WordNet 0.85Proposed WebSnippets+Page Counts 0.86

TABLE 6Comparison with previous work on WS dataset.

Method Source SpearmanJarmasz [40] WordNet 0.35Wikirelate! [35] Wikipedia 0.48Jarmasz [40] Roget’s 0.55Hughes & Ramage [44] WordNet 0.55Finkelstein et al. [33] Corpus+WordNet 0.56Gabrilovich [45] ODP 0.65Gabrilovich [45] Wikipedia 0.75Proposed WebSnippets+Page Counts 0.74

tons included (considering all clusters). From Table 7 wesee in all three datasets, we obtain the best results byconsidering all clusters (singletons incl.). If we remove allsingletons, then the performance drops below No Clust.

TABLE 7Effect of pattern clustering (Spearman).

Method MC RG WSNo Clust 0.83 0.73 0.53[upper, lower] [0.66, 0.92] [0.60, 0.83] [0.45, 0.60]singletons excl. 0.59 0.68 0.49[upper, lower] [0.29, 0.79] [0.52, 0.79] [0.40, 0.56]singletons incl. 0.85 0.86 0.74[upper, lower] [0.69, 0.93] [0.78, 0.91] [0.69, 0.78]

TABLE 8Page counts vs Snippets. (Spearman)

Method MC RG WSPage counts only 0.57 0.57 0.37[upper, lower] [0.25, 0.77] [0.39, 0.72] [0.29, 0.46]Snippets only 0.82 0.85 0.72[upper, lower] [0.65, 0.91] [0.77, 0.90] [0.71, 0.79]Both 0.85 0.86 0.74[upper, lower] [0.69, 0.93] [0.78, 0.91] [0.69, 0.78]

Note that out of the 32.207 clusters used by the proposedmethod, 23, 836 are singletons (sparsity=0.74). Therefore,if we remove all singletons, we cannot represent someword pairs adequately, resulting in poor performance.

Table 8 shows the contribution of page counts-basedsimilarity measures, and lexical patterns extracted fromsnippets, on the overall performance of the proposed

12

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(a) WebPMI (0.36)

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(b) CODC (0.55)

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(c) SH (0.36)

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(d) NGD (0.40)

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(e) No Clust (0.53)

0 0.2 0.4 0.6 0.8 10

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

(f) Proposed (0.74)

Fig. 9. Spearman rank correlation between various similarity measures (y-axis) and human ratings (x-axis) on the WSdataset.

method. To evaluate the effect of page counts-based co-occurrence measures on the proposed method, we gener-ate feature vectors only using the four page counts-basedco-occurrence measures, to train an SVM. Similarly, toevaluate the effect of snippets, we generate feature vec-tors only using lexical pattern clusters. From Table 8 wesee that on all three datasets, snippets have a greaterimpact on the performance of the proposed method thanpage counts. By considering both page counts as wellas snippets we can further improve the performancereported by individual methods. The improvement inperformance when we use snippets only is statisticallysignificant over that when we use page counts only inRG and WS datasets. However, the performance gain inthe combination is not statistically significant. We believethat this is because most of the words in the benchmarkdatasets are common nouns that co-occur a lot in websnippets. On the other hand, having page counts in themodel is particularly useful when two words do notappear in numerous lexical patterns.

4.3 Community Mining

Measuring the semantic similarity between named en-tities is vital in many applications such as query ex-pansion [2], entity disambiguation (e.g. namesake dis-ambiguation) and community mining [46]. Because most

named entities are not covered by WordNet, similaritymeasures that are based on WordNet cannot be useddirectly in these tasks. Unlike common English words,named entities are being created constantly. Manuallymaintaining an up-to-date taxonomy of named entities iscostly, if not impossible. The proposed semantic similar-ity measure is appealing for these applications becauseit does not require pre-compiled taxonomies.

In order to evaluate the performance of the proposedmeasure in capturing the semantic similarity betweennamed-entities, we set up a community mining task.We select 50 personal names from 5 communities: tennisplayers, golfers, actors, politicians and scientists5 , (10 namesfrom each community) from the open directory project(DMOZ)6. For each pair of names in our data set, wemeasure their similarity using the proposed method andbaselines. We use group-average agglomerative hierar-chical clustering (GAAC) to cluster the names in ourdataset into five clusters.

Initially, each name is assigned to a separate cluster.In subsequent iterations, group average agglomerativeclustering process, merges the two clusters with highestcorrelation. Correlation, Corr(Γ) between two clusters A

5. www.miv.t.u-tokyo.ac.jp/danushka/data/people.tgz6. http://dmoz.org

13

and B is defined as the following,

Corr(Γ) =1

2

1

|Γ|(|Γ| − 1)

∑(u,v)∈Γ

sim(u, v)

Here, Γ is the merger of the two clusters A and B.|Γ| denotes the number of elements (persons) in Γ andsim(u, v) is the semantic similarity between two personsu and v in Γ. We terminate GAAC process when exactlyfive clusters are formed. We adopt this clustering methodwith different semantic similarity measures sim(u, v) tocompare their accuracy in clustering people who belongto the same community.

We employed the B-CUBED metric [47] to evaluatethe clustering results. The B-CUBED evaluation metricwas originally proposed for evaluating cross-documentco-reference chains. It does not require the clusters to belabelled. We compute precision, recall and F -score foreach name in the data set and average the results overthe dataset. For each person p in our data set, let usdenote the cluster that p belongs to by C(p). Moreover,we use A(p) to denote the affiliation of person p, e.g.,A(“Tiger Woods”) =“Tennis Player”. Then we calculateprecision and recall for person p as,

Precision(p) =No. of people in C(p) with affiliation A(p)

No. of people in C(p),

Recall(p) =No. of people in C(p) with affiliation A(p)

Total No. of people with affiliation A(p).

Since, we selected 10 people from each of the fivecategories, the total number of people with a particularaffiliation is 10 for all the names p. Then, the F -score ofperson p is defined as,

F(p) =2× Precision(p)× Recall(p)

Precision(p) + Recall(p).

Overall precision, recall and F -score are computed bytaking the averaged sum over all the names in thedataset.

Precision =1

N

∑p∈DataSet

Precision(p)

Recall =1

N

∑p∈DataSet

Recall(p)

F−Score =1

N

∑p∈DataSet

F(p)

Here, DataSet is the set of 50 names selected fromthe open directory project. Therefore, N = 50 in ourevaluations.

Experimental results are shown in Table 9. The pro-posed method shows the highest entity clustering accu-racy in Table 9 with a statistically significant (p ≤ 0.01Tukey HSD) F score of 0.86. Sahami et al. [2]’s snippet-based similarity measure, WebJaccard, WebDice and We-bOverlap measures yield similar clustering accuracies.By clustering semantically related lexical patterns, we seethat both precision as well as recall can be improved ina community mining task.

TABLE 9Results for Community Mining

Method Precision Recall F MeasureWebJaccard 0.59 0.71 0.61WebOverlap 0.59 0.68 0.59WebDice 0.58 0.71 0.61WebPMI 0.26 0.42 0.29Sahami [2] 0.63 0.66 0.64Chen [4] 0.47 0.62 0.49No Clust 0.79 0.80 0.78Proposed 0.85 0.87 0.86

5 CONCLUSION

We proposed a semantic similarity measures using bothpage counts and snippets retrieved from a web searchengine for two words. Four word co-occurrence mea-sures were computed using page counts. We proposed alexical pattern extraction algorithm to extract numeroussemantic relations that exist between two words. More-over, a sequential pattern clustering algorithm was pro-posed to identify different lexical patterns that describethe same semantic relation. Both page counts-based co-occurrence measures and lexical pattern clusters wereused to define features for a word pair. A two-classSVM was trained using those features extracted forsynonymous and non-synonymous word pairs selectedfrom WordNet synsets. Experimental results on threebenchmark datasets showed that the proposed methodoutperforms various baselines as well as previously pro-posed web-based semantic similarity measures, achiev-ing a high correlation with human ratings. Moreover, theproposed method improved the F score in a communitymining task, thereby underlining its usefulness in real-world tasks, that include named-entities not adequatelycovered by manually created resources.

REFERENCES

[1] A. Kilgarriff, “Googleology is bad science,” Computational Linguis-tics, vol. 33, pp. 147–151, 2007.

[2] M. Sahami and T. Heilman, “A web-based kernel function formeasuring the similarity of short text snippets,” in Proc. of 15thInternational World Wide Web Conference, 2006.

[3] D. Bollegala, Y. Matsuo, and M. Ishizuka, “Disambiguatingpersonal names on the web using automatically extracted keyphrases,” in Proc. of the 17th European Conference on ArtificialIntelligence, 2006, pp. 553–557.

[4] H. Chen, M. Lin, and Y. Wei, “Novel association measures usingweb search with double checking,” in Proc. of the COLING/ACL2006, 2006, pp. 1009–1016.

[5] M. Hearst, “Automatic acquisition of hyponyms from large textcorpora,” in Proc. of 14th COLING, 1992, pp. 539–545.

[6] M. Pasca, D. Lin, J. Bigham, A. Lifchits, and A. Jain, “Organizingand searching the world wide web of facts - step one: the one-million fact extraction challenge,” in Proc. of AAAI-2006, 2006.

[7] R. Rada, H. Mili, E. Bichnell, and M. Blettner, “Development andapplication of a metric on semantic nets,” IEEE Transactions onSystems, Man and Cybernetics, vol. 9(1), pp. 17–30, 1989.

[8] P. Resnik, “Using information content to evaluate semantic simi-larity in a taxonomy,” in Proc. of 14th International Joint Conferenceon Aritificial Intelligence, 1995.

[9] D. M. Y. Li, Zuhair A. Bandar, “An approch for measuringsemantic similarity between words using multiple informationsources,” IEEE Transactions on Knowledge and Data Engineering, vol.15(4), pp. 871–882, 2003.

14

[10] G. Miller and W. Charles, “Contextual correlates of semanticsimilarity,” Language and Cognitive Processes, vol. 6(1), pp. 1–28,1998.

[11] D. Lin, “An information-theoretic definition of similarity,” in Proc.of the 15th ICML, 1998, pp. 296–304.

[12] R. Cilibrasi and P. Vitanyi, “The google similarity distance,” IEEETransactions on Knowledge and Data Engineering, vol. 19, no. 3, pp.370–383, 2007.

[13] M. Li, X. Chen, X. Li, B. Ma, and P. Vitanyi, “The similaritymetric,” IEEE Transactions on Information Theory, vol. 50, no. 12,pp. 3250–3264, 2004.

[14] P. Resnik, “Semantic similarity in a taxonomy: An informationbased measure and its application to problems of ambiguity innatural language,” Journal of Aritificial Intelligence Research, vol. 11,pp. 95–130, 1999.

[15] R. Rosenfield, “A maximum entropy approach to adaptive statis-tical modelling,” Computer Speech and Language, vol. 10, pp. 187–228, 1996.

[16] D. Lin, “Automatic retrieival and clustering of similar words,” inProc. of the 17th COLING, 1998, pp. 768–774.

[17] J. Curran, “Ensemble menthods for automatic thesaurus extrac-tion,” in Proc. of EMNLP, 2002.

[18] C. Buckley, G. Salton, J. Allan, and A. Singhal, “Automatic queryexpansion using smart: Trec 3,” in Proc. of 3rd Text REtreivalConference, 1994, pp. 69–80.

[19] V. Vapnik, Statistical Learning Theory. Wiley, Chichester, GB, 1998.[20] K. Church and P. Hanks, “Word association norms, mutual infor-

mation and lexicography,” Computational Linguistics, vol. 16, pp.22–29, 1991.

[21] Z. Bar-Yossef and M. Gurevich, “Random sampling from a searchengine’s index,” in Proceedings of 15th International World Wide WebConference, 2006.

[22] F. Keller and M. Lapata, “Using the web to obtain frequencies forunseen bigrams,” Computational Linguistics, vol. 29(3), pp. 459–484,2003.

[23] M. Lapata and F. Keller, “Web-based models ofr natural languageprocessing,” ACM Transactions on Speech and Language Processing,vol. 2(1), pp. 1–31, 2005.

[24] R. Snow, D. Jurafsky, and A. Ng, “Learning syntactic patternsfor automatic hypernym discovery,” in Proc. of Advances in NeuralInformation Processing Systems (NIPS) 17, 2005, pp. 1297–1304.

[25] M. Berland and E. Charniak, “Finding parts in very large cor-pora,” in Proc. of ACL’99, 1999, pp. 57–64.

[26] D. Ravichandran and E. Hovy, “Learning surface text patterns fora question answering system,” in Proc. of ACL ’02, 2001, pp. 41–47.

[27] R. Bhagat and D. Ravichandran, “Large scale acquisition of para-phrases for learning surface patterns,” in Proc. of ACL’08: HLT,2008, pp. 674–682.

[28] J. Pei, J. Han, B. Mortazavi-Asi, J. Wang, H. Pinto, Q. Chen,U. Dayal, and M. Hsu, “Mining sequential patterns by pattern-growth: the prefixspan approach,” IEEE Transactions on Knowledgeand Data Engineering, vol. 16, no. 11, pp. 1424–1440, 2004.

[29] Z. Harris, “Distributional structure,” Word, vol. 10, pp. 146–162,1954.

[30] J. Platt, “Probabilistic outputs for support vector machines andcomparison to regularized likelihood methods,” Advances in LargeMargin Classifiers, pp. 61–74, 2000.

[31] P. Gill, W. Murray, and M. Wright, Practical optimization. Aca-demic Press, 1981.

[32] H. Rubenstein and J. Goodenough, “Contextual correlates ofsynonymy,” Communications of the ACM, vol. 8, pp. 627–633, 1965.

[33] L. Finkelstein, E. Gabrilovich, Y. Matias, E. Rivlin, z. Solan,G. Wolfman, and E. Ruppin, “Placing search in context: The con-cept revisited,” ACM Transactions on Information Systems, vol. 20,pp. 116–131, 2002.

[34] D. Bollegala, Y. Matsuo, and M. Ishizuka, “Measuring semanticsimilarity between words using web search engines,” in Proc. ofWWW ’07, 2007, pp. 757–766.

[35] M. Strube and S. P. Ponzetto, “Wikirelate! computing semanticrelatedness using wikipedia,” in Proc. of AAAI’06, 2006, pp. 1419–1424.

[36] A. Gledson and J. Keane, “Using web-search results to measureword-group similarity,” in Proc. of COLING’08, 2008, pp. 281–288.

[37] Z. Wu and M. Palmer, “Verb semantics and lexical selection,” inProc. of ACL’94, 1994, pp. 133–138.

[38] C. Leacock and M. Chodorow, “Combining local context andwordnet similarity for word sense disambiguation,” WordNet: AnElectronic Lexical Database, vol. 49, pp. 265–283, 1998.

[39] J. Jiang and D. Conrath, “Semantic similarity based on corpusstatistics and lexical taxonomy,” in Proc. of the International Con-ference on Research in Computational Linguistics ROCLING X, 1997.

[40] M. Jarmasz, “Roget’s thesaurus as a lexical resource for naturallanguage processing,” University of Ottowa, Tech. Rep., 2003.

[41] V. Schickel-Zuber and B. Faltings, “Oss: A semantic similarityfunction based on hierarchical ontologies,” in Proc. of IJCAI’07,2007, pp. 551–556.

[42] E. Agirre, E. Alfonseca, K. Hall, J. Kravalova, M. Pasca, andA. Soroa, “A study on similarity and relatedness using distribu-tional and wordnet-based approaches,” in Proc. of NAACL-HLT’09,2009.

[43] G. Hirst, , and D. St-Onge, “Lexical chains as representationsof context for the detection and correction of malapropisms.”WordNet: An Electronic Lexical Database, pp. 305–?32, 1998.

[44] T. Hughes and D. Ramage, “Lexical semantic relatedness withrandom graph walks,” in Proc. of EMNLP-CoNLL’07, 2007, pp.581–589.

[45] E. Gabrilovich and S. Markovitch, “Computing semantic related-ness using wikipedia-based explicit semantic analysis,” in Proc. ofIJCAI’07, 2007, pp. 1606–1611.

[46] Y. Matsuo, J. Mori, M. Hamasaki, K. Ishida, T. Nishimura,H. Takeda, K. Hasida, and M. Ishizuka, “Polyphonet: An ad-vanced social network extraction system,” in Proc. of 15th Interna-tional World Wide Web Conference, 2006.

[47] A. Bagga and B. Baldwin, “Entity-based cross document corefer-encing using the vector space model,” in Proc. of 36th COLING-ACL, 1998, pp. 79–85.

Danushka Bollegala received his BS, MS andPhD degrees from the University of Tokyo, Japanin 2005, 2007, and 2009. He is currently a re-search fellow of the Japanese society for the pro-motion of science (JSPS). His research interestsare natural language processing, Web miningand artificial intelligence.

Yutaka Matsuo is an associate professor atInstitute of Engineering Innovation, the Univer-sity of Tokyo, Japan. He received his BS, MS,and PhD degrees from the University of Tokyoin 1997, 1999, and 2002. He joined NationalInstitute of Advanced Industrial Science andTechnology (AIST) from 2002 to 2007. He isinterested in social network mining, text process-ing, and semantic web in the context of artificialintelligence research.

Mitsuru Ishizuka (M’79) is a professor at Grad-uate School of Information Science and Technol-ogy, the University of Tokyo, Japan. He receivedhis BS and PhD degrees in electronic engineer-ing from the University of Tokyo in 1971 and1976. His research interests include artificial in-telligence, Web intelligence, and lifelike agents.

Related Documents