

International Journal of Information Technology & Management Information System (IJITMIS), ISSN 0976 – 6405(Print), ISSN 0976 – 6413(Online) Volume 4, Issue 3, September - December (2013), © IAEME


DESIGN A TEXT-PROMPT SPEAKER RECOGNITION SYSTEM USING LPC-DERIVED FEATURES

Dr. Mustafa Dhiaa Al-Hassani¹, Dr. Abdulkareem A. Kadhim²

¹Computer Science/Mustansiriyah University, Baghdad, Iraq

²College of Information Technology/Al-Nahrain University, Baghdad, Iraq

ABSTRACT

Humans interact ever more closely with computers, and computers are taking over many services that used to rely on face-to-face contact between humans. This has prompted active development in the field of biometric systems, and biometric information is now widely used for both person identification and security applications. This paper is concerned with the use of speaker features for protection against unauthorized access. A speaker recognition system that relies on LPC-derived features is presented and evaluated on 6304 speech samples. A vocabulary of 46 speech samples is built for 10 speakers, and each authorized person is asked to utter every sample 10 times. Two different modes are considered for identifying individuals from their speech samples. In the closed-set speaker identification mode, all tested LPC-derived features are found to outperform the raw LPC coefficients, with identification rates of 84% to 97%. Applying preprocessing steps to the speech signals (preemphasis, DC offset removal, frame blocking, overlapping, normalization and windowing) improves the representation of speech features, and an identification rate of up to 100% is obtained using weighted Linear Predictive Cepstral Coefficients (LPCC). In the open-set speaker verification mode of the proposed system model, the system randomly selects a pass phrase of eight samples from its database for each trial in which a speaker is presented to the system. A total of 213 text-prompt trials from 23 different speakers (authorized and unauthorized) are recorded (i.e., 1704 samples) in order to study the system behavior and to determine the optimal threshold for accepting or rejecting speakers when they are compared with the training references of authorized speakers constructed in the first mode. The best speaker verification rate obtained is greater than 99%.

Keywords: Biometric, LPC-derived features, LSF, Speaker Recognition, Speaker Identification, Speaker Verification, Text-prompt.

International Journal of Information Technology & Management Information System (IJITMIS), ISSN 0976 – 6405(Print), ISSN 0976 – 6413(Online), Volume 4, Issue 3, September - December (2013), pp. 68-84, © IAEME: http://www.iaeme.com/IJITMIS.asp

Journal Impact Factor (2013): 5.2372 (Calculated by GISI), www.jifactor.com


I. INTRODUCTION

As everyday life becomes more and more computerized, automated security systems are becoming increasingly important. Today most personal banking tasks can be performed over the Internet, and soon they will also be performed on mobile devices such as cell phones and PDAs. The key task of an automated security system is to verify that users are in fact who they claim to be [1].

As security breaches and transaction fraud increase, the need for highly secure identification and personal verification technologies is becoming apparent. Biometric-based solutions can help secure confidential financial transactions and protect personal data privacy [2]. The need for biometrics can be found in federal, state and local governments, in the military, and in commercial applications [1, 3]. A biometric system is essentially a pattern recognition system that establishes the authenticity of a specific physiological or behavioral characteristic possessed by a user. Such systems are typically based on a single biometric feature of humans, but several hybrid systems also exist [2, 4, 5, 1, 6].

The human voice can serve as a key for securing objects, and it cannot easily be lost or forgotten. Voice can therefore be used to verify the identity claimed by people accessing systems; that is, it enables voice-based control of access to various services [3, 7]. Speaker recognition has for many years received the attention of researchers working in the field of signal processing. The technology has been developed to the point where it can be used in a number of applications, such as voice dialing, banking over a telephone network, person authentication, remote access to computers, command and control systems, network security and protection, entry and access control systems, data access/information retrieval, and monitoring [8, 5, 9, 10, 11].

II. AIM OF THE WORK

This work aims to build a speaker recognition (identification/verification) system that automatically authenticates a speaker's identity by his/her voice, according to a random text prompt generated by the system, and then grants only authorized persons the privilege or right to access a facility that needs to be protected from intrusion by unauthorized persons.

III. THE PROPOSED SPEAKER RECOGNITION SYSTEM MODEL

In this section, several linear-prediction-based methods (LPC, PARCOR, LAR, ASRC, LPCC, and LSF) are tested for text-dependent speaker recognition in closed-set mode. The open-set speaker verification mode is also investigated, in which a speaker is verified against a randomly generated text-prompt sentence. The block diagram of the proposed speaker recognition system model, shown in Fig. (1), illustrates that the input speech passes through six preprocessing operations (preemphasis, DC offset removal, frame blocking, overlapping, normalization and windowing) before the feature extraction phase. In verification, if the matching distance is lower than a certain threshold, the identity claim is verified ("Accepted"); otherwise, the speaker is "Rejected" [1].


Figure (1): Block-Diagram of the proposed Speaker Recognition System Model

3.1. Speech Recording

Any speaker recognition system depends on recorded speech samples as input data. The speech signals used for training and testing are recorded in quiet (but not soundproof) rooms via a high-quality built-in microphone and digitized by a Crystal-Intel(R) integrated audio sound card on a DELL Latitude C400 notebook, with the following recording features: .wav file format, 11 kHz sampling rate, 2 bytes/sample and a single channel [1].

3.2. Database Construction

In this work, database samples were recorded in two modes of operation:

• Closed-set speaker identification mode
• Open-set speaker verification mode

In order to evaluate the identification/verification performance of the proposed system model, each speaker is asked to utter each sample of the vocabulary data sets shown in Table-1 up to 10 times.

R repetitions (1 ≤ R < 10) of each sample are used as the training set during an enrollment phase to build the models of authorized speakers, and the remaining (10 − R) repetitions are used for testing during a matching phase, in which they are classified against the training references in the database. The total database size for this mode, and its split into training references and test samples, are therefore [1]:

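$$\text{Total DB Size} = 10 \times \text{No. of Samples} \times \text{No. of Speakers} \qquad (1)$$

$$\text{No. of Training References} = R \times \text{No. of Samples} \times \text{No. of Speakers} \qquad (2)$$

$$\text{No. of Test Samples} = (10 - R) \times \text{No. of Samples} \times \text{No. of Speakers} \qquad (3)$$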
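Eqs. (1)-(3) are simple products, and the short Python sketch below evaluates them. The function name `database_sizes` is hypothetical, and R = 5 is only an illustrative choice, since this section does not state which value of R was used.

```python
def database_sizes(num_samples: int, num_speakers: int, r: int, repetitions: int = 10):
    """Evaluate Eqs. (1)-(3): (total DB size, training references, test samples)."""
    if not 1 <= r < repetitions:
        raise ValueError("R must satisfy 1 <= R < repetitions")
    total = repetitions * num_samples * num_speakers          # Eq. (1)
    training = r * num_samples * num_speakers                 # Eq. (2)
    testing = (repetitions - r) * num_samples * num_speakers  # Eq. (3)
    return total, training, testing


# 46 vocabulary samples (Table-1), 10 speakers; R = 5 is a hypothetical choice.
print(database_sizes(46, 10, r=5))  # (4600, 2300, 2300)
```

The total of 4600 is independent of R and matches the number of closed-set samples reported for this mode.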


Table-1: The recorded speech samples

| Data Set | Speech Samples |
|---|---|
| 1) Digits | 0 ... 9 |
| 2) Characters | 'A' ... 'Z' |
| 3) Words | Accept, Reject, Open, Close, Help, Computer, Yes, No, Copy, Paste |

These data sets are of practical interest because the similarities between several samples (especially letters) expose important problems in speech recognition.

In the closed-set speaker identification mode, 4600 samples (46 samples × 10 repetitions × 10 speakers) were collected, whereas 1704 samples were recorded in the open-set speaker verification mode.

In the open-set speaker verification mode of the proposed system model, the system randomly selects a pass phrase of eight samples from its database for each trial in which a speaker is presented to the system. A total of 213 text-prompt trials from different speakers (authorized and unauthorized) are recorded (i.e., 1704 samples). Each element of a generated text-prompt sentence, shown in Table-2, is a random number between 1 and 46 that corresponds to one of the samples in the vocabulary of Table-1. These recordings are used to study the system behavior and to determine the optimal threshold for accepting or rejecting speakers when they are compared with the training references of authorized speakers constructed in the first mode [1].

Table-2: Examples of Randomly Text-Prompt Sentences generated by the System

Table-2 illustrates five examples of text-prompt sentences generated by the system, where column S_i (i = 1, 2, ..., 8) holds the i-th sample and each row forms one sentence [1].
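A minimal sketch of the prompt generator described above follows. It assumes that the sample numbers 1 to 46 follow the order of Table-1 (digits, then letters, then words) and that a sample may repeat within a sentence; neither detail is stated in the text, and `generate_prompt` is a hypothetical name.

```python
import random

DIGITS = [str(d) for d in range(10)]
LETTERS = [chr(c) for c in range(ord("A"), ord("Z") + 1)]
WORDS = ["Accept", "Reject", "Open", "Close", "Help",
         "Computer", "Yes", "No", "Copy", "Paste"]
# Assumed numbering: 1-10 digits, 11-36 letters, 37-46 words.
VOCABULARY = DIGITS + LETTERS + WORDS


def generate_prompt(length=8, rng=random):
    """Draw `length` sample numbers uniformly from 1..46 (repeats allowed, an assumption)."""
    numbers = [rng.randint(1, len(VOCABULARY)) for _ in range(length)]
    return numbers, [VOCABULARY[n - 1] for n in numbers]


numbers, prompt = generate_prompt(rng=random.Random(0))  # seeded for repeatability
print(numbers, " ".join(prompt))
```

With 213 trials of eight samples each, the open-set recordings total 213 × 8 = 1704 samples.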


3.3. Preprocessing

The basic idea behind speech preprocessing is to generate a signal whose fine structure is as close as possible to that of the original speech signal, while reducing the data and easing the subsequent analysis [11]. The preprocessing techniques adopted in this system model are applied in the following sequence:

• Preemphasis

Usually the digital speech signal, s[n], is preemphasized first by passing it through a high-pass filter. This emphasizes the high frequencies relative to the low frequencies, thereby compensating for the effect of band-limiting the input signal with a low-pass filter during recording. The most commonly used preemphasis filter is given by the following transfer function [12, 13, 10, 14]:

$$H(z) = 1 - \alpha z^{-1} \qquad (4)$$

where α, which controls the slope of the filter, typically lies in the range 0.9 ≤ α < 1.0. The filter is simply implemented as a first-order differentiator:

$$\tilde{s}[n] = s[n] - \alpha\, s[n-1] \qquad (5)$$

For the proposed system model, α is set to 0.95 [1].

• The Removal of DC offset

DC offset occurs when hardware, such as a sound card, adds a DC component to a recorded audio signal. This component produces a recorded waveform that is not centered on the baseline. Removing the DC offset therefore forces the mean of the input signal onto the baseline by adding the same constant value (the negative of the signal mean) to every sample in the sound file. An illustrative example of removing DC offset from a waveform file is shown in Fig. (2) [1].

Figure (2): Removal of DC offset from a Waveform file: (a) exhibits DC offset, (b) after the removal of DC offset
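The first two preprocessing steps, preemphasis (Eq. (5) with α = 0.95) and DC offset removal, can be sketched in Python with NumPy as follows. This is an illustrative reimplementation, not the authors' code; treating the sample before the first one as zero is an assumption.

```python
import numpy as np


def preemphasize(s, alpha=0.95):
    """Eq. (5): y[n] = s[n] - alpha * s[n-1], taking s[-1] = 0 for the first sample."""
    s = np.asarray(s, dtype=float)
    y = s.copy()
    y[1:] = s[1:] - alpha * s[:-1]
    return y


def remove_dc(s):
    """Add the same constant (minus the mean) to every sample so the mean becomes 0."""
    s = np.asarray(s, dtype=float)
    return s - s.mean()


# Order used in the paper: preemphasis first, then DC offset removal.
raw = np.array([0.5, 0.7, 0.6, 0.4])  # toy waveform with a positive offset
x = remove_dc(preemphasize(raw))
```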

• Frame-Blocking

Frame blocking is the process of splitting the input speech samples into segments of equal duration, N samples long, to carry out frame-wise analysis. The frame length is a crucial parameter for successful spectral analysis because of the trade-off between time and frequency resolution: the window should be long enough for adequate frequency resolution, but short enough to capture the local spectral properties. Typically a frame length of 10 to 30 milliseconds is used. The signal for the i-th frame is given by [15, 14, 10, 12]:


$$x_i[n] = s[iN + n], \qquad 0 \le n \le N-1 \qquad (6)$$

In this work, a frame length of N = 256 samples, i.e., a duration of 23.2 milliseconds, is used [1].

• Overlapping

Adjacent frames are usually overlapped: each frame is shifted forward along the signal by a fixed amount, typically 30 to 50% of the frame length. Overlapping avoids losing information, since each speech sound of the input sequence is then approximately centered in some frame [1, 15, 13, 16].
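Frame blocking and overlapping together amount to slicing the signal with a fixed hop. The sketch below is illustrative only. Dropping a trailing partial frame is an assumption, and so is reading "11 kHz" as 11025 Hz, which is the rate that makes 256 samples last 23.2 ms.

```python
import numpy as np


def frame_signal(s, frame_len=256, hop=128):
    """Split s into frames of frame_len samples, advancing hop samples per frame.

    hop == frame_len gives non-overlapping frames as in Eq. (6);
    hop == frame_len // 2 gives the 50% overlap used in Section IV.
    Trailing samples that do not fill a whole frame are dropped (an assumption).
    """
    s = np.asarray(s, dtype=float)
    if len(s) < frame_len:
        return np.empty((0, frame_len))
    n_frames = 1 + (len(s) - frame_len) // hop
    starts = hop * np.arange(n_frames)
    # Row i holds s[starts[i] : starts[i] + frame_len].
    idx = starts[:, None] + np.arange(frame_len)[None, :]
    return s[idx]


fs = 11025                        # assumed exact rate behind the "11 kHz" setting
print(round(1000 * 256 / fs, 1))  # 23.2 (ms per 256-sample frame)
```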

• Normalization

The speech frames are normalized to unit energy. This step is very important because the extracted frames have different intensities due to speaker loudness, the speaker's distance from the microphone, and the recording level. Normalization is done by dividing each sample by the square root of the sum of squares of all the samples in the segment:

$$S_{norm}[n] = \frac{S[n]}{\sqrt{\sum_{m=0}^{N-1} S^2[m]}}, \qquad 0 \le n \le N-1 \qquad (7)$$

where S[n] is the speech sample, N is the number of samples in the segment which is 256, and the subscript norm refers to normalization [1].
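Eq. (7) can be sketched as follows. The guard that returns an all-zero (silent) frame unchanged is an addition made here to avoid division by zero; the paper does not say how such frames are handled.

```python
import numpy as np


def normalize_frame(frame):
    """Eq. (7): divide each sample by the square root of the frame's sum of squares."""
    frame = np.asarray(frame, dtype=float)
    norm = np.sqrt(np.sum(frame ** 2))
    if norm == 0.0:  # all-zero (silent) frame: returned unchanged to avoid 0/0
        return frame.copy()
    return frame / norm


print(normalize_frame([3.0, 4.0]))  # [0.6 0.8]
```

After normalization, the squared samples of every non-silent frame sum to one.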

• Windowing

The purpose of windowing is to reduce the effect of spectral leakage (a type of distortion in spectral analysis) that results from the framing process. Windowing multiplies a speech signal x(n) by a finite-duration window w(n), which yields a set of speech samples weighted by the shape of the window [1, 15, 13, 17, 12]:

$$y(n) = x(n)\, w(n), \qquad 0 \le n \le N-1 \qquad (8)$$

where N is the size of the window or frame.

Many window functions exist; Table-3 lists those used in our experiments, and Fig. (3) illustrates their shapes [1].
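The three window types compared in the experiments can be generated with NumPy's built-in `np.hamming` and `np.kaiser`, as sketched below. The Kaiser shape parameter is not reported in the text, so `beta = 5.0` is an assumed value.

```python
import numpy as np


def make_window(kind, n=256, beta=5.0):
    """Return a length-n window. beta shapes the Kaiser window; 5.0 is an assumed value."""
    if kind == "rectangular":
        return np.ones(n)
    if kind == "hamming":
        return np.hamming(n)       # 0.54 - 0.46 cos(2*pi*k / (n - 1))
    if kind == "kaiser":
        return np.kaiser(n, beta)  # modified-Bessel-function window
    raise ValueError(f"unknown window type: {kind}")


def apply_window(frame, kind="kaiser", beta=5.0):
    """Eq. (8): y(n) = x(n) * w(n), 0 <= n <= N - 1."""
    frame = np.asarray(frame, dtype=float)
    return frame * make_window(kind, len(frame), beta)
```

Larger `beta` values narrow the Kaiser window and lower its side lobes at the cost of a wider main lobe.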

Table-3: Rectangular, Hamming and Kaiser Window-Function


Figure (3): Rectangular, Hamming and Kaiser Window-Function of 256 Samples Length [1]

3.4. Feature Extraction

Once the training or testing utterances have been acquired, the feature extractor extracts features from the speech samples. Feature extraction refers to reducing dimensionality by forming a new, smaller set of features from the original feature set of the patterns. This can be done by extracting numerical measurements from the raw input patterns [8, 1, 15]. Several linear-prediction-based features are tested: LPC, PARCOR, LAR, ASRC, LPCC, and LSF.

• Linear Predictive Coding (LPC)

Linear prediction (LP) forms an integral part of almost all modern speech coding algorithms. The fundamental idea is that a speech sample can be approximated as a linear combination of past samples. Within a signal frame, the weights used to compute the linear combination are found by minimizing the mean-squared prediction error; the resulting weights, or linear prediction coefficients (LPCs), are used to represent the particular frame [18]. The importance of this method lies in its ability to provide very accurate estimates of the speech parameters and in its relative speed of computation [20, 19].

The LPC model assumes that each sample s[n] at time n can be approximated by a linear sum of the p previous samples:

$$s[n] \approx \sum_{k=1}^{p} a[k]\, s[n-k] \qquad (9)$$

where s[n] is the present output being approximated, s[n−k] are past outputs, p is the prediction order, and {a[k]}, k = 1, ..., p, are the model parameters, called predictor coefficients, which must be determined so that the average prediction error (or residual) is as small as possible [10, 19].

The prediction error for the nth sample is the difference between the actual sample and its predicted value [1, 13, 20, 10]:

$$e[n] = s[n] - \sum_{k=1}^{p} a[k]\, s[n-k] \qquad (10)$$


Equivalently,

$$s[n] = \sum_{k=1}^{p} a[k]\, s[n-k] + e[n] \qquad (11)$$

When the prediction residual e[n] is small, the predictor of Eq. (9) approximates s[n] well. The total squared prediction error is given by

$$E = \sum_{n} e^2[n] = \sum_{n} \Big( s[n] - \sum_{k=1}^{p} a[k]\, s[n-k] \Big)^2 \qquad (12)$$

The error is minimized by setting the partial derivatives of E with respect to the model parameters {a[k]} to zero:

$$\frac{\partial E}{\partial a[k]} = 0, \qquad k = 1, \ldots, p \qquad (13)$$

By writing out Eq. (13) for k = 1, ..., p, the problem of finding the optimal predictor coefficients reduces to solving the so-called Yule-Walker (AR) equations. Depending on the choice of the error minimization interval in Eq. (12), there are two methods for solving the AR equations: the covariance method and the autocorrelation method [13, 10, 19]. The two methods do not differ greatly, but the autocorrelation method is preferred because it is computationally more efficient and always guarantees a stable filter.

In matrix form, the set of linear equations is written Ra = v, or explicitly [13, 1, 21]:

$$\begin{bmatrix} R(0) & R(1) & \cdots & R(p-1) \\ R(1) & R(0) & \cdots & R(p-2) \\ \vdots & \vdots & \ddots & \vdots \\ R(p-1) & R(p-2) & \cdots & R(0) \end{bmatrix} \begin{bmatrix} a_1 \\ a_2 \\ \vdots \\ a_p \end{bmatrix} = \begin{bmatrix} R(1) \\ R(2) \\ \vdots \\ R(p) \end{bmatrix} \qquad (14)$$

where R is a Toeplitz matrix (symmetric, with all elements along each diagonal equal), a structure that allows the Yule-Walker equations for the LP coefficients {a_k} to be solved with fast algorithms such as the Levinson-Durbin algorithm; a is the vector of LPC coefficients; and v is the autocorrelation vector. Together, R and v are completely defined by the p + 1 autocorrelation values R(0), ..., R(p). The autocorrelation sequence of s[n] is defined as [1, 21, 10, 19, 13]:

$$R[k] = \frac{1}{N} \sum_{n=0}^{N-1-k} s[n]\, s[n+k] \qquad (15)$$

where N is the number of data points in the segment.

Because of the redundancy in the Yule-Walker (AR) equations, an efficient algorithm exists for finding the solution, known as the Levinson-Durbin recursion [1, 10, 19, 20, 13]:


$$E^{(0)} = R(0) \qquad (16)$$

$$k_i = \frac{R(i) - \sum_{j=1}^{i-1} a_j^{(i-1)} R(i-j)}{E^{(i-1)}}, \qquad 1 \le i \le p \qquad (17)$$

$$a_i^{(i)} = k_i, \qquad a_j^{(i)} = a_j^{(i-1)} - k_i\, a_{i-j}^{(i-1)}, \quad 1 \le j \le i-1 \qquad (18)$$

$$E^{(i)} = \left(1 - k_i^2\right) E^{(i-1)} \qquad (19)$$

where k_i are the Partial Correlation (PARCOR) coefficients, a_j^(i) is the jth predictor (LPC) coefficient after i iterations, and E^(i) is the prediction error after i iterations.

The Levinson-Durbin procedure takes the autocorrelation sequence as its input and produces the coefficients a[k], k = 1, ..., p. Its time complexity is O(p²), as opposed to O(p³) for standard Gaussian elimination. Equations (16) to (19) are solved recursively for i = 1, 2, ..., p, where p is the order of the LPC analysis, and the final solution is given as [13, 1, 10, 20, 19]:

$$a_j = a_j^{(p)}, \qquad 1 \le j \le p \qquad (20)$$

• Partial Correlation Coefficients (PARCOR)

Several alternative representations can be derived from the LPC coefficients when the autocorrelation method is used. The Levinson-Durbin algorithm produces the quantities {k_i}, i = 1, 2, ..., p (in the range −1 ≤ k_i ≤ 1), which are known as the reflection or PARCOR coefficients [13, 1].

• Log Area Ratio (LAR)

A new parameter set, which can be derived from the PARCOR coefficients, is obtained by taking the logarithm of the area ratio, yielding the log area ratios (LARs) {g_i} defined as [19, 20, 22, 10, 1, 13]:

$$g_i = \log\left(\frac{1 - k_i}{1 + k_i}\right), \qquad 1 \le i \le p \qquad (21)$$

• Arcsin Reflection Coefficients (ASRC)

An alternative to the log area ratios is the set of arcsin reflection coefficients, computed simply by taking the inverse sine of the reflection coefficients [10, 1, 13]:

$$\mathrm{arcsin}_i = \sin^{-1}(k_i), \qquad 1 \le i \le p \qquad (22)$$
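The autocorrelation method, the Levinson-Durbin recursion, and the PARCOR-to-LAR/ASRC conversions of Eqs. (15) to (22) can be sketched in Python/NumPy as below. This is an illustrative reimplementation, not the authors' code. The random frame only stands in for a preprocessed 256-sample speech frame, and the direct `np.linalg.solve` call on the Toeplitz system of Eq. (14) serves only as a check on the recursion.

```python
import numpy as np


def autocorrelation(s, p):
    """Eq. (15): R[k] = (1/N) * sum_{n=0}^{N-1-k} s[n] s[n+k], for k = 0..p."""
    s = np.asarray(s, dtype=float)
    n = len(s)
    return np.array([np.dot(s[: n - k], s[k:]) / n for k in range(p + 1)])


def levinson_durbin(r, p):
    """Eqs. (16)-(20); returns (LPC a_1..a_p, PARCOR k_1..k_p, final error E^(p)).

    Sign convention of Eq. (9): s[n] ~ sum_k a_k s[n-k].
    """
    a = np.zeros(p + 1)                # index 0 unused so a[j] matches a_j
    k = np.zeros(p + 1)
    err = r[0]                         # Eq. (16)
    for i in range(1, p + 1):
        # R(i) - sum_{j=1}^{i-1} a_j^(i-1) R(i-j); r[i-1:0:-1] is R(i-1), ..., R(1)
        acc = r[i] - np.dot(a[1:i], r[i - 1:0:-1])
        k[i] = acc / err               # Eq. (17)
        prev = a.copy()
        a[i] = k[i]                    # Eq. (18), j = i
        for j in range(1, i):          # Eq. (18), 1 <= j <= i-1
            a[j] = prev[j] - k[i] * prev[i - j]
        err = (1.0 - k[i] ** 2) * err  # Eq. (19)
    return a[1:], k[1:], err           # Eq. (20)


def parcor_to_lar(k):
    """Eq. (21): g_i = log((1 - k_i) / (1 + k_i)); requires |k_i| < 1."""
    k = np.asarray(k, dtype=float)
    return np.log((1.0 - k) / (1.0 + k))


def parcor_to_asrc(k):
    """Eq. (22): inverse sine of each reflection coefficient."""
    return np.arcsin(np.asarray(k, dtype=float))


# Cross-check against a direct solve of the Toeplitz system R a = v, Eq. (14).
p = 10
frame = np.random.default_rng(0).standard_normal(256)  # stand-in for a real frame
r = autocorrelation(frame, p)
a, k, err = levinson_durbin(r, p)
lag = np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
a_direct = np.linalg.solve(r[lag], r[1:p + 1])
print(np.allclose(a, a_direct))  # True
```

Because the biased autocorrelation estimate of Eq. (15) yields a positive-definite Toeplitz matrix, every |k_i| stays below one, so the LAR values in Eq. (21) remain finite.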


• Linear Predictive Cepstral Coefficients (LPCC)

An important fact is that the cepstrum can also be derived directly from the LPC parameter set. The relationship between the cepstral coefficients c_n and the prediction coefficients a_k is given by the following equations [1, 9, 13]:

$$c_1 = a_1, \qquad c_n = \sum_{k=1}^{n-1} \left(1 - \frac{k}{n}\right) a_k\, c_{n-k} + a_n, \quad 1 < n \le p \qquad (23)$$

where p is the prediction order. The cepstrum derived in this way is usually said to represent a "smoothed" version of the spectrum. As in LPC analysis, increasing the number of coefficients yields more detail [10, 4].

Because the low-order cepstral coefficients are sensitive to the overall spectral slope and the high-order cepstral coefficients are sensitive to noise (and other forms of noise-like variability), it has become standard practice to weight the cepstral coefficients by a tapered window. This minimizes these sensitivities and improves the performance of the coefficients [19, 14, 13, 1]. To achieve robustness for large values of n, a more general weighting of the following form is used:

$$\hat{c}(n) = w(n)\, c(n), \qquad 1 \le n \le p \qquad (24)$$

where

$$w(n) = 1 + \frac{p}{2} \sin\left(\frac{\pi n}{p}\right), \qquad 1 \le n \le p \qquad (25)$$

This weighting function truncates the computation and de-emphasizes c_n around n = 1 and around n = p [19].

• Line Spectral Frequencies (LSFs)

Another representation of the LP parameters of the all-pole spectrum is the set of line spectral frequencies (LSFs), or line spectrum pairs (LSPs) [23, 21]. LSFs were proposed for the compression of speech and other audio signals and are the most widely used representation of LPC parameters for quantization and coding, but they have also been applied with good results to speaker recognition [23, 24, 10, 1]. The LSFs are the angles of the roots of the following polynomials:

$$P(z) = B(z) + z^{-(p+1)} B(z^{-1}) \qquad (26)$$

$$Q(z) = B(z) - z^{-(p+1)} B(z^{-1}) \qquad (27)$$

where B(z) = 1/H(z) = 1 − A(z) is the inverse LPC filter. The roots of P(z) and Q(z) are interleaved and occur in complex-conjugate pairs, so only p/2 roots are retained for each of P(z) and Q(z) (p roots in total). Also, the root magnitudes are known to be unity, so only their angles (frequencies) are needed.

Each root of B(z) corresponds to one root in each of P(z) and Q(z). Therefore, if the frequencies of this pair of roots are close, the original root of B(z) likely represents a formant; otherwise, it represents a wide-bandwidth feature of the spectrum. These correspondences provide an intuitive interpretation of the LSP coefficients [13].
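The LPCC recursion with its sine lifter (Eqs. (23) to (25)) and the LSF computation (Eqs. (26) and (27)) can be sketched in Python/NumPy as follows. Finding the LSFs with `np.roots` is a simple numerical choice made here for clarity; the paper does not say how it locates the roots. Names such as `lpc_to_lsf` are hypothetical.

```python
import numpy as np


def lpc_to_lpcc(a):
    """Eq. (23): c_1 = a_1, c_n = a_n + sum_{k=1}^{n-1} (1 - k/n) a_k c_{n-k}, n <= p."""
    a = np.concatenate(([0.0], np.asarray(a, dtype=float)))  # a[k] = a_k, 1-based
    p = len(a) - 1
    c = np.zeros(p + 1)
    for n in range(1, p + 1):
        c[n] = a[n] + sum((1.0 - k / n) * a[k] * c[n - k] for k in range(1, n))
    return c[1:]


def lifter(c):
    """Eqs. (24)-(25): c_hat(n) = w(n) c(n), w(n) = 1 + (p/2) sin(pi n / p)."""
    c = np.asarray(c, dtype=float)
    p = len(c)
    n = np.arange(1, p + 1)
    return c * (1.0 + 0.5 * p * np.sin(np.pi * n / p))


def lpc_to_lsf(a, eps=1e-9):
    """Eqs. (26)-(27): root angles of P(z) and Q(z) in (0, pi), in ascending order."""
    a = np.asarray(a, dtype=float)
    b = np.concatenate(([1.0], -a, [0.0]))  # B(z) = 1 - sum_k a_k z^-k, padded to p+2 terms
    p_poly = b + b[::-1]                    # P(z) coefficients in powers of z^-1
    q_poly = b - b[::-1]                    # Q(z) coefficients in powers of z^-1
    # Multiplying by z^(p+1) turns each into an ordinary polynomial whose
    # coefficients are already in the descending-power order np.roots expects.
    roots = np.concatenate((np.roots(p_poly), np.roots(q_poly)))
    w = np.angle(roots)
    # Keep one root per conjugate pair and drop the trivial roots at z = +1 and z = -1.
    return np.sort(w[(w > eps) & (w < np.pi - eps)])


# Single-pole check: with a = [0.5, 0, 0, 0] the cepstrum is c_n = 0.5**n / n.
print(lpc_to_lpcc([0.5, 0.0, 0.0, 0.0]))
```

For a stable predictor, the returned LSFs alternate between roots of P(z) and Q(z), which is the interleaving property described above.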


3.5. Pattern Matching

The resulting test template, which is an N-dimensional feature vector, is compared against the stored reference templates to find the closest match, that is, to determine which predefined class the unknown pattern matches. For the speaker identification task, the unknown speaker is compared with all references in the database. This comparison can be done with the Euclidean (E.D.) or city-block (C.D.) distance measure [1, 25, 26]:

$$\mathrm{E.D.} = \sqrt{\sum_{i=1}^{N} (a_i - b_i)^2} \qquad (28)$$

$$\mathrm{C.D.} = \sum_{i=1}^{N} \lvert a_i - b_i \rvert \qquad (29)$$

where A and B are two vectors, A = [a_1 a_2 … a_N] and B = [b_1 b_2 … b_N].

3.6. Decision Rule

The decision rule selects the reference pattern that best matches the unknown one. The primary methods for discrimination either measure the difference between two feature vectors or measure their similarity. In our approach, a minimum distance classifier, which measures the difference between two patterns, is used for speaker recognition. This classifier assigns the unknown pattern to the nearest predefined pattern: the larger the distance between two vectors, the greater their difference. For verification, the identity of the unknown speaker is accepted when the distance to the best-matching reference in the database is lower than a certain threshold [1, 25, 26].

IV. EXPERIMENTAL RESULTS

Many experiments and test conditions were carried out to measure the performance of the proposed system under different criteria: preemphasis, frame overlapping, LPC order, window type, cepstral weighting and text-prompt speaker verification.

The identification rate is defined as the ratio of correctly identified speakers to the total number of test samples, which corresponds to a nearest-neighbor decision rule:

$$\text{Identification Rate} = \frac{\text{No. of Correctly Identified Speakers}}{\text{Total No. of Tested Samples}} \times 100\% \qquad (30)$$

4.1. Identification Rate for LP-Based Coefficients

A fair comparison can be made if all the LP-based coefficient methods (LPC, PARCOR, LAR, ASRC, LPCC and LSF) are measured under identical conditions: order of the LP-based coefficients P = 15, DC offset removed, no overlap between successive frames, normalization, and a rectangular window. The classification results are shown in Table-4 and the equivalent chart in Fig. (4).
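The matching and decision rules of Sections 3.5 and 3.6, together with the identification rate of Eq. (30), can be sketched as below. The sketch assumes that each utterance has already been reduced to one fixed-length feature vector; how frame-level features are combined into a template is not described in this section. The `(label, vector)` layout of `references` is hypothetical.

```python
import numpy as np


def euclidean(a, b):
    """Eq. (28)."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.sqrt(np.sum(d ** 2)))


def city_block(a, b):
    """Eq. (29)."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(np.sum(np.abs(d)))


def identify(test_vec, references, distance=city_block):
    """Minimum-distance rule: return (label, distance) of the nearest reference.

    references: list of (speaker_label, feature_vector) pairs (hypothetical layout).
    """
    scored = [(distance(test_vec, vec), label) for label, vec in references]
    best_dist, best_label = min(scored, key=lambda item: item[0])
    return best_label, best_dist


def identification_rate(predicted, true):
    """Eq. (30): percentage of test samples assigned to the correct speaker."""
    correct = sum(p == t for p, t in zip(predicted, true))
    return 100.0 * correct / len(true)


refs = [("spk1", [0.0, 0.0]), ("spk2", [10.0, 10.0])]
print(identify([1.0, 2.0], refs))  # ('spk1', 3.0)
```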


Table-4: Identification Rate (%) for the LP based Coefficients

| Feature | Euclidean Distance (E.D.) | City-block Distance (C.D.) |
|---|---|---|
| LPC | 84.173 | 84.217 |
| PARCOR | 95.173 | 95.260 |
| LAR | 94.000 | 94.652 |
| ASRC | 94.826 | 95.608 |
| LPCC | 97.087 | 97.521 |
| LSF | 95.695 | 95.782 |

Figure (4): Identification Rate for the LP based Coefficients

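It is clear from Table-4 and its corresponding chart, Fig. (4), that all tested LPC-derived features outperform the raw LPC coefficients, which give an identification rate of only about 84%. LPCC gives the highest rates (97.087% with E.D. and 97.521% with C.D.), and the city-block distance gives a slightly higher rate than the Euclidean distance for every feature.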

4.2. Preemphasis of Speech Signals

The effect of preemphasizing the digital speech signals before the other preprocessing steps must also be examined. This effect is demonstrated by the classification results of Fig. (5), obtained under the following conditions: preemphasis of speech signals, P = 15, DC offset removed, no overlap between successive frames, normalization, rectangular window, and the city-block distance measure.

Figure (5): Effect of Preemphasis Speech Samples on Identification Rates

Figure (5) shows that applying the preemphasis step to the speech signal improves the identification rates of all the LPC-based systems, which then lie in the range of 93% to 98%.


4.3. LPC Predictor Order (P)

The order of the linear prediction analysis (P) is a compromise between spectral accuracy and computational complexity (time/memory). Building on the previous tests, further improvements in identification rates, shown in Table-5 and Fig. (6), can be achieved by studying different values of the LPC predictor order (P = 15, 30, 45) with successive frames overlapped by 50% of the frame size.

Table-5: Identification Rates (%) for different LPC Predictor Order (P = 15, 30, 45)

| Feature | P = 15 | P = 30 | P = 45 |
|---|---|---|---|
| LPC | 92.695 | 96.347 | 97.565 |
| PARCOR | 97.652 | 99.347 | 99.869 |
| LAR | 95.478 | 98.782 | 99.347 |
| ASRC | 97.434 | 99.173 | 99.695 |
| LPCC | 99.130 | 99.956 | 99.956 |
| LSF | 98.130 | 99.695 | 99.826 |

Figure (6): Effect of LPC Predictor Order (P =15, 30, 45) on Identification Rates

The results of Table-5 and Fig. (6) show that increasing the predictor order P, together with overlapping successive frames, raises the identification rate of every feature set; only LPCC saturates, at 99.956% for both P = 30 and P = 45. The predictor order P is therefore set to 45 for the subsequent experiments.

4.4. Windowing Function

After determining the appropriate LPC predictor order, the system behavior for different window types is studied. Table-6 reports the results under the following conditions: rectangular, Hamming and Kaiser windows, successive frames overlapped by 50% of the frame size, and cepstral weighting of the LPCC.

This experiment shows that the speaker identification rates can be improved further by adopting other window types such as the Kaiser window. The Kaiser window gives the best or joint-best accuracy for LPC, LAR, LPCC and LSF, and reaches 100% with LPCC, whereas the rectangular window remains slightly better for PARCOR and ASRC.



Table-6: Identification Rates (%) for LP-Based Coefficients with Different Window Types

| Feature | Rectangular | Hamming | Kaiser |
|---|---|---|---|
| LPC | 97.5652 | 94.9130 | 97.6087 |
| PARCOR | 99.8696 | 99.4783 | 99.7391 |
| LAR | 99.3478 | 99.4783 | 99.6087 |
| ASRC | 99.6957 | 99.4783 | 99.6522 |
| LPCC | 99.9565 | 99.9565 | 100 |
| LSF | 99.8261 | 99.9130 | 99.9130 |

4.5. Text-Prompt Speaker Verification

A further test is needed to verify a speaker's identity from a text prompt generated randomly by the system. The previous experiments clearly show that LPCC gives the best results among the LP-based coefficients, so it is selected as the feature extraction method for the speaker verification mode. The advantage of text prompting is that a would-be intruder cannot know the phrase in advance, because the prompt text changes on every trial. In addition, the system restricts the recording time by forcing the user to utter the pass phrase within a short interval (up to 15 seconds). This makes it harder for an intruder to use a device or software that synthesizes the user's voice. The system then automatically splits the uttered sentence back into its constituent samples. Pattern matching is performed only against the stored features of the same samples in the database, and acceptance is decided according to the system security threshold.
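The prompting and matching steps described above can be sketched as follows. The vocabulary size (46) and phrase length (8) come from the paper. Everything else is an assumption for illustration:

- each prompt uses distinct samples;
- each speaker has one stored reference feature vector (e.g., LPCC) per sample;
- the trial score is the mean city-block distance over the prompted samples;
- the best-matching authorized speaker is accepted when that score does not exceed θ.

The names `make_prompt`, `city_block` and `verify` are hypothetical.

```python
import random


def make_prompt(vocabulary_size=46, phrase_len=8, rng=None):
    """Pick phrase_len distinct sample indices at random for one trial."""
    rng = rng or random.Random()
    return rng.sample(range(vocabulary_size), phrase_len)


def city_block(u, v):
    """L1 (city-block) distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(u, v))


def verify(prompt, test_features, references, theta):
    """Open-set decision for one trial (hypothetical scoring rule).

    test_features: {sample_id: feature vector extracted from the utterance}
    references:    {speaker: {sample_id: reference feature vector}}
    Each authorized speaker is scored by the mean city-block distance over
    the prompted samples only; the best speaker is accepted if score <= theta.
    Returns (accepted speaker or None, best score).
    """
    best_speaker, best_score = None, float("inf")
    for speaker, refs in references.items():
        score = sum(city_block(test_features[s], refs[s])
                    for s in prompt) / len(prompt)
        if score < best_score:
            best_speaker, best_score = speaker, score
    accepted = best_score <= theta
    return (best_speaker if accepted else None), best_score
```

Because only the prompted samples are compared, a recording of a different phrase by the same speaker cannot be replayed successfully: its samples would not line up with the references the prompt selects.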

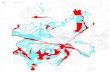

The verification test uses a total of 213 trials (each a pass phrase of 8 samples) from 23 persons, both authorized and unauthorized. Its aim is to find the optimum threshold at the Crossover Error Rate (CER), the point where the False Rejection Rate (FRR) and False Acceptance Rate (FAR) curves meet. Table-7 lists the verification results for the different threshold values tested.
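The FAR and FRR columns of Table-7 can be produced from per-trial scores with a simple threshold sweep. The sketch below assumes each trial yields one distance score (lower means a closer match) and a label saying whether the speaker is authorized. A trial is accepted when its score is at most θ. In Table-7 the three columns of every row sum to 100%, so the sketch likewise computes the rates over all trials rather than separately over genuine and impostor trials. `error_rates` and `sweep` are hypothetical names.

```python
def error_rates(trials, theta):
    """trials: list of (score, is_authorized); lower score = better match.

    Returns (successful, far, frr) as percentages of ALL trials, so the three
    values add up to 100, as in each row of Table-7.
    """
    n = len(trials)
    false_accept = sum(1 for s, ok in trials if not ok and s <= theta)
    false_reject = sum(1 for s, ok in trials if ok and s > theta)
    far = 100.0 * false_accept / n
    frr = 100.0 * false_reject / n
    return 100.0 - far - frr, far, frr


def sweep(trials, thresholds):
    """One (theta, successful, far, frr) row per threshold, like Table-7."""
    return [(theta, *error_rates(trials, theta)) for theta in thresholds]
```

The more common convention divides false acceptances by the number of impostor trials and false rejections by the number of genuine trials. Switching to it changes only the two denominators.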

Table-7: Text-Prompt Speaker Verification Rates (%) for Different Thresholds using City-Block Distance

| Threshold (θ) | Successful Decision | FAR | FRR |
|---|---|---|---|
| 15.05 | 65.2582 | 0.0000 | 34.7418 |
| 15.40 | 71.8310 | 0.0000 | 28.1690 |
| 15.75 | 83.5681 | 0.0000 | 16.4319 |
| 16.10 | 92.9578 | 0.0000 | 7.0422 |
| 16.45 | 97.1831 | 0.0000 | 2.8169 |
| 16.80 | 99.5305 | 0.0000 | 0.4695 |
| 17.15 | 99.5305 | 0.0000 | 0.4695 |
| 17.50 | 97.1831 | 2.8169 | 0.0000 |
| 17.85 | 96.2442 | 3.7558 | 0.0000 |
| 18.20 | 95.3052 | 4.6948 | 0.0000 |
| 18.55 | 95.3052 | 4.6948 | 0.0000 |

The successful decision in Table-7 is the percentage of trials in which a registered person is accepted or a non-registered person is rejected. FAR and FRR are likewise expressed as percentages of all 213 trials, so the three columns of each row sum to 100%. Fig. (7) plots FAR and FRR against the threshold. The CER point lies just above θ = 17.15 (between 17.15 and 17.50), so θ = 17.15 is taken as the most suitable security threshold. At this threshold the successful decision rate is 99.53% (FAR = 0%, FRR = 0.47%).

Figure (7): FAR and FRR Performance Curves for Different Threshold Levels using City-Block Distance
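The reading of Fig. (7) can be checked by linearly interpolating the FAR and FRR columns of Table-7 to estimate where the two curves cross. Linear interpolation between tabulated thresholds is an assumption, since the measured curves are step functions over 213 trials. `crossover` is a hypothetical helper.

```python
# (threshold θ, FAR %, FRR %) copied from Table-7
TABLE_7 = [
    (15.05, 0.0000, 34.7418), (15.40, 0.0000, 28.1690),
    (15.75, 0.0000, 16.4319), (16.10, 0.0000, 7.0422),
    (16.45, 0.0000, 2.8169), (16.80, 0.0000, 0.4695),
    (17.15, 0.0000, 0.4695), (17.50, 2.8169, 0.0000),
    (17.85, 3.7558, 0.0000), (18.20, 4.6948, 0.0000),
    (18.55, 4.6948, 0.0000),
]


def crossover(rows):
    """Estimate where the FAR and FRR curves meet.

    Scans consecutive rows for a sign change of FAR - FRR and interpolates
    linearly between them. Returns (theta, rate) or None if they never meet.
    """
    for (t0, far0, frr0), (t1, far1, frr1) in zip(rows, rows[1:]):
        d0, d1 = far0 - frr0, far1 - frr1
        if d0 == 0:
            return t0, far0
        if d0 * d1 < 0:
            frac = d0 / (d0 - d1)  # fraction of the interval where the gap closes
            return t0 + frac * (t1 - t0), far0 + frac * (far1 - far0)
    return None
```

On the Table-7 values, `crossover(TABLE_7)` returns a threshold of about 17.20 with a crossover rate of about 0.40%. That is just above θ = 17.15, in line with the threshold of approximately 17.15 quoted in the text.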

V. CONCLUSION

A speaker recognition system for 6304 speech samples relying on LPC-derived features has been presented, and acceptable results have been obtained. In closed-set speaker identification, all tested LPC-derived features outperform the raw LPC coefficients, with identification rates of 84% to 97%. Two changes raise the identification rates of the LPC-based systems to the range of 97% to 99%: applying the preprocessing steps to the speech signal (preemphasis, DC-offset removal, frame blocking with 50% overlap between successive frames, normalization and windowing) and increasing the predictor order (P). The identification tests show that LPCC gives the best results among the LPC-based coefficients. Weighting the cepstral coefficients improves its accuracy further, reaching 100% with the Kaiser window.

The open-set speaker verification mode was also evaluated on 213 trials (sentences prompted randomly by the system) from 23 persons (1704 samples). The proposed system model achieves verification rates above 99% (99.53% at the selected threshold), which is considered quite suitable.

VI. REFERENCES

[1] Mustafa D. Al-Hassani, “Identification Techniques using Speech Signals and Fingerprints”, Ph.D. Thesis, Department of Computer Science, Al-Nahrain University, Baghdad, Iraq, September 2006.

[2] Tiwalade O. Majekodunmi, Francis E. Idachaba, “A Review of the Fingerprint, Speaker Recognition, Face Recognition and Iris Recognition Based Biometric Identification Technologies”, Proceedings of the World Congress on Engineering (WCE), Vol. II, London, U.K., 2011.

[3] M. Eriksson, “Biometrics Fingerprint based identity verification”, M. Sc. Thesis, Department of Computer Science, UMEÅ University, August 2001.


[4] Yuan Yujin, Zhao Peihua, Zhou Qun, “Research of Speaker Recognition Based on Combination of LPCC and MFCC”, Electronic Information Engineering, Training and Experimental Center, Handan College, China, 2010.

[5] Anil K. Jain and Arun Ross, “Introduction to Biometrics”, Springer Science+Business Media, LLC, USA, 2008.

[6] S. Gunnam, “Fingerprint Recognition and Analysis System”, A mini-thesis Presented to Dr. David P. Beach, Dept. of Electronics and Computer Technology, Indiana State University, Terre Haute, In Partial Fulfillment of the Requirements for ECT 680, April 2004.

[7] E. Hjelms, “Biometric Systems: A Face Recognition Approach”, Department of Informatics, University of Oslo, Oslo, Norway, 2000.

[8] Valentin Andrei, Constantin Paleologu, Corneliu Burileanu, “Implementation of a Real-Time Text Dependent Speaker Identification System”, University “Politehnica” of Bucharest, Romania, 2011.

[9] E. Karpov, “Real-Time Speaker Identification”, M. Sc. Thesis, Department of Computer Science, University of Joensuu, Finland, January 2003.

[10] T. Kinnunen, “Spectral Features for Automatic Text-Independent Speaker Recognition”, Ph. D. Thesis, Department of Computer Science, University of Joensuu, Finland, December 2003.

[11] T. Chen, “The Past, Present, and Future of Speech Processing”, IEEE Signal Processing Magazine, No.5, May 1998.

[12] Biswajit Kar, Sandeep Bhatia & P. K. Dutta, “Audio-Visual Biometric Based Speaker Identification”, International Conference on Computational Intelligence and Multimedia Applications, India, 2007.

[13] Antonio M. Peinado, José C. Segura, “Speech Recognition Over Digital Channels: Robustness and Standards”, John Wiley & Sons Ltd, University of Granada, Spain, 2006.

[14] B. R. Wildermoth, “Text-Independent Speaker Recognition using Source Based Features”, M. Sc. Thesis, Griffith University, Australia, January 2001.

[15] Ch. Srinivasa Kumar, P. Mallikarjuna Rao, “Design of An Automatic Speaker Recognition System Using MFCC, Vector Quantization And LBG Algorithm”, International Journal on Computer Science and Engineering (IJCSE), Vol. 3, No. 8, August 2011.

[16] Ciira wa Maina and John MacLaren Walsh, “Log Spectra Enhancement Using Speaker Dependent Priors for Speaker Verification”, Drexel University, Department of Electrical and Computer Engineering, Philadelphia, PA 19104, 2011.

[17] Ning WANG, P. C. CHING, and Tan LEE, “Robust Speaker Verification Using Phase Information of Speech”, Department of Electronic Engineering, The Chinese University of Hong Kong, 2010.

[18] Wai C. Chu, “Speech Coding Algorithms: Foundation and Evolution of Standardized Coders”, John Wiley & Sons, Inc., California, USA, 2003.

[19] L. Rabiner, B.-H. Juang, “Fundamentals of Speech Recognition”, Prentice-Hall, Inc., Englewood Cliffs, New Jersey, 1993.

[20] Yasir. A.-M. Taleb, “Statistical and Wavelet Approaches for Speaker Identification”, M. Sc. Thesis, Department of Computer Engineering, Al-Nahrain University, Iraq, June 2003.

[21] N. Batri, “Robust Spectral Parameter Coding in Speech Processing”, M. Sc. Thesis, Department of Electrical Engineering, McGill University, Montreal, Canada, May 1998.


[22] J. P. Campbell, “Speaker Recognition: A Tutorial”, Proceedings of the IEEE, Vol. 85, No. 9, 1997.

[23] A. K. Khandani and F. Lahouti, “Intra-frame and Inter-frame Coding of Speech: LSF Parameters Using a Trellis Structure”, Department of Electrical and Computer Engineering, University of Waterloo, Ontario, Canada, June 2000.

[24] J. Rothweiler, “A Root Finding Algorithm for Line Spectral Frequencies”, Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP-99), March 15-19, U.S.A., 1999.

[25] S. E. Umbaugh, “Computer Vision and Image Processing”, Prentice-Hall, Inc., U.S.A., 1998.

[26] R. C. Gonzalez, Richard E. Woods, “Digital Image Processing”, Second Edition, Prentice-Hall Inc., New Jersey, U.S.A., 2002.

[27] Lokesh S. Khedekar and Dr. A. S. Alvi, “Advanced Smart Credential Cum Unique Identification and Recognition System (ASCUIRS)”, International Journal of Computer Engineering & Technology (IJCET), Volume 4, Issue 1, 2013, pp. 97-104, ISSN Print: 0976-6367, ISSN Online: 0976-6375.

[28] Pallavi P. Ingale and Dr. S. L. Nalbalwar, “Novel Approach to Text Independent Speaker Identification”, International Journal of Electronics and Communication Engineering & Technology (IJECET), Volume 3, Issue 2, 2012, pp. 87-93, ISSN Print: 0976-6464, ISSN Online: 0976-6472.

[29] Vijay M. Mane, Gaurav V. Chalkikar and Milind E. Rane, “Multiscale Iris Recognition System”, International Journal of Electronics and Communication Engineering & Technology (IJECET), Volume 3, Issue 1, 2012, pp. 317-324, ISSN Print: 0976-6464, ISSN Online: 0976-6472.

[30] Dr. Mustafa Dhiaa Al-Hassani, Dr. Abdulkareem A. Kadhim and Dr. Venus W. Samawi, “Fingerprint Identification Technique Based on Wavelet-Bands Selection Features (WBSF)”, International Journal of Computer Engineering & Technology (IJCET), Volume 4, Issue 3, 2013, pp. 308-323, ISSN Print: 0976-6367, ISSN Online: 0976-6375.

[31] Viplav Gautam, Saurabh Sharma, Swapnil Gautam and Gaurav Sharma, “Identification and Verification of Speaker using Mel Frequency Cepstral Coefficient”, International Journal of Electronics and Communication Engineering & Technology (IJECET), Volume 3, Issue 2, 2012, pp. 413-423, ISSN Print: 0976-6464, ISSN Online: 0976-6472.
