1

Pattern Recognition: Statistical and Neural

Lonnie C. Ludeman

Lecture 24

Nov 2, 2005

Nanjing University of Science & Technology

2

Lecture 24 Topics

1. Review and Motivation for Link Structure

2. Present the Functional Link Artificial Neural Network

3. Simple Example: design using ANN and FLANN

4. Performance for Neural Network Designs

5. Radial Basis Function Neural Networks

6. Problems, Advantages, Disadvantages, and Promise of Artificial Neural Network Design

3

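Generalized Linear Discriminant Functions

[Figure: block diagram. The input x feeds M functions g1(x), g2(x), ..., gj(x), ..., gM(x); their outputs are weighted by w1, w2, ..., wj, ..., wM and summed to give g(x).]

Review 1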

5

Example: Decision rule using one nonlinear discriminant function g(x)

Given the following g(x) and decision rule

Illustrate the decision regions R1 and R2, where we classify as C1 and C2 respectively, for the decision rule above

Review 3

7

Find a generalized linear discriminant function that separates the classes

Solution:

$d(x) = w_1 f_1(x) + w_2 f_2(x) + w_3 f_3(x) + w_4 f_4(x) + w_5 f_5(x) + w_6 f_6(x) = w^T f(x)$

which is linear in the f space

Review 5
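A minimal Python sketch of a discriminant of the form d(x) = w^T f(x) for a two-dimensional pattern. The transcript does not list f1 through f6, so the quadratic basis (x1^2, x1 x2, x2^2, x1, x2, 1), the sign convention for the decision, and the weight values below are all assumptions chosen only for illustration.

```python
def quadratic_basis(x1, x2):
    """Assumed basis f(x): the six monomials of degree <= 2 in (x1, x2)."""
    return [x1 * x1, x1 * x2, x2 * x2, x1, x2, 1.0]


def discriminant(w, x1, x2):
    """d(x) = w^T f(x): linear in the f space, nonlinear in pattern space."""
    return sum(wi * fi for wi, fi in zip(w, quadratic_basis(x1, x2)))


def classify(w, x1, x2):
    """Decide C1 when d(x) > 0, otherwise C2 (assumed sign convention)."""
    return "C1" if discriminant(w, x1, x2) > 0 else "C2"


# Hypothetical weights giving d(x) = 1 - x1^2 - x2^2 (unit-circle boundary).
w_example = [-1.0, 0.0, -1.0, 0.0, 0.0, 1.0]

print(classify(w_example, 0.0, 0.0))  # a point inside the circle
print(classify(w_example, 2.0, 0.0))  # a point outside the circle
```

The weights enter linearly, so any linear training procedure can be applied to the transformed patterns f(x) even though the boundary d(x) = 0 is curved in the original (x1, x2) space.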

9

Decision Boundary in original pattern space

[Figure: samples from C1 and from C2 plotted in the (x1, x2) plane, together with the boundary d(x) = 0.]

Review 7

10

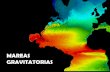

Potential Function Approach – Motivated by electromagnetic theory

Sample space: + from C1, - from C2

Review 8

12

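Given samples x from two classes C1 and C2, with S1 the set of samples from C1 and S2 the set of samples from C2

Define Total Potential Function

$K(x) = \sum_{x_k \in S_1} K(x, x_k) - \sum_{x_k \in S_2} K(x, x_k)$

where K(x, xk) is the potential function

Decision Boundary: K(x) = 0, with C1 on one side and C2 on the other

Review 10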
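A minimal Python sketch of the total potential function K(x): the sum of potentials contributed by the C1 samples minus the sum contributed by the C2 samples, with the decision taken from the sign of K(x). The slides do not fix the potential K(x, xk) here, so the Gaussian form, the parameter alpha, and the sample points are assumptions for illustration.

```python
import math


def potential(x, xk, alpha=1.0):
    """Assumed potential K(x, xk) = exp(-alpha * ||x - xk||^2)."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, xk))
    return math.exp(-alpha * d2)


def total_potential(x, s1, s2, alpha=1.0):
    """K(x): sum over the S1 samples minus sum over the S2 samples."""
    positive = sum(potential(x, xk, alpha) for xk in s1)
    negative = sum(potential(x, xk, alpha) for xk in s2)
    return positive - negative


def classify(x, s1, s2, alpha=1.0):
    """Decide C1 where K(x) > 0 and C2 otherwise; K(x) = 0 is the boundary."""
    return "C1" if total_potential(x, s1, s2, alpha) > 0 else "C2"


# Hypothetical training samples for the two classes.
s1 = [(0.0, 0.0), (0.0, 1.0)]
s2 = [(3.0, 0.0), (3.0, 1.0)]

print(classify((0.5, 0.5), s1, s2))
print(classify((2.8, 0.5), s1, s2))
```

With these symmetric samples the boundary K(x) = 0 passes through the midpoint (1.5, 0.5).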

13

Algorithm converged in 1.75 passes through the data to give final discriminant function as

Review 11

17

Principal Component Functional Link

fk(x), k = 1 to N, are chosen as the eigenvectors of the sample covariance matrix
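A rough Python sketch of one way to read the principal component functional link for two-dimensional patterns. It assumes that each link output is the projection of the mean-removed pattern onto an eigenvector, fk(x) = ek^T (x - mean); the transcript does not spell this out. The closed-form 2x2 eigenvector formula and the sample points are also choices made only for this illustration.

```python
import math


def sample_covariance(samples):
    """Return the sample mean and the entries (sxx, sxy, syy) of the covariance."""
    n = len(samples)
    mx = sum(x for x, _ in samples) / n
    my = sum(y for _, y in samples) / n
    sxx = sum((x - mx) ** 2 for x, _ in samples) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in samples) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in samples) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def eigenvectors_2x2(sxx, sxy, syy):
    """Unit eigenvectors of a symmetric 2x2 matrix; e1 has the larger eigenvalue."""
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    e1 = (math.cos(theta), math.sin(theta))
    e2 = (-math.sin(theta), math.cos(theta))
    return e1, e2


def functional_links(x, mean, e1, e2):
    """Assumed links: projections of (x - mean) onto the eigenvectors."""
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    return (e1[0] * dx + e1[1] * dy, e2[0] * dx + e2[1] * dy)


# Hypothetical samples lying along the line x2 = x1.
samples = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
mean, (sxx, sxy, syy) = sample_covariance(samples)
e1, e2 = eigenvectors_2x2(sxx, sxy, syy)
print(functional_links((3.0, 3.0), mean, e1, e2))
```

For these samples all of the variation lies along e1, so the second link output is essentially zero for every training pattern.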

18

Example: Comparison of Neural Net and functional link neural net

Given two pattern classes C1 and C2 with the following four patterns and their desired outputs

19

(a) Design an Artificial Neural Network to classify the patterns given.

(b) Design a Functional Link Artificial Neural Network to classify the patterns given.

(c) Compare the Neural Net and Functional Link Neural Net designs

21

After training using the training set and the backpropagation algorithm the design becomes

Values determined by neural net

23

A neural net was trained using the functional link output patterns as new pattern samples

The resulting weights and structure are

24

(c) Comparison of Artificial Neural Net (ANN) and Functional Link Artificial Neural Net (FLANN) Designs

FLANN has a simpler structure than the ANN, with only one neural element and the link.

Fewer iterations and computations in the training algorithm for FLANN.

FLANN design may be more sensitive to errors in patterns.
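The structural point in the comparison can be illustrated with a small Python sketch. The four patterns from the example are not reproduced in this transcript, so the code assumes the XOR patterns with a single product link x1*x2, and it trains one threshold element with the perceptron rule rather than backpropagation. These are illustrative assumptions, not the lecture's actual design.

```python
def step(s):
    """Threshold activation of the single neural element."""
    return 1 if s > 0 else 0


def train_neuron(features, targets, max_epochs=1000, lr=1.0):
    """Perceptron rule on one threshold element; the last weight is the bias.

    Returns (weights, converged), where converged means an error-free pass.
    """
    w = [0.0] * (len(features[0]) + 1)
    for _ in range(max_epochs):
        errors = 0
        for f, t in zip(features, targets):
            xa = list(f) + [1.0]  # augment with constant input for the bias
            y = step(sum(wi * xi for wi, xi in zip(w, xa)))
            if y != t:
                errors += 1
                w = [wi + lr * (t - y) * xi for wi, xi in zip(w, xa)]
        if errors == 0:
            return w, True
    return w, False


def predict(w, f):
    xa = list(f) + [1.0]
    return step(sum(wi * xi for wi, xi in zip(w, xa)))


# Assumed patterns (XOR) and desired outputs.
patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 0]

# Functional link: append the product x1 * x2 to each pattern.
linked = [(x1, x2, x1 * x2) for x1, x2 in patterns]

w_plain, ok_plain = train_neuron(patterns, targets)
w_flann, ok_flann = train_neuron(linked, targets)

print("single element without link converged:", ok_plain)
print("single element with link converged:", ok_flann)
print([predict(w_flann, f) for f in linked])
```

Under these assumptions the link makes the patterns linearly separable, so one element suffices, whereas the same element on the raw patterns never reaches an error-free pass.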

26

Test Design on Testing Set

Classify each member of the testing set using the neural network design.

Determine Performance for Design using Training Set

Classify each member of the training set using the neural network design.

27

Could use

(a) Performance Measure ETOT

(b) The Confusion Matrix

(c) Probability of Error

(d) Bayes Risk
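As a small illustration of measure (b), the following Python sketch builds a confusion matrix from true and decided class labels. The row/column convention (rows are true classes, columns are decided classes) and the label lists are assumptions made for the example.

```python
def confusion_matrix(true_labels, decided_labels, classes):
    """Count decisions: entry [i][j] is the number of class-i patterns decided as class j."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, d in zip(true_labels, decided_labels):
        matrix[index[t]][index[d]] += 1
    return matrix


# Hypothetical testing-set results for a two-class design.
classes = ["C1", "C2"]
true_labels = ["C1", "C1", "C1", "C2", "C2", "C2"]
decided = ["C1", "C1", "C2", "C2", "C2", "C1"]

cm = confusion_matrix(true_labels, decided, classes)
for name, row in zip(classes, cm):
    print(name, row)
```

The same function can be run on the training set and on the testing set, so the two matrices can be compared side by side.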

30

(c) Probability of Error: Example

Estimates of Probabilities of being Correct

Estimate of Total Probability of Error
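The example's numbers are not reproduced in this transcript. As a hedged sketch, the Python below shows how such estimates are commonly obtained from confusion-matrix counts. The counts are hypothetical, and the total error estimate assumes the class priors are estimated by the class proportions in the data set.

```python
def correct_probabilities(cm):
    """Estimate P(correct | Ci) as the diagonal count over the row total."""
    return [row[i] / sum(row) for i, row in enumerate(cm)]


def total_error_probability(cm):
    """Estimate P(error) as the fraction of all patterns that are misclassified."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return 1.0 - correct / total


# Hypothetical counts: rows are true classes C1, C2; columns are decisions.
cm = [[45, 5],
      [10, 40]]

p_correct = correct_probabilities(cm)
p_error = total_error_probability(cm)
print("P(correct | C1), P(correct | C2):", p_correct)
print("P(error):", p_error)
```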

34

Design Using RBF ANN

Let F(x1, x2, ..., xn) represent the function we wish to approximate.

For pattern classification, F(x) represents the class assignment or desired output (target value) for each pattern vector x that is a member of the training set.

Define the performance measure E as the error between F(x) and the network output over the training set.

We wish to minimize E by selecting M, the remaining network parameters, and the prototypes z1, z2, ..., zM.

35

Finding the Best Approximation using RBF ANN

Usually broken into two parts:

(1st) Find the number M of prototypes and the prototypes { zj : j = 1, 2, ..., M } by using a clustering algorithm (presented in Chapter 6) on the training samples.

(2nd) With M and { zj : j = 1, 2, ..., M } fixed, find the remaining parameters that minimize E.

Notes: You can use any minimization procedure you wish. Training does not use the backpropagation algorithm.
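The two-part procedure can be sketched in Python as follows. Everything specific here is an assumption for illustration: M is fixed at 4 rather than found by the clustering step, a basic k-means stands in for the Chapter 6 clustering algorithm, the basis functions are Gaussians with a fixed spread sigma, E is taken to be the sum of squared errors, and the output weights are fitted with a simple LMS-style gradient update.

```python
import math


def dist2(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(samples, init, iterations=20):
    """Part 1: find the prototypes z_j by basic k-means clustering."""
    protos = [list(p) for p in init]
    for _ in range(iterations):
        groups = [[] for _ in protos]
        for x in samples:
            j = min(range(len(protos)), key=lambda k: dist2(x, protos[k]))
            groups[j].append(x)
        for j, g in enumerate(groups):
            if g:  # keep the old prototype if its cluster is empty
                protos[j] = [sum(c) / len(g) for c in zip(*g)]
    return protos


def hidden(x, protos, sigma):
    """Assumed Gaussian radial basis outputs, one per prototype."""
    return [math.exp(-dist2(x, z) / (2.0 * sigma ** 2)) for z in protos]


def output(x, protos, w, sigma):
    return sum(wj * hj for wj, hj in zip(w, hidden(x, protos, sigma)))


def fit_weights(samples, targets, protos, sigma, lr=0.1, epochs=2000):
    """Part 2: with the prototypes fixed, reduce squared error over the weights."""
    w = [0.0] * len(protos)
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            h = hidden(x, protos, sigma)
            err = t - sum(wj * hj for wj, hj in zip(w, h))
            w = [wj + lr * err * hj for wj, hj in zip(w, h)]
    return w


# Hypothetical training set: target 1 for C1 and 0 for C2.
samples = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0), (0.9, 1.0),
           (0.0, 1.0), (0.1, 1.0), (1.0, 0.0), (0.9, 0.0)]
targets = [1, 1, 1, 1, 0, 0, 0, 0]
sigma = 0.3

# Arbitrary deterministic initialization: every other sample (M = 4).
protos = kmeans(samples, init=samples[::2])
w = fit_weights(samples, targets, protos, sigma)

E = sum((t - output(x, protos, w, sigma)) ** 2 for x, t in zip(samples, targets))
decisions = [1 if output(x, protos, w, sigma) > 0.5 else 0 for x in samples]
print("E =", E)
print(decisions)
```

Because the prototypes are fixed before the second part, the output is linear in the weights. This is why no backpropagation is needed: any linear minimization method, including a direct least-squares solve, could replace the gradient loop used here.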

36

Problems Using Neural Network Designs

Failure to converge: max iterations too small, lockup occurs, limit cycles

Good performance on training set – poor performance on testing set: training set not representative of variation, too strict a tolerance (“grandmothering”)

Selection of insufficient structure

37

Advantages of Neural Network Designs

Can obtain a design for very complicated problems.

Parallel structure using identical elements allows hardware or software implementation

Structure of Neural Network Design similar for all problems.

38

Other problems that can be solved using Neural Network Designs

System Identification

Functional Approximation

Control Systems

Any problem that can be placed in the format of a clearly defined desired output for different given input vectors.

40

Famous Quotation

“Neural network designs are the second best way to solve all problems”

The promise is that a neural network can be used to solve all problems, with the caveat that there is always a better way to solve a specific problem.

44

So what is the best way to solve a given problem?

A design that uses and understands the structure of the data!

45

Summary Lecture 24

1. Reviewed and Motivated Link Structure

2. Presented the Functional Link Artificial Neural Network

3. Presented Simple Example with designs using ANN and FLANN

4. Described Performance Measures for Neural Network Designs

5. Presented Radial Basis Function Neural Networks

6. Discussed Problems, Advantages, Disadvantages, and the Promise of Artificial Neural Network Design
