CE 459 Statistics Assistant Prof. Muhammet Vefa AKPINAR Expected Normal VAR1 Upper Boundaries (x <= boundary) No of obs 0 2 4 6 8 10 12 14 16 50 55 60 65 70 75 80 85 90 95 100

Welcome message from author

This document is posted to help you gain knowledge. Please leave a comment to let me know what you think about it! Share it to your friends and learn new things together.

Transcript

CE 459 Statistics

Assistant Prof. Muhammet Vefa AKPINAR

ExpectedNormal

VAR1

Upper Boundaries (x <= boundary)

No

of

ob

s

0

2

4

6

8

10

12

14

16

50 55 60 65 70 75 80 85 90 95 100

08.10.2011 2

Lecture Notes

What is Statistics

Frequency Distribution

Descriptive Statistics

Normal Probability Distribution

Sampling Distribution of the Mean

Simple Linear Regression & Correlation

Multiple Regression & Correlation

08.10.2011 3

INTRODUCTION

Criticism

There is a general perception that statistical knowledge is all-too-frequently intentionally misused, by finding ways to interpret the data that are favorable to the presenter.

(A famous quote, variously attributed, but thought to be from Benjamin Disraeli is: "There are three types of lies - lies, damn lies, and statistics.") Indeed, the well-known book How to Lie with Statistics by Darrell Huff discusses many cases of deceptive uses of statistics, focusing on misleading graphs. By choosing (or rejecting, or modifying) a certain sample, results can be manipulated; throwing out outliers is one means of doing so. This may be the result of outright fraud or of subtle and unintentional bias on the part of the researcher.

WHAT IS STATISTICS?

Definition

Statistics is a group of methods used to collect, analyze, present, and interpret data and to make decisions.

08.10.2011 5

What is Statistics ?

American Heritage Dictionary defines statistics as: "The mathematics of the collection, organization, and interpretation of numerical data, especially the analysis of population characteristics by inference from sampling."

The Merriam-Webster‟s Collegiate Dictionary definition is: "A branch of mathematics dealing with the collection, analysis, interpretation, and presentation of masses of numerical data."

The word statistics is also the plural of statistic (singular), which refers to the result of applying a statistical algorithm to a set of data, as in employment statistics, accident statistics, etc.

08.10.2011 6

In applying statistics to a scientific, industrial, or societal problem, one begins with a process or population to be studied. This might be a population of people in a country, of crystal grains in a rock, or of goods manufactured by a particular factory during a given period.

For practical reasons, rather than compiling data about an entire population, one usually instead studies a chosen subset of the population, called a sample.

Data are collected about the sample in an observational or experimental setting. The data are then subjected to statistical analysis, which serves two related purposes: description and inference.

08.10.2011 7

Descriptive statistics and Inferential statistics.

Statistical data analysis can be subdivided into Descriptive statistics and Inferential statistics.

Descriptive statistics is concerned with exploring, visualising, and summarizing data but without fitting the data to any models. This kind of analysis is used to explore the data in the initial stages of data analysis. Since no models are involved, it can not be used to test hypotheses or to make testable predictions. Nevertheless, it is a very important part of analysis that can reveal many interesting features in the data.

Descriptive statistics can be used to summarize the data, either numerically or graphically, to describe the sample. Basic examples of numerical descriptors include the mean and standard deviation. Graphical summarizations include various kinds of charts and graphs.

08.10.2011 8

Inferential statistics is the next stage in data analysis and involves the identification of a suitable model. The data is then fit to the model to obtain an optimal estimation of the model's parameters. The model then undergoes validation by testing either predictions or hypotheses of the model. Models based on a unique sample of data can be used to infer generalities about features of the whole population.

Inferential statistics is used to model patterns in the data, accounting for randomness and drawing inferences about the larger population. These inferences may take the form of answers to yes/no questions (hypothesis testing), estimates of numerical characteristics (estimation), forecasting of future observations, descriptions of association (correlation), or modeling of relationships (regression).

Other modeling techniques include ANOVA, time series, and data mining.

Population and sample.

Population

Sample

A portion of the population selected for study is referred to as a sample.

A population consists of all elements – individuals, items, or objects – whose characteristics are being studied. The population that is being studied is also called the target population.

Measures of Central Tendency

Mean

Sum of all measurements divided by the number of measurements.

Median:

A number such that at most half of the measurements are below it and at most half of the measurements are above it.

Mode:

The most frequent measurement in the data.

The central tendency of a dataset, i.e. the centre of a frequency distribution, is most commonly measured by the 3 Ms:

= arithmetic mean =average

Mean The Sample Mean ( ) is the arithmetic average of a data set.

It is used to estimate the population mean, ( .

Calculated by taking the sum of the observed values (yi) divided by the number of observations (n).

System $K

1 22.2

2 17.3

3 11.8

4 9.6

5 8.8

6 7.6

7 6.8

8 3.2

9 1.7

10 1.6

n

yyy

n

yn

n

i

i 211y

K06.9$10

6.13.172.22y

yi

yy - yi

Residual

= 9.06

y

Historical Transmogrifier

Average Unit Production Costs

08.10.2011 12

The Mode

The mode, symbolized by Mo, is the most frequently occurring score value. If the scores for a given sample distribution are:

32 32 35 36 37 38 38 39 39 39 40 40 42 45

then the mode would be 39 because a score of 39 occurs 3 times, more than any other score.

08.10.2011 13

A distribution may have more than one mode if the two most frequently occurring scores occur the same number of times. For example, if the earlier score distribution were modified as follows:

32 32 32 36 37 38 38 39 39 39 40 40 42 45

then there would be two modes, 32 and 39. Such distributions are called bimodal. The frequency polygon of a bimodal distribution is presented below.

Example of Mode

Measurements

x

3

5

1

1

4

7

3

8

3

Mode: 3

Notice that it is possible for a data not to have any mode.

Mode

The Mode is the value of the data set that occurs most frequently

Example:

1, 2, 4, 5, 5, 6, 8

Here the Mode is 5, since 5 occurred twice and no other value occurred more than once

Data sets can have more than one mode, while the mean and median have one unique value

Data sets can also have NO mode, for example:

1, 3, 5, 6, 7, 8, 9

Here, no value occurs more frequently than any other, therefore no mode exists

You could also argue that this data set contains 7 modes since each value occurs as frequently as every other

Example of Mode

Measurements

x

3

5

5

1

7

2

6

7

0

4

In this case the data have tow modes:

5 and 7

Both measurements are repeated twice

08.10.2011 17

Median

Computation of Median When there is an odd number of numbers, the median is simply the middle number. For example, the median of 2, 4, and 7 is 4. When there is an even number of numbers, the median is the mean of the two middle numbers. Thus, the median of the numbers 2, 4, 7, 12 is (4+7)/2 = 5.5.

Example of Median

Median: (4+5)/2 = 4.5

Notice that only the two central values are used in the computation.

The median is not sensible to extreme values

Measurements Measurements

Ranked

x x

3 0

5 1

5 2

1 3

7 4

2 5

6 5

7 6

0 7

4 7

40 40

median rim diameter (cm)

unit 1 unit 2

9.7 9.0

11.5 11.2

11.6 11.3

12.1 11.7

12.4 12.2

12.6 12.5

12.9 <-- 13.2 13.2

13.1 13.8

13.5 14.0

13.6 15.5

14.8 15.6

16.3 16.2

26.9 16.4

Median

The Median is the middle observation of an ordered (from low to high) data set

Examples:

1, 2, 4, 5, 5, 6, 8

Here, the middle observation is 5, so the median is 5

1, 3, 4, 4, 5, 7, 8, 8

Here, there is no “middle” observation so we take the average of the two observations at the center

5.42

54Median

Mode Median

Mean

Mode = Median = Mean

Dispersion Statistics

The Mean, Median and Mode by themselves are not sufficient descriptors of a data set

Example:

Data Set 1: 48, 49, 50, 51, 52

Data Set 2: 5, 15, 50, 80, 100

Note that the Mean and Median for both data sets are identical, but the data sets are glaringly different!

The difference is in the dispersion of the data points

Dispersion Statistics we will discuss are:

Range

Variance

Standard Deviation

Range

The Range is simply the difference between the smallest and largest observation in a data set

Example

Data Set 1: 48, 49, 50, 51, 52

Data Set 2: 5, 15, 50, 80, 100

The Range of data set 1 is 52 - 48 = 4

The Range of data set 2 is 100 - 5 = 95

So, while both data sets have the same mean and median, the dispersion of the data, as depicted by the range, is much smaller in data set 1

08.10.2011 24

deviation score

A deviation score is a measure of by how much each point in a frequency distribution lies above or below the mean for the entire dataset:

where: X = raw score X= the mean

Note that if you add all the deviation scores for a dataset together, you automatically get the mean for that dataset.

Variance

The Variance, s2, represents the amount of variability of the data relative to their mean

As shown below, the variance is the “average” of the squared deviations of the observations about their mean

1

)( 2

2

n

yys

i

The Variance, s2, is the sample variance, and is used to estimate the actual population variance, 2

N

yi

2

2)(

Standard Deviation

The Variance is not a “common sense” statistic because it describes the data in terms of squared units

The Standard Deviation, s, is simply the square root of the variance

1

)( 2

n

yys

i

The Standard Deviation, s, is the sample standard deviation, and is used to estimate the actual population standard deviation,

N

yi

2)(

Standard Deviation

The sample standard deviation, s, is measured in the same units as the data from which the standard deviation is being calculated

)($4.449

8.399

110

7.559.677.172

1

)(

2

2

2

K

n

yys

i

System FY97$K

1 22.2 13.1 172.7

2 17.3 8.2 67.9

3 11.8 2.7 7.5

4 9.6 0.5 0.3

5 8.8 -0.3 0.1

6 7.6 -1.5 2.1

7 6.8 -2.3 5.1

8 3.2 -5.9 34.3

9 1.7 -7.4 54.2

10 1.6 -7.5 55.7

Average 9.06

yyi

2)y(yi

)($67.6

)($4.44 22

K

Kss

This number, $6.67K, represents the average estimating error for predicting subsequent observations

In other words: On average, when estimating the cost of transmogrifiers that belongs to the same population as the ten systems above, we would expect to be off by $6.67K

08.10.2011 28

Variance and the closely-related standard deviation

The variance and the closely-related standard deviation are measures of how spread out a distribution is. In other words, they are measures of variability.

In order to define the amount of deviation of a dataset from the mean, calculate the mean of all the deviation scores, i.e. the variance.

The variance is computed as the average squared deviation of each number from its mean.

For example, for the numbers 1, 2, and 3, the mean is 2 and the variance is: .

08.10.2011 29

variance in a population is:

variance in a sample is:

where; μ is the mean and N is the number of scores.

08.10.2011 30

The standard deviation is the square root of the variance.

08.10.2011 31

Variance and Standar Deviation

Example of Mean

Measurements Deviation

x x - mean

3 -1

5 1

5 1

1 -3

7 3

2 -2

6 2

7 3

0 -4

4 0

40 0

MEAN = 40/10 = 4

Notice that the sum of the “deviations” is 0.

Notice that every single observation intervenes in the computation of the mean.

Example of Variance

Measurements Deviations Square of

deviations

x x - mean

3 -1 1

5 1 1

5 1 1

1 -3 9

7 3 9

2 -2 4

6 2 4

7 3 9

0 -4 16

4 0 0

40 0 54

Variance = 54/9 = 6

It is a measure of “spread”.

Notice that the larger the deviations (positive or negative) the larger the variance

The standard deviation

It is defines as the square root of the variance

In the previous example

Variance = 6

Standard deviation = Square root of the variance = Square root of 6 = 2.45

08.10.2011 35

Observed Vehicle velocity

velocity km/saat

67 73 81 72 76 75 85 77 68 84

76 93 73 79 88 73 60 93 71 59

74 62 95 78 63 72 66 78 82 75

96 70 89 61 75 95 66 79 83 71

76 65 71 75 65 80 73 57 88 78

08.10.2011 36

Mean, Median, Standard Deviation

Valid

Numbers Range Mean Median Minimum Maximum Variance Standard.Dev.

50 39 75,62 75 57 96 96,362 9,816458

08.10.2011 37

Frequency Table

Number class class frequency relative freq. Cumulative freq.

Relative cumulative

freq.

of Class (intervals) intervals midpoints % %

1 50,000 < x <= 55,000 52,5 0 0 0 0

2 55,000 < x <= 60,000 57,5 3 6 3 6

3 60,000 < x <= 65,000 62,5 5 10 8 16

4 65,000 < x <= 70,000 67,5 5 10 13 26

5 70,000 < x <= 75,000 72,5 14 28 27 54

6 75,000 < x <= 80,000 77,5 10 20 37 74

7 80,000 < x <= 85,000 82,5 5 10 42 84

8 85,000 < x <= 90,000 87,5 3 6 45 90

9 90,000 < x <= 95,000 92,5 4 8 49 98

10 95,000 < x <= 100,00 97,5 1 2 50 100

08.10.2011 38

Frequency Table

A cumulative frequency distribution is a plot of the number of observations falling in or below an interval. The graph shown here is a cumulative frequency distribution of the scores on a statistics test.

A frequency table is constructed by dividing the scores into intervals and counting the number of scores in each interval. The actual number of scores as well as the percentage of scores in each interval are displayed. Cumulative frequencies are also usually displayed.

The X-axis shows various intervals of vehicle speed.

08.10.2011 39

Selecting the Interval Size

In order to find a starting interval size the first step is to find the range of the data by subtracting the smallest score from the largest. In the case of the example data, the range was 96-57 = 39. The range is then divided by the number of desired intervals, with a suggested starting number of intervals being ten (10). In the example, the result would be 50/10 = 5. The nearest odd integer value is used as the starting point for the selection of the interval size.

08.10.2011 40

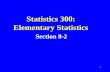

Histogram

A histogram is constructed from a frequency table. The intervals are shown on

the X-axis and the number of scores in each interval is represented by the

height of a rectangle located above the interval. A histogram of the vehicle

speed from the dataset is shown below. The shapes of histograms will vary

depending on the choice of the size of the intervals.

ExpectedNormal

VAR1

Upper Boundaries (x <= boundary)

No

of

ob

s

0

2

4

6

8

10

12

14

16

50 55 60 65 70 75 80 85 90 95 100

08.10.2011 41

There are many different-shaped frequency distributions:

08.10.2011 42

A frequency polygon is a graphical display of a frequency table. The intervals are shown on the X-axis and the number of scores in each interval is represented by the height of a point located above the middle of the interval. The points are connected so that together with the X-axis they form a polygon.

08.10.2011 43

Spread, Dispersion, Variability

A variable's spread is the degree to which scores on the variable differ from each other. If every score on the variable were about equal, the variable would have very little spread. There are many measures of spread. The distributions shown below have the same mean but differ in spread: The distribution on the bottom is more spread out. Variability and dispersion are synonyms for spread.

08.10.2011 44

Skew

Further Notes

When the Mean is greater than the Median the data distribution is skewed to the Right.

When the Median is greater than the Mean the data distribution is skewed to the Left.

When Mean and Median are very close to each other the data distribution is approximately symmetric.

08.10.2011 46

The distribution shown below has a positive skew. The mean is larger than the median.

test was very difficult and almost everyone in the class did very poorly on it,

the resulting distribution would most likely be positively skewed.

The Effect of Skew on the Mean and Median

08.10.2011 47

The distribution shown below has a negative skew. The mean is smaller than the median.

08.10.2011 48

Probability

Likelihood or chance of occurrence. The probability of an event is the theoretical relative frequency of the event in a model of the population.

08.10.2011 49

Normal Distribution or Normal Curve

Normal distribution is probably one of the most important and

widely used continuous distribution. It is known as a normal random variable, and its probability distribution is called a normal distribution.

The normal distribution is a theoretical function commonly used in inferential statistics as an approximation to sampling distributions. In general, the normal distribution provides a good model for a random variable.

08.10.2011 50

In a normal distribution:

68% of samples fall between ±1 SD

95% of samples fall between ±2 SD (actually + 1.96 SD)

99.7% of samples fall between ±3 SD

08.10.2011 51

The normal distribution function

The normal distribution function is determined by the following formula:

Where;

: mean : standard deviation e: Euler's constant (2.71...) : constant Pi (3.14...)

08.10.2011 52

Characteristics of the Normal Distribution:

It is bell shaped and is symmetrical about its mean. It is asymptotic to the axis, i.e., it extends indefinitely in either direction from the mean.

They are symmetric with scores more concentrated in the middle than in the tails.

It is a family of curves, i.e., every unique pair of mean and standard deviation defines a different normal distribution. Thus, the normal distribution is completely described by two parameters: mean and standard deviation.

There is a strong tendency for the variable to take a central value. It is unimodal, i.e., values mound up only in the center of the curve.

The frequency of deviations falls off rapidly as the deviations become larger.

08.10.2011 53

Total area under the curve sums to 1, the area of the distribution on each side of the mean is 0.5.

The Area Under the Curve Between any Two Scores is a PROBABILITY The probability that a random variable will have a value between any

two points is equal to the area under the curve between those points. Positive and negative deviations from this central value are equally likely

08.10.2011 54

Examples of normal distributions

Notice that they differ in how spread out they are. The area under each curve is the same. The height of a normal distribution can be specified mathematically in terms

of two parameters: the mean (μ) and the standard deviation (σ). The two

parameters, and , each change the shape of the distribution in a different

manner.

08.10.2011 55

Changes in without changes in

Changes in , without changes in , result in moving the distribution to the right or left, depending upon whether the new value of was larger or smaller than the previous value, but does not change the shape of the distribution.

08.10.2011 56

Changes in the value of

Changes in the value of , change the shape of the distribution without affecting the midpoint, because d affects the spread or the dispersion of scores. The larger the value of , the more dispersed the scores; the smaller the value, the less dispersed. The distribution below demonstrates the effect of increasing the value of :

08.10.2011 57

THE STANDARD NORMAL CURVE

The standard normal curve is a member of the family of normal curves with = 0.0 and = 1.0.

Note that the integral calculus is used to find the area under the normal distribution curve. However, this can be avoided by transforming all normal distribution to fit the standard normal distribution. This conversion is done by rescalling the normal distribution axis from its true units (time, weight, dollars, and...) to a standard measure called Z score or Z value.

08.10.2011 58

Standard Scores (z Scores)

A Z score is the number of standard deviations that a value, X, is away from the mean.

Standard scores are therefore useful for comparing datapoints in different distributions.

If the value of X is greater than the mean, the Z score is positive; if the value of X is less than the mean, the Z score is negative. The Z score or equation is as follows:

where z is the z-score for the value of X

08.10.2011 59

Table of the Standard Normal (z) Distribution

z 0.00 0.01 0.02 0.03 0.04 0.05 0.06 0.07 0.08 0.09

0.0 0.0000 0.0040 0.0080 0.0120 0.0160 0.0190 0.0239 0.0279 0.0319 0.0359

0.1 0.0398 0.0438 0.0478 0.0517 0.0557 0.0596 0.0636 0.0675 0.0714 0.0753

0.2 0.0793 0.0832 0.0871 0.0910 0.0948 0.0987 0.1026 0.1064 0.1103 0.1141

0.3 0.1179 0.1217 0.1255 0.1293 0.1331 0.1368 0.1406 0.1443 0.1480 0.1517

0.4 0.1554 0.1591 0.1628 0.1664 0.1700 0.1736 0.1772 0.1808 0.1844 0.1879

0.5 0.1915 0.1950 0.1985 0.2019 0.2054 0.2088 0.2123 0.2157 0.2190 0.2224

0.6 0.2257 0.2291 0.2324 0.2357 0.2389 0.2422 0.2454 0.2486 0.2517 0.2549

0.7 0.2580 0.2611 0.2642 0.2673 0.2704 0.2734 0.2764 0.2794 0.2823 0.2852

0.8 0.2881 0.2910 0.2939 0.2969 0.2995 0.3023 0.3051 0.3078 0.3106 0.3133

0.9 0.3159 0.3186 0.3212 0.3238 0.3264 0.3289 0.3315 0.3340 0.3365 0.3389

1.0 0.3413 0.3438 0.3461 0.3485 0.3508 0.3513 0.3554 0.3577 0.3529 0.3621

1.1 0.3643 0.3665 0.3686 0.3708 0.3729 0.3749 0.3770 0.3790 0.3810 0.3830

1.2 0.3849 0.3869 0.3888 0.3907 0.3925 0.3944 0.3962 0.3980 0.3997 0.4015

1.3 0.4032 0.4049 0.4066 0.4082 0.4099 0.4115 0.4131 0.4147 0.4162 0.4177

1.4 0.4192 0.4207 0.4222 0.4236 0.4251 0.4265 0.4279 0.4292 0.4306 0.4319

1.5 0.4332 0.4345 0.4357 0.4370 0.4382 0.4394 0.4406 0.4418 0.4429 0.4441

1.6 0.4452 0.4463 0.4474 0.4484 0.4495 0.4505 0.4515 0.4525 0.4535 0.4545

1.7 0.4554 0.4564 0.4573 0.4582 0.4591 0.4599 0.4608 0.4616 0.4625 0.4633

1.8 0.4641 0.4649 0.4656 0.4664 0.4671 0.4678 0.4686 0.4693 0.4699 0.4706

1.9 0.4713 0.4719 0.4726 0.4732 0.4738 0.4744 0.4750 0.4756 0.4761 0.4767

2.0 0.4772 0.4778 0.4783 0.4788 0.4793 0.4798 0.4803 0.4808 0.4812 0.4817

2.1 0.4821 0.4826 0.4830 0.4834 0.4838 0.4842 0.4846 0.4850 0.4854 0.4857

2.2 0.4861 0.4864 0.4868 0.4871 0.4875 0.4878 0.4881 0.4884 0.4887 0.4890

08.10.2011 60

Three areas on a standard normal curve

08.10.2011 61

Z-1.5

Total - infinity to Z-

1.5

Z

Total Z to +

infinity

Z

Total - infinity to

Z

+Z

- Z

Area from -Z to +Z

+Z

- Z

-infinity to -Z

plus

+Z to +

infinity

+Z

- Z

-infinity to -Z

plus

+Z to +

infinity

Z Area Under Curve

from negative infinity

to Z

Area Under Curve

from Z to positive

infinity

Area Under Curve

from -Z to +Z

Area Under Curve

(negative infinity to -

Z) PLUS

(+Z to positive infinity)

Convert

(negative infinity to -Z

) PLUS

(+Z to positive infinity)

into PPM

Area Under Curve

negative infinity to Z-

1.5

0,000 0,50000000000000 0,50000000000000 0,00000000000000 1,00000000000000 1.000.000,00000000 0,06680720126886

0,100 0,53982783727702 0,46017216272298 0,07965567455403 0,92034432544597 920.344,32544597 0,08075665923377

0,200 0,57925970943909 0,42074029056091 0,15851941887818 0,84148058112182 841.480,58112182 0,09680048458561

0,300 0,61791142218894 0,38208857781106 0,23582284437788 0,76417715562212 764.177,15562212 0,11506967022170

0,400 0,65542174161031 0,34457825838969 0,31084348322063 0,68915651677937 689.156,51677937 0,13566606094638

0,500 0,69146246127400 0,30853753872600 0,38292492254801 0,61707507745200 617.075,07745200 0,15865525393145

0,600 0,72574688224992 0,27425311775008 0,45149376449983 0,54850623550017 548.506,23550017 0,18406012534675

0,700 0,75803634777692 0,24196365222308 0,51607269555384 0,48392730444617 483.927,30444617 0,21185539858339

0,800 0,78814460141659 0,21185539858341 0,57628920283319 0,42371079716681 423.710,79716681 0,24196365222306

0,900 0,81593987465323 0,18406012534677 0,63187974930647 0,36812025069354 368.120,25069354 0,27425311775006

1,000 0,84134474606854 0,15865525393146 0,68268949213707 0,31731050786293 317.310,50786293 0,30853753872598

1,100 0,86433393905361 0,13566606094639 0,72866787810722 0,27133212189278 271.332,12189278 0,34457825838967

1,200 0,88493032977829 0,11506967022171 0,76986065955657 0,23013934044343 230.139,34044343 0,38208857781104

1,300 0,90319951541439 0,09680048458562 0,80639903082877 0,19360096917123 193.600,96917123 0,42074029056089

1,400 0,91924334076622 0,08075665923378 0,83848668153245 0,16151331846755 161.513,31846755 0,46017216272296

08.10.2011 62

The area between Z-scores of -1.00 and +1.00. It is .68 or 68%.

The area between Z-scores of -2.00 and +2.00 and is .95 or 95%.

08.10.2011 63

Exercise 1

An industrial sewing machine uses ball bearings that are targeted to have a diameter of 0.75 inch. The specification limits under which the ball bearing can operate are 0.74 inch (lower) and 0.76 inch (upper). Past experience has indicated that the actual diameter of the ball bearings is approximately normally distributed with a mean of 0.753 inch and a standard deviation of 0.004 inch.

For this problem, note that "Target" = .75, and "Actual mean" = .753.

08.10.2011 64

What is the probability that a ball bearing will be between the target and the actual mean?

P(-0.75 < Z < 0) = .2734

08.10.2011 65

What is the probability that a ball bearing will be between the lower specification limit and the target?

P(-3.25 < Z < -0.75) = .49942 - .2734 = .22602

08.10.2011 66

What is the probability that a ball bearing will be above the upper specification limit?

P(Z > 1.75) = .5 - .4599 = .0401

08.10.2011 67

What is the probability that a ball bearing will be below the lower specification limit?

P (Z < -3.25) = .5 - .49942 = .00058

08.10.2011 68

Above which value in diameter will 93% of the ball bearings be?

The value asked for here will be the 7th percentile, since 93% of the ball bearings will have diameters above that. So we will look up .4300 in the Z-table in a "backwards“ manner. The closest area to this is .4306, which corresponds to a Z-value of 1.48.

-0.00592 = X - 0.753 X = 0.74708

So 0.74708 in. is the value that 93% of the diameters are above.

08.10.2011 69

Exercise 2

Graduate Management Aptitude Test (GMAT) scores are widely used by graduate schools of business as an entrance requirement. Suppose that in one particular year, the mean score for the GMAT was 476, with a standard deviation of 107. Assuming that the GMAT scores are normally distributed, answer the following questions:

08.10.2011 70

Question 1

What is the probability that a randomly selected score from this GMAT falls between 476 and 650 (476 <= x <= 650) the following figure shows a graphic representation of this problem.

Answer: Z = (650 - 476)/107 = 1.62. The Z value of 1.62 indicates that the GMAT score of 650 is 1.62 standard

deviation above the mean. The standard normal table gives the probability of value falling between 650 and the mean. The whole number and tenths place portion of the Z score appear in the first column of the table. Across the top of the table are the values of the hundredths place portion of the Z score. Thus the answer is that 0.4474 or 44.74% of the scores on the GMAT fall between a score of 650 and 476.

08.10.2011 71

Question 2. What is the probability of receiving a score greater than 750 on a GMAT test that

has a mean of 476 and a standard deviation of 107 i.e., P(X >= 750) = ?. Answer This problem is asking for determining the area of the upper tail of the distribution. The Z score is: Z = ( 750 - 476)/107 = 2.56- Table- P(Z=2.56) = 0.4948.

This is the probability of a GMAT with a score between 476 and 750. 0.5 - 0.4948 = 0.0052 or 0.52%. Note that P(X >= 750) is the same as P(X >750), because, in continuous

distribution, the area under an exact number such as X=750 is zero.

08.10.2011 72

What is the probability of receiving a score of 540 or less on a GMAT test that has a mean of 476 and a standard deviation of 107 i.e., P(X <= 540)= ?

we are asked to determine the area under the curve for all values less than or equal to 540.

z score (540-476)/107=0.6 -Table- P (z= 0.2257) which is the probability of getting a score between the mean 476 and 540.

The answer to this problem is: 0.5 + 0.2257 = 0.73 or 73%. Graphic representation of this problem.

08.10.2011 73

Question 4 What is the probability of receiving a score between 440 and 330 on a GMAT test that

has a mean of 476 and a standard deviation of 107. i.e., P(330 < 440)="?."

The two values fall on the same side of the mean.

The Z scores are: Z1 = (330 - 476)/107 = -1.36, and Z2 = (440 - 476)/107 = -0.34. The probability associated with Z = -1.36 is 0.4131,

The probability associated with Z = -0.34 is 0.1331.

Thee answer to this problem is: 0.4131 - 0.1331 = 0.28 or 28%.

08.10.2011 74

Standard Error (SE)

Any statistic can have a standard error. Each sampling distribution has a standard error.

Standard errors are important because they reflect how much sampling fluctuation a statistic will show, i.e. how good an estimate of the population the sample statistic is

How good an estimate is the mean of a population? One way to determine this is to repeat the experiment many times and to determine the mean of the means. However, this is tedious and frequently impossible.

SE refers to the variability of the sample statistic, a measure of spread for random variables

The inferential statistics involved in the construction of confidence intervals (CI) and significance testing are based on standard errors.

08.10.2011 75

Standard Error of the Mean, SEM, σM

The standard deviation of the sampling distribution of the mean is called the standard error of the mean.

The size of the standard error of the mean is inversely proportional to the square root of the sample size.

Not:

08.10.2011 76

The standard error of any statistic depends on the sample size - in general, the larger the sample size the smaller the standard error.

Note that the spread of the sampling distribution of the mean decreases as the sample size increases.

Notice that the mean of the distribution is not affected by sample size.

08.10.2011 77

Comparing the Averages of Two Independent Samples

Is there "grade inflation" in KTU? How does the average GPA of KTU students today compare with, say 10, years ago?

Suppose a random sample of 100 student records from 10 years ago yields a sample average GPA of 2.90 with a standard deviation of .40.

A random sample of 100 current students today yields a sample average of 2.98 with a standard deviation of .45.

The difference between the two sample means is 2.98-2.90 = .08. Is this proof that GPA's are higher today than 10 years ago?

08.10.2011 78

First we need to account for the fact that 2.98 and 2.90 are not the true averages, but are computed from random samples. Therefore, .08 is not the true difference, but simply an estimate of the true difference.

Can this estimate miss by much? Fortunately, statistics has a way of measuring the expected size of the ``miss'' (or error of estimation) . For our example, it is .06 (we show how to calculate this later). Therefore, we can state the bottom line of the study as follows: "The average GPA of KTU students today is .08 higher than 10 years ago, give or take .06 or so."

08.10.2011 79

Overview of Confidence Intervals

Once the population is specified, the next step is to take a random sample from it. In this example, let's say that a sample of 10 students were drawn and each student's memory tested. The way to estimate the mean of all high school students would be to compute the mean of the 10 students in the sample. Indeed, the sample mean is an unbiased estimate of μ, the population mean.

Clearly, if you already knew the population mean, there would be no need for a confidence interval.

08.10.2011 80

We are interested in the mean weight of 10-year old kids living in Turkey. Since it would have been impractical to weigh all the 10-year old kids in Turkey, you took a sample of 16 and found that the mean weight was 90 pounds. This sample mean of 90 is a point estimate of the population mean.

A point estimate by itself is of limited usefulness because it does not reveal the uncertainty associated with the estimate; you do not have a good sense of how far this sample mean may be from the population mean. For example, can you be confident that the population mean is within 5 pounds of 90? You simply do not know.

08.10.2011 81

Confidence intervals provide more information than point estimates.

An example of a 95% confidence interval is shown below:

72.85 < μ < 107.15

There is good reason to believe that the population mean lies between these two bounds of 72.85 and 107.15 since 95% of the time confidence intervals contain the true mean.

If repeated samples were taken and the 95% confidence interval computed for each sample, 95% of the intervals would contain the population mean. Naturally, 5% of the intervals would not contain the population mean.

08.10.2011 82

It is natural to interpret a 95% confidence interval as an interval with a 0.95 probability of containing the population mean

The wider the interval, the more confident you are that it contains the parameter. The 99% confidence interval is therefore wider than the 95% confidence interval and extends from 4.19 to 7.61.

08.10.2011 83

Example

Assume that the weights of 10-year old children are normally distributed with a mean of 90 and a standard deviation of 36. What is the sampling distribution of the mean for a sample size of 9?

standard deviation of 36/3 = 12. Note that the standard deviation of a sampling distribution is its standard error.

90 - (1.96)(12) = 66.48

90 + (1.96)(12) = 113.52

The value of 1.96 is based on the fact that 95% of the area of a normal distribution is within 1.96 standard deviations of the mean; 12 is the standard error of the mean.

08.10.2011 84

Figure shows that 95% of the means are no more than 23.52 units

(1.96x12) from the mean of 90.

Now consider the probability that a sample mean computed in a

random sample is within 23.52 units of the population mean of 90.

Since 95% of the distribution is within 23.52 of 90, the probability that

the mean from any given sample will be within 23.52 of 90 is 0.95.

This means that if we repeatedly compute the mean (M) from a

sample, and create an interval ranging from M - 23.52 to M +

23.52, this interval will contain the population mean 95% of the

time.

08.10.2011 85

notice that you need to know the standard deviation (σ) in order

to estimate the mean. This may sound unrealistic, and it is.

However, computing a confidence interval when σ is known is

easier than when σ has to be estimated, and serves a

pedagogical purpose.

Suppose the following five were sampled from a normal

distribution with a standard deviation of 2.5: 2, 3, 5, 6, and 9. To

compute the 95% confidence interval, start by computing the

mean and standard error:

M = (2 + 3 + 5 + 6 + 9)/5 = 5. σm = = 1.118.

08.10.2011 86

Z.95 --the value is 1.96.

08.10.2011 87

If you had wanted to compute the 99% confidence interval, you would have set the shaded area to 0.99 and the result would have been 2.58.

The confidence interval can then be computed as follows:

Lower limit = 5 - (1.96)(1.118)= 2.81

Upper limit = 5 + (1.96)(1.118)= 7.19

08.10.2011 88

Estimating the Population Mean Using Intervals

Estimate the average GPA of the population of approximately 23000 KTU undergraduates.n=25 randomly selected students, sample average= 3.05.

Consider estimating the population average

Now chances are the true average is not equal to 3.05.

True KTU average GPA is between 1.00 and 4.00, and with high confidence between (2.50, 3.50); but what level of confidence do we have that it is between say, (2.75, 3.25) or (2.95, 3.15)?

Even better, can we find an interval (a, b) which will contain with 95%

certainty?

08.10.2011 89

Example:

Given the following GPA for 6 students: 2.80, 3.20, 3.75, 3.10, 2.95, 3.40

Calculate a 95% confidence interval for the population mean GPA.

08.10.2011 90

Determining Sample Size for Estimating the Mean

want to estimate the average GPA of KTU undergraduates this school year. Historically, the SD of student GPA is known to be .

If a random sample of size n=25 yields a sample mean of , then the population mean is estimated as lying within the interval

with 95% confidence. The plus-or-minus quantity .12 is called the margin of error of the sample mean associated with a 95% confidence level. It is also correct to say ``we are 95% confident that is within .12 of the sample mean 3.05''.

Confidence Interval for μ, Standard Deviation Estimated

It is very rare for a researcher wishing to estimate the mean of a population to already know its standard deviation. Therefore, the construction of a confidence interval almost always involves the estimation of both μ and σ. When σ is known -> M - zσM ≤ μ ≤ M + zσM is used for a confidence interval.

When σ is not known, Whenever the standard deviation is estimated (NOT KNOWN), the t rather than the normal (z) distribution should be used. for μ when σ is estimated is: M - t sM ≤ μ ≤ M + t sM where M is the sample mean, sM is an estimate of σM (standard error), and t depends on the degrees of freedom and the level of confidence.

confidence interval on the mean:

More generally, the formula for the 95% confidence interval on the mean is:

Lower limit = M - (t)(sm) Upper limit = M + (t)(sm)

where;

M is the sample mean, t is the t for the confidence level desired (0.95 in the above example), and sm is the estimated standard error of the mean.

A comparison of the t and normal distribution

A comparison of the t distribution with 4 df

(in blue) and the standard normal

distribution (in red).

Finding t-values

Find the t-value such that the area under the t distribution to the right of the t-value is 0.2 assuming 10 degrees of freedom. That is, find t0.20 with 10 degrees of freedom.

Upper tail probability p (area under the right side)

Example:

P[t(2) > 2.92] = 0.05

P[-2.92 < t(2) < 2.92] = 0.9

50% 60% 70% 80% 90% 95% 96% 98% 99% 99.5% 99.8% 99.9%

0.25 0.2 0.15 0.1 0.05 0.025 0.02 0.01 0.005 0.0025 0.001 0.0005

df

1 1.000 1.376 1.963 3.078 6.314 12.706 15.895 31.821 63.657 127.32 318.30 636.61

2 0.817 1.061 1.386 1.886 2.920 4.303 4.849 6.965 9.925 14.089 22.327 31.599

3 0.765 0.979 1.250 1.638 2.353 3.182 3.482 4.541 5.841 7.453 10.215 12.924

4 0.741 0.941 1.190 1.533 2.132 2.776 2.999 3.747 4.604 5.598 7.173 8.610

5 0.727 0.920 1.156 1.476 2.015 2.571 2.757 3.365 4.032 4.773 5.893 6.869

6 0.718 0.906 1.134 1.440 1.943 2.447 2.612 3.143 3.707 4.317 5.208 5.959

7 0.711 0.896 1.119 1.415 1.895 2.365 2.517 2.998 3.499 4.029 4.785 5.408

8 0.706 0.889 1.108 1.397 1.860 2.306 2.449 2.896 3.355 3.833 4.501 5.041

9 0.703 0.883 1.100 1.383 1.833 2.262 2.398 2.821 3.250 3.690 4.297 4.781

10 0.700 0.879 1.093 1.372 1.812 2.228 2.359 2.764 3.169 3.581 4.144 4.587

11 0.697 0.876 1.088 1.363 1.796 2.201 2.328 2.718 3.106 3.497 4.025 4.437

12 0.696 0.873 1.083 1.356 1.782 2.179 2.303 2.681 3.055 3.428 3.930 4.318

13 0.694 0.870 1.079 1.350 1.771 2.160 2.282 2.650 3.012 3.372 3.852 4.221

14 0.692 0.868 1.076 1.345 1.761 2.145 2.264 2.624 2.977 3.326 3.787 4.140

15 0.691 0.866 1.074 1.341 1.753 2.131 2.249 2.602 2.947 3.286 3.733 4.073

Abbreviated t table

df

0.95

0.99

2 4.303 9.925

3 3.182 5.841

4 2.776 4.604

5 2.571 4.032

8 2.306 3.355

10 2.228 3.169

20 2.086 2.845

50 2.009 2.678

100 1.984 2.626

Example

Assume that the following five numbers are sampled from a normal distribution: 2, 3, 5, 6, and 9 and that the standard deviation is not known. The first steps are to compute the sample mean and variance: M = 5 sm = 7.5 Standard error (sm)= 1.225

df = N - 1 = 4

t t tablethe value for the 95% interval for is

2.776.

Lower limit = 5 - (2.776)(1.225)= 1.60 Upper limit = 5 + (2.776)(1.225)= 8.40

Example

Suppose a researcher were interested in estimating the mean reading speed (number of words per minute) of high-school graduates and computing the 95% confidence interval. A sample of 6 graduates was taken and the reading speeds were: 200, 240, 300, 410, 450, and 600. For these data, M = 366.6667 sM= 60.9736 df = 6-1 = 5 t = 2.571

lower limit is: M - (t) (sM) = 209.904

upper limit is: M + (t) (sM) = 523.430,

95% confidence interval is: 209.904 ≤ μ ≤ 523.430

Thus, the researcher can conclude based on the rounded off 95% confidence interval that the mean reading speed of high-school graduates is between 210 and 523.

Homework 1

The mean time difference for all 47 subjects is 16.362 seconds and the standard deviation is 7.470 seconds. The standard error of the mean is 1.090.

A t table shows the critical value of t for 47 - 1 = 46 degrees of freedom is 2.013 (for a 95% confidence interval). The confidence interval is computed as follows:

Lower limit = 16.362 - (2.013)(1.090)= 14.17 Upper limit = 16.362 + (2.013)(1.090)= 18.56

Therefore, the interference effect (difference) for the whole population is likely to be between 14.17 and 18.56 seconds.

Homework 2

The pasteurization process reduces the amount of bacteria found in dairy products, such as milk. The following data represent the counts of bacteria in pasteurized milk (in CFU/mL) for a random sample of 12 pasteurized glasses of milk.

Construct a 95% confidence interval for the bacteria count.

NOTE: Each observation is in tens of thousand. So, 9.06 represents 9.06 x 104.

Prediction with Regression Analysis

The relationship(s) between values of the response variable and corresponding values of the predictor variable(s) is (are) not deterministic.

Thus the value of y is estimated given the value of x. The estimated value of the dependent variable is denoted y, and the population slope and intercept are usually denoted β1 and β0.

Linear Regression

The idea is to fit a straight line through data points

Linear Regression - Indicates that the relationship(s) between the dependent variable and the independent variable(s).

Can extend to multiple dimensions

correlation analysis is applied to independent factors: if X increases, what will Y do (increase, decrease, or perhaps not change at all)?

In regression analysis a unilateral response is assumed: changes in X result in changes in Y, but changes in Y do not result in changes in X.

0.1 0.0-0.1-0.2

0.4

0.3

0.2

0.1

0.0

-0.1

-0.2

-0.3

vwmkt

m1

S = 0.0590370 R-Sq = 31.3 % R-Sq(adj) = 30.8 %

m1 = 0.0095937 + 0.880436 vwmkt

Regression Plot

Linear regression means a regression that is linear in the parameters

A linear regression can be non-linear in the variables

Example: Y = β0 + β1X2

Some non-linear regression models can be transformed

into a linear regression model

(e.g., Y=aXbZc can be transformed into

lnY = ln a + b*ln X + c*ln Z)

Example

Given one variable

Goal: Predict Y

Example: Given Years of

Experience

Predict Salary

Questions: When X=10, what is Y?

When X=25, what is Y?

This is known as regression

X

(years)

Y (salary, $1,000)

3 30

8 57

9 64

13 72

3 36

6 43

11 59

21 90

1 20

For the example data

xy 5.32.23

5.3

,2.23

x=10 years prediction of y (salary) is:

23.2+35=58.2 K dollars/year.

Linear Regression Example Linear Regression: Y=3.5*X+23.2

0

20

40

60

80

100

120

0 5 10 15 20 25

Years

Sal

ary

XY

xy

xx

yyxx

i

i

i

ii

2)(

))((

Regression Error

We can also write a regression equation slightly differently:

Also called the residual, this is the difference between our estimate of the value of

the dependent variable y and the actual value of the dependent variable y.

Unless we have perfect prediction, many of the y values will fall off of the line. The added e in the equation refers to this fact. It would be incorrect to write the equation without the e, because it would suggest that the y scores are completely accounted for by just knowing the slope, x values, and the intercept. Almost always, that is not true. There is some error in prediction, so we need to add an e for error variation into the equation.

The actual values of y can be accounted for by the regression line equation (y=a+bx) plus some degree of error in our prediction (the e's).

r correlation coefficient

The correlation between X and Y is expressed by the correlation coefficient r :

xi = data X, ¯x = mean of data X yi = data Y, ¯y = mean of data Y

1 >r > -1

r = 1 perfect positive linear correlation between two variables

r = 0 no linear correlation (maybe other correlation) r = -1 perfect negative linear correlation

Notice that for the perfect correlation, there is a perfect line of points. They do not deviate from that line.

least squares

The principle is to establish a statistical linear relationship between two sets of corresponding data by fitting the data to a straight line by means of the "least squares" technique.

The resulting line takes the general form: y = bx + a

a = intercept of the line with the y-axis

b = slope (tangent)

a = 0, b= 1 perfect positive correlation without bias a= 0 systematic discrepancy (bias, error) between X and Y; b = 1 proportional response or difference between X and Y.

Example

Each point represents one student with a certain score for time on the exam, x, and grade, y. The scatter plot reveals that, in general, longer times on the exam tend to be associated with higher grades.

0.64

ID Grade on

Exam (x)

Time on

Exam (y)

X-X avr Y-Yavr (X-Xavr)*(Y-Yavr) (X-Xavr)2

1 88 60 8.6 18.55 159.53 73.96

2 96 53 16.6 11.55 191.73 275.56

3 72 22 -7.4 -19.45 143.93 54.76

4 78 44 -1.4 2.55 -3.57 1.96

5 65 34 -14.4 -7.45 107.28 207.36

6 80 47 0.6 5.55 3.33 0.36

7 77 38 -2.4 -3.45 8.28 5.76

8 83 50 3.6 8.55 30.78 12.96

9 79 51 -0.4 9.55 -3.82 0.16

10 68 35 -11.4 -6.45 73.53 129.96

11 84 46 4.6 4.55 20.93 21.16

12 76 36 -3.4 -5.45 18.53 11.56

13 92 48 12.6 6.55 82.53 158.76

r correlation

The Pearson r can be positive or negative, ranging from -1.0 to

1.0. If the correlation is 1.0, the longer the amount of time spent on

the exam, the higher the grade will be--without any exceptions. An r value of -1.0 indicates a perfect negative correlation--

without an exception, the longer one spends on the exam, the poorer the grade.

If r=0, there is absolutely no relationship between the two variables. When r=0, on average, longer time spent on the exam does not result in any higher or lower grade. Most often r is somewhere in between -1.0 and +1.0.

ID Grade on Exam (x) x2 Time on Exam (y) y2 xy

1 88 7744 60 3600 5280

2 96 9216 53 2809 5088

3 72 5184 22 484 1584

4 78 6084 44 1936 3432

5 65 4225 34 1156 2210

6 80 6400 47 2209 3760

7 77 5929 38 1444 2926

8 83 6889 50 2500 4150

9 79 6241 51 2601 4029

10 68 4624 35 1225 2380

11 84 7056 46 2116 3864

12 76 5776 36 1296 2736

13 92 8464 48 2304 4416

14 80 6400 43 1849 3440

15 67 4489 40 1600 2680

16 78 6084 32 1024 2496

17 74 5476 27 729 1998

18 73 5329 41 1681 2993

19 88 7744 39 1521 3432

20 90 8100 43 1849 3870

S 1588 127454 829 35933 66764

ID Grade on

Exam (x)

Time on

Exam (y)

X-X ort Y-Yort (X-Xort)*(Y-Yort) (X-Xort)2 (Y-Yort)

2

1 88 60 8,6 18,55 159,53 73,96 344,1025

2 96 53 16,6 11,55 191,73 275,56 133,4025

3 72 22 -7,4 -19,45 143,93 54,76 378,3025

4 78 44 -1,4 2,55 -3,57 1,96 6,5025

5 65 34 -14,4 -7,45 107,28 207,36 55,5025

6 80 47 0,6 5,55 3,33 0,36 30,8025

7 77 38 -2,4 -3,45 8,28 5,76 11,9025

8 83 50 3,6 8,55 30,78 12,96 73,1025

9 79 51 -0,4 9,55 -3,82 0,16 91,2025

10 68 35 -11,4 -6,45 73,53 129,96 41,6025

11 84 46 4,6 4,55 20,93 21,16 20,7025

12 76 36 -3,4 -5,45 18,53 11,56 29,7025

13 92 48 12,6 6,55 82,53 158,76 42,9025

14 80 43 0,6 1,55 0,93 0,36 2,4025

15 67 40 -12,4 -1,45 17,98 153,76 2,1025

16 78 32 -1,4 -9,45 13,23 1,96 89,3025

17 74 27 -5,4 -14,45 78,03 29,16 208,8025

18 73 41 -6,4 -0,45 2,88 40,96 0,2025

19 88 39 8,6 -2,45 -21,07 73,96 6,0025

20 90 43 10,6 1,55 16,43 112,36 2,4025

Total 1588 829 941,4 1366,8 1570,95

Average 79,4 41,45

r = 0.6424

r2 square of the correlation coefficient

r² is the proportion of the sum of squares explained in one-variable regression,

r² is the proportion of the sum of squares explained in multiple regression.

Is an R-Square < 1.00 Good or bad?

This is both a statistical and a philosophical question; It is quite rare, especially in the social sciences, to get an R-square that is really high (e.g., 98%).

The goal is NOT to get the highest R-square per se. Instead, the goal is to develop a model that is both statistically and theoretically sound, creating the best fit with existing data.

Do you want just the best fit, or a model that theoretically/conceptually makes sense? Yes, you might get a good fit with nonsensical explanatory variables. But, this opens you to spurious/intervening relationships. THEREFORE: hard to use model for explanation.

Why might an R-Square be less than 1.00?

underdetermined model (need more variables) nonlinear relationships measurement error sampling error not fully predictable/explainable even with all data

available; there is a certain amount of unexplainable chaos/static/randomness in the universe (which may be reassuring)

the unit of analysis is too aggregated (e.g., you are predicting mean housing values for a city -- you might get better results with predicting individual housing prices, or neighborhood housing prices).

Adjusted R2 (R-square)

What is an "Adjusted" R-Square? The Adjusted R-Square takes into account not only how much of the variation is explained, but also the impact of the degrees of freedom. It "adjusts" for the number of variables use. That is, look at the adjusted R- Square to see how adding another variable to the model both increases the explained variance but also lowers the degrees of freedom. Adjusted R2 = 1- (1 - R2 )((n - 1)/(n - k - 1)). As the number of variables in the model increases, the gap between the R-square and the adjusted R-square will increase. This serves as a disincentive to simply throwing in a huge number of variables into the model to increase the R-square.

This adjusted value for R-square will be equal or smaller than the regular R-square. The adjusted R-square adjusts for a bias in R-square. R-square tends to over estimate the variance accounted for compared to an estimate that would be obtained from the population. There are two reasons for the overestimate, a large number of predictors and a small sample size.

So, with a small sample and with few predictors, adjusted R-square should be very similar to the R-square value. Researchers and statisticians differ on whether to use the adjusted R-square. It is probably a good idea to look at it to see how much your R-square might be inflated, especially with a small sample and many predictors.

Example

Suppose we have collected the following sample of 6 observations on age and income:

Find the estimated regression line for the sample of six observations we have collected on age and income:

Which is the independent variable and which is the dependent variable for this problem?

Cautions About Simple Linear Regression

Correlation and regression describe only linear relations Correlation and least-squares regression line are not resistant to

outliers Predictions outside the range of observed data are often

inaccurate Correlation and regression are powerful tools for describing

relationship between two variables, but be aware of their limitations

Multiple Prediction

Regression analysis allows us to use more than one independent variable to predict values of y. Take the fat intake and blood cholesterol level study as an example. If we want to predict cholesterol as accurately as possible, we need to know more about diet than just how much fat intake there is.

On the island of Crete, they consume a lot of olive oil, so there fat intake is high. This, however, seems to have no dramatic affect on cholesterol (at least the bad cholesterol, the LDLs). They also consume very little cholesterol in their diet, which consists more of fish than high cholesterol foods like cheese and beef (hopefully this won't be considered libelous in Texas). So, to improve our prediction of blood cholesterol levels, it would be helpful to add another predictor, dietary cholesterol.

From Bivariate to Multiple regression: what changes?

potentially more explanatory power with more variables.

the ability to control for other variables: and one sees the interaction of the various explanatory variables. partial correlations and multicollinearity.

harder to visualize drawing a line through three+ n-dimensional space.

the R is no longer simply the square of the correlation statistic r.

From Two to Three Dimensions With simple regression (one predictor) we had only the x-axis and the y-axis. Now we need an axis for x1, x2, and y.

where Y' is the predicted score, X1 is the score on the first predictor variable, X2 is the score on the second, etc. The Y intercept is A. The regression coefficients (b1, b2, etc.) are analogous to the slope in simple regression.

If we want to predict these points, we now need a regression plane rather than just a regression line. That looks something like this:

More than one prediction attribute

X1, X2

For example,

X1=„years of experience‟

X2=„age‟

Y=„salary‟

2211 xxY

x1

x2

y

0=10

0

(xi1, xi2)

E(yi)

yi

i

Response Surface

The parameters β0, β1, β2,… , βk are called partial regression coefficients.

β1 represents the change in y corresponding to a unit increase in x1, holding all the other predictors constant.

A similar interpretation can be made for β2, β3, ……, βk

Regression Statistics

Multiple R 0,995

R Square 0,990

Adjusted R Square 0,989

Standard Error 0,008

Observations 30

ANOVA

df SS MS F

Significa

nce F

Regression 4 0,164 0,041 628,372 0,000

Residual 25 0,002 0,000

Total 29 0,165

Coefficie

nts

Standard

Error t Stat P-value

Intercept 0,500 0,008 60,294 0,000

Percent of Gross Hhd Income Spent on rent -0,399 0,016 -24,610 0,000

percent 2-parent families -0,288 0,015 -19,422 0,000

Police Anti-Drug Program? -0,004 0,004 -1,238 0,227

Active Tenants Group? (1 = yes; 0 = no) -0,102 0,004 -28,827 0,000

Controlling also for this new variable, the police anti-drug program is no

longer statistically significant, an instead the presence of the active

tenants group makes the dramatic difference. (and look at that great R

square!). However, we are no quite done…

SUMMARY OUTPUT

Regression Statistics

Multiple R 0.928

R Square 0.861

Adjusted R Square 0.850

Standard Error 0.030

Observations 30

ANOVA

df SS MS F Significance F

Regression 2 0.149 0.074 83.484 0.000

Residual 27 0.024 0.001

Total 29 0.173

Coeffici

ents

Standard

Error t Stat P-value BETA

Intercept 0.36582 0.017 20.908 0.000

percent 2-parent

families -0.2565 0.051 -5.017 0.000 -0.362

Active Tenants Group?

(1 = yes; 0 = no) -0.1246 0.011 -11.347 0.000 -0.821

Since the police variable now has a statistically insignificant t-score, we remove it

from the model. (We also remove the income variable, since it also becomes

insignificant after we remove the police variable.) We are left with two independent

variables: percent of 2-parent families and active tenants group.

Stepwise Regression Algorithms

• Backward Elimination

• Forward Selection

• Stepwise Selection

Backward Elimination

1. Fit the model containing all (remaining)

predictors.

2. Test each predictor variable, one at a

time, for a significant relationship with y.

3. Identify the variable with the largest pvalue.

If p > α, remove this variable from

the model, and return to (1.).

4. Otherwise, stop and use the existing

model.

Forward Selection

1. Fit all models with one (more) predictor.

2. Test each of these predictor variables,

for a significant relationship with y.

3. Identify the variable with the smallest pvalue.

If p < α, add this variable to the

model, and return to (1.).

4. Otherwise, stop and use the existing

model.

Stepwise Selection

• The Stepwise Selection method is

basically Forward Selection with Backward

Elimination added in at every step.

Stepwise Selection 1. Fit all models with one (more) predictor. 2. Test each of these predictor variables, for a significant relationship with y. 3. Identify the variable with the smallest p-value. If p < α, add this variable to the model, and return to (1.). 4. Now, for the model being considered, test each predictor variable, one at a time, for a significant relationship with y. 5. Identify the variable with the largest p-value. If p > α, remove this variable from the model, and return to (1.). 6. Otherwise, stop and use the existing model.

Linear regression

Review

Multiple Regression Models

Chapter Topics

The Multiple Regression Model

Contribution of Individual Independent Variables

Coefficient of Determination

Categorical Explanatory Variables

Transformation of Variables

Violations of Assumptions

Qualitative Dependent Variables

Multiple Regression Models

MultipleRegression

Models

LinearDummy

Variable

LinearNon-

Linear

Inter-action

Poly-

Nomial

SquareRoot

Log Reciprocal Exponential

Linear Multiple Regression Model

Additional Assumption for Multiple Regression

No exact linear relation exists between any subset of explanatory variables (perfect

"multicollinearity")

The Multiple Regression Model

ipipiii XXXY 22110

Relationship between 1 dependent & 2 or more independent variables is a linear

function Population

Y-intercept Population slopes

Dependent (Response)

variable for sample

Independent (Explanatory)

variables for sample model

Random

Error

ipipiii eXbXbXbbY 22110

Population Multiple Regression Model

X2

Y

X1

YX =

0 +

1X

1i +

2X

2i

0

Yi =

0 +

1X

1i +

2X

2i +

i

Response

Plane

(X1i

,X2i

)

(Observed Y)

i

Bivariate model

Sample Multiple Regression Model

X2

Y

X1

b0

Yi = b

0 + b

1X

1i + b

2X

2i + e

i

Response

Plane

(X1i

,X2i

)

(Observed Y)

^

ei

Yi = b

0 + b

1X

1i + b

2X

2i

Bivariate model

Parameter Estimation

Linear Multiple Regression Model

O il (G a l) T e m p In su la tio n

275.30 40 3

363.80 27 3

164.30 40 10

40.80 73 6

94.30 64 6

230.90 34 6

366.70 9 6

300.60 8 10

237.80 23 10

121.40 63 3

31.40 65 10

203.50 41 6

441.10 21 3

323.00 38 3

52.50 58 10

Multiple Regression Model: Example

(0F)

Develop a model for estimating

heating oil used for a single

family home in the month of

January based on average

temperature and amount of

insulation in inches.

Interpretation of Estimated Coefficients

Slope (bP)

Estimated Y changes by bP for each 1 unit increase in XP holding all other variables constant (ceterus paribus) Example: If b1 = -2, then fuel oil usage (Y) is

expected to decrease by 2 gallons for each 1 degree increase in temperature (X1) given the inches of insulation (X2)

Y-Intercept (b0) Average value of Y when all XP = 0

Sample Regression Model: Example

C o e ffic ie n ts

I n te r c e p t 5 6 2 . 1 5 1 0 0 9 2

X V a r i a b l e 1 -5 . 4 3 6 5 8 0 5 8 8

X V a r i a b l e 2 -2 0 . 0 1 2 3 2 0 6 7

iii X.X..Y 21 012204375151562

For each degree increase in

temperature, the average amount of

heating oil used is decreased by 5.437

gallons, holding insulation constant.

For each increase in one inch of

insulation, the use of heating oil is

decreased by 20.012 gallons,

holding temperature constant.

Evaluating the Model

Evaluating Multiple Regression Model Steps

Examine variation measures

Test parameter significance

Overall model

Portions of model

Individual coefficients

Variation Measures

Coefficient of Multiple Determination

r2Y.12..P = Explained variation = SSR

Total variation SST

r2=0 all the variables taken together do

not explain variation in Y

NOT proportion of variation in Y „explained‟ by all X variables taken together

Reflects

Sample size

Number of independent variables

Smaller than r2Y.12..P

Sometimes used to compare models

Adjusted Coefficient of Multiple Determination

Simple and Multiple Regression Compared:Example

Two simple regressions:

ABSENCES= + 1AUTONOMY

ABSENCES= + 2SKILLVARIETY

Multiple Regression:

ABSENCES= + 1AUTONOMY+

2SKILLVARIETY

Overlap in Explanation

SIMPLE REGRESSION: AUTONOMY MULTIPLE REGRESSION

Multiple R 0,169171 Multiple R 0,231298

R Square 0,028619 R Square 0,053499

Adjusted R Square0,027709 Adjusted R Square0,051723

Standard Error 12,443 Standard Error12,28837

Observations 1069 Observations 1069

ANOVA ANOVA

df SS MS F Significance F df SS MS F

Regression 1 4867,198 4867,198 31,43612 2,62392E-08 Regression 2 9098,483 4549,242 30,1266

Residual 1067 165201,7 154,8282 Residual 1066 160970,4 151,0041

Total 1068 170068,9 Total 1068 170068,9

SIMPLE REGRESSION: SKILL VARIETY

Multiple R 0,193838 0,06619206 SUM OF SIMPLE R2

R Square 0,037573 0,05349881 MULTIPLE R2

Adjusted R Square0,036671 0,01269325 OVERLAP ATTRIBUTED TO BOTH

Standard Error 12,38552

Observations 1069

11257,2098 SUM OF REGRESSION SUM OF SQUARES

ANOVA 9098,4831 REGRESSION SUM OF SQUARES

df SS MS F Significance F 2158,72671 OVERLAP

Regression 1 6390,011 6390,011 41,6556 1,64882E-10

Residual 1067 163678,9 153,401

Total 1068 170068,9

Testing Parameters

F 0 3.89

H0: 1 = 2 = … = p = 0

H1: At least one I 0

= .05

df = 2 and 12

Critical Value(s):

Test Statistic:

Decision:

Conclusion:

Reject at = 0.05

There is evidence that at

least one independent

variable affects Y

= 0.05

F

Test for Overall Significance Example Solution

168.47

Test for Significance: Individual Variables

•Shows if there is a linear relationship between the

variable Xi and Y

•Use t test Statistic

•Hypotheses:

H0: i = 0 (No linear relationship)

H1: i 0 (Linear relationship between Xi and Y)

C o e ffic ie n ts S ta n d a rd E rro r t S ta t

I n te r c e p t 5 6 2 . 1 5 1 0 0 9 2 1 . 0 9 3 1 0 4 3 3 2 6 . 6 5 0 9 4

X V a r i a b l e 1 -5 . 4 3 6 5 8 0 6 0 . 3 3 6 2 1 6 1 6 7 -1 6 . 1 6 9 9

X V a r i a b l e 2 -2 0 . 0 1 2 3 2 1 2 . 3 4 2 5 0 5 2 2 7 -8 . 5 4 3 1 3

t Test Statistic Excel Output: Example

t Test Statistic for X1

(Temperature)

t Test Statistic for X2

(Insulation) Seb

bt

H0: 1 = 0

H1: 1 0

df = 12

Critical Value(s):

Test Statistic:

Decision:

Conclusion:

Reject H0 at = 0.05

There is evidence of a

significant effect of

temperature on oil

consumption. Z 0 2.1788 -2.1788

.025

Reject H 0 Reject H 0

.025

Does temperature have a significant effect on monthly

consumption of heating oil? Test at = 0.05.

t Test : Example Solution

t Test Statistic = -16.1699

Example: Analysis of job earnings

What is the impact of employer tenure

(ERTEN), unemployment (UNEM) and

education (EDU) on job earnings (JEARN)?

Example: Analysis of job earnings

Correlations

Results: Anova

Results

Examines the contribution of a set of X variables to the relationship with Y

Null hypothesis:

Variables in set do not improve significantly the model when all other variables are included

Alternative hypothesis:

At least one variable is significant

Testing Model Portions

Only one-tail test

Requires comparison of two regressions

One regression includes everything

One regression includes everything except the portion to be tested.

Testing Model Portions

Testing Model Portions Test Statistic

)X, ,

))/k-)X, ,

3

3

21

321

(

(((

XXMSE

XSSRXXSSRF

From ANOVA section

of regression for

iiii XbXbXbbY 3322110ˆ

ii XbbY 330ˆ

From ANOVA section

of regression for

Test H0: 1= 2 = 0 in a 3 variable model

Testing Portions of Model: SSR

Contribution of X1 and X2 given X3 has been

included:

SSR(X1and X2 X3) = SSR(X1,X2 and X3) -

SSR(X3)

From ANOVA section of

regression for

iiii XbXbXbbY 3322110ˆ

From ANOVA section of

regression for

ii XbbY 320ˆ

Partial F Test For Contribution of Set of X variables

Hypotheses:

H0 : Variables Xi... do not significantly improve

the model given all others included

H1 : Variables Xi... significantly improve the

model given all others included

Test Statistic:

F = MSE

kothersallXSSR i /)....(

With df = k and (n - p -1)

k=# of variables

tested

Testing Portions of Model: Example

Test at the = .05 level

to determine if the

variable of average

temperature

significantly improves

the model given that

insulation is included.

Testing Portions of Model: Example

H0: X1 does not improve

model (X2 included)

H1: X1 does improve model

= .05, df = 1 and 12

Critical Value = 4.75

A N O V A

S S

R e g r e s s i o n 5 1 0 7 6 . 4 7

R e s i d u a l 1 8 5 0 5 8 . 8

T o t a l 2 3 6 1 3 5 . 2

717,676

076,51015,228)( 21

MSE

XXSSRF

A N O V A

S S M S

R e g re ssio n 2 2 8 0 1 4 .6 2 6 3 1 1 4 0 0 7 .3 1 3

R e sid u a l 8 1 2 0 .6 0 3 0 1 6 6 7 6 .7 1 6 9 1 8

T o ta l 2 3 6 1 3 5 .2 2 9 3

(For X1 and X2) (For X2)

= 261.47

Conclusion: Reject H0. X1 does improve model

Do I need to do this for one variable?

•The F test for the inclusion of a single variable

after all other variables are included in the

model is IDENTICAL to the t test of the slope

for that variable

•The only reason to do an F test is to test several

variables together.

Example: Collinear Variables

20,000 Execs in 439 Corps: Dependent Variable=base pay+bonus

Individual Simple Regression Multiple Regression

R2 Contribution to R2

Company Dummies .33 .08

Occupational Dummies .52 .022

Position in hierarchy .69 .104

Human Capital Vars .28 .032

Shared .632

TOTAL .87

Yedek

Multiple Regression

The value of outcome variable depends on several explanatory variables.

F-test. To judge whether the explanatory variables in the model adequately describe the outcome variable.

t-test. Applies to each individual explanatory variable. Significant t indicates whether the explanatory variable has an effect on outcome variable while controlling for other X‟s.

T-ratio. To judge the relative importance of the explanatory variable.

Problem of Multicollinearity

When explanatory variables are correlated there is difficulty in interpreting the effect of explanatory variables on the outcome.

Check by:

Correlation coefficient matrix (see next slide).

F-test significant with insignificant t.

Large changes occur in the regression coefficients when variables are added or deleted. (Variance Inflation). Vi > 4 or 5 means there is multicollinearity.

Example of a Matrix Plot

This matrix plot comprises several scatter plots to provide visual information as to whether variables are correlated

The arrow points at a scatter plot where two explanatory variables are strongly correlated

Selecting the most Economic Model

The purpose is to find the smallest number of explanatory variables which make the maximum contribution to the outcome.

After excluding variables that may be causing multicollinearity, examine the table of t-ratios in the full model. Those variables with a significant t are included in the sub-set.

In the Analysis of Variance table examine the column headed SEQ SS. Check that the candidate variables are indeed making a sizable contribution to the Regression Sum of Squares

Stepwise Regression Analysis

Stepwise finds the explanatory variable with the highest R2 to start with. It then checks each of the remaining variables until two variables with highest R2 are found. It then repeats the process until three variables with highest R2 are found, and so on.

The overall R2 gets larger as more variables are added.

Stepwise may be useful in the early exploratory stage of data analysis, but not to be relied upon for the confirmatory stage.

Is the Model Adequate?

Judged by the following:

R2 value. Increase in R2 on adding another variable gives a useful hint

Adjusted R2 is a more sensitive measure.

Smallest value of s (standard deviation).

C-p statistic. A model with the smallest C-p is used such that Cp value is closest to p (the number of parameters in the

Confidence Interval Estimate For The Slope

Provide the 95% confidence interval for the population

slope 1 (the effect of temperature on oil consumption).

111 bpn Stb

Coefficients Lower 95% Upper 95%

Intercept 562,151009 516,1930837 608,108935

X Variable 1 -5,4365806 -6,169132673 -4,7040285

X Variable 2 -20,012321 -25,11620102 -14,90844

-6.169 1 -4.704

The average consumption of oil is reduced by between

4.7 gallons to 6.17 gallons per each increase of 10 F in

houses with the same insulation.

Related Documents