Understanding User-generated Content on Social Media

Meena Nagarajan

Ph.D. Dissertation Defense

Kno.e.sis Center, College of Engineering and Computer Science

Wright State University

1

Introductions and Thank-you!

2

Social Information Needs

• Facts, Networked Public Conversations, Opinions, Emotions, Preferences..

3

Social Information Needs

• Can we use this information to assess a population’s preference?

• Can we study how these preferences propagate in a network of friends?

• Are such crowd-sourced preferences a good substitute for traditional polling methods?

4

Social Information Processing

• "Who says what, to whom, why, to what extent and with what effect?" [Lasswell]

• Network: Social structure emerges from the aggregate of relationships (ties)

• People: poster identities, the active effort of accomplishing interaction

• Content : studying the content of communication.

ABOUTNESS of textual user-generated content via the lens of TEXT MINING

5

Aboutness Of Text

• One among several terms used to express certain attributes of a discourse, text or document

• characterizing what a document is about, what its content, subject or topic matter are

• A central component of knowledge organization and information retrieval

• For machine and human consumption

6

Aboutness & Subgoals in IE

• Named entity recognition

• Co-reference, anaphora resolution

• "International Business Machines" and "IBM"; ‘he’ in a passage refers to the mention of ‘John Smith’

• Terminology, key-phrase, lexical chain extraction

• Relationship and fact extraction

• ‘person works for organization’

7

Text Mining and Aboutness

• Thesis focus: ‘Aboutness’ understanding via Text Mining

• Gleaning meaningful information from natural language text useful for particular purposes

• Indicators of thematic elements for aboutness

• via NER, Key phrase extraction

8

Aboutness & The Role Of Context

• Extracting thematic elements : interpretation of the individual elements in context.

• (a) I can hear bass sounds. (b) They like grilled bass.

• Typical context cues that are employed

• Word Associations, Linguistic Cues, Syntactic, Structural Cues, Knowledge Sources..
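The "bass" example above can be sketched in a few lines of Python. This is only an illustration of word-association cues, not a method from the talk: the cue-word lists and the `guess_sense` helper are hypothetical and hand-picked for the two example sentences.

```python
# Hypothetical cue-word lists (assumption; chosen by hand for illustration).
SENSE_CUES = {
    "music": {"hear", "sounds", "guitar", "play", "loud"},
    "fish": {"grilled", "caught", "eat", "like", "river"},
}


def guess_sense(sentence, cues=SENSE_CUES):
    """Pick the sense whose cue words overlap most with the sentence."""
    tokens = {t.strip(".,!?").lower() for t in sentence.split()}
    scores = {sense: len(tokens & words) for sense, words in cues.items()}
    best = max(scores, key=scores.get)
    # With no overlapping cue at all, decline to guess.
    return best if scores[best] > 0 else None


print(guess_sense("I can hear bass sounds."))
print(guess_sense("They like grilled bass."))
```

The first sentence matches only music cues and the second only fish cues; a sentence with no cue words returns `None`, which is the situation informal text often produces.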

9

10

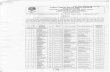

1.2. THESIS CONTRIBUTIONS – ‘ABOUTNESS’ OF INFORMAL TEXT August 10, 2010

User-generated content on Twitter during the 2009 Iran Election

show support for democracy in Iran add green overlay to your Twitter avatar with 1-click - http://helpiranelection.com/

Twitition: Google Earth to update satellite images of Tehran #Iranelection http://twitition.com/csfeo @patrickaltoft

Set your location to Tehran and your time zone to GMT +3.30. Security forces are hunting for bloggers using location/timezone searches

User comments on music artist pages on MySpace

Your music is really bangin!

You’re a genius! Keep droppin bombs!

u doin it up 4 real. i really love the album.

hey just hittin you up showin love to one of chi-town’s own. MADD LOVE.

Comments on Weblogs about movies and video games

I decided to check out Wanted demo today even though I really did not like the movie

It was THE HANGOVER of the year..lasted forever..so I went to the movies..bad choice picking GI Jane worse now

Excerpt from a blog around the 2009 Health Care Reform debate

Hawaii’s Lessons - NY times

In Hawaii’s Health System, Lessons for Lawmakers

Since 1974, Hawaii has required all employers to provide relatively generous health care benefits to any employee who works 20 hours a week or more. If health care legislation passes in Congress, the rest of the country may barely catch up. Lawmakers working on a national health care fix have much to learn from the past 35 years in Hawaii, President Obama’s native state. Among the most important lessons is that even small steps to change the system can have lasting effects on health. Another is that, once benefits are entrenched, taking them away becomes almost impossible. There have not been any serious efforts in Hawaii to repeal the law, although cheating by employers may be on the rise. But perhaps the most intriguing lesson from Hawaii has to do with costs. This is a state where regular milk sells for $8 a gallon, gasoline costs $3.60 a gallon and the median price of a home in 2008 was $624,000, the second-highest in the nation.

Figure 1.1: Examples of user-generated content from different social media platforms


Unmediated Interpersonal communication

Informal English Domain

Context is implicit

Interactions between like-minded people

Variations and creativity in expression

Properties of the medium

One solution rarely fits all social media content

Thesis Contributions

• Compensating for informal, highly variable language and lack of context

• Examining usefulness of multiple context cues for text mining algorithms

• Context cues: Document corpus, syntactic, structural cues, social medium and external domain knowledge

• End goal: NER, Key Phrase Extraction

11

Thesis Statements

• We show that for 2 Aboutness Understanding tasks -- NER, Key Phrase Extraction

• Multiple kinds of contextual information can supplement and improve the reliability and performance of existing NLP/ML algorithms

• Improvements tend to be robust across domains and data sources

12

13

Thesis Contributions

Task: Aboutness of text

Slide axes: Context Cues (In Content; Medium Metadata, Structural cues; External Knowledge Sources) against Text Formality (Weblogs; MySpace Music Forum)

Weblogs: NER - Movie Names

• In Content: Word associations from large corpora

• Medium Metadata, Structural cues: Blog URL, Title, Post URL

• External Knowledge Sources: Wikipedia Infoboxes

• Example: “It was THE HANGOVER of the year..lasted forever.. so I went to the movies..bad choice picking “GI Jane” worse now”

MySpace Music Forum: NER - Music Album/Track names

• In Content: Word associations from large corpora, POS Tags, Syntactic Dependencies

• Medium Metadata, Structural cues: Page URL

• External Knowledge Sources: Music Brainz, UrbanDictionary

• Example: I loved your music Yesterday!

14


4.1. KEY PHRASE EXTRACTION - ‘ABOUTNESS’ OF CONTENT August 10, 2010

document that are descriptive of its contents.

The contributions made in this thesis fall under the second category of extracting key phrases that are explicitly present in the content and are also indicative of what the document is ‘about’.

The focus of previous approaches to key phrase extraction has been on extracting phrases that summarize a document, e.g. a news article, a web page, a journal article or a book. In contrast, the focus of this thesis is not on summarizing a document generated by users on social media platforms but on extracting key phrases that are descriptive of information present in multiple observations (or documents) made by users about an entity, event or topic of interest.

The primary motivation is to obtain an abstraction of a social phenomenon that makes volumes of unstructured user-generated content easily consumable by humans and agents alike. As an example of the goals of our work, Table 4.1 shows key phrases extracted from online discussions around the 2009 Health Care Reform debate and the 2008 Mumbai terror attack, summarizing hundreds of user comments to give a sense of what the population cared about on a particular day.

2009 Health Care Reform

• Health care debate

• Healthcare staffing problem

• Obamacare facts

• Healthcare protestors

• Party ratings plummet

• Public option

2008 Mumbai Terror Attack

• Foreign relations perspective

• Indian prime minister speech

• UK indicating support

• Country of India

• Rejected evidence provided

• Photographers capture images of Mumbai

Table 4.1: Showing summary key phrases extracted from more than 500 online posts on Twitter around two news-worthy events on a single day.

Solutions to key phrase extraction have ranged from unsupervised techniques that are based on heuristics to identify phrases to supervised learning approaches that learn from human
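The idea of describing many posts at once, rather than summarizing one document, can be sketched as follows. This is a minimal illustration under stated assumptions and not the thesis algorithm: the stopword list, the scoring (document frequency times phrase length) and the `key_phrases` helper are all hypothetical.

```python
from collections import Counter

# Small hypothetical stopword list (assumption, for illustration only).
STOPWORDS = {"the", "a", "an", "of", "is", "in", "on", "to", "and", "for", "about"}


def ngrams(tokens, n):
    """All contiguous n-token windows of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def key_phrases(posts, max_n=3, top_k=3):
    """Rank n-grams by how many posts contain them, favouring longer phrases."""
    doc_freq = Counter()
    for post in posts:
        tokens = [t.strip(".,!?#").lower() for t in post.split()]
        tokens = [t for t in tokens if t]
        seen = set()
        for n in range(1, max_n + 1):
            for gram in ngrams(tokens, n):
                # Skip phrases that start or end with a stopword.
                if gram[0] in STOPWORDS or gram[-1] in STOPWORDS:
                    continue
                seen.add(gram)
        doc_freq.update(seen)  # count each phrase once per post
    ranked = sorted(doc_freq.items(), key=lambda kv: (-kv[1] * len(kv[0]), kv[0]))
    return [" ".join(gram) for gram, _ in ranked[:top_k]]


posts = [
    "The public option is dead",
    "Support the public option now",
    "Obamacare facts about the public option",
]
print(key_phrases(posts, top_k=1))
```

Counting a phrase once per post, instead of once per occurrence, is what makes the score reflect agreement across multiple observations.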


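Thesis Contributions

Task: Aboutness of text

Slide axes: Context Cues (In Content; Medium Metadata, Structural cues; External Knowledge Sources) against Text Formality (Twitter; Facebook, MySpace Forums)

Twitter: Key Phrase Extraction

• In Content: n-grams for thematic cues

• Medium Metadata, Structural cues: spatial, temporal metadata

Facebook, MySpace Forums: Key Phrase Elimination

• In Content: Word associations from large corpora

• Medium Metadata, Structural cues: Page Title

• External Knowledge Sources: Seeds from a Domain Knowledge base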

Thesis Contributions

Building Social Intelligence Applications

• Building on results of NER, Key Phrase Extraction: WHO, WHAT, WHEN, WHERE, WHY, HOW

• Social Intelligence Applications

1. Application of NER results: BBC Sound Index with IBM Almaden

2. Application of Key Phrase Extraction: Twitris @ Knoesis

15

Thesis Significance, Impact

• Focuses on relatively less explored content aspects of expression on social media platforms

• Why text on social media is different from what most text mining applications have focused on

• Combination of top-down, bottom-up analysis for informal text

• Statistical NLP, ML algorithms over large corpora

• Models and rich knowledge bases in a domain

16

TALK OUTLINE - In Detail

ABOUTNESS UNDERSTANDING

• Named Entity Identification in Informal Text

TALK OUTLINE - Overviews

• Topical Key Phrase Extraction from Informal Text

• Applications and Consequences of Understanding content : Social Intelligence Application

• BBC SoundIndex, Twitris

17

18

Named Entity Recognition

I loved your music Yesterday!

“It was THE HANGOVER of the year..lasted forever.. so I went to the movies..bad choice picking “GI Jane” worse now”

Thesis Contributions

19

Predominant Focus of Prior Work vs. Thesis Focus

• Entity Type Focus

Prior work: PER, LOC, ORGN, DATE, TIME.. [TREC]

Thesis focus: Cultural Entities

• Method

Prior work: Sequential Labeling

Thesis focus: Spot and Disambiguate (pre-supposed knowledge)

• Document Types

Prior work: Scientific Literature, News, Blogs (formal)

Thesis focus: Social Media Content, Blogs, MySpace Forums

• Features

Prior work: Word-Level Features, List-lookup Features, Documents and corpus features

Thesis focus: Word-Level Features, List-lookup Features, Documents and corpus features

Cultural Named Entities

20

• NER focus in my work: Cultural Named Entities

• Names of books, music albums, films, video games, etc.

• The Lord of the Rings, Lips, Crash, Up, Wanted, Today, Twilight, Dark Knight...

• Common words in a language

Characteristics of Cultural Entities

• Varied senses, several poorly documented

• Merry Christmas covered by 60+ artists

• Star Trek: movies, tv series, media franchise.. and cuisines !!

• Changing contexts with recent events

• The Dark Knight reference to Obama, health care reform

• Unrealistic expectations: Comprehensive sense definitions, enumeration of contexts, labeled corpora for all senses ..

NER Relaxing the closed-world sense assumptions

21

Thesis Contributions

22

Predominant Focus of Prior Work vs. Thesis Focus

• Entity Types

Prior work: PER, LOC, ORGN, DATE, TIME

Thesis focus: Cultural Entities

• Method

Prior work: Sequential Labeling

Thesis focus: Spot and Disambiguate (pre-supposed knowledge)

• Document Types

Prior work: Scientific Literature, News, Blogs (formal)

Thesis focus: Social Media Content, Blogs, MySpace Forums

• Features

Prior work: Word-Level Features, List-lookup Features, Documents and corpus features

Thesis focus: Word-Level Features, List-lookup Features, Documents and corpus features

A Spot and Disambiguate Paradigm

23

• NER generally a sequential prediction problem

• NER system that achieves 90.8 F1 score on the CoNLL-2003 NER shared task (PER, LOC, ORGN entities) [Lev Ratinov, Dan Roth]

• My approach: Spot and Disambiguate Paradigm

• Dictionary or list of entities we want to spot

• Disambiguate in context (natural language, domain knowledge cues)

• Binary Classification
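The spot-then-disambiguate flow can be sketched as below. This is a simplified stand-in, not the classifiers from the thesis: the binary decision here is a plain cue-word overlap test, and the function names, the cue list and the 40-character window are assumptions made for illustration.

```python
import re


def spot(text, entity_list):
    """Spot step: find every dictionary entity in the text (case-insensitive)."""
    spots = []
    for entity in entity_list:
        pattern = r"\b" + re.escape(entity) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            spots.append((entity, match.start(), match.end()))
    return sorted(spots, key=lambda s: s[1])


def is_target_sense(text, start, end, cue_words, window=40):
    """Disambiguate step: a binary decision from cue words near the spot.

    A trained binary classifier would normally sit here; the overlap test
    is only a placeholder (assumption).
    """
    context = (text[max(0, start - window):start] + " " + text[end:end + window]).lower()
    context_tokens = set(re.findall(r"[a-z']+", context))
    return bool(context_tokens & cue_words)


def spot_and_disambiguate(text, entity_list, cue_words):
    return [(e, is_target_sense(text, s, t, cue_words)) for e, s, t in spot(text, entity_list)]


movie_cues = {"movie", "film", "scenes", "trailer", "cinema"}  # hypothetical cues
print(spot_and_disambiguate(
    "I am watching Pattinson scenes in Twilight for the nth time.",
    ["Twilight"], movie_cues))
print(spot_and_disambiguate(
    "I spent a romantic evening watching the Twilight by the bay..",
    ["Twilight"], movie_cues))
```

Because every spot is judged independently as "target sense or not", the task becomes binary classification rather than sequence labeling.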

Thesis Contributions

24

Predominant Focus of Prior Work vs. Thesis Focus

• Entity Types

Prior work: PER, LOC, ORGN, DATE, TIME

Thesis focus: Cultural Entities

• Method

Prior work: Sequential Labeling

Thesis focus: Spot and Disambiguate (pre-supposed knowledge)

• Document Types

Prior work: Scientific Literature, News, Blogs (formal)

Thesis focus: Informal Social Media Content, Blogs, MySpace Forums, Twitter, Facebook

• Features

Prior work: Word-Level Features, List-lookup Features, Documents and corpus features

Thesis focus: SENSE BIASED Word-Level Features, List-lookup Features, Documents and corpus features

NER Algorithmic Contributions Supervised, Two Flavors

25

3.2. THESIS FOCUS - CULTURAL NER IN INFORMAL TEXT August 10, 2010

(a) Multiple Senses in the same Music Domain

• Bands with a song “Merry Christmas”: 60

• Songs with “Yesterday” in the title: 3,600

• Releases of “American Pie”: 195

• Artists covering “American Pie”: 31

(b) Multiple senses in different domains for the same movie entities

• Twilight: Novel, Film, Short story, Albums, Places, Comics, Poem, Time of day

• Transformers: Electronic device, Film, Comic book series, Album, Song, Toy Line

• The Dark Knight: Nickname for comic superhero Batman, Film, Soundtrack, Video game, Themed roller coaster ride

Table 3.3: Challenging Aspects of Cultural Named Entities

3.2.3 Two Approaches to Cultural NER

In this thesis, we present two approaches to Cultural NER, both addressing different challenges in their identification. Cultural entities display two characteristic challenges related to their sense or meanings – certain Cultural entities are so commonly used that they tend to have multiple senses in the same domain. The music industry is a great example of this scenario where popular themes feature in several track/album titles of different artists. Table 3.3(a) shows examples of such cases – for example, there are more than 3600 songs with the word ‘Yesterday’ in their title.

Connecting mentions of such entities in free text to their actual real-world references is rather challenging, especially in light of poor contextual information. If a user post mentioned the song ‘Merry Christmas’, as in, “This new Merry Christmas tune is so good!”; it is non-trivial to disambiguate its reference to one among 60 artists who have covered that song.

On the other hand, there are Cultural entities that span multiple domains. The phrase, ‘The Hangover’ is a named entity in the film and music domain. Movies that are based on novels or video games are great examples of such cases of sense ambiguity. Resolving the mention of ‘Wanted’ in Figure 3.2 as a reference to the video game entity (and not the movie reference) is a

“I am watching Pattinson scenes in <movie id=2341>Twilight</movie> for the nth time.”

“I spent a romantic evening watching the Twilight by the bay..”

“I love <artist id=357688>Lily’s</artist> song <track id=8513722>smile</track>”.

NER - Approach 1

26

Approach 1: Multiple Senses, Multiple Domains

• When a Cultural entity appears in multiple senses across domains in the same corpus

27

3.3. CULTURAL NER – MULTIPLE SENSES ACROSS MULTIPLE DOMAINS August 10, 2010

Title: Peter Cullen Talks Transformers: War for Cybertron

Recently, we heard legendary Transformers voice actor Peter Cullen talk not only about becoming an hero to millions for his portrayal of the heroic Autobot leader, Optimus Prime, but also about being the first person to play the role of video game icon Mario. But today, he focuses more on the recent Transformers video game release, War for Cybertron.

Following are some excerpts from an interview Cullen recently conducted with Techland. On how the Optimus Prime seen in War for Cybertron differs from the versions seen in other branches of the franchise and its multiverse...

Figure 3.1: Showing excerpt of a blog discussing two senses of the entity ‘Transformers’

the second mention.

3.3.1 A Feature Based Approach to Cultural NER

In this work, we propose a new feature that represents the complexity of extracting particular entities. We hypothesize that knowing how hard it is to extract an entity is useful for learning better entity classifiers. With such a measure, entity extractors become ‘complexity aware’, i.e. they can respond differently to the extraction complexity associated with different target entities.

Suppose that we have two entities, one deemed easy to extract and the other more complex. When a classifier knows the extraction complexity of the entity, it may require more evidence (or apply more complex rules) in identifying the more complex entity compared to the easier target. Consider concretely a movie recognition system dealing with two movies, say, ‘The Curious Case of Benjamin Button’ a title appearing only in reference to a recent movie, and ‘Wanted’, a segment with wider senses and intentions. With comparable signals a traditional NER system can only apply the same inference to both cases whereas a ‘complexity aware’ system has the advantage of

Algorithm Preliminaries

• Problem Space

• Corpus: Weblogs, Distribution: unknown

• All senses of a cultural entity: unknown

• Problem Definition

• Input: A target Sense (e.g., movie); List of Entities to be extracted

• Goal: Disambiguating every entity’s mention as related to target sense or not

28

Contribution: Improving NER - feature-based approach

• Improving classifiers using a novel feature

• the “complexity of extraction” in a target sense

• Hypothesis: knowing how hard or easy it is to extract this entity in a particular sense will improve extraction accuracy of learners

• Making classifiers ‘complexity aware’

• ‘The Curious Case of Benjamin Button’ vs. ‘Wanted’
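One way to picture a ‘complexity aware’ decision is the toy rule below. It is a sketch under stated assumptions, not the learned classifiers used in the thesis: the complexity scores are illustrative values in [0, 1], and `features`, `accept_mention` and the evidence weights are hypothetical.

```python
# Illustrative complexity scores in [0, 1] (assumption; higher means harder).
COMPLEXITY = {
    "The Curious Case of Benjamin Button": 0.2,
    "Date Night": 0.5,
}


def features(entity, context_cue_count):
    """Feature dict for one spotted mention, including the complexity feature."""
    return {
        "cue_count": context_cue_count,
        # Unknown entities default to the hardest case (assumption).
        "complexity_of_extraction": COMPLEXITY.get(entity, 1.0),
    }


def accept_mention(feats, base_evidence=1, extra_evidence=4):
    """Toy decision rule: harder entities need more supporting cues."""
    required = base_evidence + extra_evidence * feats["complexity_of_extraction"]
    return feats["cue_count"] >= required


# Same evidence (two cues), different outcome depending on complexity.
print(accept_mention(features("The Curious Case of Benjamin Button", 2)))
print(accept_mention(features("Date Night", 2)))
```

In a learned model the same effect would come from the classifier weighting the complexity feature alongside the usual word-level and list-lookup features.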

29

Overview

Uncharacterized population (blog corpus), target sense (movies)

Sample Population

List of movies to extract

• The Curious Case of Benjamin Button

• Twilight

• Date Night

• Death at a Funeral

• The Last Song

• Up

• Angels and Demons

Entity: Complexity of Extraction

• The Curious Case of Benjamin Button: 0.2

• Date Night: 0.5

Use Complexity of Extraction as a feature in named entity classifiers

NOTE: An entity occurring in fewer varied senses (The Curious Case of Benjamin Button) could still have a high complexity of extraction if the distribution is skewed away from the sense of interest!

Extraction in a Target Sense

• Complexity of extraction in a sense of interest = how much support in corpus toward that sense

• How do we find this?

• Documents that mention the entity in word contexts that are biased to our sense of interest (language models)

• More documents imply a lot of support, which implies the entity is easy to extract: a low complexity of extraction
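The relationship between support and complexity can be sketched as follows. The formula is an assumption made for illustration (complexity = 1 minus the fraction of mentioning documents that also contain a sense-biased term); the slides state only the direction of the relationship, and the helper names and sample documents are hypothetical.

```python
import re


def tokens(text):
    return re.findall(r"[a-z0-9']+", text.lower())


def contains_phrase(doc_tokens, phrase_tokens):
    """True if the phrase occurs as a contiguous token sequence."""
    n = len(phrase_tokens)
    return any(doc_tokens[i:i + n] == phrase_tokens
               for i in range(len(doc_tokens) - n + 1))


def complexity_of_extraction(entity, documents, sense_lm):
    """1 - (supported mentions / all mentions); sense_lm is a set of terms."""
    entity_tokens = tokens(entity)
    mentioning = [t for t in map(tokens, documents)
                  if contains_phrase(t, entity_tokens)]
    if not mentioning:
        return 1.0  # no evidence at all: treat as the hardest case (assumption)
    supported = sum(1 for doc in mentioning if sense_lm & set(doc))
    return 1.0 - supported / len(mentioning)


docs = [
    "Saw Up at the cinema, great film",
    "Prices went up again",
    "The director of Up did well",
    "Gas prices are up",
    "Nothing relevant here",
]
movie_lm = {"film", "cinema", "director", "movie"}  # hypothetical sense-biased terms
print(complexity_of_extraction("Up", docs, movie_lm))
```

Here two of the four documents mentioning "up" carry movie-sense context, so the entity sits midway between easy and hard under this scoring.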

31

Support via Word Associations

• Co-occurring words alone won’t cut it!

• Prolific discussion and comparison of different senses

• Co-occurrence based language models will give us everything unless we bias it to our sense (movies)

32

Complexity of Extraction

• Goal: Complexity of Extraction in a target sense

• Subgoal: Support in terms of sense-biased contexts in documents that mention entity

• Step 1: Extract a sense-biased LM

• Step 2: Identify documents that mention entity in the context of the sense-biased LM

33

Knowledge Features to seed Sense-biased Word Association Gathering

34

• Sense Definition (hints) from Wikipedia Infoboxes

• Working definition: Sense is domain of interest

• Use sense hints to derive contextual support

A lot of support means the entity is easy to extract, which implies a low ‘complexity of extraction’ score!

Measuring ‘complexity of extraction’

Two step framework (unsupervised)

• Step 1: Propagate sense evidence in contexts of e, extract a sense-biased language model (LM)

• random walks, distributional similarity approaches

• SPREADING ACTIVATION NETWORKS

[Figure: documents D mentioning entity e; sense hint nodes lead to the sense-biased language model]

35

Overview

• Result: Clustered Documents in similar senses

• Not just similar words!

SenseRel doc 1 doc 2 doc n

sense LM term 1 SenseRel (t1) SenseRel (t1) SenseRel (t1)

sense LM term 2 SenseRel (t2)

sense LM term m SenseRel (tm) SenseRel (tm)

• Step 2: Clustering documents represented by sense-relatedness vectors

• CHINESE WHISPERS CLUSTERING

Constructing the SAN

Entity: Star Trek, Startrek

Sense hints: J. J. Abrams, Damon Lindelof, Roberto Orci, Alex Kurtzman, Paramount Pictures, Chris Pine, Zachary Quinto, Eric Bana, Zoe Saldana, Karl Urban, John Cho, Anton Yelchin, Simon Pegg, Bruce Greenwood, Leonard Nimoy, Kirk, Spock, Nero, Pavel Chekov, Nyota Uhura ..

Sample blog post: 10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

Top X keywords (IDF)

• Among the context surrounding (but excluding) entity of interest

• Force include sense related words

Keywords: Spock, IMAX, .., Kirk, Karl Urban, James, .., canon, Chris Pine, libidinous

Activation Network

[Figure: keywords from excerpts of the post (Chris Pine, Kirk, libidinous, Spock, imax) drawn as nodes joined by edges labelled 1; annotation: "indicative of being a Named Entity"]

Repeat this procedure for all blogs. End up with a connected SAN with some sense nodes and other words in the context of the entity.
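The construction described on this slide can be sketched roughly as follows. This is a minimal sketch that assumes the keywords for each context have already been selected; the function name `build_cooccurrence` and the choice to count each keyword pair once per context are illustrative assumptions, not details taken from the talk.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence(keyword_contexts):
    """Count how often two keywords share a context.

    keyword_contexts: iterable of keyword lists, one list per context
    (for example, one per blog excerpt around the entity).
    Returns a Counter mapping an ordered keyword pair to its co-occurrence count.
    """
    edges = Counter()
    for keywords in keyword_contexts:
        unique = sorted(set(keywords))          # ignore repeats within a context
        for a, b in combinations(unique, 2):    # every unordered pair once
            edges[(a, b)] += 1
    return edges


contexts = [
    ["Spock", "imax", "libidinous"],
    ["Chris Pine", "Kirk", "Spock"],
]
network_edges = build_cooccurrence(contexts)
```

Repeating this over every blog accumulates the counts, so pairs that appear together often end up with heavier edges.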

Node and Edge Semantics

• Pre-adjustment phase

• Node weights: Sense nodes: 1; Other nodes: 0.1

• ambiguous sense nodes

• alternate seeding methods: distributional similarity with unambiguous domain terms (movie, theatre, imax, cinemas)

• Edge weights: co-occurrence counts

38
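The pre-adjustment node weights above can be sketched as a simple initialisation step. The weights 1 and 0.1 come from the slide; the function name `initial_node_weights` and its parameters are hypothetical.

```python
def initial_node_weights(vertices, sense_hints, sense_weight=1.0, other_weight=0.1):
    """Assign the pre-adjustment weight to every vertex of the network.

    Vertices that are sense hints start at sense_weight; all others at other_weight.
    """
    hints = set(sense_hints)
    return {v: (sense_weight if v in hints else other_weight) for v in vertices}


weights = initial_node_weights(
    ["Eric Bana", "movie", "starship", "seats"],
    sense_hints=["Eric Bana", "movie"],
)
```

Ambiguous sense nodes are not treated specially in this sketch; they simply receive the sense weight.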

Constructing the Spreading Activation Network G from words co-occurring with e in D

[Figure: network G over the vertices Eric Bana, Sulu, Romulan, movie, franchise, Chris pine, J. J. Abrams, starship, seats; sense hint vertices Y are distinguished from other vertices X, and five labels of 1 are shown]

Propagating Sense Evidences

Spreading Activation Theory

• Pulse sense nodes and spread effect

• As many pulses (iterations) as number of sense nodes

At every iteration

• A BFS walk starting at a sense node (weight 1)

• Revisiting nodes not edges

• Amplifying weights of visited nodes: W[j] = W[j] + (W[i] * co-occ[i, j] * α)

Collective Spreading controlled by dampening factor α, co-occurrence thresholds

Post Propagation of Sense Evidences:

[Figure: the network over Eric Bana, Sulu, Romulan, movie, franchise, Chris pine, J. J. Abrams, starship, seats, with non-activated vertices marked]

Final activated portions of the network indicate a word’s relatedness to the sense = sense-biased LM

39
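The propagation step can be sketched as below. This is one possible reading of the slide, not the author's implementation: each sense node is pulsed once, a breadth-first walk crosses every edge at most once per pulse while allowing nodes to be reached again, and the update rule W[j] = W[j] + (W[i] * co-occ[i, j] * α) is applied on each crossing. The function names, the default `alpha`, and the `min_cooc` threshold are illustrative assumptions.

```python
from collections import deque


def spread_activation(weights, cooc, sense_nodes, alpha=0.5, min_cooc=1):
    """Pulse each sense node once and return the amplified node weights.

    weights: {node: initial weight}
    cooc:    {node: {neighbour: co-occurrence count}}, assumed symmetric
    """
    w = dict(weights)
    for seed in sense_nodes:                 # one pulse per sense node
        used_edges = set()                   # an edge is walked once per pulse
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            for j, count in cooc.get(i, {}).items():
                edge = frozenset((i, j))
                if count < min_cooc or edge in used_edges:
                    continue
                used_edges.add(edge)
                w[j] = w[j] + w[i] * count * alpha   # amplify the visited node
                queue.append(j)              # nodes may be reached again
    return w


def activated_words(initial, final):
    """Words whose weight rose during propagation (the activated portion)."""
    return {n: s for n, s in final.items() if s > initial[n]}
```

In this sketch a pulsed sense node does not amplify itself, so sense hints would need to be added to the language model separately if they are wanted there.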

Sense-biased LM

Entity: Star Trek (movie)

• 20 iterations (pulsed sense nodes)

• 900+ blogs, 35K+ words in co-occ graph

• 167 words in the LM

Sense-biased Spreading Activation already lends one type of clustering (separation of words strongly related to our sense)

40

Documents D Represented in terms of LMe

[Figure: three copies of the sample Star Trek blog post, standing in for documents in D]

di(LMe) = {w1, LMe(w1) ; .. ; wx, LMe(wx)}
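The representation di(LMe) can be sketched as a lookup of each document's words in the sense-biased LM. The function name `represent_document`, the pre-tokenised input, and the example scores are assumptions for illustration.

```python
def represent_document(tokens, lm):
    """Map a tokenised document to {word: sense-relatedness score}.

    Only words present in the sense-biased language model lm are kept,
    so a document sharing no words with the LM yields an empty mapping.
    """
    return {w: lm[w] for w in set(tokens) if w in lm}


lm_e = {"Spock": 1.1, "imax": 0.65}          # made-up scores
d_i = represent_document(["Spock", "seats", "Spock", "IMAX"], lm_e)
```

Matching here is exact and case-sensitive; any normalisation of tokens would be an additional design choice.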

Step 2: Clustering using Extracted LM

Algorithmic Implementations

• Vector Space Model

• Typically: word, tfidf score

• Here: word, sense relatedness score

Clustering documents in D along the same dimensions of propagation

[Figure: copies of the sample Star Trek blog post standing in for documents in D, grouped into clusters. Labels shown in the figure:]

• http://realart.blogspot.com/2009/05/star-trek-balance-of-terror-from.html

• http://susanisaacs.blogspot.com/2009/04/quantum-leap-convention.html

• http://semioblog.blogspot.com/2009/01/retrofuturo-web.html

• http://wilwheaton.net/2006/05/learn_to_swim.php

• No Representation

Cluster scores are as high as sense-relatedness scores of terms in documents in clusters

41

Chinese Whispers

42

*[Biemann 2006] Biemann, C. (2006): Chinese Whispers - an Efficient Graph Clustering Algorithm and its Application to Natural Language Processing Problems. Proceedings of the HLT-NAACL-06 Workshop on Textgraphs-06, New York, USA.
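For readers unfamiliar with the algorithm, the following is a minimal sketch of Chinese Whispers label propagation on a weighted graph. How the talk builds the document graph is not shown here, so the edge weights are assumed to be some similarity between documents' sense-relatedness vectors. Ties are broken deterministically in this sketch, a simplification of the random tie-breaking in the original algorithm.

```python
import random
from collections import defaultdict


def chinese_whispers(nodes, edges, iterations=20, seed=0):
    """Cluster nodes of a weighted undirected graph by label propagation.

    nodes: iterable of node ids (strings here)
    edges: {(a, b): weight} for undirected edges
    Returns {node: cluster label}; nodes sharing a label form a cluster.
    """
    rng = random.Random(seed)
    label = {n: n for n in nodes}            # every node starts in its own class
    nbrs = defaultdict(dict)
    for (a, b), wt in edges.items():
        nbrs[a][b] = wt
        nbrs[b][a] = wt
    order = list(label)
    for _ in range(iterations):
        rng.shuffle(order)                   # visit nodes in random order
        changed = False
        for n in order:
            scores = defaultdict(float)
            for m, wt in nbrs[n].items():    # sum edge weights per neighbour class
                scores[label[m]] += wt
            if not scores:
                continue                     # isolated node keeps its own label
            best = max(sorted(scores), key=scores.get)
            if best != label[n]:
                label[n] = best
                changed = True
        if not changed:
            break                            # labels have stabilised
    return label
```

The number of clusters is not fixed in advance; it emerges from the graph, which suits a setting where the number of senses in the corpus is unknown.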

Clustering documents in D along the same dimensions of propagation

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock


• Randomized graph-clustering algorithm for undirected, weighted graphs

• Nodes are documents; edges represent dot-product similarity between documents

• Feature vector = language model from Step 1

• Partitions nodes (i.e., documents) based on maximum average similarity
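As a rough illustration of the graph described above, the sketch below builds an undirected, weighted graph whose edge weights are dot products between document feature vectors, and scores a candidate cluster by its average pairwise similarity. It does not reproduce the randomized clustering algorithm itself, which the slides do not spell out. The function names and the toy term weights are assumptions made for the example; only the keywords echo the cluster output shown in this deck.

```python
from itertools import combinations


def dot(u, v):
    """Dot product of two sparse term-weight vectors stored as dicts."""
    if len(u) > len(v):
        u, v = v, u  # iterate over the shorter vector
    return sum(w * v[t] for t, w in u.items() if t in v)


def build_graph(docs):
    """Return {(a, b): weight} for every document pair with non-zero similarity."""
    edges = {}
    for a, b in combinations(sorted(docs), 2):
        w = dot(docs[a], docs[b])
        if w > 0:
            edges[(a, b)] = w
    return edges


def average_similarity(cluster, edges):
    """Mean edge weight over all pairs in the cluster; missing edges count as 0."""
    pairs = list(combinations(sorted(cluster), 2))
    if not pairs:
        return 0.0
    return sum(edges.get(p, 0.0) for p in pairs) / len(pairs)


# Toy feature vectors (hypothetical weights, not values from the experiments).
docs = {
    "d1": {"pixar": 0.5, "carl": 0.2},
    "d2": {"pixar": 0.4, "russell": 0.3},
    "d3": {"crossword": 0.9},
}
edges = build_graph(docs)
tight = average_similarity(["d1", "d2"], edges)
loose = average_similarity(["d1", "d2", "d3"], edges)
```

Here `tight` exceeds `loose` because adding the unrelated document `d3` lowers the average pairwise similarity, which is the quantity a partitioning step of this kind would try to keep high.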

High and Low Scoring Clusters

43

++++++++++CLUSTER++++++++++
On cluster 1032 total of 4 members and score = 7.46775436953479E-05
http://...mish-mashy-hodge-podge-with-no.html
Keywords adventure 0.0016552556767791 1 movie 0.000477036040807386 5
http://perchedraven.blogspot.com/2006/06/reading-habits.html
Keywords adventure 0.0016552556767791 1 movie 0.000477036040807386 4
http:/..9/05/27/new-york-times-crossword-will-shortz-corey-rubin/
Keywords adventure 0.0016552556767791 1 movie 0.000477036040807386 1
http://....no-doubt-have-heard-by-now.html
Keywords adventure 0.0016552556767791 1 movie 0.000477036040807386 1

++++++++++CLUSTER++++++++++
On cluster 2382 total of 4 members and score = 0.130057194715825
http://torontomike.com/2009/05/advanced_screening_of_pixars_u.html
Keywords comedy 0.00256627265885554 1 adventure 0.0016552556767791 2 carl 0.020754281327519 1 pete docter 0.0549975353578234 1 carl fredricksen 0.133630375166943 1 russell 0.116638105837327 1 pixar 0.0048327182073532 2 digital 5.70733953613266E-05 1 disney 2.05783382714942E-06 2 fredricksen 1 1 docter 0.016306935328765 1
http://theplaylist.blogspot.com/2009/05/up-pixars-latest-is-profoundly.html
Keywords russell 0.116638105837327 2 carl 0.020754281327519 3 carl fredricksen 0.133630375166943 1 pete docter 0.0549975353578234 1 comedy 0.00256627265885554 1 animation 0.0164047754350987 1 pixar 0.0048327182073532 7 film 1.32766200713231E-05 4 adventure 0.0016552556767791 1 movies 0.0399341006575118 2

Low scoring cluster - less evidence for relatedness to sense

High scoring cluster
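One simple way to separate high- from low-scoring clusters is to rank them by score and apply a cutoff. The sketch below uses the two cluster scores shown in the output above; the `threshold` value and the function name are assumptions for illustration, since the slides do not state how the cutoff was chosen.

```python
def split_by_score(cluster_scores, threshold):
    """Rank clusters by score (descending) and split them at the threshold."""
    ranked = sorted(cluster_scores.items(), key=lambda kv: kv[1], reverse=True)
    high = [cid for cid, score in ranked if score >= threshold]
    low = [cid for cid, score in ranked if score < threshold]
    return high, low


# Scores for clusters 1032 and 2382 as reported in the cluster output.
scores = {1032: 7.46775436953479e-05, 2382: 0.130057194715825}

# The cutoff of 0.01 is a hypothetical value chosen only for this example.
high, low = split_by_score(scores, threshold=0.01)
```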

From Clusters to Support

Clustering documents in D along the same dimensions of propagation

10 minutes. That is all it took for JJ Abrams to make a believer out of me. 10 minutes. Let us set the stage for my viewing of Star Trek. IMAX? Check. Perfect seats? Check..not sit well with me was the libidinous Spock. It changed one of the fundamental aspects of the character for no good reason. Other than that, however, none of the changes to Trek canon particularly bothered me in a "get a life" kind of way.………….the special effects were stunning, and the performances were...wow. Chris Pine IS James T. Kirk. Karl Urban IS Leonard McCoy…Spock
