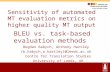

Human Evaluation of Machine Translation Systems
MODL5003 Principles and applications of machine translation
Lecture 13/03/2006
Bogdan Babych
(Slides: Debbie Elliott, Tony Hartley)

Mar 28, 2015

13 March 2006 MODL5003 Principles and applications of MT

Outline
• MT Evaluation – general perspective
• Purposes of MT evaluation
• Why evaluating translation quality is difficult
• A brief history of MT evaluation
• Examples of MT evaluation methods for users
• Where next?

MT Evaluation – a big space
• Requirements
  – Task: assimilation, dissemination, ...
  – Text: type, provenance, ...
  – User: translators, consumers, ...
• Quality attributes
  – Internal: architecture, resources, ...
  – External: readability, fidelity, well-formedness, ...

What is evaluated
• “It looks good to me” evaluation
• Test suites
  – Syntactic coverage, degradation
• Corpus-based evaluation
  – Real texts
• Don’t have to read them all!
  – Coverage of typical problems
  – Interaction between different levels
  – General performance (bird's-eye view)
• Aspects more/less important for overall quality

Purposes of MT evaluation
International Standards for Language Engineering (ISLE): http://www.mpi.nl/ISLE/
Framework for the Evaluation of Machine Translation in ISLE (FEMTI): http://www.issco.unige.ch/projects/isle/femti/framed-glossary.html

FEMTI defines 7 types of MT evaluation:
1. Feasibility testing
2. Requirements elicitation
3. Internal evaluation
4. Diagnostic evaluation
5. Declarative evaluation
6. Operational evaluation
7. Usability evaluation

Purposes of MT evaluation
1. Feasibility testing
“An evaluation of the possibility that a particular approach has any potential for success after further research and implementation.” (White 2000)

(E.g. sub-problems connected to a particular language pair)

Purpose: To decide whether to invest in further research into a particular approach

For: Researchers, sponsors of research

Purposes of MT evaluation
2. Requirements elicitation
Researchers and developers create prototypes designed to demonstrate particular functional capabilities that might be implemented

Purpose: To elicit reactions from potential investors before implementing new approaches.

For: Researchers and developers, project managers, end-users

Purposes of MT evaluation
3. Internal evaluation

Researchers and developers test components of a prototype or pre-release system. This can involve the use of test suites to evaluate output quality during the course of system modifications.

Purpose:
• To measure how well each component performs its function
• To test linguistic coverage (e.g. that a new grammar rule works in all circumstances)
• Iterative testing: to check that particular modifications do not have adverse effects elsewhere

For: Researchers, developers, investors

Purposes of MT evaluation
4. Diagnostic evaluation
Researchers and developers of prototype systems evaluate functionality characteristics and analyse intermediate results produced by the system

Purpose: To discover why a system did not give the expected results

For: Researchers and developers

Purposes of MT evaluation
5. Declarative evaluation
Evaluators rate the quality of MT output

Purpose:
• To measure how well a system translates
• To measure fidelity (how much of the source text content is correctly conveyed in the target text)
• To measure the fluency of the target text
• To measure the usability of MT output for a particular purpose
• To evaluate a system’s improvability (to what extent can dictionary updates improve output quality?)
• To help decide which system to buy
• To indicate whether buying a system will be cost-effective (will post-editing MT output be cheaper than translating from scratch?)

For: End-users, researchers, developers, managers, investors, vendors

Purposes of MT evaluation
6. Operational evaluation
Managers calculate purchase and running costs and compare them with the benefits

Purpose: To determine the cost-benefit of an MT system in a particular operational environment, and whether a system will serve its required purpose

For: Managers, investors, vendors
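The simplest cost comparison behind an operational evaluation asks whether MT plus post-editing is cheaper than translating from scratch. Below is a minimal Python sketch of that arithmetic. All rates and the fixed cost are hypothetical figures in pence, chosen only for illustration; a real operational evaluation would also weigh training, turnaround time and the quality of the final text.

```python
def cost_from_scratch(words: int, rate: int) -> int:
    """Human translation only: every word is paid at the full rate (pence/word)."""
    return words * rate


def cost_with_mt(words: int, postedit_rate: int, fixed_cost: int) -> int:
    """MT plus post-editing: a fixed purchase/running cost plus a per-word post-editing rate."""
    return fixed_cost + words * postedit_rate


def break_even_words(rate: int, postedit_rate: int, fixed_cost: int) -> float:
    """Volume at which both options cost the same: fixed + w*p = w*r, so w = fixed / (r - p)."""
    if postedit_rate >= rate:
        return float("inf")  # post-editing is not cheaper per word, so MT never pays off
    return fixed_cost / (rate - postedit_rate)


# Hypothetical figures: 10p/word from scratch, 6p/word post-editing, £2,000 fixed cost.
print(break_even_words(10, 6, 200_000))  # 50000.0
print(cost_from_scratch(100_000, 10))    # 1000000
print(cost_with_mt(100_000, 6, 200_000)) # 800000
```

Under these made-up numbers MT only pays off above roughly 50,000 words; below that volume the fixed cost dominates and translating from scratch is cheaper.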

Purposes of MT evaluation

7. Usability evaluation
• Evaluators test how easy the application is to use.
• Systems are evaluated using questionnaires on usability.
• Evaluators may record how long it takes to complete particular tasks.

Purpose:
• To measure how useful the product will be for the end-user in a specific context
• To evaluate user-friendliness

For: End-users, researchers, developers, managers, investors, vendors

Why evaluating translation quality is difficult
• No perfect standard exists for comparison
• Scoring is subjective (so several evaluators and texts are needed)
• The "training effect" can influence results (evaluators' judgements shift as they grow accustomed to MT output)
• Bilingual evaluators or human reference translations are usually required
• Different evaluations are needed depending on the use of MT output (e.g. filtering, gisting, information gathering, post-editing for internal or external use)
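Because scoring is subjective, each sentence is usually rated by several evaluators and the scores are pooled. A minimal Python sketch of that pooling: it averages the scores per sentence and uses the population standard deviation as one simple measure of how far the evaluators disagree. The evaluator IDs, the 5-point scale and the scores are invented for illustration, and the choice of disagreement measure is an assumption, not something the slides prescribe.

```python
from statistics import mean, pstdev


def summarise_ratings(ratings: dict[str, list[int]]) -> list[tuple[float, float]]:
    """Return (mean, population standard deviation) for each sentence.

    `ratings` maps an evaluator ID to that evaluator's scores, one per
    sentence; every list must cover the same sentences in the same order.
    """
    per_sentence = zip(*ratings.values())  # regroup the scores sentence by sentence
    return [(mean(scores), pstdev(scores)) for scores in per_sentence]


# Hypothetical 5-point scores from three evaluators for three sentences.
ratings = {
    "eval_A": [5, 3, 2],
    "eval_B": [4, 3, 5],
    "eval_C": [5, 3, 1],
}
for i, (avg, spread) in enumerate(summarise_ratings(ratings), start=1):
    print(f"sentence {i}: mean={avg:.2f}, sd={spread:.2f}")
```

Here sentence 2 gets identical scores from everyone, while sentence 3 has the widest spread; a large spread is a cue to look at that sentence again rather than trust its average.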

A brief history of MT evaluation: the 1950s and 1960s

• 1954: First public demonstration of MT (Georgetown University/IBM)

• Research in USA, Western Europe, Soviet Union and Japan

• 1966: ALPAC Report (funded by US Government sponsors of MT to advise on further R&D) …
  – advised against further investment in MT
  – concluded that MT was slower, less accurate and more expensive than human translation
  – recommended research into:
    - practical methods for evaluation of translations
    - evaluation of quality and cost of various sources of translations
    - evaluation of the relative speed and cost of various sorts of machine-aided translation

A brief history of MT evaluation: the 1970s and 1980s

• 1976: EC bought a version of Systran, and in 1978 began to develop its own system, Eurotra

• EC needed recommendations for evaluation: the “Van Slype report” (Critical Methods for Evaluating the Quality of Machine Translation), published in 1979
  Aims of the report:
    - to establish the state of MT evaluation
    - to advise the EC on evaluation methodology and research
    - to provide examples of evaluation methods and their applications
  Available online: http://issco-www.unige.ch/projects/isle/van-slype.pdf

• 1980s: Greater need for MT evaluation: MT was attracting commercial interest, and tailor-made systems were being designed for large corporations

• 1987: First MT Summit (opportunity to publish research on evaluation)

A brief history of MT evaluation: the 1990s

• 1992: AMTA workshop “MT Evaluation: Basis for Future Directions”
  JEIDA Report presented (Japan Electronic Industry Development Association): Methodology and Criteria on Machine Translation Evaluation. This stressed the importance of judging systems according to context of use and user requirements

• 1993: Machine Translation journal issue devoted to MT evaluation

• 1992–1994: DARPA (Defense Advanced Research Projects Agency) MT evaluations

• 1993–1999: EAGLES (Expert Advisory Group on Language Engineering Standards) set up by the European Commission
  One of its aims: to propose standards, guidelines and recommendations for good practice in the evaluation of language engineering products
  The EAGLES 7-step recipe for evaluation: http://www.issco.unige.ch/projects/eagles/ewg99/7steps.html

The ISLE Project and FEMTI
International Standards for Language Engineering (ISLE)
Framework for the Evaluation of Machine Translation in ISLE (FEMTI)

• ISLE Evaluation Working Group set up in response to EAGLES

• Funded by the EC, the National Science Foundation of the USA and the Swiss Government

• Established a classification scheme of quality characteristics of MT systems and a set of measures to use when evaluating these characteristics

• Scheme designed to help developers, users and evaluators select evaluation criteria according to their needs

• Workshops organised around hands-on evaluation exercises to test the reliability of metrics

• Latest research investigates automated evaluation methods, which are quicker and cheaper

Evaluation methods: Carroll 1966
Source: Carroll, J. B. (1966). An experiment in evaluating the quality of translations. In Pierce, J. (Chair). (1966). Language and Machines: Computers in Translation and Linguistics. Report by the Automatic Language Processing Advisory Committee (ALPAC). Publication 1416. National Academy of Sciences National Research Council, pp 67-75. http://www.nap.edu/books/ARC000005/html/

• Evaluation of scientific Russian texts translated into English
• 3 human translations and 3 machine translations of 4 texts evaluated
• Evaluators: 18 monolingual English speakers and 18 native English speakers with good understanding of scientific Russian

Intelligibility:
• Each sentence scored on a 9-point scale with no reference to the source text

Informativeness (fidelity):
• Original Russian sentences rated for informativeness compared with the translation, i.e. how much the original adds to what the translation already conveys
• Monolinguals used human reference translations instead of source texts for comparison

Evaluation methods: Carroll 1966
Extracts from 9-point intelligibility scale

9. Perfectly clear and intelligible. Reads like ordinary text: has no stylistic infelicities

5. The general idea is intelligible only after considerable study, but after this study one is fairly confident that he understands. Poor word choice, grotesque syntactic arrangement, untranslated words, and similar phenomena are present, but constitute mainly "noise" through which the main idea is still perceptible

1. Hopelessly unintelligible. It appears that no amount of study and reflection would reveal the thought of the sentence.

Evaluation methods: Carroll 1966

Rating original sentences
Extracts from 10-point informativeness scale

9. Extremely informative. Makes "all the difference in the world" in comprehending the meaning intended. (A rating of 9 should always be assigned when the original completely changes or reverses the meaning conveyed by the translation)

4. In contrast to 3, adds a certain amount of information about the sentence structure and syntactical relationships; it may also correct minor misapprehensions about the general meaning of the sentence or the meaning of individual words

0. The original contains, if anything, less information than the translation. The translator has added certain meanings, apparently to make the passage more understandable.

Evaluation methods: Crook & Bishop 1979

Source: Crook & Bishop (reported by T C Halliday). Measurement of readability by the cloze test. In Van Slype, G. (1979). Critical Methods for Evaluating the Quality of Machine Translation. Prepared for the European Commission Directorate General Scientific and Technical Information and Information Management. Report BR 19142. Bureau Marcel van Dijk, p65.

• Evaluators rate the readability of translations using a cloze test

• Human and machine translations produced
• Every eighth word of the machine translation omitted
• Evaluators fill in the gaps
• The more intelligible the MT output, the easier the “test” is to complete
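The cloze procedure above is mechanical enough to sketch in Python: delete every eighth word, keep the deleted words as an answer key, then score how many gaps an evaluator restores. This is an illustrative sketch, not the procedure used in the original study. The sample sentence is invented, and scoring by exact (case-insensitive) word match is an assumption; some cloze variants also accept synonyms.

```python
def make_cloze(text: str, n: int = 8) -> tuple[str, list[str]]:
    """Blank out every n-th word of `text`; return the gapped text and the deleted words."""
    words = text.split()
    answers = []
    for i in range(n - 1, len(words), n):  # 0-based indices 7, 15, 23, ... when n = 8
        answers.append(words[i])
        words[i] = "_____"
    return " ".join(words), answers


def score_cloze(answers: list[str], guesses: list[str]) -> float:
    """Share of gaps restored with exactly the deleted word (case-insensitive)."""
    if not answers:
        return 0.0
    correct = sum(a.lower() == g.lower() for a, g in zip(answers, guesses))
    return correct / len(answers)


# Hypothetical MT output sentence used only to demonstrate the procedure.
mt_output = ("the system translates the abstract into English and the result "
             "is then checked by a human reviewer before it is published")
gapped, key = make_cloze(mt_output)
print(gapped)
print(score_cloze(key, ["and", "expert"]))  # 0.5: one of the two gaps restored
```

A higher average score across evaluators suggests more readable output, which is the link the slide draws between intelligibility and ease of completing the test.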

Evaluation methods: Sinaiko 1979

Source: Sinaiko, H. W. Measurement of usefulness by performance test. In Van Slype, G. (1979). Critical Methods for Evaluating the Quality of Machine Translation. Prepared for the European Commission Directorate General Scientific and Technical Information and Information Management. Report BR 19142. Bureau Marcel van Dijk, p91.

• Aim: to evaluate the English-Vietnamese LOGOS system

• All source texts contained instructions
• Evaluators (native speakers of the TL) used machine-translated instructions to perform tasks

• Errors in performance were measured (a weighting system was used)
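The slide says performance errors were weighted but does not give the weights, so the Python sketch below uses an invented set of error categories and weights purely to show how such a score could be computed. Each evaluator's errors are summed by weight, then averaged over evaluators.

```python
from statistics import mean

# Hypothetical error categories and weights: the study used a weighting
# system, but these particular values are invented for illustration.
WEIGHTS = {"minor": 1, "major": 3, "task_failed": 10}


def weighted_error_score(errors: list[str], weights: dict[str, int] = WEIGHTS) -> int:
    """Sum the weights of the errors one evaluator made while carrying out the instructions."""
    return sum(weights[e] for e in errors)


def mean_error_score(per_evaluator: list[list[str]]) -> float:
    """Average weighted error score over all evaluators who used one set of instructions."""
    return mean(weighted_error_score(errors) for errors in per_evaluator)


# Hypothetical error logs for three evaluators following machine-translated instructions.
mt_logs = [["minor", "major"], ["task_failed"], []]
print(mean_error_score(mt_logs))  # (4 + 10 + 0) / 3
```

The weights encode a judgement about how much each kind of mistake matters for the task, so changing them can change which translation comes out ahead.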

Evaluation methods: Nagao 1985
Source: Nagao, M., Tsujii, J. & Nakamura, J. (1985). The Japanese government project for machine translation. In Computational Linguistics 11, 91-109.

• Aim: to test the feasibility of using MT to translate abstracts of scientific papers

• 1,682 sentences from a Japanese scientific journal were machine translated into English

• Intelligibility:
  – 2 native speakers of English (with no knowledge of Japanese) scored each sentence using a 5-point scale
• Accuracy:
  – 4 Japanese-English translators evaluated how much of the meaning of the original text was conveyed in the MT output

Evaluation methods: Nagao 1985

Extracts from 5-point intelligibility scale

1. The meaning of the sentence is clear, and there are no questions. Grammar, word usage, and style are all appropriate, and no rewriting is needed.

3. The basic thrust of the sentence is clear, but the evaluator is not sure of some detailed parts because of grammar and word usage problems. The problems cannot be resolved by any set procedure; the evaluator needs the assistance of a Japanese evaluator to clarify the meaning of those parts in the Japanese original.

5. The sentence cannot be understood at all. No amount of effort will produce any meaning.

Evaluation methods: Nagao 1985
Extracts from 7-point accuracy scale

0. The content of the input sentence is faithfully conveyed to the output sentence. The translated sentence is clear to a native speaker and no rewriting is needed.

3. While the content of the input sentence is generally conveyed faithfully to the output sentence, there are some problems with things like relationships, between phrases and expressions, and with tense, voice, plurals, and the positions of adverbs. There is some duplication of nouns in the sentence.

6. The content of the input sentence is not conveyed at all. The output is not a proper sentence; subjects and predicates are missing. In noun phrases, the main noun (the noun positioned last in the Japanese) is missing, or a clause or phrase acting as a verb and modifying a noun is missing.
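The scales quoted in these slides do not all run the same way: on Carroll's intelligibility scale and the DARPA adequacy scale a high number is good, while on both Nagao scales a low number is good. Before comparing or averaging such scores, a common step is to map each one onto a shared range. A minimal Python sketch, assuming a simple linear mapping onto [0, 1] with 1 as the best point; the mapping is an illustrative choice, not part of any of the original methods.

```python
def to_unit_scale(score: float, best: float, worst: float) -> float:
    """Map a rating onto [0, 1], where 1 is the best point of the original scale.

    Works whether the scale runs upwards (best > worst) or downwards (best < worst).
    """
    if best == worst:
        raise ValueError("the best and worst points of a scale must differ")
    return (score - worst) / (best - worst)


# (best, worst) ends of the scales quoted in these slides.
SCALES = {
    "Carroll intelligibility": (9, 1),
    "Nagao intelligibility": (1, 5),
    "Nagao accuracy": (0, 6),
    "DARPA adequacy": (5, 1),
}

# The mid-point of each scale maps to 0.5.
for name, (best, worst) in SCALES.items():
    print(name, to_unit_scale((best + worst) / 2, best, worst))
```

This only aligns direction and range. It does not make the points equivalent: a 3 on Nagao's accuracy scale and a 3 on DARPA's adequacy scale have different verbal descriptors, so normalised scores from different scales should still be compared with care.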

Evaluation methods: DARPA 1992-4
Adequacy, fluency and informativeness

Sources:
White, J., O'Connell, T., O’Mara, F.: The ARPA MT evaluation methodologies: evolution, lessons, and future approaches. In: Proceedings of the 1994 Conference, Association for Machine Translation in the Americas, Columbia, Maryland (1994)
White, J. (Forthcoming). How to evaluate Machine Translation. In H. Somers (ed.) Machine translation: a handbook for translators. Benjamins, Amsterdam.

• Aim: to compare prototype systems funded by DARPA
• Evaluators: 100 monolingual native English speakers
• Largest evaluation resulted in:
  – corpus of 100 news articles (of c. 400 words) in each SL: French, Spanish and Japanese
  – 2 English human translations of each
  – English machine translations of each text by several systems
  – Detailed evaluation results

Evaluation methods: DARPA 1992-4
DARPA: Adequacy
Segments of MT output were compared with equivalent human reference translations and scored on a 5-point scale according to how much of the original content was preserved (regardless of imperfect English)

5 – All meaning expressed in the source fragment appears in the translation fragment
4 – Most of the source fragment meaning is expressed in the translation fragment
3 – Much of the source fragment meaning is expressed in the translation fragment
2 – Little of the source fragment meaning is expressed in the translation fragment
1 – None of the meaning expressed in the source fragment is expressed in the translation fragment
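Once every segment has an adequacy score, the scores have to be summarised per system. A minimal Python sketch that reports the mean score together with how many segments received each label of the 5-point scale above. Using the plain mean as the system-level figure is an assumption made for illustration; the slides do not say exactly how DARPA aggregated its scores, and the segment scores are invented.

```python
from collections import Counter
from statistics import mean

# Labels follow the 5-point DARPA adequacy scale (5 = all meaning preserved).
ADEQUACY_LABELS = {5: "All", 4: "Most", 3: "Much", 2: "Little", 1: "None"}


def adequacy_report(scores: list[int]) -> tuple[float, dict[str, int]]:
    """Return the mean adequacy over segments and how many segments got each label."""
    if not scores or any(s not in ADEQUACY_LABELS for s in scores):
        raise ValueError("need at least one score, each an integer from 1 to 5")
    counts = Counter(scores)
    histogram = {ADEQUACY_LABELS[k]: counts[k] for k in sorted(ADEQUACY_LABELS, reverse=True)}
    return mean(scores), histogram


# Hypothetical scores for five segments from one system.
avg, hist = adequacy_report([5, 4, 4, 3, 1])
print(avg, hist)
```

Reporting the distribution alongside the mean shows whether a middling average comes from uniformly mediocre segments or from a mix of good segments and a few that lost the meaning entirely.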

Evaluation methods: DARPA 1992-4
DARPA: Fluency
• Each sentence scored for intelligibility without reference to the source text or human reference translation
• Simple 5-point scale used

DARPA: Informativeness
• Designed to test whether enough information was conveyed in MT output to enable evaluators to answer questions on its content

• Each translation accompanied by 6 multiple-choice questions on content

• 6 choices for each question
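With 6 questions per translation and 6 choices per question, an informativeness score can be read as the share of questions answered correctly and set against the 1-in-6 score expected from blind guessing. A minimal Python sketch; the option letters A to F, the answer key and the responses are hypothetical, and comparing against chance is offered as one way to interpret the score, not as DARPA's published procedure.

```python
from fractions import Fraction

N_QUESTIONS = 6  # multiple-choice questions per translation (from the slide)
N_CHOICES = 6    # answer options per question (from the slide)


def informativeness(answers: list[str], key: list[str]) -> Fraction:
    """Fraction of the questions on one translation that an evaluator answered correctly."""
    if len(key) != N_QUESTIONS or len(answers) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} answers and {N_QUESTIONS} key entries")
    correct = sum(a == k for a, k in zip(answers, key))
    return Fraction(correct, N_QUESTIONS)


# Expected score of an evaluator who guesses blindly.
CHANCE = Fraction(1, N_CHOICES)

# Hypothetical answer key and responses, with options labelled A-F.
key = ["A", "B", "C", "D", "E", "F"]
answers = ["A", "B", "C", "D", "A", "A"]
print(informativeness(answers, key), "vs chance", CHANCE)  # 2/3 vs chance 1/6
```

A score close to 1/6 would suggest the MT output conveyed little more than guessing would, whatever its fluency.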

DARPA-inspired evaluation methods…
Many subsequent evaluations have followed in the footsteps of DARPA…

Fluency and Adequacy using 5-point scales:
Source: Elliott, D., Atwell, E., Hartley, A.: Compiling and Using a Shareable Parallel Corpus for Machine Translation Evaluation. In: Proceedings of the Workshop on The Amazing Utility of Parallel and Comparable Corpora, Fourth International Conference on Language Resources and Evaluation (LREC), Lisbon, Portugal (2004)

Usability 5-point scale

Where next?
• Beyond similarity metrics
  – FEMTI offers a rich palette of techniques
• Beyond adequacy and fluency
  – Too generic / abstract for specific tasks?
  – Consider MT output in its own right
• Beyond conventional uses of MT as surrogate human translation (emulation)
  – MT as a component in a workflow

Restore a sense of purpose

Texts are meant to be used.
There are no absolute standards of translation quality but only more or less appropriate translations for the purpose for which they are intended. (Sager 1989: 91)

Revisit MT proficiency (White 2000)

• View MT output as a genre
  – Characterise inadequacy, disfluency, ill-formedness
• Embed MT and adapt (to) the environment
  – in IE, CLIR, CLQA, Speech2Speech
  – in pre- and post-editing

Human Evaluation of Machine Translation Systems

Any questions…?
