1 Machine Translation: Architectures, Evaluation and Limits Bogdan Babych, [email protected] University of Leeds


Mar 31, 2015


1

Machine Translation: Architectures, Evaluation and Limits

Bogdan Babych, [email protected]

University of Leeds

2

Overview
• Classification of approaches to MT
• Architectures of rule-based MT systems
  – Rule-based MT
  – Data-driven MT (SMT, EBMT, hybrid MT)
• Evaluation of MT
  – Human evaluations
  – Automated methods
• Limits of MT

3

Architectural challenges for MT
• Rule-based approaches: expert systems with formal models of translation / linguistic knowledge
  – Direct MT
  – Transfer MT
  – Interlingua MT
• Problems:
  – expensive to build
  – require precise knowledge, which might not be available
• Advantages:
  – rules are observable; errors are easy to correct; will work for under-resourced languages

4

Architectural challenges for MT
• Corpus-based approaches
  – Statistical MT, Example-Based MT, hybrid approaches...
  – Use machine learning techniques on large collections of available parallel texts
  – "let the data speak for themselves"
• Problems:
  – language data are sparse (difficult to achieve saturation)
  – high-quality linguistic resources are also expensive
• Corpus-based support for rule-based approaches

5

Possible Architecture of MT systems (the MT triangle)

**Interlingua = language independent representation of a text

6

• Direct
  – n × (n – 1) modules
  – 5 languages = 20 modules
• Transfer
  – n × (n – 1) transfer modules
  – n × (n + 1) in total = 30 modules in total (for 5 languages)
• Interlingua
  – n × 2 modules
  – 5 languages = 10 modules
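The module counts above can be checked with a few lines of Python. This is only an illustrative sketch: the function name `module_counts` and the dictionary keys are assumptions made for this example, and the formulas are the ones given on the slide for a system that translates in both directions between all language pairs.

```python
def module_counts(n: int) -> dict:
    """Modules needed for an n-language system (formulas from the slide)."""
    transfer = n * (n - 1)  # one transfer module per ordered language pair
    return {
        "direct": n * (n - 1),               # one module per ordered language pair
        "transfer_modules": transfer,
        "transfer_total": transfer + 2 * n,  # plus analysis and synthesis per language = n * (n + 1)
        "interlingua": 2 * n,                # one analysis and one synthesis module per language
    }


for n in (2, 5, 10):
    print(n, module_counts(n))
```

For n = 5 this gives 20 direct modules, 30 modules in total for transfer, and 10 for interlingua, which matches the figures above.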

7

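MT triangle & RBMT/SMT...
What information is used? (Interlingua / Transfer / Direct) vs. how is MT built? (Data-driven / Rule-based)
• Interlingua: Data-driven (SMT, EBMT): ?; Rule-based MT: ~ “EUROTRA”
• Transfer: Data-driven (SMT, EBMT): ?; Rule-based MT: ~ “Reverso”
• Direct: Data-driven (SMT, EBMT): ~ “Candide”, “Language Weaver”; Rule-based MT: ~ “Systran”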

8

NLP technologies in MT
RBMT / SMT require robust NLP annotation tools, e.g.:
  – Part-of-speech tagging & lemmatisation
    • SMT: factored models
  – Syntactic Parsing
    • SMT: syntax-based models
  – Semantic categorisation
  – Word sense disambiguation
  – Semantic role labeling
  – Named entity recognition
  – Information extraction

9

Transfer example (1) (TAUM domain)
• Input: Carefully check services for movement
• Lemmatisation / PoS tagging
  – Carefully [carefully/ADV]
  – Check [check/V_] | [check/N_sing]
  – Services [service/N_plur] | [service+V_sing_3p]
  – For [for/PREP]
  – Movement [movement/N_sing]
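The lemmatisation / PoS tagging step can be pictured as a lexicon lookup that returns every possible reading of each word and leaves the choice to later stages. The sketch below is an assumption-laden toy: the `LEXICON` table, the simplified tag names and the helper functions are invented for illustration and are not the TAUM system's actual data or code.

```python
# Hypothetical toy lexicon: surface form -> possible (lemma, tag) readings.
LEXICON = {
    "carefully": [("carefully", "ADV")],
    "check": [("check", "V"), ("check", "N_sing")],
    "services": [("service", "N_plur"), ("service", "V_sing_3p")],
    "for": [("for", "PREP")],
    "movement": [("movement", "N_sing")],
}


def analyse(sentence):
    """Return every (lemma, tag) reading for each token; unknown words get an UNK tag."""
    result = []
    for token in sentence.split():
        key = token.lower()
        result.append((token, LEXICON.get(key, [(key, "UNK")])))
    return result


def ambiguous_tokens(analysis):
    """Tokens that still have more than one reading and need disambiguation."""
    return [token for token, readings in analysis if len(readings) > 1]


analysis = analyse("Carefully check services for movement")
for token, readings in analysis:
    print(token, readings)
print(ambiguous_tokens(analysis))  # ['check', 'services']
```

The two ambiguous tokens are exactly the ones that the following slides disambiguate by syntactic and semantic features.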

10

Transfer example (2)
• Syntactico-semantic 'super-tagging' and parsing:
  – check
    • [check/V_/Action] →
      – 0.Part [out, up]; 1.NP[Human]; 2.NP|Sub(if, that); 3.PP[for..., against...]
    • [check/N_sing/Abstract,Action]
  – services →
    • [service/N_plur/Concrete,PhysObj]
    • [service+V_sing_3p/Action]
      – 1.NP[Human]; 2.NP[Physobj]
  – movement → [movement/N_sing/Abstract, Action]

11

Transfer example (3)
• Disambiguation by features:
  – check
    • [check/V_/Action] →
      – 0.Part [out, up]; 1.NP[Human]; 2.NP|Sub(if, that); 3.PP[for..., against...]
    • [check/N_sing/Abstract,Action]
  – services →
    • [service/N_plur/Concrete,PhysObj]
    • [service+V_sing_3p/Action]
      – 1.NP[Human]; 2.NP[Physobj]
  – for movement [PP]

12

Transfer example (4)
• Disambiguation by features:
  – Check
    • [check/V_/Action] →
      – 0.Part [out, up]; 1.NP[Human]; 2.NP|Sub(if, that); 3.PP[for..., against...]
    • [check/N_sing/Abstract,Action]
  – Services →
    • [service/N_plur/Concrete,PhysObj]
    • [service+V_sing_3p/Action]
      – 1.NP[Human]; 2.NP[Physobj]
  – for movement [PP]

13

Transfer example (5)Syntactico-semantic analysis tree:

14

Transfer example (6)• Transfer rules

15

Transfer example (7)Transfer operations on the tree (lexical and

structural)

16

Transfer example (8)Syntactic and morphological generation

17

Relevance for SMT
En: Carefully check services for movement → Google Translate (March 2009) → Fr: Vérifiez soigneusement les services de circulation
lit.: 'Check carefully services of circulation'
  – Error in PP attachment / semantic role of PP
    Not OK: * [services for movement]
    OK: [check ... for movement]
• Learning attachment preferences from corpora
  – Turn off each service, one at a time, and check for movement again
• Learning semantic features, e.g. [check/N Abstract,Action]

18

Direct systems
• Essentially: word for word translation with some attention to local linguistic context
• No linguistic representation is built
  – (historically come first: the Georgetown experiment 1954-1963: 250 words, 6 grammar rules, 49 sentences)
  – Sentence: The questions are difficult (P. Bennett, 2001)
  – (algorithm: a "window" of a limited size moves through the text and checks if any rules match)

1. the <[N.plur]> → les /* before plural noun */
2. <[article]> questions[N.plur] → questions /* 'questions' is plur. noun after the article */
3. <[not: "we" or "you"]> are → sont /* unless it follows the words "we" or "you" */
4. <are> difficult → difficiles /* when it follows 'are' */
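The window-based rule matching described on this slide can be sketched in Python. This is a minimal illustration under stated assumptions: the window is one word to the left and one to the right, the word lists `ARTICLES` and `PLURAL_NOUNS` are hypothetical stand-ins for a real lexicon, and the four rule functions encode only the four rules listed for "The questions are difficult".

```python
# Hypothetical mini-lexicon used by the context tests.
ARTICLES = {"the"}
PLURAL_NOUNS = {"questions"}


# Each rule sees the current word with its left and right neighbour (the "window")
# and returns a target word, or None when the rule does not apply.
def rule_the(prev, cur, nxt):
    return "les" if cur == "the" and nxt in PLURAL_NOUNS else None


def rule_questions(prev, cur, nxt):
    return "questions" if cur == "questions" and prev in ARTICLES else None


def rule_are(prev, cur, nxt):
    return "sont" if cur == "are" and prev not in {"we", "you"} else None


def rule_difficult(prev, cur, nxt):
    return "difficiles" if cur == "difficult" and prev == "are" else None


RULES = [rule_the, rule_questions, rule_are, rule_difficult]


def translate_direct(sentence):
    tokens = sentence.lower().split()
    output = []
    for i, cur in enumerate(tokens):
        prev = tokens[i - 1] if i > 0 else None
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        for rule in RULES:
            target = rule(prev, cur, nxt)
            if target is not None:
                output.append(target)
                break
        else:
            output.append(cur)  # no rule matched: copy the source word unchanged
    return " ".join(output)


print(translate_direct("The questions are difficult"))  # les questions sont difficiles
```

Note that no syntactic or semantic representation is built at any point: every decision depends only on the neighbouring words, which is why such rules are easy to write but do not generalise.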

19

direct systems: advantages
• Technical:
  – ‘Machine-learning’ can be easily applied
    • It is straightforward to learn direct rules
    • Intermediate representations are more difficult
• Linguistic:
  – Exploiting structural similarity between languages
    • similarity is not accidental: historic, typological, based on language and cognitive universals
    • High-quality MT for direct systems between closely-related languages

20

A. direct systems: technical problems 1/2

• rules are "tactical", not "strategic" (do not generalise)
• have little linguistic significance
• no obvious link between our ideas about translation and the formalism
• large systems are difficult to maintain and to develop: systems become non-manageable
• interaction of a large number of rules: rules are not completely independent

21

A. direct systems: technical problems 2/2

• no reusability
• a new set of rules is required for each language pair
• no knowledge can be reused for new language pairs
• Rules are complex and specific to translation direction

22

B. direct systems: linguistic problems:

• Information needed for disambiguation is often not available locally
• context length cannot be predicted in advance
• Hard to handle for direct systems:
  – Lexical Mismatch (no 1 to 1 correspondence between words)
  – Structural Mismatch (no 1 to 1 correspondence between constructions)

23

B1. Lexical Mismatch: 1/2
Das ist ein starker Mann → This is a strong man
Es war sein stärkstes Theaterstück → It has been his best play
Wir hoffen auf eine starke Beteiligung → We hope a large number of people will take part
Eine 100 Mann starke Truppe → A 100 strong unit
Der starke Regen überraschte uns → We were surprised by the heavy rain
Maria hat starkes Interesse gezeigt → Mary has shown strong interest
Paul hat starkes Fieber → Paul has a high temperature
Das Auto war stark beschädigt → The car was badly damaged
Das Stück fand einen starken Widerhall → The piece had a considerable response
Das Essen war stark gewürzt → The meal was strongly seasoned
Hans ist ein starker Raucher → John is a heavy smoker
Er hatte daran starken Zweifel → He had grave doubts about it
(example by John Hutchins, 2002)

24

B1. Lexical Mismatch: 2/2
• the pressure on the skin was light → … peau était claire vs. pression … était légère
  light [antonym: heavy] → léger
  light [antonym: dark] → clair
• Non-local context needed for disambiguation

25

B2. Structural Mismatch: 1/2• EN: I will go to see my GP tomorrow• JP: Watashi wa asu isha ni mite morau

• Lit: 'I will ask my GP to check me tomorrow'

• EN: ‘The bottle floated out of the cave’• ES: La botella salió de la cueva (flotando)

• Lit.: the bottle moved-out from the cave (floating)

• Same meaning is typically expressed by different structures

26

B2. Structural Mismatch: 2/2
1. The question.N changes.V every day
   Ukr.: Питання.N.nom міняється.V щодня
   (Pytann'a.N.nom min'ajet's'a.V shchodn'a)
2. The question.N changes.N have been agreed
   Ukr.: Зміну.N.acc питань.N.gen було погоджено
   (Zminu.N.acc pytan'.N.gen bulo pohodzheno)
3. The question.N changes.N have been difficult
   Ukr.: Зміна.N.nom питань.N.gen була складною
   (Zmina.N.nom pytan'.N.gen bula skladnoju)
– translation of the word question is also different, because its function in a phrase has changed
– translation might depend on the overall structure
  • even if the function does not change in the English sentence

27

Indirect systems

28

Indirect systems
• linguistic analysis of the ST
• some kind of linguistic representation (“Interface or Intermediate Representation”, IR): ST → Interface Representation(s) → TT
• Transfer systems:
  – IRs are language-specific
  – Language-pair specific mappings are used
• Interlingual systems:
  – IRs are language-independent
  – No language-pair specific mappings

29

Transfer systems• 3 stages: Analysis - Transfer – Synthesis• Analysis and synthesis are monolingual:

•analysis is the same irrespective of the TL;

•synthesis is the same irrespective of the SL

• Transfer is bilingual & specific to a particular language-pair – e.g., “Comprendium” MT system – SailLabs

30

Direct vs Transfer : how to update a dictionary?

– Direct: 1 dictionary (e.g., Systran)
  • Ru: { ‘primer’ → ‘example’, ‘primery’ → ‘examples’ }
– Transfer: 3 dictionaries (e.g., Comprendium)
  • (1) Ru { ‘primery’ → N, plur, nom, lemma=‘primer’ }
  • (2) Ru-En { ‘primer’ → ‘example’ }
  • (3) En { lemma=‘example’, N, sing → ‘example’; … N, plur → ‘examples’ }
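The three-dictionary design can be sketched as three small lookup tables chained together. Everything here is a simplified assumption for illustration: the table names, the feature tuples and the `translate_word` helper are invented, and real systems such as Systran or Comprendium are not implemented this way in detail.

```python
# (1) Monolingual analysis: Russian word form -> (lemma, part of speech, number).
RU_ANALYSIS = {
    "primer": ("primer", "N", "sing"),
    "primery": ("primer", "N", "plur"),
}

# (2) Bilingual transfer: lemma -> lemma. One table per language pair.
RU_EN_TRANSFER = {"primer": "example"}
RU_ES_TRANSFER = {"primer": "ejemplo"}

# (3) Monolingual synthesis: (lemma, part of speech, number) -> word form.
EN_SYNTHESIS = {("example", "N", "sing"): "example", ("example", "N", "plur"): "examples"}
ES_SYNTHESIS = {("ejemplo", "N", "sing"): "ejemplo", ("ejemplo", "N", "plur"): "ejemplos"}


def translate_word(word, analysis, transfer, synthesis):
    """Analysis -> transfer -> synthesis for a single word form."""
    lemma, pos, number = analysis[word]
    target_lemma = transfer[lemma]
    return synthesis[(target_lemma, pos, number)]


print(translate_word("primery", RU_ANALYSIS, RU_EN_TRANSFER, EN_SYNTHESIS))  # examples
print(translate_word("primery", RU_ANALYSIS, RU_ES_TRANSFER, ES_SYNTHESIS))  # ejemplos
```

The Russian analysis table is reused unchanged for both target languages, and the transfer table needs only one entry per lemma rather than one per word form; a direct dictionary would have to list every form for every language pair.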

31

… the advantage?

– Direct: 1 dictionary (e.g., Systran)
  • Ru: { ‘primer’ → ‘example’, ‘primery’ → ‘examples’ }
– Transfer: 3 dictionaries (e.g., Comprendium)
  • (1) Ru { ‘primery’ → N, plur, nom, lemma=‘primer’ }
  • (2) Ru-En { ‘primer’ → ‘example’ }
  • (3) En { lemma=‘example’, N, sing → ‘example’; … N, plur → ‘examples’ }

32

… Multilingual MT: Ru-Es

– Direct: 1 dictionary (e.g., Systran)
  • Ru-Es: { ‘primer’ → ‘ejemplo’, ‘primery’ → ‘ejemplos’ }
– Transfer: 3 dictionaries (e.g., Comprendium)
  • (1) Ru { ‘primery’ → N, plur, nom, lemma=‘primer’ }
  • (2) Ru-Es { ‘primer’ → ‘ejemplo’ }
  • (3) Es { lemma=‘ejemplo’, N, sing → ‘ejemplo’; … N, plur → ‘ejemplos’ }

33

… Multilingual MT: En-Es

– Direct: 1 dictionary (e.g., Systran)
  • En-Es: { ‘example’ → ‘ejemplo’, ‘examples’ → ‘ejemplos’ }
– Transfer: 3 dictionaries (e.g., Comprendium)
  • (1) En { ‘examples’ → N, plur, nom, lemma=‘example’ }
  • (2) En-Es { ‘example’ → ‘ejemplo’ }
  • (3) Es { lemma=‘ejemplo’, N, sing → ‘ejemplo’; … N, plur → ‘ejemplos’ }

34

The number of modules for a multilingual transfer system

• n × (n – 1) transfer modules
• n × (n + 1) modules in total
e.g.: a 5-language system (if it translates in both directions between all language-pairs) has
• 20 transfer modules and 30 modules in total
(There are more modules than for direct systems, but modules are simpler)

35

Advantages of transfer systems: 1/2

• Technical:
  – Analysis and Synthesis modules are reusable
    • We separate reusable (transfer-independent) information from language-pair mapping
    • operations performed on higher level of abstraction
  – Challenges:
    • to do as much work as possible in reusable modules of analysis and synthesis
    • to keep transfer modules as simple as possible = "moving towards Interlingua"

36

Advantages of transfer systems: 2/2

• Linguistic:
  – MT can generalise over morphological features, lexemes, tree configurations, functions of word groups
  – MT can access annotated linguistic features for disambiguation

37

Transfer: dealing with lexical and structural mismatch, w.o.: 1/2

– Dutch: Jan zwemt → English: Jan swims
– Dutch: Jan zwemt graag → English: Jan likes to swim
  (lit.: Jan swims "pleasurably", with pleasure)
– Spanish: Juan suele ir a casa → English: Juan usually goes home
  (lit.: Juan tends to go home, soler (v.) = 'to tend')
– English: John hammered the metal flat → French: Jean a aplati le métal au marteau
  (resultative construction in English; French lit.: Jean flattened the metal with a hammer)

38

Transfer: dealing with lexical and structural mismatch, w.o.: 2/2

– English: The bottle floated past the rock → Spanish: La botella pasó por la piedra flotando
  (Spanish lit.: 'The bottle passed the rock floating')
– English: The hotel forbids dogs → German: In diesem Hotel sind Hunde verboten
  (German lit.: Dogs are forbidden in this hotel)
– English: The trial cannot proceed → German: Wir können mit dem Prozeß nicht fortfahren
  (German lit.: We cannot proceed with the trial)
– English: This advertisement will sell us a lot → German: Mit dieser Anzeige verkaufen wir viel
  (German lit.: With this advertisement we will sell a lot)

39

Principles of Interface Representations (IRs)

• IRs should form an adequate basis for transfer, i.e., they should
  • contain enough information to make transfer (a) possible; (b) simple
  • provide sufficient information for synthesis
• need to combine information of different kinds
  1. lemmatisation
  2. featurisation
  3. neutralisation
  4. reconstruction
  5. disambiguation

40

IR features: 1/3
1. lemmatisation
  – each member of a lexical item is represented in a uniform way, e.g., sing.N., Inf.V.
  – (allows the developers to reduce transfer lexicon)
2. featurisation
  – only content words are represented in IRs 'as such',
  – function words and morphemes become features on content words (e.g., plur., def., past…)
  – inflectional features only occur in IRs if they have contrastive values (are syntactically or semantically relevant)

41

IR features: 2/3
3. neutralisation
  – neutralising surface differences, e.g.,
    • active and passive distinction
    • different word order
  – surface properties are represented as features
    • (e.g., voice = passive)
  – possibly: representing syntactic categories, e.g.:
    • John seems to be rich (logically, John is not a subject of seem) = It seems to someone that John is rich
    • Mary is believed to be rich = One believes that Mary is rich
  – translating "normalised" structures

42

IR features: 3/3
4. reconstruction
  – to facilitate the transfer, certain aspects that are not overtly present in a sentence should occur in IRs
  – especially for transfer to languages where such elements are obligatory:
    • John tried to leave: S[ try.V John.NP S[ leave.V John.NP]] vs.: John seems to be leaving…
5. disambiguation
  – ambiguities should be resolved at IR, e.g., PP attachment
    • I saw a man with a telescope; … a star with a telescope
  – Lexical ambiguities should be annotated: ‘table’_1, _2…

43

Interlingual systems

44

Interlingual systems
• involve just 2 stages:
  • analysis → synthesis
  • both are monolingual and independent
• there are no bilingual parts to the system at all (no transfer)
• generation is not straightforward

45

The number of modules in an Interlingual system

• A system with n languages (which translates in both directions between all language-pairs) requires 2*n modules:

• 5-language system contains 10 modules

46

Features of “Interlingua”
• Each module is more complex
• Language-independent IR
• IL based on universal semantics, and not oriented towards any particular family or type of languages
• IR principles still apply (even more so):
  – Neutralisation must be applied cross-linguistically,
    • no ‘lexical items’, just universal ‘semantic primitives’ (e.g., kill: [cause[become [dead]]])

47

From transfer to interlingua

• En: Luc seems to be ill → Fr: *Luc semble être malade → Fr: Il semble que Luc est malade
  SEEM-2 (ILL (Luc))
  SEMBLER (MALADE (Luc))
  (Ex.: by F. van Eynde)
– Problem: the translation of predicates
– Solution: treat predicates as language-specific expressions of universal concepts
  SHINE = concept-372
  SEEM = concept-373
  BRILLER = concept-372
  SEMBLER = concept-373
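The idea of treating predicates as language-specific names for shared concepts can be sketched with two lookup tables that meet at the concept identifiers. The concept numbers are the ones from the slide; the table names and the `translate_predicate` helper are assumptions made for this illustration.

```python
# Language-specific predicates mapped to language-independent concept identifiers.
EN_TO_CONCEPT = {"SHINE": "concept-372", "SEEM": "concept-373"}
FR_TO_CONCEPT = {"BRILLER": "concept-372", "SEMBLER": "concept-373"}


def invert(mapping):
    """Concept -> predicate table, used on the synthesis side."""
    return {concept: predicate for predicate, concept in mapping.items()}


def translate_predicate(predicate, source_to_concept, target_to_concept):
    """Analysis maps the predicate to a concept; synthesis maps the concept back out."""
    concept = source_to_concept[predicate]
    return invert(target_to_concept)[concept]


print(translate_predicate("SEEM", EN_TO_CONCEPT, FR_TO_CONCEPT))     # SEMBLER
print(translate_predicate("BRILLER", FR_TO_CONCEPT, EN_TO_CONCEPT))  # SHINE
```

No English-French table appears anywhere: each language only knows its own mapping to the concept inventory, which is the defining property of the interlingual approach.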

48

Transfer and Interlingua compared
• Transfer = translation vs. Interlingual = paraphrase
  – Bilingual contrastive knowledge is central to translation
    • Translators know correct correspondences, e.g., legal terms, where "retelling" is not an option
    • Transfer systems can capture contrastive knowledge
    • IL leaves no place for bilingual knowledge
• can work only in syntactically and lexically restricted domains

49

Problems with Interlingua 1/2
• Semantic differentiation is target-language specific
  • runway → startbaan, landingsbaan (take-off runway; landing runway)
  • cousin → cousin, cousine (m., f.)
  – No reason in English to consider these words ambiguous
    • making such distinctions is comparable to lexical transfer
    • not all distinctions needed for translation are motivated monolingually: no "universal semantic features"

50

Problems with Interlingua 2/2
• Result: Adding a new language requires changing all other modules
  – exactly what we tried to avoid
• Interlingua doesn’t work: why?
  – Sapir-Whorf Hypothesis: can this be an explanation?
    • There is no ‘universal language of thought’
    • The way we think / perceive the world is determined by our language
    • We can take off the ‘spectacles’ of one language only by putting on the ‘spectacles’ of another language

51

… Transfer vs. Interlingua

• "Transfer has a theoretical background, it is not an engineering ad-hoc solution, a 'poor substitute for Interlingua'. It must be taken seriously and developed through solving problems in contrastive linguistics and in knowledge representation appropriate for translation tasks".

Whitelock and Kilby, 1995, p. 7-9

52

MT architectures: open questions

• Depth of the SL analysis
• Nature of the interface representation (syntactic, semantic, both?)
• Size and complexity of components depending on how far up the MT triangle they fall
• Nature of transfer may be influenced by how typologically similar the languages involved are
  – the more different the languages, the more complex the transfer

53

Automatic evaluation of MT quality

54

Aspects of MT evaluation (1)

(Hutchins & Somers, 1992:161-174)
• Text quality
  – (important for developers, users and managers)
• Extendibility
  – (developers)
• Operational capabilities of the system
  – (users)
• Efficiency of use
  – (companies, managers, freelance translators)

55

Aspects of MT evaluation (2)
• Text Quality
  – can be done manually and automatically
  – central issue in MT quality…
• Extendibility = architectural considerations:
  – adding new language pairs
  – extending lexical / grammatical coverage
  – developing new subject domains:
    • “improvability” and “portability” of the system

56

Aspects of MT evaluation (3)
• Operational capabilities of the system
  – user interface
  – dictionary update: cost / performance, etc.
• Efficiency of use
  – is there an increase in productivity?
  – the cost of buying / tuning / integrating into the workflow / maintaining / training personnel
  – how much money can be saved for the company / department?

57

Text quality evaluation (TQE) – issues 1/2
• Quality evaluation vs. error identification / analysis
• Black box vs. glass box evaluation
• Error correction on the user side
  – dictionary updating
  – do-not-translate lists, etc.

58

Text quality evaluation (TQE) – issues 2/2

• Multiple quality parameters & their relations
  • fidelity (adequacy)
  • fluency (intelligibility, clarity)
  • style
  • informativeness…
• Are these parameters completely independent?
• Or is intelligibility a pre-condition for adequacy or style?
• Granularity of evaluation is different for different purposes
  • individual sentences; texts; corpora of similar documents; the average performance of an MT system

59

Advantages of automatic evaluation

• Low cost
• Objective character of evaluated parameters
• reproducibility
• comparability
  – across texts: relative difficulty for MT
  – across evaluations

60

& Disadvantages …

• need for “calibration” with human scores
• interpretation in terms of human quality parameters is not clear
• do not account for all quality dimensions
  – hard to find good measures for certain quality parameters
• reliable only for homogeneous systems
  – the results for non-native human translation, knowledge-based MT output, statistical MT output may be non-comparable

61

Methods of automatic evaluation
• Automatic Evaluation is more recent: first methods appeared in the late 1990s
  – Performance methods
    • Measuring performance of some system which uses degraded MT output
  – Reference proximity methods
    • Measuring distance between MT and a “gold standard” translation

62

A. Performance methods
• A pragmatic approach to MT: similar to performance-based human evaluation
  – “…can someone using the translation carry out the instructions as well as someone using the original?” (Hutchins & Somers, 1992: 163)
• Different from human performance evaluation
  – 1. Tasks are carried out by an automated system
  – 2. Parameter(s) of the output are automatically computed

63

… automated systems used & parameters computed

• parser (automatic syntactic analyser)
  – Computing an average depth of syntactic trees
    • (Rajman and Hartley, 2000)
• Named Entity Recognition system (a system which finds proper names, e.g., names of organisations…)
  – Number of extracted organisation names
• Information Extraction: filling a database with events and participants of events
  – Computing ratio of correctly filled database fields

64

Performance-based methods: an example 1/2
• Open-source NER system for English (ANNIE) www.gate.ac.uk
• the number of extracted Organisation Names gives an indication of Adequacy
  – ORI: … le chef de la diplomatie égyptienne
  – HT: the <Title>Chief</Title> of the <Organization>Egyptian Diplomatic Corps </Organization>
  – MT-Systran: the <JobTitle> chief </JobTitle> of the Egyptian diplomacy

65

Performance-based methods: an example 2/2
• count extracted organisation names
• the number will be bigger for better systems
  – biggest for human translations
• other types of proper names do not correspond to such differences in quality
  – Person names
  – Location names
  – Dates, numbers, currencies …

66

NE recognition on MT output
[Bar chart: number of named entities extracted (vertical axis 0 to 700) by type (Organization, Title, JobTitle, {Job}Title, FirstPerson, Person, Date, Location, Money, Percent) for Reference, Expert, Candide, Globalink, Metal, Reverso and Systran.]

67

Performance-based methods: interpretation
• built on prior assumptions about natural language properties
  – sentence structure is always connected;
  – MT errors more frequently destroy relevant contexts than create spurious contexts;
  – difficulties for automatic tools are proportional to relative “quality” (the amount of MT degradation)
• Be careful with prior assumptions
  – what is worse for the human user may be better for an automatic system

68

Example 1• ORI : “Il a été fait chevalier dans l'ordre national du Mérite en mai 1991”

• HT: “He was made a Chevalier in the National Order of Merit in May, 1991.”

• MT-Systran: “It was made <JobTitle> knight</JobTitle> in the national order of the Merit in May 1991”.

• MT-Candide: “He was knighted in the national command at Merite in May, 1991”.

69

Example 2
• Parser-based score: X-score
• Xerox shallow parser XELDA produces annotated dependency trees; identifies 22 types of dependencies
  – The Ministry of Foreign Affairs echoed this view
    • SUBJ(Ministry, echoed)
    • DOBJ(echoed, view)
    • NN(Foreign, Affairs)
    • NNPREP(Ministry, of, Affairs)

70

Example 2 (contd.)
• a hearing that lasted more then 2 hours
  – RELSUBJ(hearing, lasted)
• a public program that has already been agreed on
  – RELSUBJPASS(program, agreed)
• to examine the effects as possible
  – PADJ(effects, possible)
• brightly coloured doors
  – ADVADJ(brightly, coloured)
• X-score = (#RELSUBJ + #RELSUBJPASS – #PADJ – #ADVADJ)
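Given parser output, the X-score formula is a simple count over dependency labels. The sketch below assumes the dependencies have already been extracted into tuples of the form (label, word, word, ...); that tuple format and the function name `x_score` are assumptions for illustration and do not reflect the actual XELDA output format.

```python
from collections import Counter


def x_score(dependencies):
    """X-score = #RELSUBJ + #RELSUBJPASS - #PADJ - #ADVADJ over labelled dependencies."""
    # Each dependency is a tuple whose first element is the label; arity may vary.
    counts = Counter(label for label, *_ in dependencies)
    return counts["RELSUBJ"] + counts["RELSUBJPASS"] - counts["PADJ"] - counts["ADVADJ"]


# Dependencies taken from the examples on the slides.
deps = [
    ("SUBJ", "Ministry", "echoed"),
    ("NNPREP", "Ministry", "of", "Affairs"),
    ("RELSUBJ", "hearing", "lasted"),
    ("RELSUBJPASS", "program", "agreed"),
    ("PADJ", "effects", "possible"),
]
print(x_score(deps))  # 1
```

Labels that are not part of the formula (SUBJ, NNPREP and so on) are simply ignored.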

71

B. Reference proximity methods
• Assumption of Reference Proximity (ARP):
  – “…the closer the machine translation is to a professional human translation, the better it is” (Papineni et al., 2002: 311)
• Finding a distance between 2 texts
  – Minimal edit distance
  – N-gram distance
  – …

72

Minimal edit distance
• Minimal number of editing operations to transform text1 into text2
  – deletions (sequence xy changed to x)
  – insertions (x changed to xy)
  – substitutions (x changed to y)
  – transpositions (sequence xy changed to yx)
• Algorithm by Wagner and Fischer (1974).
• Edit distance implementation: RED method (Akiba Y., K. Imamura and E. Sumita, 2001)
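The four editing operations can be implemented with the standard dynamic-programming table. The sketch below is not the RED method: it is a plain Wagner-Fischer table for deletions, insertions and substitutions, extended with the adjacent-transposition case (the "optimal string alignment" variant), which is an assumption about how transpositions might be counted. It works on any sequence, so it can compare characters or word lists.

```python
def edit_distance(a, b):
    """Minimal number of deletions, insertions, substitutions and adjacent
    transpositions needed to turn sequence a into sequence b."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete everything from a
    for j in range(cols):
        d[0][j] = j  # insert everything from b
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


print(edit_distance("kitten", "sitting"))  # 3
mt = "the American State Department declared".split()
ht = "the American Department of State said".split()
print(edit_distance(mt, ht))  # word-level distance between two translations
```

For MT evaluation the word-level use is the relevant one: the MT output and the human reference are tokenised and compared as lists of words.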

73

Problem with edit distance: Legitimate translation variation
• ORI: De son côté, le département d'Etat américain, dans un communiqué, a déclaré: ‘Nous ne comprenons pas la décision’ de Paris.

• HT-Expert: For its part, the American Department of State said in a communique that ‘We do not understand the decision’ made by Paris.

• HT-Reference: For its part, the American State Department stated in a press release: We do not understand the decision of Paris.

• MT-Systran: On its side, the American State Department, in an official statement, declared: ‘We do not include/understand the decision’ of Paris.

74

Legitimate translation variation (LTV) …contd.
• to which human translation should we compute the edit distance?
• is it possible to integrate both human translations into a reference set?

75

N-gram distance
• the number of common words (evaluating lexical choices);
• the number of common sequences of 2, 3, 4 … N words (evaluating word order):
  – 2-word sequences (bi-grams)
  – 3-word sequences (tri-grams)
  – 4-word sequences (four-grams)
  – … N-word sequences (N-grams)
• N-grams allow us to compute several parameters…
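Counting common N-grams between an MT output and a human translation takes only a few lines. This is a minimal sketch assuming whitespace tokenisation; the function names `ngrams` and `common_ngrams` are invented for the example, and the sentences are shortened from the Systran / Expert example in this deck.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-word sequences in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def common_ngrams(mt_text, ht_text, n):
    """Number of n-grams shared by the two texts (each occurrence matched at most once)."""
    mt_counts = Counter(ngrams(mt_text.split(), n))
    ht_counts = Counter(ngrams(ht_text.split(), n))
    return sum((mt_counts & ht_counts).values())  # multiset intersection


mt = "The 38 heads of undertaking put in examination in the file"
ht = "The 38 heads of companies questioned in the case"
for n in (1, 2, 3, 4):
    print(n, common_ngrams(mt, ht, n))
```

The count for n = 1 reflects lexical choice only; the counts for larger n fall quickly when word order differs, which is why they say something about word order.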

76

Proximity to human reference (1)

• MT “Systran”: The 38 heads of undertaking put in examination in the file were the subject of hearings […] in the tread of "political" confrontation.

• Human translation “Expert”: The 38 heads of companies questioned in the case had been heard […] following the "political" confrontation.

• MT “Candide”: The 38 counts of company put into consideration in the case had the object of hearings […] in the path of confrontal "political."

77

Proximity to human reference (2)

• MT “Systran”: The 38 heads of undertaking put in examination in the file were the subject of hearings […] in the tread of "political" confrontation.

• Human translation “Expert”: The 38 heads of companies questioned in the case had been heard […] following the "political" confrontation.

• MT “Candide”: The 38 counts of company put into consideration in the case had the object of hearings […] in the path of confrontal "political."

78

Proximity to human reference (3)

• MT “Systran”: The 38 heads of undertaking put in examination in the file were the subject of hearings […] in the tread of "political" confrontation.

• Human translation “Expert”: The 38 heads of companies questioned in the case had been heard […] following the "political" confrontation.

• MT “Candide”: The 38 counts of company put into consideration in the case had the object of hearings […] in the path of confrontal "political."

79

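Matches of N-grams
[Diagram: two overlapping sets of N-grams, HT and MT. The overlap contains the true hits; N-grams found only in MT are false hits; N-grams found only in HT are omissions.]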

80

Matches of N-grams (contd.)
• Human text +, MT +: true hits
• Human text +, MT –: omissions
• Human text –, MT +: false hits
→ recall (avoiding omissions)
↓ precision (avoiding false hits)

81

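Precision and Recall
• Precision = how accurate is the answer?
  – “Don’t guess, wrong answers are deducted!”
• Recall = how complete is the answer?
  – “Guess if not sure!”, don’t miss anything!

$$\text{precision} = \frac{\text{TrueHits}}{\text{TrueHits} + \text{FalseHits}} \quad \text{(denominator: all items in MT)}$$

$$\text{recall} = \frac{\text{TrueHits}}{\text{TrueHits} + \text{Omissions}} \quad \text{(denominator: all items in HT)}$$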
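The two ratios can be computed directly from the set of items found in the MT output and the set found in the human translation. A minimal sketch, assuming the items (for example extracted organisation names or N-grams) are compared as exact strings; the function name and the sample names are hypothetical.

```python
def precision_recall(mt_items, ht_items):
    """Precision and recall of the MT item set against the human (reference) item set."""
    mt, ht = set(mt_items), set(ht_items)
    true_hits = mt & ht   # in both MT and HT
    # false hits = mt - ht (only in MT); omissions = ht - mt (only in HT)
    precision = len(true_hits) / len(mt) if mt else 0.0
    recall = len(true_hits) / len(ht) if ht else 0.0
    return precision, recall


# Hypothetical example: 4 names extracted from MT, 3 from HT, 2 in common.
p, r = precision_recall(["UN", "NATO", "Merite", "Paris"], ["UN", "NATO", "State Department"])
print(p, r)  # 0.5 and 0.666...
```

Returning 0.0 for an empty set is a design choice made here to avoid division by zero; other conventions are possible.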

82

NE recognition on MT output
[Bar chart, repeated from the earlier slide: number of named entities extracted (vertical axis 0 to 700) by type (Organization, Title, JobTitle, {Job}Title, FirstPerson, Person, Date, Location, Money, Percent) for Reference, Expert, Candide, Globalink, Metal, Reverso and Systran.]

83

Precision (P) and Recall (R): Organisation names
[Bar chart, vertical axis 0 to 0.7: precision (P) and recall (R) for HT-exp., HT-ref, candide, globalink, ms, reverso and systran; series: HT-Ref, HT-Exp., U/I.]

84

N-grams: Union and Intersection
• Union ~ Precision
• Intersection ~ Recall

85

Translation variation and N-grams
• N-gram distance to multiple human reference translations
• Precision on the union of N-gram sets in HT1, HT2, HT3…
  • N-grams in all independent human translations taken together with repetitions removed
• Recall on the intersection of N-gram sets
  • N-grams common to all sets: only repeated N-grams! (most stable across different human translations)
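Precision on the union and recall on the intersection can be sketched with ordinary set operations. This is an illustration under assumptions: whitespace tokenisation, N-grams treated as sets (repetitions removed), and function names invented for the example. The sentences are shortened from the State Department example in this deck.

```python
def ngram_set(text, n):
    """Set of n-word sequences in a whitespace-tokenised text."""
    tokens = text.split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def precision_on_union(mt, references, n):
    """Share of MT n-grams found in at least one human translation."""
    mt_set = ngram_set(mt, n)
    union = set().union(*(ngram_set(ref, n) for ref in references))
    return len(mt_set & union) / len(mt_set) if mt_set else 0.0


def recall_on_intersection(mt, references, n):
    """Share of n-grams common to all human translations that the MT also contains."""
    mt_set = ngram_set(mt, n)
    ref_sets = [ngram_set(ref, n) for ref in references]
    common = set.intersection(*ref_sets) if ref_sets else set()
    return len(mt_set & common) / len(common) if common else 0.0


refs = ["the Department of State said", "the State Department stated"]
mt = "the State Department declared"
print(precision_on_union(mt, refs, 1))      # 0.75
print(recall_on_intersection(mt, refs, 1))  # 1.0
```

The union is permissive (any wording used by any translator counts), while the intersection keeps only what every translator agreed on, which is why it is a natural basis for a recall measure.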

86

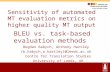

Human and automated scores
• Empirical observations:
  – Precision on the union gives indication of Fluency
  – Recall on intersection gives indication of Adequacy
    • Automated Adequacy evaluation is less accurate (harder)
• Currently the most successful N-gram proximity measure:
  – BLEU evaluation measure (Papineni et al., 2002)
    • BiLingual Evaluation Understudy

87

BLEU evaluation measure

• computes Precision on the union of N-grams
• accurately predicts Fluency
• produces scores in the range of [0,1]
• Usage:
  – download and extract Perl script “bleu.pl”
  – prepare MT output and reference translations in separate *.txt files
  – Type in the command prompt:
    • perl bleu-1.03.pl -t mt.txt -r ht.txt
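For readers without the Perl script, the core of a BLEU-style score can be sketched in Python. This is not the bleu.pl implementation and has not been checked against it: it is a simplified, sentence-level illustration of the usual definition (N-gram precision with counts clipped to the maximum seen in any reference, geometric mean over N = 1 to 4, and a brevity penalty), with whitespace tokenisation and no smoothing as assumptions.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, references, max_n=4):
    """Simplified BLEU-style score in [0, 1] for one candidate against several references."""
    cand = candidate.split()
    refs = [ref.split() for ref in references]
    if not cand or not refs:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        max_ref = Counter()
        for ref in refs:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        # Clip each candidate n-gram count by the highest count seen in any reference.
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty against the reference length closest to the candidate length.
    ref_len = min((len(ref) for ref in refs), key=lambda length: (abs(length - len(cand)), length))
    penalty = 1.0 if len(cand) > ref_len else math.exp(1 - ref_len / len(cand))
    return penalty * math.exp(sum(log_precisions) / max_n)


print(bleu("the cat sat on the mat", ["the cat sat on the mat"]))  # 1.0
```

Because there is no smoothing, a short sentence with no matching four-gram scores 0; corpus-level implementations accumulate counts over all sentences before taking the ratio.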

88

BLEU evaluation measure
• Texts may be surrounded by tags:
  – e.g.: <DOC doc_ID="1" sys_ID="orig"> </DOC>
• different reference translations:
  – <DOC doc_ID="1" sys_ID="orig">
  – <DOC doc_ID="1" sys_ID="ref2">
  – <DOC doc_ID="1" sys_ID="ref3">
• paragraphs may be surrounded by tags:
  – e.g.: <seg id="1"> </seg>

89

Validation of automatic scores

• Automatic scores have to be validated
  – Are they meaningful?
    • i.e., do they predict any human evaluation measures, e.g., Fluency, Adequacy, Informativeness?
• Agreement between human and automated scores
  – measured by Pearson’s correlation coefficient r
    • a number in the range of [–1, 1]
    • –1 < r < –0.5 = strong negative correlation
    • 0.5 < r < +1 = strong positive correlation
    • –0.5 < r < 0.5 = no correlation or weak correlation
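Pearson's r can also be computed without a spreadsheet. The sketch below uses the textbook formula (covariance divided by the product of standard deviations); the function names are invented, the score lists are made-up illustration data rather than results from this study, and treating exactly ±0.5 as "weak" is an assumption, since the slide leaves the boundary open.

```python
import math


def pearson_r(xs, ys):
    """Pearson's correlation coefficient between two equally long lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


def interpret(r):
    """Thresholds from the slide; |r| of exactly 0.5 is treated as weak here."""
    if r > 0.5:
        return "strong positive correlation"
    if r < -0.5:
        return "strong negative correlation"
    return "no correlation or weak correlation"


# Made-up automated and human scores for five systems, for illustration only.
automated = [0.20, 0.22, 0.23, 0.27, 0.28]
human = [0.45, 0.50, 0.48, 0.60, 0.62]
r = pearson_r(automated, human)
print(round(r, 3), interpret(r))
```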

90

Pearson’s correlation coefficient r in Excel

91

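HumanSc = Slope * AutomatedSc + Intercept
[Scatter plot with regression line, "Bleu-Em: Regression Line": Correl = 0.7699; Slope = 0.5996; Intercept = –0.2291. Axis ticks run from 0 to 0.4 and from 0 to 1.]
α; Slope = tg(α)
Intercept = per the equation above, the value of HumanSc at AutomatedSc = 0 (the slide labels this as the point where the regression line crosses the x axis)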
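The slope and intercept of such a regression line come from an ordinary least-squares fit. A minimal sketch, assuming the automated scores are the predictor and the human scores the predicted value, as in the equation HumanSc = Slope * AutomatedSc + Intercept; the function name and the sample points are invented for illustration.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # value of y where x = 0
    return slope, intercept


# Illustrative points lying exactly on y = 2x + 1.
slope, intercept = fit_line([0, 1, 2], [1, 3, 5])
print(slope, intercept)  # 2.0 1.0
```

Once fitted, the line can be used to estimate a human score from a new automated score, within the range of scores that the fit was based on.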

92

Challenges
• Multi-dimensionality
  – no single measure of MT quality
  – some quality measures are harder
• Evaluating usefulness of imperfect MT
  – different needs of automatic systems and human users
    • human users have in mind publication (dissemination)
    • MT is primarily used for understanding (assimilation)

93

Limitations of MT architectures

94

MT: where are we now?
• The prima facie case against operational machine translation from the linguistic point of view will be to the effect that there is unlikely to be adequate engineering where we know there is no adequate science. A parallel case can be made from the point of view of computer science, especially that part of it called artificial intelligence. (Kay, 1980: 222).

• … If we are doing something we understand weakly, we cannot hope for good results. And language, including translation, is still rather weakly understood. (Kettunen, 1986: 37)

95

BLEU scores for MT and Human Translation
[Bar chart, vertical axis 0 to 0.5: BLEU-r and BLEU-e scores for MT-ms, MT-globalink, MT-candide, MT-reverso, MT-systran and HT-expert/ref. Data labels in the order extracted: 0.2037, 0.2048; 0.2197, 0.2207; 0.2348, 0.2387; 0.2724, 0.2742; 0.2771, 0.2831; 0.4303, 0.4304.]

96

Estimation of effort to reach human quality in MT
[Bar chart, vertical axis 0 to 0.12: blue-r^E and blue-e^E for MT-ms, MT-globalink, MT-candide, MT-reverso, MT-systran and HT-expert/ref.]

97

Principal challenge: Meaning is not explicitly present

• "The meaning that a word, a phrase, or a sentence conveys is determined not just by itself, but by other parts of the text, both preceding and following… The meaning of a text as a whole is not determined by the words, phrases and sentences that make it up, but by the situation in which it is used".

M.Kay et. al.: Verbmobil, CSLI 1994, pp. 11-1

98

Limitations of the state-of-the-art MT architectures
• Q.: are there any features in human translation which cannot be modelled in principle (e.g., even if dictionary and grammar are complete and “perfect”)?
• MT architectures are based on searching databases of translation equivalents, and cannot
  • invent novel strategies
  • add / remove information
  • prioritise translation equivalents
    – trade-off between fluency and adequacy of translation

100

Problem 1: Obligatory loss of information: negative equivalents

• ORI: His pace and attacking verve saw him impress in England’s game against Samoa

• HUM: Его темп и атакующая мощь впечатляли во время игры Англии с Самоа

• HUM (lit.): His pace and attacking power impressed during the game of England with Samoa

• ORI: Legout’s verve saw him past world No 9 Kim Taek-Soo

• HUM: Настойчивость Легу позволила ему обойти Кима Таек-Соо, занимающего 9-ю позицию в мировом рейтинге

• HUM (lit.): Legout’s persistency allowed him to get round Kim Taek-Soo

101

Problem 2: Information redundancy
• Source Text and the Target Text usually are not equally informative:
– Redundancy in the ST: some information is not relevant for communication and may be ignored
– Redundancy in the TT: some new information has to be introduced (explicated) to make the TT well-formed
• e.g.: MT translating etymology of proper names, which is redundant for communication: “Bill Fisher” => “to send a bill to a fisher”

102

Problem 3: changing priorities dynamically (1/2)
• Salvadoran President-elect Alfredo Christiani condemned the terrorist killing of Attorney General Roberto Garcia Alvarado
• SYSTRAN:
• MT: Сальвадорский Избранный президент Алфредо Чристиани осудил убийство террориста Генерального прокурора Роберто Garcia Alvarado
• MT (lit.): Salvadoran elected president Alfredo Christiani condemned the killing of a terrorist Attorney General Roberto Garcia Alvarado

103

Problem 3: changing priorities dynamically (2/2)
• PROMT

• Сальвадорский Избранный президент Альфредо Чристиани осудил террористическое убийство Генерального прокурора Роберто Гарси Альварадо

• However: Who is working for the police on a terrorist killing mission?

• Кто работает для полиции на террористе, убивающем миссию?

• Lit.: Who works for police on a terrorist, killing the mission?

104

Fundamental limits of state-of-the-art MT technology (1/2)
• “Wide-coverage” industrial systems:

• There is a “competition” between translation equivalents for text segments

• MT: Order of application of equivalents is fixed

• Human translators – able to assess relevance and re-arrange the order

• An MT system can be designed to translate any sentence into any language

• However, then we can always construct another sentence which will be translated wrongly
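The fixed order of application can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the equivalents table, the bracketed glosses and the function `translate` are hypothetical and are not taken from SYSTRAN, PROMT or any other system named here. Equivalents are applied greedily, longest segment first, so the entry that gets one sentence right forces the wrong reading in another.

```python
# Toy illustration only: the table and glosses are invented for this sketch.
# Equivalents are tried in one fixed priority: longer source segments first.
EQUIVALENTS = {
    ("terrorist", "killing"): "[killing BY terrorists]",
    ("killing",): "[killing]",
    ("terrorist",): "[terrorist]",
    ("mission",): "[mission]",
}
MAX_LEN = max(len(key) for key in EQUIVALENTS)


def translate(sentence):
    """Replace source segments by their equivalents, longest match first."""
    tokens = sentence.lower().split()
    out, i = [], 0
    while i < len(tokens):
        for n in range(MAX_LEN, 0, -1):
            segment = tuple(tokens[i:i + n])
            if i + n <= len(tokens) and segment in EQUIVALENTS:
                out.append(EQUIVALENTS[segment])
                i += n
                break
        else:
            out.append(tokens[i])  # unknown word is passed through unchanged
            i += 1
    return out


# The two-word entry gives the intended reading here ...
print(translate("the terrorist killing of the attorney"))
# ... but the same fixed priority misreads "a terrorist killing mission",
# where the intended meaning is a mission to kill terrorists.
print(translate("a terrorist killing mission"))
```

Removing the two-word entry would repair the second sentence and break the first, which is the pattern the slide describes: the system has no way of re-ranking its equivalents per sentence.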

105

… Summary

• We can design a system which correctly translates any particular sentence between any two languages

• Once such a system is designed we can always come up with a sentence in the SL which will be translated wrongly into the TL

106

Translation: As true as possible, as free as necessary
“[…] a German maxim “so treu wie möglich, so frei wie nötig” (as true as possible, as free as necessary) reflects the logic of translator’s decisions well: aiming at precision when this is possible, the translation allows liberty only if necessary […] The decisions taken by a translator often have the nature of a compromise, […] in the process of translation a translator often has to take certain losses. […] It follows that the requirement of adequacy has not a maximal, but an optimal nature.” (Shveitser, 1988)

107

MT and human understanding

• Cases of “contrary to the fact” translation
• ORI: Swedish playmaker scored a hat-trick in the 4-2 defeat of Heusden-Zolder
• MT: Шведский плеймейкер выиграл хет-трик в этом поражении 4-2 Heusden-Zolder. (Swedish playmaker won a hat-trick in this defeat 4-2 Heusden-Zolder)
• In English “the defeat” may be used with opposite meanings, needs disambiguation:
• “X’s defeat” == X’s loss
• “X’s defeat of Y” == X’s victory
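The two patterns can be written down as a small rule, shown here as a minimal sketch. The function `loser` and both regular expressions are hypothetical and cover only the two constructions listed above; real text would need proper parsing and named-entity handling.

```python
import re

# Hypothetical rule set: handles only the two patterns from the slide.
# The more specific pattern must be tried first.
DEFEAT_OF = re.compile(r"(?P<x>[\w-]+)['’]s defeat of (?P<y>[\w-]+)")
DEFEAT = re.compile(r"(?P<x>[\w-]+)['’]s defeat\b")


def loser(phrase):
    """Return the losing side named in the phrase, or None if no rule applies."""
    match = DEFEAT_OF.search(phrase)
    if match:
        return match.group("y")  # "X's defeat of Y": X won, so Y lost
    match = DEFEAT.search(phrase)
    if match:
        return match.group("x")  # "X's defeat": X lost
    return None


print(loser("X's defeat"))       # X
print(loser("X's defeat of Y"))  # Y
```

The order of the two checks matters: every “X’s defeat of Y” also matches the shorter pattern, so testing the shorter one first would name the winner as the loser.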

108

Comparable corpora & dynamic translation resources
• Translations can be extracted from two monolingual corpora using a bilingual dictionary
– No smoking ~ DE: Rauchen verboten
– DE: In diesem Bereich gilt die Flughafenbenutzungsordnung ~ ?* Airport user’s manual applies to this area
– RU: Ischerpyvajuwij otvet ~ ?* Irrefragable answer

• ASSIST Project, Leeds and Lancaster, 2007

109

Information extraction for MT

• Salvadoran President condemned the terrorist killing of Attorney General Alvarado
– Perpetrator: terrorist
– Human target: Attorney General Alvarado
• Salvadoran president condemned the killing of a terrorist Attorney General Alvarado
– Perpetrator: [UNKNOWN]
– Human target: terrorist Attorney General Alvarado
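The two templates can be compared slot by slot to flag a translation that changes who did what to whom. The sketch below is an assumption-laden illustration: the class `KillingEvent` and the function `mismatched_slots` are hypothetical names, and the slots are filled by hand from the slide, whereas an actual information extraction component would fill them automatically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class KillingEvent:
    """Hand-filled event template with the two slots shown on the slide."""
    perpetrator: Optional[str]  # None stands for [UNKNOWN]
    human_target: str


# Reading of the source sentence and reading of the (back-translated) MT output.
source_reading = KillingEvent("terrorist", "Attorney General Alvarado")
mt_reading = KillingEvent(None, "terrorist Attorney General Alvarado")


def mismatched_slots(a, b):
    """Return the names of the slots whose fillers differ between two readings."""
    return [name for name in ("perpetrator", "human_target")
            if getattr(a, name) != getattr(b, name)]


print(mismatched_slots(source_reading, mt_reading))
```

Here both slots differ, which is the signal that the translation has shifted “terrorist” from the perpetrator to the victim.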

110

MT: way forward?
• Competition of equivalents for the same segments
– There is no data like more data vs. “intelligent processing” approaches
– “Not the power to remember, but its very opposite, the power to forget, is a necessary condition for our existence”. (Saint Basil, quoted in Barrow, 2003: vii)
• Text-level MT: data-driven and rule-based models
– Discovering parallel ontologies
– Corpus-based semantic role labeling, attachment preferences
– Information Extraction for MT
